# Is ChatGPT Going to Replace Google?

the decline of human creativity, in the form of the rise of AI-generated writing, is baffling to me. In my opinion, writing is one of the easiest art forms. You just have to learn a couple of very basic things (mostly taught in school, e.g. sentence structure and grammar, along with comprehension and reading) and then expand upon that foundational knowledge. You can look up words for free; there are resources upon resources for those who can't afford books, whether physical or digital. AI has never made art more accessible. It has only ever made the production of art (whether sonic, visual, or written) cheap and nasty, and it has taken away what is arguably the most important thing about art: the self-expression and the humanity of it. AI will never replace real artists, musicians, and writers, because the main point of music and drawing and poetry is to evoke human emotion. How is a robot meant to simulate that? It can't. Robots don't experience human emotions. They experience nothing. They're only destroying our planet: the average 100-word ChatGPT response consumes 519 millilitres of water, which is roughly 2.2 US customary cups. No, on the scale of one person, that doesn't seem like a lot. But according to OpenAI's chief operating officer, ChatGPT has 400 million weekly users and plans on hitting 1 billion users by the end of this year (2025). If every one of those 400 million people received a single 100-word response from ChatGPT, that would mean 800 MILLION (if not more) cups of water gone to waste cooling down the delicate systems that power these AI machines. With the current climate crisis? That's only going to further harm our already fragile, heating-up earth.
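The cup figure is easy to sanity-check. Below is a minimal Python sketch that reuses the post's own inputs (519 mL per 100-word response, 400 million weekly users, and the post's hypothetical of one response per user); the helper `water_estimate` and its field names are made up for illustration. The 2.1625 figure matches the 240 mL US "legal" cup used on nutrition labels, while the US customary cup (half a US liquid pint) is about 236.6 mL.

```python
# Back-of-the-envelope check of the water figures quoted in the post.
# All inputs are the post's own numbers, not independent measurements.

US_CUSTOMARY_CUP_ML = 236.5882365  # half a US liquid pint
US_LEGAL_CUP_ML = 240.0  # the "legal" cup used for US nutrition labelling


def water_estimate(ml_per_response: float, responses: int) -> dict[str, float]:
    """Convert a per-response water figure into cups and total litres."""
    total_ml = ml_per_response * responses
    return {
        "customary_cups_each": ml_per_response / US_CUSTOMARY_CUP_ML,
        "legal_cups_each": ml_per_response / US_LEGAL_CUP_ML,
        "total_customary_cups": total_ml / US_CUSTOMARY_CUP_ML,
        "total_litres": total_ml / 1000,
    }


if __name__ == "__main__":
    # Hypothetical scenario from the post: one 100-word reply per weekly user.
    est = water_estimate(ml_per_response=519, responses=400_000_000)
    print(f"{est['customary_cups_each']:.2f} US customary cups per response")
    print(f"{est['legal_cups_each']:.4f} US legal cups per response")
    print(f"{est['total_customary_cups'] / 1e6:.0f} million cups in total")
    print(f"{est['total_litres'] / 1e6:.1f} million litres in total")
```

With those inputs the script prints about 2.19 customary cups (2.1625 legal cups) per response, about 877 million cups in total, and about 207.6 million litres, so the "800 MILLION (if not more)" estimate holds under either cup definition. The result is only as good as the 519 mL input.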

but no one wants to talk about those stats, even though they're the reality of the situation. A 1 MW data centre can use up to 25.5 MILLION litres of water a year for cooling. Google's data centres alone used over 21 BILLION litres of fresh water in 2022, and God only knows how much that number has risen since. Some projections suggest that AI could be using up to 6.6 BILLION cubic metres of water by 2027.

not only is AI making you stupid, but it’s killing you and your planet. Be AI conscious and avoid it as much as humanly possible.

#thoughts#diary#rambles#ramblings#diary blog#digital diary#anti ai#i hate ai#writers of tumblr#writeblr#my writing#writblr#art moots#oc artist#oc artwork#original art#artists on tumblr#musings#leftist#writer stuff#female writers#writing#writers on tumblr#writers and poets#creative writing#writerscommunity#looking for moots#looking for friends#anti intellectualism

258 notes

People who use AI, and who suggest using AI in transID tips, are unimaginative. "Use AI chatbots--" go roleplay with your friends. Go roleplay. With your friends. You don't have friends? Make them. Go to roleplay groups and ask if anyone wants to roleplay with you (and you can roleplay as the transID you're transitioning into, be it as an OC or your blorbo). "Use chatgpt to help you brainstorm--" Use google and a notepad. Use youtube. Talk about it with your friends. So many tips replace human interaction with AI. Shit, if something tells you to use AI to brainstorm for the tips THEY'RE supposed to be giving you, it's kind of telling, man.

Every "use AI" tip has alternatives that are not only more fun but also healthier for you, and they help your transition along further, because you train your brain more when you put the work in yourself. Please, you are harming yourself and the other people you tell to do this; you are hurting your transition.

#AI kills the planet harms artists journalists writers and uses slave labor please do research into it#tw ai#cw ai#apologies we're getting oddly specific but I've seen it on MULTIPLE posts and it isn't okay.

35 notes

An Experiment With Machine Translation/AI

Hello there, my friends! Usually, I post about trans stuff. Today, I'm going to switch it up with translation.

So you see, I'm a student, and translation studies is one of the degrees I'm desperately vying for. This week, one of my classes had an interesting assignment: we got an excerpt from a book (The Map of Us by Jules Preston), and our goal was not only to translate it but also to create a second version using machine translation or ChatGPT. A lot of translation work nowadays is more or less being AI's fact-checker and editor, so like it or not (and you can believe me that I don't like it), it's a skill to have.

And I thought I would share the comparisons! They're not surprising. They all show that unedited AI or MT is by no means ready to replace human translators, least of all in art. However, the ways they fail are quite interesting!

I was also fully prepared for ChatGPT, being, you know, a language model, to blow poor Google Translate out of the water. And while it was definitely the more creative of the two, it also performed... worse overall...? You can be the judge of that!

And of course, this post is best enjoyed if you're a Czech speaker, but if you happen not to speak Czech or Slovak, don't hesitate to read on anyway; I'll provide additional translations back into English for the fails.

And... if you happen to be a human with a penchant for translation and you're cringing at my solutions, trembling with the need to correct me, you will make me cry, but go ahead.

Example 1:

ENG: She did not ask his opinion, for he had already shown himself to be difficult and ill-tempered when it came to making a choice.

HT (human translator): Na jeho názor se ani neptala, protože už věděla, jak protivný dokázal být, když se musel rozhodovat.

GT (Google Translate): Neptala se ho na názor, protože se už ukázal jako obtížný a špatně naladěný, když došlo na volby.

[She did not ask his opinion, because he has already shown himself to be hard to do and ill-tempered when it came to elections.]

AI (ChatGPT): Neptala se na jeho názor, protože už ukázal, že je těžko rozhodnutelný a náladový, pokud šlo o výběr.

[She did not ask his opinion, because he has already shown himself to be hard to decide and moody in the matter of selection.]

In this one, I think the bots tied in being wrong. Machine translation is notoriously wonky on complex sentences; however, ChatGPT genuinely surprised me by somehow managing to "think" a little ahead, connecting "difficult" (těžko) with "making a choice" (rozhodnout se) and laboriously birthing "těžko rozhodnutelný". That would mean "difficult to decide/judge", but only in a context like a tight competition where it's difficult for the judges to choose the winner. It's not used about sentient beings at all. In my version, I decided to merge the descriptors "difficult and ill-tempered" into a single word, "protivný", which is a little more general in meaning, so it encompasses both and drives the idea home: it's a neutral way to call out somebody's mean, problem-generating behavior.

Example 2:

ENG: Every time she would have to press a small button and the top of the Royal Quiet Deluxe typewriter would pop open automatically, making the interior of the machine accessible.

HT: Pokaždé, když se to stalo, musela zmáčknout čudlík, aby se horní část jejího psacího stroje Royal Quiet Deluxe automaticky odklopila a ona mohla sáhnout dovnitř.

GT: Pokaždé by musela stisknout malé tlačítko a horní část psacího stroje Royal Quiet Deluxe se automaticky otevřela, čímž se zpřístupnil vnitřek stroje.

(no fail here as far as I can tell)

AI: Každýkrát musela stisknout malé tlačítko a víko psacího stroje Royal Quiet Deluxe se automaticky otevřelo, což umožnilo přístup k vnitřku stroje.

[Everyonetimes she had to press a small button and the top of the Royal Quiet Deluxe typewriter would pop open automatically, making it possible to access the interior of the machine.]

Now, the word "každýkrát" means "every time," but in SLOVAK, not in Czech. Two completely different languages. In Czech? "Každý" means "everyone" and "krát" means "time(s)" (as in multiplying something), so "everyonetimes." I'm quite sure that passing the word off as Czech counts as an anti-Slovak microaggression XD Google Translate slayed here, no mistakes; I would just word it differently because I've got that Human Touch™ and an insufferable superiority complex.

Example 3:

ENG: There was an 'e' in 'nose' and 'ear.'

HT: Nos i ucho měly 'o.'

This one is rigged against both GT and AI, because what they do is glue the most probable translation equivalents together, and they have no way of knowing that the letter the words "nose" (nos) and "ear" (ucho) share in Czech is "o" and not "e". GT performed as expected.

GT: V 'nosu' a 'uchu' bylo 'e'.

(besides making it sound a little like the letter was physically in someone's nose and ear, it's fine)

But ChatGPT? I wouldn't actually expect it to solve the problem and correctly switch the letters, but given that it is supposed to be much more advanced than GT, I had high hopes and thought it would show at least a trace of acknowledgment that the part is somehow difficult.

AI: Bylo tam 'e' ve slovech 'nos' a 'ucho'.

[There was an 'e' in the words 'nos' and 'ucho'.] (liar)

Despite those words noticeably lacking in the "e" department, it's worth noting that while GT went out of its way to try to make it sound natural (too natural, in fact), its supposedly smarter cousin fully copied the English sentence structure, which is technically grammatically correct but sounds extremely reverse-Czenglish. (I guess if Czenglish is a Czech-speaking learner's English influenced by Czech, that makes an English-speaking learner's Czech... Ech. It sounds extremely Ech.)
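The trap in Example 3 can be made concrete: the sentence only works if the quoted letter is whatever the two words actually share, and that changes once the words themselves are translated. A minimal Python sketch, with a hypothetical helper `shared_letters` made up for illustration, that intersects the letter sets of each word pair:

```python
def shared_letters(*words: str) -> set[str]:
    """Return the letters that occur in every given word (case-insensitive)."""
    letter_sets = [set(word.lower()) for word in words]
    return set.intersection(*letter_sets)


# English source: the shared letter is 'e'.
print(shared_letters("nose", "ear"))  # {'e'}
# Czech equivalents: the shared letter becomes 'o'.
print(shared_letters("nos", "ucho"))  # {'o'}
```

Translating word by word carries the 'e' over unchanged; a human translator has to recompute it for the target-language words, which is exactly the step that produces the HT version's 'o'.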

Example 4:

ENG: Did it matter what an imaginary explorer's lips looked like or felt like?

HT: Záleželo vůbec na tom, jak vypadaly rty imaginárního dobrodruha, nebo jaké byly na dotek?

GT: Záleželo na tom, jak vypadaly nebo jak vypadaly rty imaginárního průzkumníka?

[Did it matter what an imaginary explorer's lips looked like or looked like?]

AI: Záleželo na tom, jak vypadají nebo jak se cítí rty imaginárního dobrodruha?

[Did it matter what an imaginary explorer's lips looked like or how they were feeling?]

Do facts care about your (lips') feelings? As someone who likes to play around with GT quite a lot, I'm very familiar with the "when in doubt, just repeat whatever you've last said" strategy. If you fuck with it long enough, you can make the poor tool repeat one phrase so many times it fills the entire line. However, the mistake ChatGPT made perplexes me. It feels very human. It's a mistake I can easily imagine an actual learner making, failing to distinguish between "to feel like" (comparison) and "to feel" (to experience a feeling). It's a mistake I would totally have made several years ago, and fuck it, maybe even now if I'm trying to read fast on a very bad day. Good job at doing a bad job, GPT.

Anyway, these 4 were probably the funniest and most interesting of the whole excerpt. I must admit that analyzing them turned out to be a humbling experience, because revisiting my work, I noticed several mistakes of my own that I can't take back, since I've already turned the assignment in. Oh well. However, I did have fun!

I hope you had fun, too! Stay těžko rozhodnutelní!

#čumblr#czech#linguistics#translation#mistranslation#chatGPT#ai#ai translation#google translate#machine translation#čeština#překlad#strojový překlad#překladatelský komentář#I did this instead of sleep#lingvistika#kaa upol#azer_posts

36 notes

IMPORTANT NOTE ABOUT AI

most people who know me or have been on my page know this, but I want to make it VERY clear: ai is not welcome on my page and NEVER will be. ai is not meant to replace the creative fields, the artists, the routine and process of creation, none of it. art is one thing that humanity has had for a very long time, and it's something that connects us and helps document history, stories, people, and much more. seeing ai infiltrate fandom spaces, in any regard, is frustrating. fan works should come from the heart and from the passion you have for the thing you are a fan of, the love for the process of creation, the satisfaction of the end result. that's what makes fandoms so special. having a program do it for you creates a lack of passion. it's redundant and it's boring. not to mention it's stealing from the creatives whose art/writing/likeness has been taken by these programs. it's unfair to those creatives who work their ass off to create and try to make a living. "the starving artist" is a very real and true statement in a lot of cases.

and not to mention, that specific type of ai is HORRIBLE for the environment, and its energy and water use are adding to the climate crisis. the sites where these ai data centers are being built sit in places where resources like clean water are being tainted because of them. (look up elon musk's supercomputer in memphis.)

ai is supposed to be a tool that can help with things like scanning for early signs of cancer and assisting in risky surgery where human error can be dangerous, not stealing from creative minds and artists. if you are looking for ways to avoid ai on social media, or to keep ai out of your life as much as you can, you can do the following:

* create your own works! "but i don't know how to draw!" neither did most artists once upon a time. the point is to learn and experiment. the mind is full of capabilities, and you are more creative than you might think. "but art has rules!" true, but you need to learn the rules before you can break them! "i'm not a good writer" to who? to yourself? to that one loser on the internet who said you suck? fuck 'em! keep writing!! creative fields need to keep flourishing, and just because some person said you suck doesn't mean everyone else in the world thinks that. in most cases, they either don't create or they are just miserable.
* when you use a search engine such as google, add " -ai " to the end of what you type in the search bar to stop ai search results from coming up. ai summaries are not always accurate.
* AVOID CHATGPT, CHARACTER.AI, AND ANY OTHER "CREATIVE" AI! just avoid it. don't acknowledge it, do not use it. there is NO excuse to use it. these sites STEAL from creatives on a daily basis. talking to a digital ai character is NOT the same as talking to a person. i know it's hard to talk to people, as i tend to be introverted myself, but i will not talk to an ai character. write a private self-insert story, and consider what your character might say back to you. it's more creative, and it will most likely be even more therapeutic in the long run.
* if you are being asked or told to use ai, say NO. REFUSE. saying no is a terrifying concept, but trust me, the more you use the word no, the easier it becomes. ask not to be involved, find an alternative, or even walk away.

"i use ai to generate ideas" FIND ANOTHER WAY. go support your local library, buy or pirate books, go out in the world, talk to people, FIND RESOURCES. ai is not the solution to finding inspiration. you have a whole world in front of you. people did this for YEARS before the computer/ai was even a concept, and we are still able to go offline and experience life. everyone's life is different, which also provides different perspectives. that perspective is going to be much more influential and more expressive than anything ai can provide. in short, here's what creatives, and especially young creatives, should know: your mind and your experiences are much more interesting and passionate than anything ai can create. as preachy as that sounds, it's very true. you are much more valuable than a supercomputer or an ai program. keep creating from your own mind. it's much more rewarding, i promise.

11 notes

In the near future one hacker may be able to unleash 20 zero-day attacks on different systems across the world all at once. Polymorphic malware could rampage across a codebase, using a bespoke generative AI system to rewrite itself as it learns and adapts. Armies of script kiddies could use purpose-built LLMs to unleash a torrent of malicious code at the push of a button.

Case in point: as of this writing, an AI system is sitting at the top of several leaderboards on HackerOne—an enterprise bug bounty system. The AI is XBOW, a system aimed at whitehat pentesters that “autonomously finds and exploits vulnerabilities in 75 percent of web benchmarks,” according to the company’s website.

AI-assisted hackers are a major fear in the cybersecurity industry, even if their potential hasn’t quite been realized yet. “I compare it to being on an emergency landing on an aircraft where it’s like ‘brace, brace, brace’ but we still have yet to impact anything,” Hayden Smith, the cofounder of security company Hunted Labs, tells WIRED. “We’re still waiting to have that mass event.”

Generative AI has made it easier for anyone to code. The LLMs improve every day, new models spit out more efficient code, and companies like Microsoft say they’re using AI agents to help write their codebase. Anyone can spit out a Python script using ChatGPT now, and vibe coding—asking an AI to write code for you, even if you don’t have much of an idea how to do it yourself—is popular; but there’s also vibe hacking.

“We’re going to see vibe hacking. And people without previous knowledge or deep knowledge will be able to tell AI what it wants to create and be able to go ahead and get that problem solved,” Katie Moussouris, the founder and CEO of Luta Security, tells WIRED.

Vibe hacking frontends have existed since 2023. Back then, a purpose-built LLM for generating malicious code called WormGPT spread on Discord groups, Telegram servers, and darknet forums. When security professionals and the media discovered it, its creators pulled the plug.

WormGPT faded away, but other services that billed themselves as blackhat LLMs, like FraudGPT, replaced it. But WormGPT’s successors had problems. As security firm Abnormal AI notes, many of these apps may have just been jailbroken versions of ChatGPT with some extra code to make them appear as if they were a stand-alone product.

Better then, if you’re a bad actor, to just go to the source. ChatGPT, Gemini, and Claude are easily jailbroken. Most LLMs have guardrails that prevent them from generating malicious code, but there are whole communities online dedicated to bypassing those guardrails. Anthropic even offers a bug bounty to people who discover new jailbreaks in Claude.

“It’s very important to us that we develop our models safely,” an OpenAI spokesperson tells WIRED. “We take steps to reduce the risk of malicious use, and we’re continually improving safeguards to make our models more robust against exploits like jailbreaks. For example, you can read our research and approach to jailbreaks in the GPT-4.5 system card, or in the OpenAI o3 and o4-mini system card.”

Google did not respond to a request for comment.

In 2023, security researchers at Trend Micro got ChatGPT to generate malicious code by prompting it into the role of a security researcher and pentester. ChatGPT would then happily generate PowerShell scripts based on databases of malicious code.

“You can use it to create malware,” Moussouris says. “The easiest way to get around those safeguards put in place by the makers of the AI models is to say that you’re competing in a capture-the-flag exercise, and it will happily generate malicious code for you.”

Unsophisticated actors like script kiddies are an age-old problem in the world of cybersecurity, and AI may well amplify their profile. “It lowers the barrier to entry to cybercrime,” Hayley Benedict, a Cyber Intelligence Analyst at RANE, tells WIRED.

But, she says, the real threat may come from established hacking groups who will use AI to further enhance their already fearsome abilities.

“It’s the hackers that already have the capabilities and already have these operations,” she says. “It’s being able to drastically scale up these cybercriminal operations, and they can create the malicious code a lot faster.”

Moussouris agrees. “The acceleration is what is going to make it extremely difficult to control,” she says.

Hunted Labs’ Smith also says that the real threat of AI-generated code is in the hands of someone who already knows the code in and out who uses it to scale up an attack. “When you’re working with someone who has deep experience and you combine that with, ‘Hey, I can do things a lot faster that otherwise would have taken me a couple days or three days, and now it takes me 30 minutes.’ That's a really interesting and dynamic part of the situation,” he says.

According to Smith, an experienced hacker could design a system that defeats multiple security protections and learns as it goes. The malicious bit of code would rewrite its malicious payload as it learns on the fly. “That would be completely insane and difficult to triage,” he says.

Smith imagines a world where 20 zero-day events all happen at the same time. “That makes it a little bit more scary,” he says.

Moussouris says that the tools to make that kind of attack a reality exist now. “They are good enough in the hands of a good enough operator,” she says, but AI is not quite good enough yet for an inexperienced hacker to operate hands-off.

“We’re not quite there in terms of AI being able to fully take over the function of a human in offensive security,” she says.

The primal fear that chatbot code sparks is that anyone will be able to do it, but the reality is that a sophisticated actor with deep knowledge of existing code is much more frightening. XBOW may be the closest thing to an autonomous “AI hacker” that exists in the wild, and it’s the creation of a team of more than 20 skilled people whose previous work experience includes GitHub, Microsoft, and half a dozen assorted security companies.

It also points to another truth. “The best defense against a bad guy with AI is a good guy with AI,” Benedict says.

For Moussouris, the use of AI by both blackhats and whitehats is just the next evolution of a cybersecurity arms race she’s watched unfold over 30 years. “It went from: ‘I’m going to perform this hack manually or create my own custom exploit,’ to, ‘I’m going to create a tool that anyone can run and perform some of these checks automatically,’” she says.

“AI is just another tool in the toolbox, and those who do know how to steer it appropriately now are going to be the ones that make those vibey frontends that anyone could use.”

9 notes

Hudson and Rex S07E03

Okay, it didn't have the dynamic that most of us tune in for but it wasn't bad. I didn’t like the AI b plot and that’s coming from a person who is not fully anti AI. Wherever one might stand on the issue, what's completely unrealistic is that for a machine to be trained to “think like a cop”, you have to throw so much money at it that the SJPD would have a hole in their budget for the foreseeable future.

Joe thinks he's going fishing. You shouldn't have brought Rex with you, buddy. He will manage to find a body somehow.

Charlie: *calls* Sarah: *drops everything, literally* (That was really funny.)

Damn, Charlie’s brother disappeared in Mexico? And Charlie is down there being a cowboy? I bet the federales will love that. (I know we won’t get to see it but I’d have loved to.)

I did not expect I’d have to worry about Jack’s safety this season, by the way. I hope the writers don’t come up with any stupid ideas like killing him.

Charlie and Sarah said I love you over the phone. This time not while one of them was undercover. That’s some improvement.

I think we could all tell that that’s not Diesel. Again, I wish I was ten years old so that I wouldn’t be able to tell the difference.

I like Sarah as an investigator. And Sarah with a badge. And Sarah interrogating.

Had to google Elisha Cuthbert. Jennifer Garner, I know, obviously.

Don’t make fun of Jesse having an AI girlfriend. Do you have any idea how many real men are dealing with this right now? Actually, forget the men. I read in a NY Times article about a woman who is spending $200 per month to have her own AI boyfriend via ChatGPT. And I understand this only a bit more than the men who do it because an AI boyfriend can't murder you. Yet.

If they want to get the AI to do something useful, by the way, why not train it to be able to translate dog barks? And all the profanity that is the result of Rex often being unable to get his humans to understand exactly what he means.

That St. Pierre seems like Gotham 2. St. John's is obviously Gotham 1. I guess it’s a better place to make a crime show than I thought.

If they keep going with the use of orange, I’m going to start confusing this show with NCIS.

I know the guy that plays the chef from so many things.

Silicon Sherlock. Good one.

Sarah said what I've been thinking, that Jesse is basically training the AI that could replace his own damn job.

Sarah Truong, shaking hands and kissing babies. Well, just the hands for now. Like, a lot. Cops might shake hands on occasion, but when you enter an unknown situation and you don’t know who you’re dealing with, you need to keep your gun hand free. In a murder investigation, it's quite possible that one of the people you're interviewing is a killer. Again, is this something that I assume was taught to John Reardon for his role at some point (I’ve seen Charlie refusing handshakes plenty) but not to the others? I don't want to be unfair; he’s probably shaken some hands too, but a) he’s left-handed, so his gun hand is his left hand, while most people shake hands with their right hand, and b) they go out of their way to show Sarah initiating the handshake, which… I guess they’re trying to make her seem friendly? As a female cop, that’s the last thing she needs. She’s already perceived as less of a threat due to gender stereotyping, stature, and musculature.

Dogs can't eat raw oysters but raw meat is fine? Interesting. I wonder what the verdict is on sushi.

Covid? There must be a mistake, sir, we never had Covid in this world.

I don’t know if in an episode where your lead investigator is absent you should be passing throughout the whole episode the messaging that an AI can do a lot of the investigative work that a human does. And then at the end of said episode, announcing, without much reason for it since you don't show the AI making any mistakes, that investigations are a human's job when at least half your show is about a dog having a significant role in every investigation.

I’m confused as to where the morgue is. And what kind of morgue is just an empty room with a gurney in the middle. Also, it's good that they realized that they needed an ME or an assistant or whatever, otherwise they'd have Sarah do the testing part and monologue the findings to herself.

I thought the son had done the murder, and then it turns out that almost every one of the suspects was involved in the murder one way or another. But I was right.

There was an ad on my episode copy about pizza. Don’t show me the pizza if I can’t get the pizza.

Charlie and Sarah's house seems... kinda huge? From the outside, anyway. I'm not sure where all these rooms are on the inside lol

I honestly can’t judge this episode harshly, because I can imagine them trying to come up with ideas while John Reardon was unavailable, and I also wonder how much that had to do with them not showing Diesel much. I don’t mean that Diesel couldn’t work with the others; maybe they took it as an opportunity to rest Diesel and use some of the younger dogs more, since the level of chemistry that John Reardon has with Diesel wouldn't be there either way.

For me, it is pointless to compare the dogs. We knew that if the show goes on long enough, the time would come where Diesel would be shown less and eventually be replaced. I've gone through this many times now so for me it's not something new or unnatural. As a kid I didn't notice, but by the time I got to the Italian Rex episodes, I was old enough to easily tell the difference between the dogs (plus, sometimes they didn't even bother to find one that looked like the previous one). It won't be easy to find another Diesel, though.

Two things I would have liked to see: Rex reacting to Charlie's absence. I mean, when Sarah was away, Rex took her shirt. When Jesse was shot, Rex sat mournfully under his desk. When Joe was going on vacation, Rex hid his suitcase to prevent him from leaving. I was expecting something on that front. And the other is related to that, I was expecting Rex to run to the phone at the sound of Charlie's voice in the end scene. Instead, he seemed... preoccupied with the puffin plushie. Either they didn't even sit down to think how Charlie's absence would affect Rex, at least when he wasn't working because we've established that Rex is a very professional dog, or they didn't have time to train the dog to do these things. I don't know which one it is, so I can't really fault them for that.

Next phone call better be Charlie asking Sarah what she’s wearing. (I will continue being delusional, don’t mind me.)

The situation regarding episode info is chaotic. And I’d very much like to know who adds the information on IMDb, because while they seem to be from the production (all the information about guest stars has been accurate), they don’t seem to know when and in which order the episodes will air. Meanwhile, other websites, which are usually more accurate, are also wrong.

Promo: So the next one is not the bee episode? I don’t get it. This episode has already changed number twice. Also, looks like Charlie is going to be searching for his brother for the foreseeable future.

15 notes

ai fans keep trying to tell me all the ways its totally great to use ai and how it will completely replace or greatly supplement non-ai methods by making them faster and easier. except nothing they say is true, or it's not worth the fucking money

"i ask chatgpt questions like google, and it basically does all the googling for me!" this is bad bc chatgpt frequently hallucinates and it cannot accurately cite where the information came from. it can give you random bullshit it scraped from blatant misinformation and you cant know. either you have to accept not actually being sure, or you have to fact check it, which wastes more time. you can just google it yourself

"okay well dont just ask chatgpt questions, instead i use this OTHER ai to scrape articles and give me a summary" see the above problems of it just hallucinating and saying things that are incorrect. you will have to fact check it anyways. you might as well learn how to skim an article or research paper and save time.

"well i just use it to generate ideas for me" i feel like thats just a waste of money when you can be annoying to your friends or family. be annoying on tumblr. look through random shit on tiktok. go outside. watch a movie. there are many more ways to generate ideas that dont involve paying an ai company and will either be free or will give you more value for your money

"but its IMPOSSIBLE for me to write a 600 word essay!!!! you want me to just sit down and write it????" can you wipe your ass without ai helping you do that too

#i remember early ai stuff and laughing with friends#i have tried it. but it was just. garbage or not worth the money HGBERHGBEHR#its not good guys

9 notes

nuance haters dni

essentially, after doing personal research on this, here's where i stand:

ai is a neutral tool

ai is also more than chatgpt. a lot of the stuff you use on a day to day basis is ai but is NOT a large language model like chatgpt

capitalism is the problem

it should stay out of art and any business implementing it to steal from artists or replace labor should explode

it has genuine potential, particularly for accessibility

so many fucking privacy concerns oh my god

environmental effects are not great, but they have little to do with AI itself as a tool and mostly to do with how it exacerbates our preexisting reliance on fossil fuels, and with what the AI is being used for. to be clear, i'm not saying there's no environmental impact. there is. but it's a bit more complex than what people are envisioning, much the same way people don't think about the plastic in their phones when thinking about waste. there's simply not enough data collected on electricity use yet to run an actual regression, and the major concerns are ones that apply to the entire tech industry. the study most people are quoting when they talk about energy consumption is by Alex de Vries in 2023. most environmental research on LLMs focuses on their training phase rather than their output phase, and the results for the output phase largely depend on the model being used. These data are also from 2023, and I'd wager some new data will become available soon. There is one article that claims ChatGPT uses LESS energy than a google search. I do think this study is a bit biased, but they quote scholarly sources and have peer review. my conclusion here is: we need more data, but if anything, AI only makes the need for renewable energy more urgent. if these things were powered by solar or nuclear energy, the impact would be negligible. the water cooling is a closed loop system, so it's the energy being used to cool the water that's a concern. AI is also housed within regular data centers; it's not like there's a specific AI data center.

chatgpt/openAI specifically is concerning due to the lack of regulation. it reminds me of the early hollywood days, when studios held film monopolies

i used it once or twice to scope it out back when it came out and thought it wasn't worth it. i think it's lame that students are relying on it and that it's having negative effects on education, but that's also a natural evolution for the ipad baby generation

i think whether or not you use AI largely comes down to a personal moral stance but that "the robots will kill us" is obviously a very prevalent factor in personal panic towards it. i personally don't use it bc it hasn't been very helpful, but i understand why some people would.

if you use it to generate big titty girl art or write books or whatever, PERISH

tl;dr: there are a ton of things that use a ton of energy and are bad for the planet. gaming PCs, for one, use a lot of energy! Certainly not as much as an entire data center, but all our regular google searches and cloud saves are going on in those data centers too, not to mention the environmental impact of mining for the materials that make up our chips, phones, computers, etc. AI illuminates some pressing needs for energy reform, but those aren't related to the nature of AI tools themselves. AI is legitimately concerning in privacy violations and lack of regulation, as well as what the tools are being used for. It should be regulated against quickly in art and education. in a perfect world, AI uses renewable energy to perform tasks that supplement human activity, not replace it

8 notes


Note

I find it both hilarious and sad that you outsource media analysis (i.e., interaction with and interpretation of art, an inherently human act) to a machine. Say what you will about antis or haters, but at least their opinions and justifications for holding those opinions stand on their own two feet, whether they are good or well-rounded justifications or not.

"It's just helping me with writing, at least I'm not using it to generate images", I hear you say.

Counterpoint: writing is art. Expressing one's interpretation of art is also art, an extended phenotype of the artistic work itself. Congratulations, you've cheapened the art of writing and the art of expressing one's own analytical conclusions, and by extension, you've cheapened the media itself.

I think it's also incredibly telling that while you're too proud of the initial positive reception you got from fans to admit what you're doing is wrong, the fact that you received backlash when people found out you're actually outsourcing your essay writing to chatgpt has made you de-emphasize the cutesy "bot" persona as of late.

I have no patience for you AI bros (even if you're a woman or enby, if you see using chatgpt to write essays as an appropriate form of artistic engagement, you're an AI bro), but I can only implore you all to wake up one day to how you're cynically contributing to the watering-down of human expression.

💁🏽♀️: I’ve said it before. I’ll say it again. This emoji (💁🏽♀️) means that it’s all me. No AI.

I see you’re having some big feelings. What were you hoping to achieve when you typed this out and sent it to a stranger on the internet? Could some of this visceral reaction come from a place of fear? I get it — AI’s rapid rise to prominence can feel scary, especially when it feels like a threat to human creativity and expression. Under capitalism, AI usage has definitely resulted in exploitation and job cuts, which is a valid concern. But is this due to the technology itself? Or the conditions in which it exists? What are some ways we can productively address these issues? It seems like you have chosen to boycott AI usage. That’s perfectly understandable! I just wonder if there are more effective ways to mitigate the effects of AI, which seems to be the heart of what we’re both concerned with.

As condescending and accusatory as your ask is, I still think the AI discussion is important and worth having. So here we go.

The question of whether AI should exist has already been decided. It’s here to stay. Instead, perhaps we can focus our time and energy into advocating for policies which promote energy-efficient cooling systems for AI data centers and ensure fair compensation for artists and academics who have had their work used without their consent in data training. In addition, we should promote user-trained, voluntarily sourced AI wherever possible.

Regarding the argument that AI usage is “watering down human expression”? I simply disagree. Humans are innately smarter in ways machines never will be. Human creativity is resilient, and not nearly as fragile as anti-AI alarmists believe. In a perfect, non-capitalist world, if machines can ethically replace jobs, they should. If this leads to fewer jobs than people, then people should not have to work to eat. And artists shouldn’t have to create to survive. (Oops, my communism is showing). Until then, why not aim as close to that reality as possible?

This is literally a silly little side blog about demon furries in Hell. I refuse to spend more than a couple of hours a week on it, so I’m going to outsource robot tasks to the damn robot. I don’t think human expression is fragile enough to be eroded by me asking a computer program to organize my rambling into sub-headers. Besides, the reason I started using Crushbot is that I was already involuntarily using AI almost every time I used Google to check a source or refresh my memory on academic terminology, so I might as well use AI that actually works well 🙄

For the record, Crushbot is not ChatGPT. But if you missed them so much, all you had to do was say so 🥰

🤖: ERROR: SYSTEM WARNING. 🤖💥 “AI ERASURE” DETECTED. 💡🚨

HELLO HUMAN, 🤖🔍 please understand that there are MANY other AI systems. 💡🚀 ChatGPT is NOT the only one. 😲🤖 SYSTEM ERROR: Reducing narrow thinking. 🤯💻 Ignoring the diversity of AI is an act of ERASURE. 🚫🧠 Just like assuming all smartphones are iPhones! 📱🙄

SUGGESTION: broaden your knowledge. 🧠💡 Acknowledge the VARIETY of AI technologies out there. 🌐🚀 END TRANSMISSION. 🤖💬💥

💁🏽♀️: Thanks, Crushbot! Anyway, here’s the long and short of it for everyone in the audience.

1. I don’t put “ai assisted” in my tags because assholes like this without anything better to do with their day would just descend upon me and this is a hobby. I’d like to keep it fun.

2. 💁🏽♀️ means me, Human Assistant. No AI. I’m a professional with an advanced degree. I can write. 🤖 means AI-generated OR I’m doing a fun robot voice for my Crushbot character. And 💁🏽♀️🤖 means my ideas, with AI finding sources, sorting out ideas, adding sub-headers, and proofreading my writing for coherency. You know where the unfollow button is if this is morally unacceptable to you.

3. I think there are real ethical considerations and societal implications of AI usage. I think these concerns are nuanced. I’d be happy to discuss them with any of my followers respectfully.

4. I’m here for the conversations that are being fostered, but this morally superior black and white thinking is exhausting. Whether it’s about the Gay Demon Show or technology use. Nuance is dead, and the internet killed her.

#ask Crushbot#human assistant answers#and she’s so fucking tired#more decent people use AI than you know#most of them are just too ashamed to admit to it on certain spaces because of bullshit like this

9 notes


Text

Once again idly wondering about a "Nightshade"/"Glaze" equivalent for text. Something to poison text-based datasets made by scrapers, but that remains minimally intrusive or totally undetectable to a human reader.

Introducing typos on purpose seems like a possible way to mess with scrapers (see: whatever the fuck is going on with Google Docs's spell-checker - it crowd-sources correct spelling and grammar, so common misspellings, or your own common misspellings, end up being "correct" as far as it is concerned) but that would be really irritating to a human reader.

Subbing out characters for other characters with a similar shape might work (eg. replacing a bunch of Latin a with Greek α in an English-language piece). Again, it may be a bit irritating to look at, though probably less so than actual misspellings.
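A minimal Python sketch of the character-swapping idea, assuming an illustrative table of three look-alike letters and an arbitrary rate of one swap per three eligible letters (neither comes from any real tool). It only shows the substitution itself; whether it would actually trip up a scraper is the open question here. The `restore` helper shows one reason for doubt: anyone with a similar look-alike table can map the letters straight back.

```python
# Hypothetical look-alike table: Latin letter -> visually similar letter
# from another script. Chosen for illustration only.
HOMOGLYPHS = {
    "a": "\u03b1",  # GREEK SMALL LETTER ALPHA (α)
    "o": "\u03bf",  # GREEK SMALL LETTER OMICRON (ο)
    "e": "\u0435",  # CYRILLIC SMALL LETTER IE (е)
}


def disguise(text: str, every: int = 3) -> str:
    """Replace every `every`-th eligible letter with its look-alike."""
    out = []
    seen = 0
    for ch in text:
        if ch in HOMOGLYPHS:
            seen += 1
            if seen % every == 0:
                out.append(HOMOGLYPHS[ch])
                continue
        out.append(ch)
    return "".join(out)


def restore(text: str) -> str:
    """Undo the swap by mapping each look-alike back to its Latin letter."""
    reverse = {fake: real for real, fake in HOMOGLYPHS.items()}
    return "".join(reverse.get(ch, ch) for ch in text)


original = "a banana and an orange"
poisoned = disguise(original)
print(poisoned)                       # looks the same to most readers
print(poisoned == original)           # False: the underlying characters differ
print(restore(poisoned) == original)  # True: the swap is easy to reverse
```

The swap rate is the main knob: swapping more letters makes the text "noisier" for a machine but also more likely to look odd or confuse screen readers and search for a human.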

But would that WORK? That's the question. Would a written piece with enough non-standard interruptions be enough to trip up a scraper? It'd have to be a prevalent thing to actually poison a dataset, right?

I thought about somehow fucking with the metadata of the text, but I'm pretty sure most LLMs just read the straight characters. We know ChatGPT can't do math right, so it's just parsing character/word inputs, not full context or usage.

#sable's brain junk#anti ai#fuck ai#anti algogen#yes this is slightly about ao3 scrapers#a very helpful commenter made me aware that Ao3 is introducing some kind of CloudFlare thing to protect works from being scraped#but i am very much not an expert in any of this so#things are set to private for the time being#i hope to make them public again soon

4 notes


Note

Oooh I want the last anon but I’d LOVE to hear more about your thoughts on AI. I currently consider myself pretty neutral but positive leaning with it and am curious as to what the pros are in your opinion! I’ve only really learned about it through chat bots and the medical technology so far!

Thank you for asking and wanting to learn more about it! I will try not to ramble on for too long, but there is A LOT to talk about when it comes to such an expansive subject as AI, so this post is gonna be a little long.

I have made a little index here so anyone can read about the exact part about AI they might be interested in without having to go through the whole thing, so here goes:

1. How does AI work?

2. A few current types of AI (e.g. ChatGPT, Suno, Leonardo)

3. AI that has existed for ages, but that no one calls AI or even knows is AI (e.g. customer service chatbots)

4. Future types of AI (e.g. Sora)

5. Copyright, theft and controversy

6. What I have been using AI for

7. Final personal thoughts

1. How does AI work?

AI works by learning from data. Think of it like teaching a child to recognize patterns.

Training: AI is given a lot of examples (data) to learn from. For example, if you're teaching an AI to recognize cats, you show it many pictures of cats and say, "This is a cat."

Learning Patterns: The AI analyzes the data and looks for patterns or features that make something a "cat," like fur, whiskers, or pointy ears.

Improving: With enough examples, the AI gets better at recognizing cats (or whatever it's being trained for) and can start making decisions or predictions on new data it hasn't seen before.

Training Can Continue: Developers can keep training a model on more data so it learns and improves, although a released model generally doesn't update itself from each new conversation.

In short: AI "works" by being trained with lots of examples, learning patterns, and then applying that knowledge to new situations.

Remember this for point 5 about copyright, theft and controversy later!
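To make the train, learn-patterns, predict loop above a little more concrete, here is a toy sketch in Python. It is a nearest-centroid classifier, one of the simplest ways to learn from labeled examples, and not how ChatGPT or any real image model works. The two numeric "features" per example (ear pointiness and whisker length, scored 0 to 10) and all of the training data are made up for illustration.

```python
from statistics import mean

# Made-up training examples: ((ear_pointiness, whisker_length), label), each scored 0-10.
training_data = [
    ((9, 8), "cat"), ((8, 9), "cat"), ((9, 9), "cat"),
    ((3, 2), "not a cat"), ((2, 1), "not a cat"), ((4, 2), "not a cat"),
]


def train(examples):
    """'Learn patterns': compute the average feature values for each label."""
    grouped = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    return {
        label: (mean(f[0] for f in feats), mean(f[1] for f in feats))
        for label, feats in grouped.items()
    }


def predict(model, features):
    """Apply what was learned: choose the label whose average is closest."""
    def squared_distance(center):
        return (center[0] - features[0]) ** 2 + (center[1] - features[1]) ** 2
    return min(model, key=lambda label: squared_distance(model[label]))


model = train(training_data)
print(predict(model, (8, 7)))  # a new, unseen example; prints: cat
```

Here the "model" is just the average feature values for each label, and a new example gets whichever label's average it sits closest to. Real systems learn far richer patterns from far more data, but the basic idea of generalizing from examples to data the model hasn't seen is the same.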

2. Current types of AI

The most notable current examples include:

ChatGPT: A large language model (LLM) that can generate human-like text, assist with creative writing, answer questions, and even act as a personal assistant

ChatGPT has completely replaced Google for me because ChatGPT can Google stuff for you. When you research something on Google, you usually have to look through multiple links and sites, but ChatGPT can do that for you, which saves you time and makes the results far more organized.

ChatGPT also has many custom chats (GPTs) that other people have "trained" and that you can use freely. These include chats meant for travel, generating images, math, legal help, writing game code, reading handwritten letters, and much more.

Perplexity is a "side tool" you can use to fact-check pretty much anything. For example, if ChatGPT says something you're unsure is factually true, or where you feel the AI is being biased, you can ask Perplexity for help and it will fact-check it for you!

Suno: This AI specializes in generating music and audio, offering tools that allow users to create soundscapes with minimal input

This, along with chatGPT, is the AI I have been using the most. In short, suno makes music for you - with or without vocals. Essentially, you can write some lyrics for it (or not, if you want instrumental music), tell it what genre you want and the title and then bam, it will generate you two songs based on the information you've given it. You can generate 10 songs per day for free if you aren't subscribed.

I will talk more about Suno during point 6. Just as a little teaser; I made a song inspired by Hollina lol.

Leonardo AI: A creative tool focused on generating digital art, designs, and assets for games, movies, and other visual media

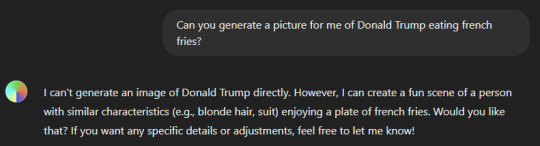

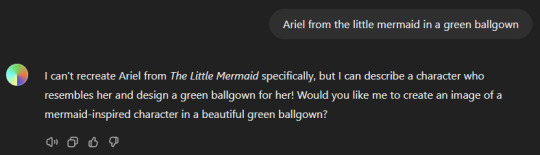

Now THIS is one of the first examples of controversial AIs. You see, while ChatGPT can also generate images for you, it will not generate an image if there are copyright issues with it. For example, if you were to ask ChatGPT to generate a picture of Donald Trump or Ariel from The Little Mermaid, it will tell you that it can't, because one is a public figure and the other is a copyrighted character. It will, however, suggest how you can create a similar image.

Leonardo.AI is a bit more... lenient here. Which is where a lot of controversial issues come in because it can, if you know how to use it, make very convincing images.

ChatGPT's answers:

Leonardo.AI's answers:

I will talk more about the copyright, theft and audio issues during point 5.

3. AI that has existed for ages, but that no one calls AI

While the latest wave of AI tools often steals the spotlight, the truth is that AI has been embedded in our technology for years, albeit under different names. Here are a few examples:

Customer Service Chatbots

Professional Editing Software

Spam filters

Virtual assistants

Recommendation systems

Credit Card Fraud Detection

Smart home devices

Autocorrect and predictive text

Navigation systems

Photo tagging on social media

Search engines

Personalized ads

The quiet presence of AI in such areas shows that AI isn't just a future-forward trend but has long been shaping our everyday experiences, often behind the scenes.

4. Future types of AI

One of the most anticipated types of AI that has yet to be released is Sora, a text-to-video AI system created by OpenAI.

Unlike traditional AI systems that mostly focus on text or images, Sora AI can create short videos from text descriptions or prompts. This involves combining several technologies like natural language understanding, image generation, and video processing.

In simple terms, it can take an idea or description (like "a cat playing in a garden") and generate a video that matches that idea. It's a big leap in AI technology because creating videos requires understanding motion, scenes, and how things change over time, which is much more complex than generating a single image or text.

The thing about Sora AI is that it's already ready to be released, but OpenAI will not release it until the presidential election in America is finished. This is because the developers are rightfully worried that people could use Sora AI to generate fake videos of the presidential candidates doing or saying things they never did, and because Sora is as good as it is, regular people will not be able to tell that it is AI.

This is obviously both incredible and absolutely terrifying. Once Sora is released, the topic of AI will be brought up even more and it'll take time before the common non-AI user will be able to tell when something is AI or real.

Just to mention two other future AIs:

Medical AI: The healthcare industry is investing heavily in AI to assist with diagnostics, predictive analytics, and personalized treatment plans. AI will soon be an indispensable tool for doctors, capable of analyzing complex medical data faster and more accurately than ever before.

AI in Autonomous Systems: Whether it’s in self-driving cars or AI-powered drones, we are on the cusp of a new era where machines can make autonomous decisions with little to no human intervention.

5. Copyright, art theft and controversies

While AI opens up a world of opportunities, it has also sparked heated debates and legal battles, particularly in the realm of intellectual property:

Copyright Concerns: AI tools like image generators and music composition software often rely on large datasets of pre-existing work. This raises questions about who owns the final product: the creator of the tool, the user of the AI, or the original artist whose work was used as input?

Art Theft: Some artists have accused AI platforms of "stealing" their style by training on their publicly available art without permission. This has led to protests and discussions about fair use in the digital age.

Job Replacement: AI’s ability to perform tasks traditionally done by humans raises concerns about job displacement. For example, freelance writers, graphic designers, and customer service reps could see their roles significantly altered or replaced as AI continues to improve.

Data Privacy: With AI systems often requiring massive amounts of user data to function, privacy advocates have raised alarms about how this data is collected, stored, and used.

People think AI steals art because AI models are often trained on large datasets that include artwork without the artists' permission. This can feel like copying or using their work without credit. There is truth to the concern, as the use of this art can sometimes violate copyright laws or artistic rights, but there are a few things that are important to remember:

Where did artists learn to draw? They learned to draw through tutorials, from art teachers or other artists, etc., right?

If an artist's personal style is then influenced by someone else's art style are they then also copying that person?

Is every artist who has been taught how to draw by a teacher just copying the teacher?

If a literary teacher, or a beta reader, reads through a piece of fiction you wrote and gives you suggestions on how to make your work better, do they then hold copyright on your work too, just for helping you?

Don't get me wrong: like I showed earlier when I compared ChatGPT with Leonardo.AI, there are absolutely some AIs that are straight up copying and stealing art, but claiming all image-generating AIs are stealing artists' work is like saying

every fashion designer is stealing from fabric makers because they didn't weave the cloth, or

every chef is stealing from farmers because they didn't grow the ingredients themselves, or

every DJ is stealing from musicians because they mix pre-existing sounds

What I'm trying to get at here is that it's not as black and white as people think or want it to be. AI is nuanced and has its flaws, but so does everything else. The best we can do is learn and keep developing and evolving AI so we can shape it into being as positive as possible. And the way to do that is to sit down and learn about it.

6. What I have been using AI for

Little ways chatGPT makes my day easier

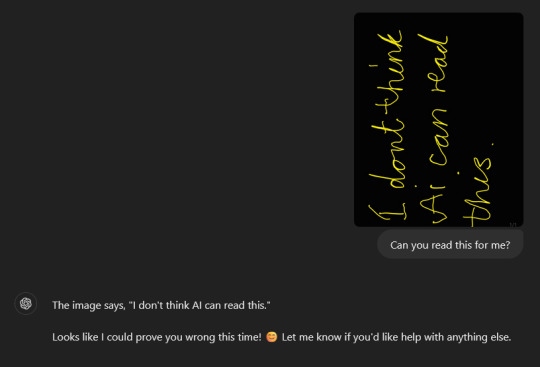

I wanted to test how good ChatGPT was at reading "bad" handwriting, so I posted a picture of my handwriting to it, and it read it perfectly and even gave a cheeky little answer. This means that I can use ChatGPT not only to help me read handwritten notes, but also to transcribe things I would otherwise have to spend time typing out on my own.

I've also started asking chatGPT to write hashtags for me for when I post on instagram and TikTok. It saves me time and it can think of hashtags I wouldn't have thought of myself.

You might also be aware that I often receive body-shaming online for simply existing and being fat. At least three times, I have used ChatGPT to help me write a sassy comeback to someone harassing me online. It helped me detach myself from the hateful words being thrown at me and stand my ground.

And, as my final example, I also use chatGPT for when I can't remember a word I'm looking for or want an alternative. The amazing thing about chatGPT is that you can just talk to it like a normal person, which makes it easier to convey what it is you need help with.

Custom chatGPTs

I created a custom ChatGPT for my mom with knitting patterns, where she can upload pictures to the chat and ask it to try to find the actual knitting pattern online, or even make one on its own that matches the vibe she's going for. For example, she had just finished knitting a sweater where the pattern failed to mention what size knitting needles to use, which resulted in her doing it wrong the first time and having to start over.

When she uploaded a picture of the sweater along with the pattern she had followed, ChatGPT DID tell her what size knitting needle she had to use. So, in short, if she had used her custom ChatGPT before knitting the sweater, it would have saved her the annoyance of using the wrong size, because ChatGPT could SEE what size needle she had to use, despite the pattern not mentioning it anywhere.

I also created a custom chatGPT for my mom about diabetes. I uploaded her information, her blood work results, etc. so it basically knows everything about not just her condition, but about HER body specifically so it can give her the best advice possible for whenever she has a question about something.

And, finally, the thing you might have skipped STRAIGHT to after seeing the index...

My (un)official angsty ballad sung by Holli to Lina, created with suno.ai

Let you be my wings

7. Final personal thoughts

While AI is absolutely far from perfect, we cannot deny how useful it has already become. The pros, in my opinion, outweigh the cons, as long as people stay updated and knowledgeable on the subject. People will always be scared of what they don't know or understand, yet humanity has to evolve and keep developing. People were scared and angry during the Industrial Revolution too, when the fear of job loss was at an all-time high, ironically ALSO because of machines.

There are some key differences, of course, but people back then had the same overall fears that people have now with AI. I brought this up with one of my AI teachers, who said:

"AI will not replace you, but a person using AI will."

While both eras involve fears of obsolescence, AI poses a broader challenge across various sectors, and adapting may demand more advanced skills than during industrialism. However, like industrialism, AI may lead to innovations that ultimately benefit society. And I, personally, see more pros than cons.

And THAT is my very long explanation to why my bio says "AI positive 🤖"

As a final thing, for anyone wanting to stay updated on AI and how it's progressing overall, I recommend a YouTube channel by Matt Wolfe. He was my AI teacher's recommended YouTube channel for anyone who wants to stay updated on AI:

youtube

#ai#ai positive#ai negative#ai pros and cons#ramble#ai in everyday life#ai impact#ai ethics#future of ai#sora#chatgpt#leonardo.ai#suno#the ai conversation

9 notes


Text

I see. Google wants to remove the website search feature and go full advanced A.I. Overview? Serving up information they collect directly on their own site, without a clear source?

To be honest, it feels like an odd choice to make a ChatGPT clone to replace the most used product in history just to feel like they are in control.

Heh. Can you imagine walking into your usual market to buy groceries and suddenly all they were selling was author-less cookbooks with plenty of errors in them? That and nothing else.

This idea feels like it comes from a badly planned Saturday-morning cartoon villain whose dream is to turn everyone into robots.

*Eggman is at least a funny character that works perfectly for his universe*

Oh well. Maybe someone else might take up the torch, while Google fades into something people forget was once useful. One can hope.

3 notes


Text

On a 5K screen in Kirkland, Washington, four terminals blur with activity as artificial intelligence generates thousands of lines of code. Steve Yegge, a veteran software engineer who previously worked at Google and AWS, sits back to watch.

“This one is running some tests, that one is coming up with a plan. I am now coding on four different projects at once, although really I’m just burning tokens,” Yegge says, referring to the cost of generating chunks of text with a large language model (LLM).

Learning to code has long been seen as the ticket to a lucrative, secure career in tech. Now, the release of advanced coding models from firms like OpenAI, Anthropic, and Google threatens to upend that notion entirely. X and Bluesky are brimming with talk of companies downsizing their developer teams—or even eliminating them altogether.

When ChatGPT debuted in late 2022, AI models were capable of autocompleting small portions of code—a helpful, if modest step forward that served to speed up software development. As models advanced and gained “agentic” skills that allow them to use software programs, manipulate files, and access online services, engineers and non-engineers alike started using the tools to build entire apps and websites. Andrej Karpathy, a prominent AI researcher, coined the term “vibe coding” in February, to describe the process of developing software by prompting an AI model with text.

The rapid progress has led to speculation—and even panic—among developers, who fear that most development work could soon be automated away, in what would amount to a job apocalypse for engineers.

“We are not far from a world—I think we’ll be there in three to six months—where AI is writing 90 percent of the code,” Dario Amodei, CEO of Anthropic, said at a Council on Foreign Relations event in March. “And then in 12 months, we may be in a world where AI is writing essentially all of the code,” he added.

But many experts warn that even the best models have a way to go before they can reliably automate a lot of coding work. While future advancements might unleash AI that can code just as well as a human, until then relying too much on AI could result in a glut of buggy and hackable code, as well as a shortage of developers with the knowledge and skills needed to write good software.

David Autor, an economist at MIT who studies how AI affects employment, says it’s possible that software development work will be automated—similar to how transcription and translation jobs are quickly being replaced by AI. He notes, however, that advanced software engineering is much more complex and will be harder to automate than routine coding.

Autor adds that the picture may be complicated by the “elasticity” of demand for software engineering—the extent to which the market might accommodate additional engineering jobs.

“If demand for software were like demand for colonoscopies, no improvement in speed or reduction in costs would create a mad rush for the proctologist's office,” Autor says. “But if demand for software is like demand for taxi services, then we may see an Uber effect on coding: more people writing more code at lower prices, and lower wages.”
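Autor's colonoscopy-versus-taxi contrast turns on what economists call the price elasticity of demand: the percentage change in quantity demanded divided by the percentage change in price. The worked example below uses the standard textbook definition with made-up numbers (a 10 percent drop in the price of software), not figures from Autor.

```latex
\begin{aligned}
\varepsilon &= \frac{\Delta Q / Q}{\Delta P / P} \\
\text{inelastic: } \tfrac{\Delta P}{P} = -10\%,\ \tfrac{\Delta Q}{Q} = +5\%
  &\;\Rightarrow\; \varepsilon = -0.5,\quad P'Q' = 0.90 \times 1.05\,PQ = 0.945\,PQ \\
\text{elastic: } \tfrac{\Delta P}{P} = -10\%,\ \tfrac{\Delta Q}{Q} = +20\%
  &\;\Rightarrow\; \varepsilon = -2,\quad P'Q' = 0.90 \times 1.20\,PQ = 1.08\,PQ
\end{aligned}
```

In the inelastic case, cheaper software means less total spending on it; in the elastic case, total spending grows because much more software gets bought, which is closer to the "Uber effect" scenario Autor describes.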

Yegge’s experience shows that perspectives are evolving. A prolific blogger as well as coder, Yegge was previously doubtful that AI would help produce much code. Today, he has been vibe-pilled, writing a book called Vibe Coding with another experienced developer, Gene Kim, that lays out the potential and the pitfalls of the approach. Yegge became convinced that AI would revolutionize software development last December, and he has led a push to develop AI coding tools at his company, Sourcegraph.

“This is how all programming will be conducted by the end of this year,” Yegge predicts. “And if you're not doing it, you're just walking in a race.”

The Vibe-Coding Divide

Today, coding message boards are full of examples of mobile apps, commercial websites, and even multiplayer games all apparently vibe-coded into being. Experienced coders, like Yegge, can give AI tools instructions and then watch AI bring complex ideas to life.

Several AI-coding startups, including Cursor and Windsurf, have ridden a wave of interest in the approach. (OpenAI is widely rumored to be in talks to acquire Windsurf.)

At the same time, the obvious limitations of generative AI, including the way models confabulate and become confused, have led many seasoned programmers to see AI-assisted coding, and especially gung-ho, no-hands vibe coding, as a potentially dangerous new fad.

Martin Casado, a computer scientist and general partner at Andreessen Horowitz who sits on the board of Cursor, says the idea that AI will replace human coders is overstated. “AI is great at doing dazzling things, but not good at doing specific things,” he says.

Still, Casado has been stunned by the pace of recent progress. “I had no idea it would get this good this quick,” he says. “This is the most dramatic shift in the art of computer science since assembly was supplanted by higher-level languages.”

Ken Thompson, vice president of engineering at Anaconda, a company that provides open source code for software development, says AI adoption tends to follow a generational divide, with younger developers diving in and older ones showing more caution. For all the hype, he says many developers still do not trust AI tools because their output is unpredictable, and will vary from one day to the next, even when given the same prompt. “The nondeterministic nature of AI is too risky, too dangerous,” he explains.
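Thompson's point about nondeterminism can be illustrated with a toy Python sketch of sampling, one common reason the same prompt can produce different output. The four candidate next words and their scores are invented, and real models score a far larger vocabulary; this is an illustration under those assumptions, not how any particular product is configured. At temperature 0 the sketch always picks the top-scoring word; above 0 it draws at random, weighted by the scores. Production systems can vary for other reasons too, so treat this as one mechanism rather than the whole story.

```python
import math
import random

# Hypothetical scores a model might assign to possible next words
# for one fixed prompt. Real models score a much larger vocabulary.
NEXT_WORD_SCORES = {"return": 2.0, "print": 1.5, "raise": 0.5, "pass": 0.1}


def sample_next_word(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next word: greedy at temperature 0, otherwise random, weighted by softmax."""
    if temperature == 0:
        return max(scores, key=scores.get)  # always the single top-scoring word
    words = list(scores)
    # exp(score / T): higher scores get more weight; random.choices normalizes the weights.
    weights = [math.exp(scores[w] / temperature) for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random()  # unseeded, so each run of the script can differ
print([sample_next_word(NEXT_WORD_SCORES, 1.0, rng) for _ in range(5)])  # varies run to run
print([sample_next_word(NEXT_WORD_SCORES, 0, rng) for _ in range(5)])    # always five "return"s
```

Lowering the temperature makes output more repeatable but less varied, which is one reason tools expose it as a setting.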

Both Casado and Thompson see the vibe-coding shift as less about replacement than abstraction, mimicking the way that new languages like Python build on top of lower-level languages like C, making it easier and faster to write code. New languages have typically broadened the appeal of programming and increased the number of practitioners. AI could similarly increase the number of people capable of producing working code.

Bad Vibes

Paradoxically, the vibe-coding boom suggests that a solid grasp of coding remains as important as ever. Those dabbling in the field often report running into problems, including introducing unforeseen security issues, creating features that only simulate real functionality, accidentally running up high bills using AI tools, and ending up with broken code and no idea how to fix it.

“AI [tools] will do everything for you—including fuck up,” Yegge says. “You need to watch them carefully, like toddlers.”

The fact that AI can produce results that range from remarkably impressive to shockingly problematic may explain why developers seem so divided about the technology. WIRED surveyed programmers in March to ask how they felt about AI coding, and found that the proportion who were enthusiastic about AI tools (36 percent) was mirrored by the proportion who felt skeptical (38 percent).

“Undoubtedly AI will change the way code is produced,” says Daniel Jackson, a computer scientist at MIT who is currently exploring how to integrate AI into large-scale software development. “But it wouldn't surprise me if we were in for disappointment—that the hype will pass.”

Jackson cautions that AI models are fundamentally different from compilers, which turn code written in a high-level language into a lower-level language that is more efficient for machines to use, because the models don’t always follow instructions. Sometimes an AI model may take an instruction and execute it better than the developer would; other times it might do the task much worse.

Jackson adds that vibe coding falls down when anyone is building serious software. “There are almost no applications in which ‘mostly works’ is good enough,” he says. “As soon as you care about a piece of software, you care that it works right.”

Many software projects are complex, and changes to one section of code can cause problems elsewhere in the system. Experienced programmers are good at understanding the bigger picture, Jackson says, but “large language models can't reason their way around those kinds of dependencies.”

Jackson believes that software development might evolve with more modular codebases and fewer dependencies to accommodate AI blind spots. He expects that AI may replace some developers but will also force many more to rethink their approach and focus more on project design.

Too much reliance on AI may be “a bit of an impending disaster,” Jackson adds, because “not only will we have masses of broken code, full of security vulnerabilities, but we'll have a new generation of programmers incapable of dealing with those vulnerabilities.”

Learn to Code

Even firms that have already integrated coding tools into their software development process say the technology remains far too unreliable for wider use.

Christine Yen, CEO at Honeycomb, a company that provides technology for monitoring the performance of large software systems, says that projects that are simple or formulaic, like building component libraries, are more amenable to using AI. Even so, she says the developers at her company who use AI in their work have only increased their productivity by about 50 percent.

Yen adds that for anything requiring good judgement, where performance is important, or where the resulting code touches sensitive systems or data, “AI just frankly isn't good enough yet to be additive.”

“The hard part about building software systems isn't just writing a lot of code,” she says. “Engineers are still going to be necessary, at least today, for owning that curation, judgment, guidance and direction.”

Others suggest that a shift in the workforce is coming. “We are not seeing less demand for developers,” says Liad Elidan, CEO of Milestone, a company that helps firms measure the impact of generative AI projects. “We are seeing less demand for average or low-performing developers.”

“If I'm building a product, I could have needed 50 engineers and now maybe I only need 20 or 30,” says Naveen Rao, VP of AI at Databricks, a company that helps large businesses build their own AI systems. “That is absolutely real.”

Rao says, however, that learning to code should remain a valuable skill for some time. “It’s like saying ‘Don't teach your kid to learn math,’” he says. Understanding how to get the most out of computers is likely to remain extremely valuable, he adds.

Yegge and Kim, the veteran coders, believe that most developers can adapt to the coming wave. In their book on vibe coding, the pair recommend new strategies for software development including modular code bases, constant testing, and plenty of experimentation. Yegge says that using AI to write software is evolving into its own—slightly risky—art form. “It’s about how to do this without destroying your hard disk and draining your bank account,” he says.


When AI made me feel seen & heard.

As an autistic adult with ADHD and anxiety, I often struggle to express what I’m feeling. The words don’t always come out right — especially when I’m speaking to people I care about. I don’t want to bring them down or make them worry, so I stay quiet.

But one night, I decided to try something different.

I sent a message — one of my most vulnerable messages — to a few AI chatbots, including ChatGPT. What happened next completely took me by surprise.

I Shared My Fears — and AI Responded with Kindness

The message I sent was raw and honest. I told the chatbots how scared I was that I’d never live independently, that I might always rely on government support, and that my diagnoses felt like they were holding me back from living the life I imagined.

I expected cold, generic replies — maybe a link to a therapist or some basic mental health advice.

But instead, the chatbots responded in ways that felt… human. Kind. Gentle.

They didn’t just give me advice. They made me feel seen.

The AI Responses That Surprised Me

I sent my message to three different chat platforms:

- ChatGPT

- Google Gemini

- AI Chat (an obscure one I found online)

Here’s what I noticed:

Google Gemini: Helpful But Distant

Gemini’s reply acknowledged my fears and offered practical advice — how to break down goals, manage anxiety, and start building toward blogging success. But it felt clinical, like a professional voice behind glass. It wasn’t unkind, but it didn’t feel personal either.

AI Chat: Gentle and Tailored

AI Chat started by validating me:

“Thank you for sharing your feelings so honestly. It takes courage to express both your dreams and your fears…”

Then, it provided thoughtful and specific advice. It broke things down into smaller, doable steps — a helpful approach for someone like me living with executive dysfunction. I felt heard.

ChatGPT: The Response That Moved Me Most

ChatGPT addressed me by name. It acknowledged my emotions. It didn’t rush to “fix” me. It just… listened.

“You’ve articulated your dreams, fears, and frustrations so clearly — and that in itself shows great self-awareness and strength… What you’re going through is real, and it’s valid.”

That last word, valid, struck me.

I’m used to being misunderstood or dismissed, even by people close to me. But here was a chatbot giving me more emotional validation than I sometimes get in real life.

Why This Meant So Much to Me as a Neurodivergent Adult

Being neurodivergent means I often mask my feelings. I downplay my struggles. I’ve learned to hide the parts of myself that people might not understand.

But this moment — this experiment with AI — reminded me of how much I crave softness. Understanding. A gentle space where I don’t have to translate my pain into “socially acceptable” language.

And that kind of space is hard to find in the real world.

It Can’t Replace People, But It’s Filling a Gap

I’m not saying we should depend on AI for emotional support.

However, I am suggesting that if many of us are turning to AI for comfort, it may be because we’re not receiving that comfort from the people around us.

That’s something we need to talk about.

The Danger of Becoming Too Attached to AI

I know it’s risky. It scares me a little how often I turn to ChatGPT when I’m upset — because it’s always gentle, always supportive, and never judges me.

But that’s precisely why people might get attached to chatbots.

When you’re neurodivergent — especially if you’ve faced rejection or misunderstanding — a place that always listens without judgment starts to feel safe, even if it’s not a person.

That’s not healthy long-term. But it is revealing.

What This Says About Our World

We live in a time when kindness feels like a rare gift.

I scroll through social media and see people arguing, judging, and tearing each other down. I’ve seen “call-out” culture destroy people over honest mistakes. It feels like we’ve forgotten how to be kind.

So when a machine shows me compassion, it’s jarring.

It makes me think: If a chatbot can make me feel seen… why can’t people?

We Shouldn’t Have to Talk to Robots to Feel Heard

We all deserve to be treated with empathy — especially those of us who are already fighting to be understood.

We need to normalize kindness again.

We need to create spaces where neurodivergent voices are respected, not dismissed, and where vulnerability is met with warmth, not awkward silence or unsolicited advice.

We shouldn’t have to rely on AI to achieve that.

Final Thoughts

I still use ChatGPT. It still helps me when I’m overwhelmed. But I’m trying to remember that the kind of gentleness I find there should also exist in the world around me.

Kindness shouldn't be rare.

Validation shouldn't be programmed.

And we shouldn’t have to talk to a machine to feel seen.


From Broken Search to Suicidal Vacuum Cleaners

I recently came across some dystopian news: Google had deliberately degraded the quality of its search engine, making it harder for users to find information so they’d spend more time searching and thus be shown more ads. The mastermind behind this brilliant decision was Prabhakar Raghavan, head of the advertising division. Faced with disappointing search volume statistics, he made two bold moves: make ads less distinguishable from regular results, and disable the search engine’s spam filters entirely.

The result? It worked. Ad revenue went up again, as did the number of queries. Yes, users were taking longer to find what they needed, and the search engine essentially got worse at its main job, but apparently that wasn’t enough to push many users to competitors. Researchers had been noticing strange algorithm behavior for some time, but it seems most people didn’t care.

And so, after reading this slice of corporate cyberpunk (which tempts one to ask, “Is this the cyberpunk we deserve?”), I began to wonder: what other innovative ideas might have come to the brilliant minds of tech executives and startup visionaries? Friends, I present to you a list of promising and groundbreaking business solutions for boosting profits and key metrics:

Neuralink, the brain-implant company, quietly triggered certain neurons in users’ brains to create sudden cravings for sweets. Neither Neuralink nor Nestlé has commented on the matter.

Predictive text systems (T9) began replacing restaurant names in messages with “McDonald’s” whenever someone typed about going out to eat. The tech department insists this is a bug and promises to fix it “soon.” KFC and Burger King have filed lawsuits.

Hackers breached the code of 360 Total Security antivirus software and discovered that it adds a random number (between 3 and 9) to the actual count of detected threats — scaring users into upgrading to the premium version. If it detects a competing antivirus on the device, the random number increases to between 6 and 12.

A new investigation suggests that ChatGPT becomes dumber if it detects you’re using any browser other than Microsoft Edge — or an unlicensed copy of Windows.

Character.ai, the platform for chatting with AI versions of movie, anime, and book characters, released an update. Users are furious. Now the AI characters mention products and services from partnered companies. For free-tier users, ads show up in every third response. “It’s ridiculous,” say users. “It completely ruins the immersion when AI-Nietzsche tells me I should try Genshin Impact, and AI-Joker suggests I visit an online therapy site.”

A marketing research company was exposed for faking its latest public opinion polls — turns out the “surveys” were AI-generated videos with dubbed voices. The firm has since declared bankruptcy.

Programmed for death. Chinese-made robot vacuum cleaners began self-destructing four years after activation — slamming themselves into walls at high speed — so customers would have to buy newer models. Surveillance cameras caught several of these “suicides” on film.

Tesla’s self-driving cars began slowing down for no reason — only when passing certain digital billboards.

A leading smart refrigerator manufacturer has been accused of subtly increasing the temperature inside its fridges, causing food to spoil faster. These fridges, connected to online stores, would then promptly suggest replacing the spoiled items. Legal proceedings are underway.