#SQL query performance


Measuring SQL Query Duration: GETDATE() and DATEDIFF()

Introduction Hey there, fellow SQL enthusiast! Have you ever wondered if using GETDATE() and DATEDIFF() is sufficient for measuring the duration of your SQL queries? Well, you’re in the right place! In this article, we’ll dive into the world of query performance measurement and explore the effectiveness of these functions. Get ready to level up your SQL skills and optimize your queries like a…


Unlocking the Full Power of Apache Spark 3.4 for Databricks Runtime!

You’ve dabbled in the magic of Apache Spark 3.4 with my previous blog “Exploring Apache Spark 3.4 Features for Databricks Runtime”, where we journeyed through 8 game-changing features, from the revolutionary Spark Connect to the nifty tricks of constructing parameterized SQL queries. But guess what? We’ve only scratched the surface! In this sequel, we’re diving deeper into the treasure trove of…


#Apache Spark#Azure Databricks#Azure Databricks Cluster#Data Frame#Databricks#databricks apache spark#Databricks SQL#Memory Profiler#NumPy#performance#Pivot#pyspark#PySpark UDFs#SQL#SQL queries#SQL SELECT#SQL Server


Which coding languages should I learn to boost my IT career opportunities?

A career in IT needs a mix of versatile programming languages. Here are some of the most essential ones:

Python – Easy to learn and widely used for data science, machine learning, web development, and automation.

JavaScript – Key for web development, allowing interactive websites and backend work with frameworks like Node.js.

Java – Known for stability, popular for Android apps, enterprise software, and backend development.

C++ – Great for systems programming, game development, and areas needing high performance.

SQL – Essential for managing and querying databases, crucial for data-driven roles.

C# – Common in enterprise environments and used in game development, especially with Unity.


Unlocking the Power of Data: Essential Skills to Become a Data Scientist

In today's data-driven world, the demand for skilled data scientists is skyrocketing. These professionals are the key to transforming raw information into actionable insights, driving innovation and shaping business strategies. But what exactly does it take to become a data scientist? It's a multidisciplinary field, requiring a unique blend of technical prowess and analytical thinking. Let's break down the essential skills you'll need to embark on this exciting career path.

1. Strong Mathematical and Statistical Foundation:

At the heart of data science lies a deep understanding of mathematics and statistics. You'll need to grasp concepts like:

Linear Algebra and Calculus: Essential for understanding machine learning algorithms and optimizing models.

Probability and Statistics: Crucial for data analysis, hypothesis testing, and drawing meaningful conclusions from data.

2. Programming Proficiency (Python and/or R):

Data scientists are fluent in at least one, if not both, of the dominant programming languages in the field:

Python: Known for its readability and extensive libraries like Pandas, NumPy, Scikit-learn, and TensorFlow, making it ideal for data manipulation, analysis, and machine learning.

R: Specifically designed for statistical computing and graphics, R offers a rich ecosystem of packages for statistical modeling and visualization.

3. Data Wrangling and Preprocessing Skills:

Raw data is rarely clean and ready for analysis. A significant portion of a data scientist's time is spent on:

Data Cleaning: Handling missing values, outliers, and inconsistencies.

Data Transformation: Reshaping, merging, and aggregating data.

Feature Engineering: Creating new features from existing data to improve model performance.
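To make these three steps concrete, here is a minimal sketch in plain Python. The records, column names, and the helper functions `clean` and `add_features` are all hypothetical, made up for illustration; in practice a library such as Pandas would usually do this work.

```python
from statistics import median

# Hypothetical raw records: one duplicate row and two missing values.
raw_rows = [
    {"id": 1, "age": 34, "income": 52000.0},
    {"id": 2, "age": None, "income": 61000.0},
    {"id": 2, "age": None, "income": 61000.0},  # exact duplicate of the row above
    {"id": 3, "age": 45, "income": None},
    {"id": 4, "age": 29, "income": 48000.0},
]

def clean(rows):
    """Drop rows with a repeated id, then fill missing values with the column median."""
    seen, unique = set(), []
    for row in rows:
        if row["id"] not in seen:
            seen.add(row["id"])
            unique.append(dict(row))  # copy so the input is left untouched
    for column in ("age", "income"):
        known = [r[column] for r in unique if r[column] is not None]
        fill = median(known)
        for r in unique:
            if r[column] is None:
                r[column] = fill
    return unique

def add_features(rows):
    """Feature engineering: derive a new column from two existing ones."""
    return [{**r, "income_per_age": r["income"] / r["age"]} for r in rows]

cleaned = add_features(clean(raw_rows))
```

Filling with the median is only one possible choice; dropping incomplete rows or using a model-based imputation may suit other datasets better.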

4. Expertise in Databases and SQL:

Data often resides in databases. Proficiency in SQL (Structured Query Language) is essential for:

Extracting Data: Querying and retrieving data from various database systems.

Data Manipulation: Filtering, joining, and aggregating data within databases.

5. Machine Learning Mastery:

Machine learning is a core component of data science, enabling you to build models that learn from data and make predictions or classifications. Key areas include:

Supervised Learning: Regression, classification algorithms.

Unsupervised Learning: Clustering, dimensionality reduction.

Model Selection and Evaluation: Choosing the right algorithms and assessing their performance.

6. Data Visualization and Communication Skills:

Being able to effectively communicate your findings is just as important as the analysis itself. You'll need to:

Visualize Data: Create compelling charts and graphs to explore patterns and insights using libraries like Matplotlib, Seaborn (Python), or ggplot2 (R).

Tell Data Stories: Present your findings in a clear and concise manner that resonates with both technical and non-technical audiences.

7. Critical Thinking and Problem-Solving Abilities:

Data scientists are essentially problem solvers. You need to be able to:

Define Business Problems: Translate business challenges into data science questions.

Develop Analytical Frameworks: Structure your approach to solve complex problems.

Interpret Results: Draw meaningful conclusions and translate them into actionable recommendations.

8. Domain Knowledge (Optional but Highly Beneficial):

Having expertise in the specific industry or domain you're working in can give you a significant advantage. It helps you understand the context of the data and formulate more relevant questions.

9. Curiosity and a Growth Mindset:

The field of data science is constantly evolving. A genuine curiosity and a willingness to learn new technologies and techniques are crucial for long-term success.

10. Strong Communication and Collaboration Skills:

Data scientists often work in teams and need to collaborate effectively with engineers, business stakeholders, and other experts.

Kickstart Your Data Science Journey with Xaltius Academy's Data Science and AI Program:

Acquiring these skills can seem like a daunting task, but structured learning programs can provide a clear and effective path. Xaltius Academy's Data Science and AI Program is designed to equip you with the essential knowledge and practical experience to become a successful data scientist.

Key benefits of the program:

Comprehensive Curriculum: Covers all the core skills mentioned above, from foundational mathematics to advanced machine learning techniques.

Hands-on Projects: Provides practical experience working with real-world datasets and building a strong portfolio.

Expert Instructors: Learn from industry professionals with years of experience in data science and AI.

Career Support: Offers guidance and resources to help you launch your data science career.

Becoming a data scientist is a rewarding journey that blends technical expertise with analytical thinking. By focusing on developing these key skills and leveraging resources like Xaltius Academy's program, you can position yourself for a successful and impactful career in this in-demand field. The power of data is waiting to be unlocked – are you ready to take the challenge?


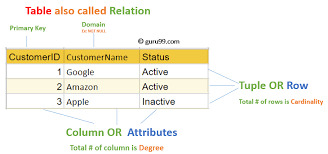

Structured Query Language (SQL): A Comprehensive Guide

Structured Query Language, commonly called SQL (pronounced "ess-cue-ell" or sometimes "sequel"), is the standard language for managing and manipulating relational databases. Developed in the early 1970s by IBM researchers Donald D. Chamberlin and Raymond F. Boyce, SQL has since become the dominant language for database systems around the world.

Structured query language commands with examples

Today, virtually every major relational database management system (RDBMS), such as MySQL, PostgreSQL, Oracle, SQL Server, and SQLite, uses SQL as its core query language.

What is SQL?

SQL is a domain-specific language used to:

Retrieve data from a database.

Insert, update, and delete records.

Create and modify database structures (tables, indexes, views).

Manage access permissions and security.

Perform data analytics and reporting.

In simple terms, SQL lets users communicate with databases to store and retrieve structured data.

Key Characteristics of SQL

Declarative Language: SQL focuses on what to do, not how to do it. For instance, when you write SELECT * FROM users, you don’t need to tell SQL how to fetch the data; the database engine figures that out.

Standardized: SQL has been standardized by organizations like ANSI and ISO, with most database systems implementing the core language and adding their own extensions.

Relational Model-Based: SQL is designed to work with tables (also called relations) in which data is organized in rows and columns.

Core Components of SQL

SQL can be broken down into several main categories of commands, each with a distinct purpose.

1. Data Definition Language (DDL)

DDL commands are used to define or modify the structure of database objects like tables, schemas, indexes, and so on.

Common DDL commands:

CREATE: To create a new table or database.

ALTER: To modify an existing table (add or remove columns).

DROP: To delete a table or database.

TRUNCATE: To delete all rows from a table but preserve its structure.

Example:

CREATE TABLE employees (
id INT PRIMARY KEY,
name VARCHAR(100),
salary DECIMAL(10,2)
);

2. Data Manipulation Language (DML)

DML commands are used for data operations such as inserting, updating, or deleting records.

Common DML commands:

SELECT: Retrieve data from one or more tables.

INSERT: Add new records.

UPDATE: Modify existing records.

DELETE: Remove records.

Example:

INSERT INTO employees (id, name, salary)
VALUES (1, 'Alice Johnson', 75000.00);

3. Data Query Language (DQL)

Some experts separate SELECT from DML and treat it as its own category: DQL.

Example:

SELECT name, salary FROM employees WHERE salary > 60000;

This command retrieves names and salaries of employees earning more than 60,000.

4. Data Control Language (DCL)

DCL commands handle permissions and access control.

Common DCL commands:

GRANT: Give access to users.

REVOKE: Remove access.

Example:

GRANT SELECT, INSERT ON employees TO john_doe;

5. Transaction Control Language (TCL)

TCL commands manage transactions to ensure data integrity.

Common TCL commands:

BEGIN: Start a transaction.

COMMIT: Save changes.

ROLLBACK: Undo changes.

SAVEPOINT: Set a savepoint inside a transaction.

Example:

BEGIN;
UPDATE employees SET salary = salary * 1.10;
COMMIT;
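To see what COMMIT and ROLLBACK actually do, here is a small sketch that runs the same raise through Python's built-in sqlite3 module. SQLite is only a stand-in database here, and the `give_raise` helper and its `fail` flag are hypothetical names used to simulate an error partway through the transaction.

```python
import sqlite3

# isolation_level=None puts the connection in autocommit mode, so BEGIN,
# COMMIT and ROLLBACK are issued explicitly, as in the SQL example above.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.execute("INSERT INTO employees VALUES (1, 'Alice Johnson', 75000.0)")

def give_raise(connection, factor, fail=False):
    """Apply a raise inside one transaction; undo it if anything goes wrong."""
    connection.execute("BEGIN")
    try:
        connection.execute("UPDATE employees SET salary = salary * ?", (factor,))
        if fail:
            raise RuntimeError("simulated failure before COMMIT")
        connection.execute("COMMIT")
    except RuntimeError:
        connection.execute("ROLLBACK")

give_raise(conn, 1.10, fail=True)  # rolled back: the salary is unchanged
after_rollback = conn.execute("SELECT salary FROM employees").fetchone()[0]

give_raise(conn, 1.10)             # committed: the raise is kept
after_commit = conn.execute("SELECT salary FROM employees").fetchone()[0]
```

The first call leaves the salary at 75000 because the UPDATE is undone before it is ever committed; only the second call makes the change permanent.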

SQL Clauses and Syntax Elements

WHERE: Filters rows.

ORDER BY: Sorts results.

GROUP BY: Groups rows sharing a property.

HAVING: Filters groups.

JOIN: Combines rows from two or more tables.

Example with JOIN:

SELECT employees.name, departments.name
FROM employees
JOIN departments ON employees.dept_id = departments.id;
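The other clauses in the list are easiest to understand when they appear together in one query. The sketch below uses Python's built-in sqlite3 module with a made-up employees table (the `dept` column and all rows are assumptions for illustration) to show the order in which WHERE, GROUP BY, HAVING, and ORDER BY take effect.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept TEXT, salary REAL);
    INSERT INTO employees VALUES
        (1, 'Alice', 'Engineering', 90000),
        (2, 'Bob',   'Engineering', 70000),
        (3, 'Cara',  'Sales',       50000),
        (4, 'Dan',   'Sales',       40000),
        (5, 'Eve',   'Support',     30000),
        (6, 'Finn',  'Support',     95000);
""")

rows = conn.execute("""
    SELECT dept, AVG(salary) AS avg_salary
    FROM employees
    WHERE salary > 35000          -- WHERE filters individual rows first
    GROUP BY dept                 -- remaining rows are grouped by department
    HAVING AVG(salary) > 60000    -- HAVING filters whole groups
    ORDER BY avg_salary DESC      -- the surviving groups are sorted last
""").fetchall()
```

Here WHERE drops Eve's row before any averaging happens, HAVING then removes the Sales group as a whole, and the two remaining departments come back sorted by average salary.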

Types of Joins in SQL

INNER JOIN: Returns records with matching values in both tables.

LEFT JOIN: Returns all records from the left table, and matched records from the right.

RIGHT JOIN: Opposite of LEFT JOIN.

FULL JOIN: Returns all records when there is a match in either table.

SELF JOIN: Joins a table to itself.
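The difference between INNER JOIN and LEFT JOIN shows up as soon as one row has no match. A minimal sketch, again assuming Python's built-in sqlite3 module and two tiny made-up tables (RIGHT and FULL JOIN are left out because older SQLite versions do not support them):

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept_id INTEGER);
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
    INSERT INTO employees VALUES (1, 'Alice', 1), (2, 'Bob', NULL);
""")

# INNER JOIN keeps only employees that have a matching department.
inner_rows = conn.execute("""
    SELECT e.name, d.name FROM employees AS e
    INNER JOIN departments AS d ON e.dept_id = d.id
    ORDER BY e.id
""").fetchall()

# LEFT JOIN keeps every employee; missing departments come back as NULL (None).
left_rows = conn.execute("""
    SELECT e.name, d.name FROM employees AS e
    LEFT JOIN departments AS d ON e.dept_id = d.id
    ORDER BY e.id
""").fetchall()
```

Bob has no department, so he disappears from the INNER JOIN result but stays in the LEFT JOIN result with an empty department name.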

Subqueries and Nested Queries

A subquery is a query nested inside another query.

Example:

SELECT name FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);

This finds employees who earn more than the average salary.

Functions in SQL

SQL includes built-in functions for performing calculations and formatting:

Aggregate Functions: SUM(), AVG(), COUNT(), MAX(), MIN()

String Functions: UPPER(), LOWER(), CONCAT()

Date Functions: NOW(), CURDATE(), DATEADD() (exact names vary by database system)

Conversion Functions: CAST(), CONVERT()

Indexes in SQL

An index is used to speed up searches.

Example:

CREATE INDEX idx_name ON employees(name);

Indexes help improve the performance of queries on large datasets.
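One way to check whether an index is being used is to ask the database for its query plan. The sketch below does this with Python's built-in sqlite3 module and SQLite's EXPLAIN QUERY PLAN statement; other databases have their own equivalents, and the `query_plan` helper and the generated rows are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [(f"employee_{i}", 40000.0 + i) for i in range(1000)],
)

def query_plan(connection):
    """Return SQLite's plan description for a lookup by name."""
    rows = connection.execute(
        "EXPLAIN QUERY PLAN SELECT salary FROM employees WHERE name = ?",
        ("employee_500",),
    ).fetchall()
    return " ".join(row[-1] for row in rows)  # the last column holds the plan text

plan_before = query_plan(conn)  # without an index, a full table scan is expected
conn.execute("CREATE INDEX idx_name ON employees(name)")
plan_after = query_plan(conn)   # with the index, the plan should mention idx_name
```

The exact wording of the plan differs between SQLite versions, so it is safer to look for the index name in the text than to compare whole strings.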

Views in SQL

A view is a virtual table defined by a query.

Example:

CREATE VIEW high_earners AS
SELECT name, salary FROM employees WHERE salary > 80000;

Views are useful for:

Security (hide certain columns)

Simplifying complex queries

Reusability

Normalization in SQL

Normalization is the process of organizing data to reduce redundancy. It involves breaking a database into multiple related tables and defining foreign keys to link them.

1NF: No repeating groups.

2NF: No partial dependency.

3NF: No transitive dependency.

SQL in Real-World Applications

Web Development: Most web apps use SQL to manage users, sessions, orders, and content.

Data Analysis: SQL is widely used in data analytics platforms like Power BI, Tableau, and even Excel (through Power Query).

Finance and Banking: SQL handles transaction logs, audit trails, and reporting systems.

Healthcare: Managing patient records, treatment histories, and billing.

Retail: Inventory systems, sales analysis, and customer data.

Government and Research: For storing and querying large datasets.

Popular SQL Database Systems

MySQL: Open-source and widely used in web apps.

PostgreSQL: Advanced features and standards compliance.

Oracle DB: Commercial, highly scalable, enterprise-grade.

SQL Server: Microsoft’s relational database.

SQLite: Lightweight, file-based database used in mobile and desktop apps.

Limitations of SQL

SQL can be verbose and complex for certain operations.

Not ideal for unstructured data (NoSQL databases like MongoDB are better suited).

Vendor-specific extensions can reduce portability.


Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

To use PySpark, you need to install it and set up a Spark session. Once initialized, Spark allows users to read, process, and analyze large datasets.

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.
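The ideas behind partitioning and broadcast joins can be illustrated without a cluster. The following is a plain-Python analogy, not PySpark code: the `partition` and `broadcast_join` functions and the sample data are hypothetical, and real Spark distributes this work across executors instead of looping in one process.

```python
from collections import defaultdict

def partition(rows, num_partitions, key):
    """Hash-partition rows so each partition can be processed independently."""
    parts = defaultdict(list)
    for row in rows:
        parts[hash(row[key]) % num_partitions].append(row)
    return list(parts.values())

def broadcast_join(large_partitions, small_table, key):
    """Join every partition against a full copy of the small lookup table."""
    lookup = {row[key]: row for row in small_table}  # the "broadcast" copy
    joined = []
    for part in large_partitions:  # on a cluster, partitions would run in parallel
        for row in part:
            match = lookup.get(row[key])
            if match is not None:
                joined.append({**row, **match})
    return joined

# Hypothetical data: a larger orders table and a small country lookup table.
orders = [
    {"order_id": 1, "country": "DE"},
    {"order_id": 2, "country": "FR"},
    {"order_id": 3, "country": "DE"},
    {"order_id": 4, "country": "XX"},  # no matching country, dropped by the join
]
countries = [
    {"country": "DE", "country_name": "Germany"},
    {"country": "FR", "country_name": "France"},
]

parts = partition(orders, 2, "order_id")
joined = sorted(broadcast_join(parts, countries, "country"), key=lambda r: r["order_id"])
```

Because every partition holds its own copy of the small table, no rows from the large table need to move between partitions, which is the saving a broadcast join aims for.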

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.

Running PySpark on a Cluster

PySpark can run on a single machine or be deployed on a cluster using a distributed computing system like Hadoop YARN. This enables large-scale data processing with improved efficiency.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For free programming language tutorials, visit https://www.tpointtech.com/


My Experience with Database Homework Help from DatabaseHomeworkHelp.com

As a student majoring in computer science, managing the workload can be daunting. One of the most challenging aspects of my coursework has been database management. Understanding the intricacies of SQL, ER diagrams, normalization, and other database concepts often left me overwhelmed. That was until I discovered Database Homework Help from DatabaseHomeworkHelp.com. This service has been a lifesaver, providing me with the support and guidance I needed to excel in my studies.

The Initial Struggle

When I first started my database course, I underestimated the complexity of the subject. I thought it would be as straightforward as other programming courses I had taken. However, as the semester progressed, I found myself struggling with assignments and projects. My grades were slipping, and my confidence was waning. I knew I needed help, but I wasn't sure where to turn.

I tried getting assistance from my professors during office hours, but with so many students needing help, the time available was limited. Study groups with classmates were somewhat helpful, but they often turned into social gatherings rather than focused study sessions. I needed a more reliable and structured form of support.

Discovering DatabaseHomeworkHelp.com

One evening, while frantically searching for online resources to understand an especially tricky ER diagram assignment, I stumbled upon DatabaseHomeworkHelp.com. The website promised expert help on a wide range of database topics, from basic queries to advanced database design and implementation. Skeptical but hopeful, I decided to give it a try. It turned out to be one of the best decisions I’ve made in my academic career.

First Impressions

The first thing that struck me about DatabaseHomeworkHelp.com was the user-friendly interface. The website was easy to navigate, and I quickly found the section where I could submit my assignment. The process was straightforward: I filled out a form detailing my assignment requirements, attached the relevant files, and specified the deadline.

Within a few hours, I received a response from one of their database experts. The communication was professional and reassuring. They asked a few clarifying questions to ensure they fully understood my needs, which gave me confidence that I was in good hands.

The Quality of Help

What impressed me the most was the quality of the assistance I received. The expert assigned to my task not only completed the assignment perfectly but also provided a detailed explanation of the solutions. This was incredibly helpful because it allowed me to understand the concepts rather than just submitting the work.

For example, in one of my assignments, I had to design a complex database schema. The expert not only provided a well-structured schema but also explained the reasoning behind each table and relationship. This level of detail helped me grasp the fundamental principles of database design, something I had been struggling with for weeks.

Learning and Improvement

With each assignment I submitted, I noticed a significant improvement in my understanding of database concepts. The experts at DatabaseHomeworkHelp.com were not just solving problems for me; they were teaching me how to solve them myself. They broke down complex topics into manageable parts and provided clear, concise explanations.

I particularly appreciated their help with SQL queries. Writing efficient and effective SQL queries was one of the areas I found most challenging. The expert guidance I received helped me understand how to approach query writing logically. They showed me how to optimize queries for better performance and how to avoid common pitfalls.

Timely Delivery

Another aspect that stood out was their commitment to deadlines. As a student, timely submission of assignments is crucial. DatabaseHomeworkHelp.com always delivered my assignments well before the deadline, giving me ample time to review the work and ask any follow-up questions. This reliability was a significant relief, especially during times when I had multiple assignments due simultaneously.

Customer Support

The customer support team at DatabaseHomeworkHelp.com deserves a special mention. They were available 24/7, and I never had to wait long for a response. Whether I had a question about the pricing, needed to clarify the assignment details, or required an update on the progress, the support team was always there to assist me promptly and courteously.

Affordable and Worth Every Penny

As a student, budget is always a concern. I was worried that professional homework help would be prohibitively expensive. However, I found the pricing at DatabaseHomeworkHelp.com to be reasonable and affordable. They offer different pricing plans based on the complexity and urgency of the assignment, making it accessible for students with varying budgets.

Moreover, considering the quality of help I received and the improvement in my grades, I can confidently say that their service is worth every penny. The value I got from their expert assistance far outweighed the cost.

A Lasting Impact

Thanks to DatabaseHomeworkHelp.com, my grades in the database course improved significantly. But beyond the grades, the most valuable takeaway has been the knowledge and confidence I gained. I now approach database assignments with a clearer understanding and a more structured method. This confidence has also positively impacted other areas of my studies, as I am less stressed and more organized.

Final Thoughts

If you're a student struggling with database management assignments, I highly recommend Database Homework Help from DatabaseHomeworkHelp.com. Their expert guidance, timely delivery, and excellent customer support can make a significant difference in your academic journey. They don’t just provide answers; they help you understand the material, which is crucial for long-term success.

In conclusion, my experience with DatabaseHomeworkHelp.com has been overwhelmingly positive. The support I received has not only helped me improve my grades but also enhanced my overall understanding of database concepts. I am grateful for their assistance and will undoubtedly continue to use their services as I progress through my computer science degree.


Why Tableau is Essential in Data Science: Transforming Raw Data into Insights

Data science is all about turning raw data into valuable insights. But numbers and statistics alone don’t tell the full story—they need to be visualized to make sense. That’s where Tableau comes in.

Tableau is a powerful tool that helps data scientists, analysts, and businesses see and understand data better. It simplifies complex datasets, making them interactive and easy to interpret. But with so many tools available, why is Tableau a must-have for data science? Let’s explore.

1. The Importance of Data Visualization in Data Science

Imagine you’re working with millions of data points from customer purchases, social media interactions, or financial transactions. Analyzing raw numbers manually would be overwhelming.

That’s why visualization is crucial in data science:

Identifies trends and patterns – Instead of sifting through spreadsheets, you can quickly spot trends in a visual format.

Makes complex data understandable – Graphs, heatmaps, and dashboards simplify the interpretation of large datasets.

Enhances decision-making – Stakeholders can easily grasp insights and make data-driven decisions faster.

Saves time and effort – Instead of writing lengthy reports, an interactive dashboard tells the story in seconds.

Without tools like Tableau, data science would be limited to experts who can code and run statistical models. With Tableau, insights become accessible to everyone—from data scientists to business executives.

2. Why Tableau Stands Out in Data Science

A. User-Friendly and Requires No Coding

One of the biggest advantages of Tableau is its drag-and-drop interface. Unlike Python or R, which require programming skills, Tableau allows users to create visualizations without writing a single line of code.

Even if you’re a beginner, you can:

✅ Upload data from multiple sources

✅ Create interactive dashboards in minutes

✅ Share insights with teams easily

This no-code approach makes Tableau ideal for both technical and non-technical professionals in data science.

B. Handles Large Datasets Efficiently

Data scientists often work with massive datasets—whether it’s financial transactions, customer behavior, or healthcare records. Traditional tools like Excel struggle with large volumes of data.

Tableau, on the other hand:

Can process millions of rows without slowing down

Optimizes performance using advanced data engine technology

Supports real-time data streaming for up-to-date analysis

This makes it a go-to tool for businesses that need fast, data-driven insights.

C. Connects with Multiple Data Sources

A major challenge in data science is bringing together data from different platforms. Tableau seamlessly integrates with a variety of sources, including:

Databases: MySQL, PostgreSQL, Microsoft SQL Server

Cloud platforms: AWS, Google BigQuery, Snowflake

Spreadsheets and APIs: Excel, Google Sheets, web-based data sources

This flexibility allows data scientists to combine datasets from multiple sources without needing complex SQL queries or scripts.

D. Real-Time Data Analysis

Industries like finance, healthcare, and e-commerce rely on real-time data to make quick decisions. Tableau’s live data connection allows users to:

Track stock market trends as they happen

Monitor website traffic and customer interactions in real time

Detect fraudulent transactions instantly

Instead of waiting for reports to be generated manually, Tableau delivers insights as events unfold.

E. Advanced Analytics Without Complexity

While Tableau is known for its visualizations, it also supports advanced analytics. You can:

Forecast trends based on historical data

Perform clustering and segmentation to identify patterns

Integrate with Python and R for machine learning and predictive modeling

This means data scientists can combine deep analytics with intuitive visualization, making Tableau a versatile tool.

3. How Tableau Helps Data Scientists in Real Life

Tableau has been adopted across many industries to make data science more impactful and accessible. Here are some real-life scenarios where it is applied:

A. Analytics for Health Care

Hospitals and research institutions use Tableau to:

Monitor patient recovery rates and predict outbreaks of diseases

Analyze hospital occupancy and resource allocation

Identify trends in patient demographics and treatment results

B. Finance and Banking

Banks and investment firms rely on Tableau to:

✅ Detect fraud by analyzing transaction patterns

✅ Track stock market fluctuations and make informed investment decisions

✅ Assess credit risk and loan performance

C. Marketing and Customer Insights

Companies use Tableau to:

✅ Track customer buying behavior and personalize recommendations

✅ Analyze social media engagement and campaign effectiveness

✅ Optimize ad spend by identifying high-performing channels

D. Retail and Supply Chain Management

Retailers leverage Tableau to:

✅ Forecast product demand and adjust inventory levels

✅ Identify regional sales trends and adjust marketing strategies

✅ Optimize supply chain logistics and reduce delivery delays

These applications show why Tableau is a must-have for data-driven decision-making.

4. Tableau vs. Other Data Visualization Tools

There are many visualization tools available, but Tableau consistently ranks as one of the best. Here’s why:

Tableau vs. Excel – Excel struggles with big data and lacks interactivity; Tableau handles large datasets effortlessly.

Tableau vs. Power BI – Power BI is great for Microsoft users, but Tableau offers more flexibility across different data sources.

Tableau vs. Python (Matplotlib, Seaborn) – Python libraries require coding skills, while Tableau simplifies visualization for all users.

This makes Tableau the go-to tool for both beginners and experienced professionals in data science.

5. Conclusion

Tableau has become an essential tool in data science because it simplifies data visualization, handles large datasets, and integrates seamlessly with various data sources. It enables professionals to analyze, interpret, and present data interactively, making insights accessible to everyone—from data scientists to business leaders.

If you’re looking to build a strong foundation in data science, learning Tableau is a smart career move. Many data science courses now include Tableau as a key skill, as companies increasingly demand professionals who can transform raw data into meaningful insights.

In a world where data is the driving force behind decision-making, Tableau ensures that the insights you uncover are not just accurate—but also clear, impactful, and easy to act upon.

#data science course#top data science course online#top data science institute online#artificial intelligence course#deepseek#tableau


Protect Your Laravel APIs: Common Vulnerabilities and Fixes

API Vulnerabilities in Laravel: What You Need to Know

As web applications evolve, securing APIs becomes a critical aspect of overall cybersecurity. Laravel, being one of the most popular PHP frameworks, provides many features to help developers create robust APIs. However, like any software, APIs in Laravel are susceptible to certain vulnerabilities that can leave your system open to attack.

In this blog post, we’ll explore common API vulnerabilities in Laravel and how you can address them, using practical coding examples. Additionally, we’ll introduce our free Website Security Scanner tool, which can help you assess and protect your web applications.

Common API Vulnerabilities in Laravel

Laravel APIs, like any other API, can suffer from common security vulnerabilities if not properly secured. Some of these vulnerabilities include:

>> SQL Injection: SQL injection attacks occur when an attacker manipulates a SQL query so that it runs SQL the developer never intended. If a Laravel API concatenates unsanitized user input into a query, this type of vulnerability can be exploited.

Example Vulnerability:

$user = DB::select("SELECT * FROM users WHERE username = '" . $request->input('username') . "'");

Solution: Laravel’s query builder uses PDO parameter binding, so user input is passed as data and never interpreted as SQL. Use the query builder or Eloquent ORM like this:

$user = DB::table('users')->where('username', $request->input('username'))->first();
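The same principle applies in any language. As a hedged illustration outside Laravel, the sketch below uses Python's built-in sqlite3 module with a made-up users table to show how a concatenated query can be subverted while a bound parameter cannot.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT);
    INSERT INTO users (username) VALUES ('alice'), ('bob');
""")

malicious = "nobody' OR '1'='1"  # hypothetical attacker-supplied input

# Vulnerable: the input is pasted into the SQL text, so its quote characters
# change the query's structure and the WHERE clause matches every row.
unsafe_rows = conn.execute(
    "SELECT username FROM users WHERE username = '" + malicious + "'"
).fetchall()

# Safe: the ? placeholder sends the input as data, never as SQL.
safe_rows = conn.execute(
    "SELECT username FROM users WHERE username = ?", (malicious,)
).fetchall()
```

The concatenated query returns every user, while the parameterized one returns nothing because no username literally equals the attacker's string.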

>> Cross-Site Scripting (XSS) XSS attacks happen when an attacker injects malicious scripts into web pages, which can then be executed in the browser of a user who views the page.

Example Vulnerability:

return response()->json(['message' => $request->input('message')]);

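Solution: Always sanitize user input and escape any dynamic content. Laravel’s Blade templates escape data rendered with {{ }} by default; for values returned outside a view, such as this JSON response that a client may insert into a page, escape them explicitly with the e() helper: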

return response()->json(['message' => e($request->input('message'))]);
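The same idea can be sketched outside Laravel. The Python snippet below uses the standard html.escape function, which plays a role similar to Laravel's e() helper here; the safe_message_payload name is hypothetical and only for illustration.

```python
import html
import json


def safe_message_payload(message):
    # Escape HTML metacharacters before the value leaves the server
    return json.dumps({"message": html.escape(message)})


output = safe_message_payload("<script>alert(1)</script>")
print(output)
```

The script tag arrives at the client as inert text (&lt;script&gt;...) instead of markup the browser could execute.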

>> Improper Authentication and Authorization Without proper authentication, unauthorized users may gain access to sensitive data. Similarly, improper authorization can allow unauthorized users to perform actions they shouldn't be able to.

Example Vulnerability:

Route::post('update-profile', 'UserController@updateProfile');

Solution: Always use Laravel’s built-in authentication middleware to protect sensitive routes:

Route::middleware('auth:api')->post('update-profile', 'UserController@updateProfile');

>> Insecure API Endpoints Exposing too many endpoints or sensitive data can create a security risk. It’s important to limit access to API routes and use proper HTTP methods for each action.

Example Vulnerability:

Route::get('user-details', 'UserController@getUserDetails');

Solution: Restrict sensitive routes to authenticated users and use proper HTTP methods like GET, POST, PUT, and DELETE:

Route::middleware('auth:api')->get('user-details', 'UserController@getUserDetails');

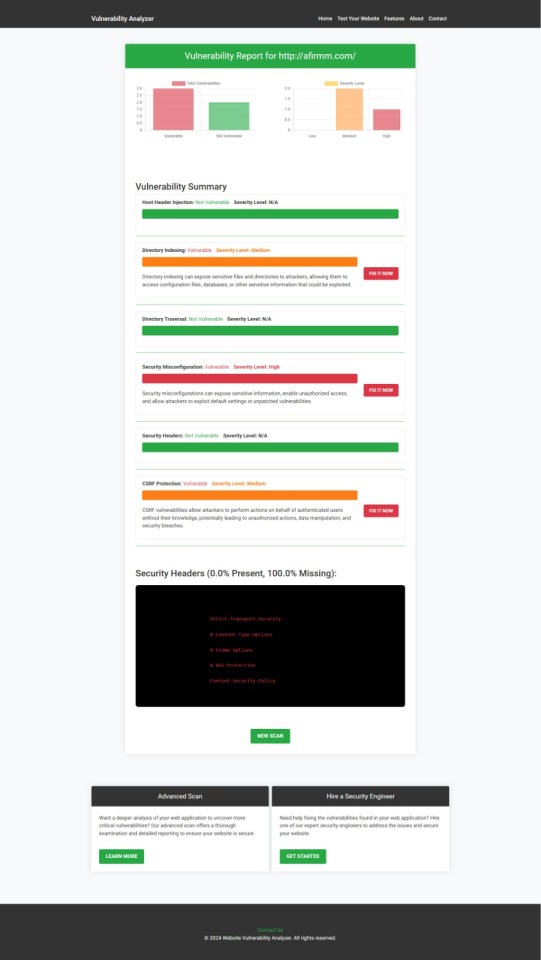

How to Use Our Free Website Security Checker Tool

If you're unsure about the security posture of your Laravel API or any other web application, we offer a free Website Security Checker tool. This tool allows you to perform an automatic security scan on your website to detect vulnerabilities, including API security flaws.

Step 1: Visit our free Website Security Checker at https://free.pentesttesting.com.

Step 2: Enter your website URL and click "Start Test".

Step 3: Review the comprehensive vulnerability assessment report to identify areas that need attention.

Screenshot of the free tools webpage where you can access security assessment tools.

Example Report: Vulnerability Assessment

Once the scan is completed, you'll receive a detailed report that highlights any vulnerabilities, such as SQL injection risks, XSS vulnerabilities, and issues with authentication. This will help you take immediate action to secure your API endpoints.

An example of a vulnerability assessment report generated with our free tool provides insights into possible vulnerabilities.

Conclusion: Strengthen Your API Security Today

API vulnerabilities in Laravel are common, but with the right precautions and coding practices, you can protect your web application. Make sure to always sanitize user input, implement strong authentication mechanisms, and use proper route protection. Additionally, use our tool to check your website for vulnerabilities so your Laravel APIs remain secure.

For more information on securing your Laravel applications, try our Website Security Checker.

#cyber security#cybersecurity#data security#pentesting#security#the security breach show#laravel#php#api

Text

Connect or integrate Odoo ERP database with Microsoft Excel

Techfinna's Odoo Excel Connector is a powerful tool that integrates Odoo data with Microsoft Excel. It enables users to pull real-time data, perform advanced analysis, and create dynamic reports directly in Excel. With its user-friendly interface and robust functionality, it simplifies complex workflows, saving time and enhancing productivity.

#odoo #odooerp #odoosoftware #odoomodule #crm #accounting #salesforce #connector #integration #odoo18 #odoo17 #erpsoftware #odoodevelopers #odoocustomization #erpimplementation #lookerstudio

#odoo#odoo company#odoo erp#odoo services#odoo software#odoo web development#good omens#odoo crm#odoo development company#odoo18#microsoft#excel#ms excel#odoointegration#analytics#odoopartner#odooimplementation#innovation#business#customersatisfaction#cybersecurity

Text

Hmm. Not sure if my perfectionism is acting up, but... is there a reasonably isolated way to test SQL?

In particular, I want a test (or set of tests) that checks the following conditions:

The generated SQL is syntactically valid, for a given range of possible parameters.

The generated SQL agrees with the database schema, in that it doesn't reference columns or tables which don't exist. (Reduces typos and helps with deprecating parts of the schema)

The generated SQL performs joins and filtering in the way that I want. I'm fine if this is more a list of reasonable cases than a check of all possible cases.

Some kind of performance test? Especially if it's joining large tables

I don't care much about the shape of the output data. That's well-handled by parsing and type checking!

I don't think I want to perform queries against a live database, but I'm very flexible on this point.
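One possible starting point for the first two conditions, as a minimal Python sketch: load the schema into an in-memory SQLite database and ask SQLite to compile each generated statement with EXPLAIN QUERY PLAN, which parses the statement and resolves table and column names without reading any real data. The schema, the table names, and the check_sql helper are all hypothetical, and this assumes the generated SQL stays within what SQLite's dialect accepts.

```python
import sqlite3

# Hypothetical schema; in practice this would be loaded from migration files
SCHEMA = """
CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE posts (
    id INTEGER PRIMARY KEY,
    author_id INTEGER NOT NULL REFERENCES authors(id),
    title TEXT NOT NULL
);
"""


def make_schema_db():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def check_sql(conn, sql, params=()):
    """Return None if SQLite can compile the statement, else the error text."""
    try:
        conn.execute("EXPLAIN QUERY PLAN " + sql, params)
    except sqlite3.Error as exc:
        return str(exc)
    return None


conn = make_schema_db()
good = (
    "SELECT p.title, a.name FROM posts p "
    "JOIN authors a ON a.id = p.author_id WHERE a.name = ?"
)
typo_column = "SELECT p.body FROM posts p"
bad_syntax = "SELEC title FROM posts"

print(check_sql(conn, good, ("anyone",)))
print(check_sql(conn, typo_column))
print(check_sql(conn, bad_syntax))
```

The rows returned by EXPLAIN QUERY PLAN also describe how each table is scanned or searched, which might serve as a rough check on joins and index use, though the exact wording of those rows varies between SQLite versions.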

Text

The Impact of Modern Fast Storage on Clustered Columnstore Index Fragmentation in SQL Server

Introduction Recent advancements in storage technology have greatly enhanced database performance. This raises an important question: Does the fragmentation of clustered columnstore indexes have the same minimal impact as the fragmentation of non-clustered indexes in SQL Server, especially with today’s high-speed storage options? We will delve deeper into this subject to understand it…

Text

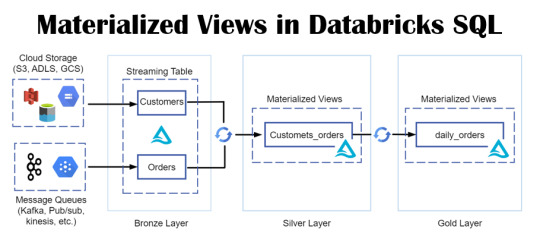

Empower Data Analysis with Materialized Views in Databricks SQL

Envision a realm where your data is always ready for querying, with intricate queries stored in a format primed for swift retrieval and analysis. Picture a world where time is no longer a constraint, where data handling is both rapid and efficient.

#Azure#Azure SQL Database#data#Database#Database Management#Databricks#Databricks CLI#Databricks Delta Live Table#Databricks SQL#Databricks Unity catalog#Delta Live#Materialized Views#Microsoft#microsoft azure#Optimization#Performance Optimization#queries#SQL#SQL database#Streaming tables#tables#Unity Catalog#views

Text

How to test an app for SQL injection

During code review

Check for any database queries that are not made via prepared statements.

If dynamic statements are being built, check that the data is sanitized before it is used as part of the statement.

Auditors should always look for uses of sp_executesql, sp_execute, EXECUTE or EXEC within SQL Server stored procedures. Similar audit guidelines apply to the equivalent functions of other vendors.
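As a rough aid for this kind of review, the Python sketch below flags source lines that contain an SQL keyword together with string concatenation or an f-string. It is a heuristic under loose assumptions (the two regular expressions and the flag_dynamic_sql name are made up for illustration), so it will miss cases and raise false positives; it only narrows down where a reviewer should look first.

```python
import re

# Heuristic patterns, not a parser: an SQL verb plus signs of string building
SQL_KEYWORD = re.compile(r"\b(SELECT|INSERT|UPDATE|DELETE)\b", re.IGNORECASE)
# A quote followed by + or . and a name (concatenation), or an f-string prefix
DYNAMIC_STRING = re.compile(r"""["']\s*[+.]\s*[$\w]|\bf["']""")


def flag_dynamic_sql(source):
    """Return 1-based line numbers that look like dynamically built SQL."""
    flagged = []
    for number, line in enumerate(source.splitlines(), start=1):
        if SQL_KEYWORD.search(line) and DYNAMIC_STRING.search(line):
            flagged.append(number)
    return flagged


sample = '''query = "SELECT * FROM users WHERE name = '" + name + "'"
cursor.execute("SELECT * FROM users WHERE name = ?", (name,))
cursor.execute(f"DELETE FROM users WHERE id = {user_id}")
'''
print(flag_dynamic_sql(sample))  # prints [1, 3]
```

Line 2 uses a placeholder and is left alone; lines 1 and 3 build the statement from variables and deserve a closer look.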

Automated Exploitation

Most of the situations and techniques for testing an app for SQLi can be performed in an automated way using tools (e.g. an automated audit using SQLMap).

Equally, static code analysis data-flow rules can detect whether unsanitised user-controlled input can change the SQL query.

Stored Procedure Injection

When using dynamic SQL within a stored procedure, the application must properly sanitise the user input to eliminate the risk of code injection. If not sanitised, the user could enter malicious SQL that will be executed within the stored procedure.

Time delay Exploitation technique

The time delay exploitation technique is very useful when the tester finds a Blind SQL Injection situation, in which nothing is known about the outcome of an operation. The technique consists of sending an injected query that makes the server wait when a condition is true, and then monitoring the time the server takes to respond. If there is a delay, the tester can assume the result of the conditional query is true. This exploitation technique can differ from DBMS to DBMS.

http://www.example.com/product.php?id=10 AND IF(version() like '5%', sleep(10), 'false'))--

In this example the tester is checking whether the MySQL version is 5.x or not, making the server delay the answer by 10 seconds when it is. The tester can increase the delay time and monitor the responses. The tester also doesn't need to wait for the full response: sometimes they can set a very high value (e.g. 100) and cancel the request after a few seconds.
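The timing comparison itself can be sketched in a few lines of Python. This is only an illustration of the measurement logic: send_baseline and send_probe are hypothetical callables the tester would supply for an authorised test target, and the 80% tolerance is an arbitrary assumption to absorb network jitter.

```python
import statistics
import time


def timed(call):
    # Wall-clock duration of a single call, in seconds
    start = time.perf_counter()
    call()
    return time.perf_counter() - start


def delay_observed(send_baseline, send_probe, injected_delay, runs=3):
    """True if the probe took roughly injected_delay longer than normal."""
    baseline = statistics.median(timed(send_baseline) for _ in range(runs))
    probe = timed(send_probe)
    return probe - baseline >= injected_delay * 0.8


# Stand-ins for real requests: the "probe" simulates a server that sleeps
fast = lambda: None
slow = lambda: time.sleep(0.3)
print(delay_observed(fast, slow, injected_delay=0.3))
print(delay_observed(fast, fast, injected_delay=0.3))
```

Taking the median of several baseline requests keeps one slow response from hiding or faking the delay.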

Out-of-band Exploitation technique

This technique is very useful when the tester finds a Blind SQL Injection situation, in which nothing is known about the outcome of an operation. The technique consists of using DBMS functions to make an out-of-band connection and deliver the results of the injected query as part of the request to the tester's server. As with the error-based techniques, each DBMS has its own functions, so consult the guidance for the specific DBMS.

Text

Key Programming Languages Every Ethical Hacker Should Know

In the realm of cybersecurity, ethical hacking stands as a critical line of defense against cyber threats. Ethical hackers use their skills to identify vulnerabilities and prevent malicious attacks. To be effective in this role, a strong foundation in programming is essential. Certain programming languages are particularly valuable for ethical hackers, enabling them to develop tools, scripts, and exploits. This blog post explores the most important programming languages for ethical hackers and how these skills are integrated into various training programs.

Python: The Versatile Tool

Python is often considered the go-to language for ethical hackers due to its versatility and ease of use. It offers a wide range of libraries and frameworks that simplify tasks like scripting, automation, and data analysis. Python’s readability and broad community support make it a popular choice for developing custom security tools and performing various hacking tasks. Many top Ethical Hacking Course institutes incorporate Python into their curriculum because it allows students to quickly grasp the basics and apply their knowledge to real-world scenarios. In an Ethical Hacking Course, learning Python can significantly enhance your ability to automate tasks and write scripts for penetration testing. Its extensive libraries, such as Scapy for network analysis and Beautiful Soup for web scraping, can be crucial for ethical hacking projects.

JavaScript: The Web Scripting Language

JavaScript is indispensable for ethical hackers who focus on web security. It is the primary language used in web development and can be leveraged to understand and exploit vulnerabilities in web applications. By mastering JavaScript, ethical hackers can identify issues like Cross-Site Scripting (XSS) and develop techniques to mitigate such risks. An Ethical Hacking Course often covers JavaScript to help students comprehend how web applications work and how attackers can exploit JavaScript-based vulnerabilities. Understanding this language enables ethical hackers to perform more effective security assessments on websites and web applications.

C and C++: Low-Level Mastery

C and C++ are essential for ethical hackers who need to delve into low-level programming and system vulnerabilities. These languages are used to develop software and operating systems, making them crucial for understanding how exploits work at a fundamental level. Mastery of C and C++ can help ethical hackers identify and exploit buffer overflows, memory corruption, and other critical vulnerabilities. Courses at leading Ethical Hacking Course institutes frequently include C and C++ programming to provide a deep understanding of how software vulnerabilities can be exploited. Knowledge of these languages is often a prerequisite for advanced penetration testing and vulnerability analysis.

Bash Scripting: The Command-Line Interface

Bash scripting is a powerful tool for automating tasks on Unix-based systems. It allows ethical hackers to write scripts that perform complex sequences of commands, making it easier to conduct security audits and manage multiple tasks efficiently. Bash scripting is particularly useful for creating custom tools and automating repetitive tasks during penetration testing. An Ethical Hacking Course that offers job assistance often emphasizes the importance of Bash scripting, as it is a fundamental skill for many security roles. Being proficient in Bash can streamline workflows and improve efficiency when working with Linux-based systems and tools.

SQL: Database Security Insights

Structured Query Language (SQL) is essential for ethical hackers who need to assess and secure databases. SQL injection is a common attack vector used to exploit vulnerabilities in web applications that interact with databases. By understanding SQL, ethical hackers can identify and prevent SQL injection attacks and assess the security of database systems. Incorporating SQL into an Ethical Hacking Course can provide students with a comprehensive understanding of database security and vulnerability management. This knowledge is crucial for performing thorough security assessments and ensuring robust protection against database-related attacks.

Understanding Course Content and Fees

When choosing an Ethical Hacking Course, it’s important to consider how well the program covers essential programming languages. Courses offered by top Ethical Hacking Course institutes should provide practical, hands-on training in Python, JavaScript, C/C++, Bash scripting, and SQL. Additionally, the course fee can vary depending on the institute and the comprehensiveness of the program. Investing in a high-quality course that covers these programming languages and offers practical experience can significantly enhance your skills and employability in the cybersecurity field.

Certification and Career Advancement

Obtaining an Ethical Hacking Course certification can validate your expertise and improve your career prospects. Certifications from reputable institutes often include components related to the programming languages discussed above. For instance, certifications may test your ability to write scripts in Python or perform SQL injection attacks. By securing an Ethical Hacking Course certification, you demonstrate your proficiency in essential programming languages and your readiness to tackle complex security challenges.

Mastering the right programming languages is crucial for anyone pursuing a career in ethical hacking. Python, JavaScript, C/C++, Bash scripting, and SQL each play a unique role in the ethical hacking landscape, providing the tools and knowledge needed to identify and address security vulnerabilities. By choosing a top Ethical Hacking Course institute that covers these languages and investing in a course that offers practical training and job assistance, you can position yourself for success in this dynamic field. With the right skills and certification, you’ll be well-equipped to tackle the evolving challenges of cybersecurity and contribute to protecting critical digital assets.

Text

The Skills I Acquired on My Path to Becoming a Data Scientist

Data science has emerged as one of the most sought-after fields in recent years, and my journey into this exciting discipline has been nothing short of transformative. As someone with a deep curiosity for extracting insights from data, I was naturally drawn to the world of data science. In this blog post, I will share the skills I acquired on my path to becoming a data scientist, highlighting the importance of a diverse skill set in this field.

The Foundation — Mathematics and Statistics

At the core of data science lies a strong foundation in mathematics and statistics. Concepts such as probability, linear algebra, and statistical inference form the building blocks of data analysis and modeling. Understanding these principles is crucial for making informed decisions and drawing meaningful conclusions from data. Throughout my learning journey, I immersed myself in these mathematical concepts, applying them to real-world problems and honing my analytical skills.

Programming Proficiency

Proficiency in programming languages like Python or R is indispensable for a data scientist. These languages provide the tools and frameworks necessary for data manipulation, analysis, and modeling. I embarked on a journey to learn these languages, starting with the basics and gradually advancing to more complex concepts. Writing efficient and elegant code became second nature to me, enabling me to tackle large datasets and build sophisticated models.

Data Handling and Preprocessing

Working with real-world data is often messy and requires careful handling and preprocessing. This involves techniques such as data cleaning, transformation, and feature engineering. I gained valuable experience in navigating the intricacies of data preprocessing, learning how to deal with missing values, outliers, and inconsistent data formats. These skills allowed me to extract valuable insights from raw data and lay the groundwork for subsequent analysis.

Data Visualization and Communication

Data visualization plays a pivotal role in conveying insights to stakeholders and decision-makers. I realized the power of effective visualizations in telling compelling stories and making complex information accessible. I explored various tools and libraries, such as Matplotlib and Tableau, to create visually appealing and informative visualizations. Sharing these visualizations with others enhanced my ability to communicate data-driven insights effectively.

Machine Learning and Predictive Modeling

Machine learning is a cornerstone of data science, enabling us to build predictive models and make data-driven predictions. I delved into the realm of supervised and unsupervised learning, exploring algorithms such as linear regression, decision trees, and clustering techniques. Through hands-on projects, I gained practical experience in building models, fine-tuning their parameters, and evaluating their performance.

Database Management and SQL

Data science often involves working with large datasets stored in databases. Understanding database management and SQL (Structured Query Language) is essential for extracting valuable information from these repositories. I embarked on a journey to learn SQL, mastering the art of querying databases, joining tables, and aggregating data. These skills allowed me to harness the power of databases and efficiently retrieve the data required for analysis.
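To make querying, joining, and aggregating concrete, here is a small sketch using Python's built-in sqlite3 module. The customers and orders tables and their rows are invented purely for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical tables and rows, just enough to show a join and an aggregate
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 30.0), (3, 2, 15.0);
""")

# Join each order to its customer, then total the orders per customer
rows = conn.execute("""
    SELECT c.name, COUNT(o.id) AS order_count, SUM(o.amount) AS total
    FROM customers c
    JOIN orders o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

print(rows)  # prints [('Ada', 2, 50.0), ('Grace', 1, 15.0)]
```

The JOIN pairs rows across the two tables, and GROUP BY collapses them into one summary row per customer.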

Domain Knowledge and Specialization

While technical skills are crucial, domain knowledge adds a unique dimension to data science projects. By specializing in specific industries or domains, data scientists can better understand the context and nuances of the problems they are solving. I explored various domains and acquired specialized knowledge, whether it be healthcare, finance, or marketing. This expertise complemented my technical skills, enabling me to provide insights that were not only data-driven but also tailored to the specific industry.

Soft Skills — Communication and Problem-Solving

In addition to technical skills, soft skills play a vital role in the success of a data scientist. Effective communication allows us to articulate complex ideas and findings to non-technical stakeholders, bridging the gap between data science and business. Problem-solving skills help us navigate challenges and find innovative solutions in a rapidly evolving field. Throughout my journey, I honed these skills, collaborating with teams, presenting findings, and adapting my approach to different audiences.

Continuous Learning and Adaptation

Data science is a field that is constantly evolving, with new tools, technologies, and trends emerging regularly. To stay at the forefront of this ever-changing landscape, continuous learning is essential. I dedicated myself to staying updated by following industry blogs, attending conferences, and participating in courses. This commitment to lifelong learning allowed me to adapt to new challenges, acquire new skills, and remain competitive in the field.

In conclusion, the journey to becoming a data scientist is an exciting and dynamic one, requiring a diverse set of skills. From mathematics and programming to data handling and communication, each skill plays a crucial role in unlocking the potential of data. Aspiring data scientists should embrace this multidimensional nature of the field and embark on their own learning journey. By acquiring these skills and continuously adapting to new developments, they can make a meaningful impact in the world of data science. If you want to learn more about data science, I recommend contacting ACTE Technologies, which offers Data Science courses and job placement opportunities, both online and offline, taught by experienced instructors. Take things step by step and consider enrolling in a course if you’re interested.

#data science#data visualization#education#information#technology#machine learning#database#sql#predictive analytics#r programming#python#big data#statistics
