#What is AI-Powered Development


Text

The Future of Business Growth: AI-Powered Development Strat

AI-powered development is revolutionizing business growth, efficiency, and innovation. In 2024, businesses that harness AI's potential will achieve unprecedented growth, outpacing their competitors. Incorporating AI into business operations enhances productivity, accuracy, and customer experience, driving revenue growth. A McKinsey report estimates that AI could deliver an additional $13 trillion to the global economy by 2030. With the global AI market expected to grow at a compound annual growth rate (CAGR) of 37.3% from 2023 to 2030, AI's role in business is becoming increasingly crucial.
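For a sense of scale, a 37.3% compound annual growth rate applied over the seven years from 2023 to 2030 multiplies the starting market size by (1 + 0.373)^7. A minimal sketch of that arithmetic (the function name is just for illustration):

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` (0.373 means 37.3%) for `years` years."""
    return (1.0 + cagr) ** years

# Seven compounding periods: 2023 -> 2030
multiple = growth_multiple(0.373, 2030 - 2023)
print(f"{multiple:.1f}x")  # prints 9.2x
```

In other words, the projection implies a market roughly nine times larger in 2030 than in 2023.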

AI-powered development uses advanced technologies like machine learning, natural language processing, and computer vision to perform tasks that typically require human intelligence. AI is transforming industries from finance to healthcare, providing solutions like automated trading systems and predictive diagnostics. AI enhances efficiency by automating repetitive tasks, optimizing operations, and enabling employees to focus on strategic activities. AI-driven chatbots and virtual assistants offer real-time support and personalized interactions, improving customer experience. AI's predictive analytics capabilities provide data-driven insights, helping businesses make informed decisions and stay ahead of market trends.

For businesses to fully leverage AI's benefits, a strategic approach to AI implementation is essential. This includes evaluating goals, identifying data sources, selecting appropriate AI tools, and investing in training and education. Addressing challenges like data privacy, system integration, and ethical considerations is critical for successful AI adoption. Partnering with Intelisync can facilitate this process, providing comprehensive AI services that ensure successful AI integration and maximize business impact. Intelisync's expertise in machine learning, data analytics, and AI-driven automation helps businesses unlock their full potential. Contact Intelisync today to start your AI journey and transform your...

#AI Development#AI-Powered Development for Businesses#AI-Powered Development: Boosting Business Growth in 2024#Blockchain Development Solution: Intelisync Boost Decision-Making#Boosting Business Growth in 2024#Challenges and Considerations in AI Adoption#Choose the Right AI Tools and Technologies#Evaluate your Goals and Needs#How can AI drive innovation in my business?#How can AI increase efficiency in my business?#How can Intelisync help with AI implementation?#Identify the Right Data Sources#Implementing AI in Your Business Improved Customer Experience#Increased Efficiency Innovation and Competitive Advantage#Intelisync AI Consulting#intelisync ai service Invest in Training and Education#Top 5 Benefits of AI#Top 5 Benefits of AI-Powered Development for Businesses#Understanding AI-Powered Development#Vendor Selection#What is AI Development#What is AI-Powered Development#What is the Future of AI-Powered Development in Business?#intelisync ai development service.

0 notes

Note

one 100 word email written with ai costs roughly one bottle of water to produce. the discussion of whether or not using ai for work is lazy becomes a non issue when you understand there is no ethical way to use it regardless of your intentions or your personal capabilities for the task at hand

with all due respect, this isnt true. *training* generative ai takes a ton of power, but actually using it takes about as much energy as a google search (with image generation being slightly more expensive). we can talk about resource costs when averaged over the amount of work that any model does, but its unhelpful to put a smokescreen over that fact. when you approach it like an issue of scale (i.e. "training ai is bad for the environment, we should think better about where we deploy it/boycott it/otherwise organize abt this) it has power as a movement. but otherwise it becomes a personal choice, moralizing "you personally are harming the environment by using chatgpt" which is not really effective messaging. and that in turn drives the sort of "you are stupid/evil for using ai" rhetoric that i hate. my point is not whether or not using ai is immoral (i mean, i dont think it is, but beyond that). its that the most common arguments against it from ostensible progressives end up just being reactionary

i like this quote a little more- its perfectly fine to have reservations about the current state of gen ai, but its not just going to go away.

#i also generally agree with the genie in the bottle metaphor. like ai is here#ai HAS been here but now it is an llm gen ai and more accessible to the average user#we should respond to that rather than trying to. what. stop development of generative ai? forever?#im also not sure that the ai industry is particularly worse for the environment than other resource intense industries#like the paper industry makes up about 2% of the industrial sector's power consumption#which is about 40% of global totals (making it about 1% of world total energy consumption)#current ai energy consumption estimates itll be at .5% of total energy consumption by 2027#every data center in the world meaning also everything that the internet runs on accounts for about 2% of total energy consumption#again you can say ai is an unnecessary use of resources but you cannot say it is uniquely more destructive
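The comparison in the tags above is a chain of shares: a sub-sector's share of the world total is its share of its sector times that sector's share of the world. A minimal sketch of the arithmetic, assuming (as the tags do) that all the quoted percentages are fractions of the same world total; the figures are copied from the tags, not independently checked:

```python
# Figures as quoted in the tags; treated as fractions of one world total (an assumption).
PAPER_SHARE_OF_INDUSTRY = 0.02   # paper is ~2% of industrial-sector consumption
INDUSTRY_SHARE_OF_WORLD = 0.40   # industry is ~40% of the world total
AI_SHARE_2027 = 0.005            # projected AI share by 2027
DATA_CENTER_SHARE = 0.02         # all data centers combined

def share_of_world(part_of_sector: float, sector_of_world: float) -> float:
    """A sub-sector's share of the world total is the product of the two shares."""
    return part_of_sector * sector_of_world

paper_share = share_of_world(PAPER_SHARE_OF_INDUSTRY, INDUSTRY_SHARE_OF_WORLD)

for label, share in [
    ("paper industry", paper_share),
    ("AI (2027 projection)", AI_SHARE_2027),
    ("all data centers", DATA_CENTER_SHARE),
]:
    print(f"{label}: {share:.1%} of world total")
```

The paper figure works out to 0.8%, which the tags round to "about 1%"; it still sits above the 0.5% projection for AI.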

1K notes


Text

okay i just marathoned the entirety of ATLA live action & i might do an actual review of it explaining my thoughts more in depth, but the TLDR version basically boils down to this:

if you want to watch Avatar: The Last Airbender, just go watch the 2005 cartoon

#i was trying to keep an open mind & all that cuz of OPLA (my beloved) but. holy shit it was actually worse than i expected :/#like what were they thinking. did they use AI to write this or are the writers just like. really shitty#notes: they linger too much on random bullshit & refuse to move character development along#they tell when they should be showing & when they DO show it's for stuff that benefited from brief environmental storytelling in the OG#the plot drags so hard it was basically stagnant#there were some fun things but like. those things could've been funner if they'd been given the time other useless stuff was taking up#they changed so many minor details that really don't matter in order to make them more important#but this failed spectacularly because now there's just. stupid bullshit clogging up the plot??#instead of having 10 minute monologues 3 times an episode about plot irrelevant things#they should have taken a page out of the original's book & kept minor details to a minimum & focused on ACTUAL PLOT#SO MUCH CGI. LIKE I KNOW THEY NEED IT BUT COME ON. EVEN THE CHARACTERS?????? WHO ARE JUST STANDING THERE????????#they were given 8 hours & almost all of it was Aang angsting (lol) over being the avatar & not practicing actual bending#& then they ended the plot too early so they had to fill in the last like 20 minutes with something else#so they made up random lore that literally makes no sense. & overexplained all of it to the point i was blanking out from boredom#i think this is why i didn't enjoy Korra. they over explain the spirit world stuff & avatar powers & bending#that plus i just don't vibe with the aesthetic#being a writer is a curse because when i dislike something it's because i know exactly what went wrong & why#it's always with the analyzing & the judging & the internal note taking#even when i really try i can't just enjoy shit for fun

15 notes


Text

ed zitron, a tech beat reporter, wrote an article about a recent paper that came out from goldman sachs calling AI, in nicer terms, a grift. it is a really interesting article; hearing criticism from people who are not ignorant of the tech and have no reason to mince words is refreshing. it also brings up points and asks the right questions:

if AI is going to be a trillion dollar investment, what trillion dollar problem is it solving?

what does it mean when people say that AI will "get better"? what does that look like and how would it even be achieved? the article makes a point to debunk talking points about how all tech is misunderstood at first by pointing out that the tech it gets compared to the most, the internet and smartphones, were both created over the course of decades with roadmaps and clear goals. AI does not have this.

the american power grid straight up cannot handle the load required to run AI because it has not been meaningfully developed in decades. how are they going to overcome this hurdle (they aren't)?

people who are losing their jobs to this tech aren't being "replaced". they're just getting a taste of how little their managers care about their craft and how little they think of their consumer base. ai is not capable of replacing humans and there's no indication they ever will because...

all of these models use the same training data so now they're all giving the same wrong answers in the same voice. without massive and i mean EXPONENTIALLY MASSIVE troves of data to work with, they are pretty much at a standstill for any innovation they're imagining in their heads

76K notes


Text

At the California Institute of the Arts, it all started with a videoconference between the registrar’s office and a nonprofit.

One of the nonprofit’s representatives had enabled an AI note-taking tool from Read AI. At the end of the meeting, it emailed a summary to all attendees, said Allan Chen, the institute’s chief technology officer. They could have a copy of the notes, if they wanted — they just needed to create their own account.

Next thing Chen knew, Read AI’s bot had popped up in about a dozen of his meetings over a one-week span. It was in one-on-one check-ins. Project meetings. “Everything.”

The spread “was very aggressive,” recalled Chen, who also serves as vice president for institute technology. And it “took us by surprise.”

The scenario underscores a growing challenge for colleges: Tech adoption and experimentation among students, faculty, and staff, especially as it pertains to AI, are outpacing institutions’ governance of these technologies and may even violate their data-privacy and security policies.

That has been the case with note-taking tools from companies including Read AI, Otter.ai, and Fireflies.ai. They can integrate with platforms like Zoom, Google Meet, and Microsoft Teams to provide live transcriptions, meeting summaries, audio and video recordings, and other services.

Higher-ed interest in these products isn’t surprising. For those bogged down with virtual rendezvouses, a tool that can ingest long, winding conversations and spit out key takeaways and action items is alluring. These services can also aid people with disabilities, including those who are deaf.

But the tools can quickly propagate unchecked across a university. They can auto-join any virtual meetings on a user’s calendar, even if that person is not in attendance. And that’s a concern, administrators say, if it means third-party products that an institution hasn’t reviewed may be capturing and analyzing personal information, proprietary material, or confidential communications.

“What keeps me up at night is the ability for individual users to do things that are very powerful, but they don’t realize what they’re doing,” Chen said. “You may not realize you’re opening a can of worms.”

The Chronicle documented both individual and universitywide instances of this trend. At Tidewater Community College, in Virginia, Heather Brown, an instructional designer, unwittingly gave Otter.ai’s tool access to her calendar, and it joined a Faculty Senate meeting she didn’t end up attending. “One of our [associate vice presidents] reached out to inform me,” she wrote in a message. “I was mortified!”

24K notes


Text

Generative AI is revolutionizing the digital world by enabling machines to create human-like content, including text, images, music, and even code. Unlike traditional AI, which follows predefined rules, Generative AI uses deep learning models like GPT and DALL·E to generate new and unique outputs based on patterns in existing data.

This technology is transforming industries, from automating content creation and enhancing customer support to generating realistic artwork and simulations. Businesses leverage it for personalized marketing, while creatives use it for inspiration and design.

One key feature of Generative AI is its ability to produce high-quality, original content, mimicking human creativity. However, ethical concerns such as misinformation and bias require careful management. As AI advances, its role in shaping the future of digital content creation continues to grow, offering endless possibilities while demanding responsible use.

#digitalpreeyam#generative ai#what are the key features and benefits of using sap cloud connector?#what are the key features and benefits of using ihp in web development?#"unlock the power of ai#what are the key features and benefits of using labelme for image annotation?#fundamentals of generative ai#generative ai explained#benefits of micro llms#microsoft for startups unlocking the power of openai for your startup | odbrk53#unlocking research potential with ai#mastering generative ai

0 notes


Text

My most recent mildly boomer ass take: technology that consumers have specifically purchased in the past because they have the option to truly customize their experience SHOULD NOT remove that option ever, even to branch out into the untapped market of the consumers that need their hand held, for lack of a better term.

#samsung removing multiple options to adjust and change the files in the phones without using aftermarket developer tools#windows assuming that youll want the latest and greatest and adjusting your settings to Their liking#the unnecessary introduction of AI into EVERYTHING#you buy a new phone your lock button opens the AI and not the power menu#not giving error messages or any kind of explanation on why something cant logically be done with the phone#but Hey! you can use our nifty feature that doesn't actually have the function of what you were interested in doing!#get bent

1 note


Text

Generative AI for Startups: 5 Essential Ways to Boost Your Business

The future of business growth lies in the ability to innovate rapidly, deliver personalized customer experiences, and operate efficiently. Generative AI is at the forefront of this transformation, offering startups unparalleled opportunities for growth in 2024.

Generative AI is a game-changer for startups, significantly accelerating product development by quickly generating prototypes and innovative ideas. This enables startups to innovate faster, stay ahead of the competition, and bring new products to market more efficiently. The technology also allows for a high level of customization, helping startups create highly personalized products and solutions that meet specific customer needs. This enhances customer satisfaction and loyalty, giving startups a competitive edge in their respective industries.

By automating repetitive tasks and optimizing workflows, Generative AI improves operational efficiency, saving time and resources while minimizing human errors. This allows startups to focus on strategic initiatives that drive growth and profitability. Additionally, Generative AI’s ability to analyze large datasets provides startups with valuable insights for data-driven decision-making, ensuring that their actions are informed and impactful. This data-driven approach enhances marketing strategies, making them more effective and personalized.

Intelisync offers comprehensive AI/ML services that support startups in leveraging Generative AI for growth and innovation. With Intelisync’s expertise, startups can enhance product development, improve operational efficiency, and develop effective marketing strategies. Transform your business with the power of Generative AI. Contact Intelisync today and unlock your...

#5 Powerful Ways Generative AI Boosts Your Startup#advanced AI tools support startups#Driving Innovation and Growth#Enhancing Customer Experience#Forecasting Data Analysis and Decision-Making#Generative AI#Generative AI improves operational efficiency#How can a startup get started with Generative AI?#Is Generative AI suitable for all types of startups?#marketing strategies for startups#Streamlining Operations#Strengthen Product Development#Transform your business with AI-driven innovation#What is Generative AI#Customized AI Solutions#AI Development Services#Custom Generative AI Model Development.

0 notes

Text

(Read on our blog)

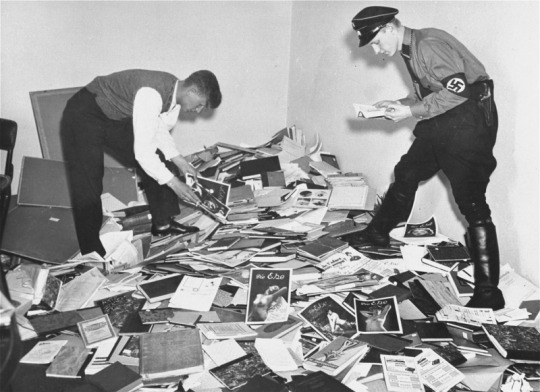

Beginning in 1933, the Nazis burned books to erase the ideas they feared—works of literature, politics, philosophy, criticism; works by Jewish and leftist authors, and research from the Institute for Sexual Science, which documented and affirmed queer and trans identities.

(Nazis collect "anti-German" books to be destroyed at a Berlin book-burning on May 10, 1933. Source)

Stories tell truths.

These weren’t just books; they were lifelines.

Writing by, for, and about marginalized people isn’t just about representation, but survival. Writing has always been an incredibly powerful tool—perhaps the most resilient form of resistance, as fascism seeks to disconnect people from knowledge, empathy, history, and finally each other. Empathy is one of the most valuable resources we have, and in the darkest times writers armed with nothing but words have exposed injustice, changed culture, and kept their communities connected.

(A Nazi student and a member of the SA raid the Institute for Sexual Science's library in Berlin, May 6, 1933. Source)

Less than two weeks after the US presidential inauguration, the nightmare of Project 2025 is starting to unfold. What these proposals will mean for creative freedom and freedom of expression is uncertain, but the intent is clear. A chilling effect on subjects that writers engage with every day—queer narratives, racial justice, and critiques of power—is already manifest. The places where these works are published and shared may soon face increased pressure, censorship, and legal jeopardy.

And with speed-run fascism comes a rising tide of misinformation and hostility. The tech giants that facilitate writing, sharing, publishing, and communication (Google, Microsoft, Amazon, the-hellscape-formerly-known-as-Twitter, Facebook, TikTok) have folded like paper in a light breeze. OpenAI, embroiled in lawsuits for training its models on stolen works, is now positioned as the AI of choice for the administration, bolstered by a $500 billion investment. And privacy-focused companies are showing a newfound willingness to align with a polarizing administration: chilling news for writers who rely on digital privacy to protect their work, their sources, and even their personal safety.

Where does that leave writers?

Writing communities have always been a creative refuge, but they’re more than that now—they are a means of continuity. The information landscape is shifting rapidly, so staying informed on legal and political developments will be essential for protecting creative freedom and pushing back against censorship wherever possible. Direct your energy to the communities that need it, stay connected, check in on each other—and keep backup spaces in case platforms become unsafe.

We can’t stress this enough—support tools and platforms that prioritize creative freedom. The systems we rely on are being rewritten in real time, and the future of writing spaces depends on what we build now. We at Ellipsus will continue working to provide space for our community—one that protects and facilitates creative expression, not undermines it.

Above all—keep writing.

Keep imagining, keep documenting, keep sharing—keep connecting. Suppression thrives on silence, but words have survived every attempt at erasure.

- The Ellipsus team

#writeblr#writers on tumblr#writing#fiction#fanfic#fanfiction#us politics#american politics#lgbtq community#lgbtq rights#trans rights#freedom of expression#writers

4K notes


Text

A new tool lets artists add invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

The tool, called Nightshade, is intended as a way to fight back against AI companies that use artists’ work to train their models without the creator’s permission. Using it to “poison” this training data could damage future iterations of image-generating AI models, such as DALL-E, Midjourney, and Stable Diffusion, by rendering some of their outputs useless—dogs become cats, cars become cows, and so forth. MIT Technology Review got an exclusive preview of the research, which has been submitted for peer review at computer security conference Usenix.

AI companies such as OpenAI, Meta, Google, and Stability AI are facing a slew of lawsuits from artists who claim that their copyrighted material and personal information was scraped without consent or compensation. Ben Zhao, a professor at the University of Chicago, who led the team that created Nightshade, says the hope is that it will help tip the power balance back from AI companies towards artists, by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property. Meta, Google, Stability AI, and OpenAI did not respond to MIT Technology Review’s request for comment on how they might respond.

Zhao’s team also developed Glaze, a tool that allows artists to “mask” their own personal style to prevent it from being scraped by AI companies. It works in a similar way to Nightshade: by changing the pixels of images in subtle ways that are invisible to the human eye but manipulate machine-learning models to interpret the image as something different from what it actually shows.
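The article does not describe Glaze's or Nightshade's actual algorithms, and the sketch below is not either of them. It swaps in a different, textbook idea (a fast-gradient-sign-style step against a toy linear classifier; every name and label is hypothetical) only to show the general principle: a change capped at a tiny amount per pixel can still flip what a model "sees". Nightshade itself targets real image generators and works by poisoning training data rather than fooling a fixed model like this one.

```python
def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def score(weights, pixels):
    """Toy linear 'classifier': a weighted sum of pixel values."""
    return sum(w * p for w, p in zip(weights, pixels))

def classify(weights, pixels):
    # Hypothetical labels: a positive score means "dog", otherwise "cat".
    return "dog" if score(weights, pixels) > 0 else "cat"

def perturb(weights, pixels, epsilon):
    """Fast-gradient-sign-style step for a linear model.

    The gradient of the score with respect to each pixel is that pixel's weight,
    so moving every pixel by -epsilon * sign(weight) lowers the score as fast as
    possible while changing no pixel by more than epsilon. Values are clamped to [0, 1].
    """
    return [min(1.0, max(0.0, p - epsilon * sign(w))) for w, p in zip(weights, pixels)]

N_PIXELS = 1000
weights = [((i % 5) - 2) / 2 for i in range(N_PIXELS)]  # repeating -1, -0.5, 0, 0.5, 1
image = [0.5 + 0.01 * sign(w) for w in weights]          # a mid-grey image, barely a "dog"
poisoned = perturb(weights, image, epsilon=0.02)

print(classify(weights, image), classify(weights, poisoned))  # prints: dog cat
print(max(abs(a - b) for a, b in zip(image, poisoned)))       # about 0.02
```

No pixel moves by more than 2% of its range, which would be invisible to a person, yet the toy model's answer changes from "dog" to "cat".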

Continue reading article here

#Ben Zhao and his team are absolute heroes#artificial intelligence#plagiarism software#more rambles#glaze#nightshade#ai theft#art theft#gleeful dancing

22K notes


Text

a spiderverse x spiderman!reader x batfam concept different from my spidernoir one

exposition is fairly simple, peni-parker!reader comes back from the boarding school they were sent to by the family to "keep them out of vigilante business" but are blissfully unaware that for the past few months, peni!reader's been working on a mech suit to support their new found spider powers, after getting bitten by a radioactive spider while away at school.

with access to bruce's batcave, luke's indulgence in your "academic strive" and your stealth and sneaking about, you're able to make your suit pretty quickly. driven by unresolved feelings from your past and a sense of debt you feel, you decide to repay it by becoming SP//dr... spider for easy comms.

the thing is, peni!reader is an anomaly, since the spiderman in this universe is not meant to exist. maybe some stuff with the spider society and all can come in and we find out that actually, the spider that bit peni!reader was from this universe and spiderman is allowed to exist here.

but to investigate what a radioactive spider with the wrong genetic data was doing in your universe, where it wasn't supposed to be*, spidernoir agrees to drop down to gotham to help peni!reader figure it out. he becomes, essentially, a father figure for reader, something that bruce hasn't been able to be due to the weight of reader's and his past.

meanwhile, when peni!reader comes back to the manor from 'boarding school', the family notices physical and mental changes in them. they're more distant, dismissive... confident in their skin. though you guys never had much time to talk or hang out or bond like they do, the development is difficult to notice.

additionally, sightings of a man in a trench coat and a car-sized robot swinging around have been going around, doing god knows what. the batman doesn't like being unprepared, and tries to scour out their identities and whereabouts. i have some really small little ideas that'd be funny for the whole run, like spidernoir showing up for a parent-teacher conference instead of bruce, ai assistant karen, commentary from spiderpunk, constantine and strange link up and also delve a little into what the themes between spiderman variants, spiderman, and batman are that make them so different are.

i'm rotting away like an oxidised apple but rlly dont know if i should write it cus ive got so much 2 do... if ppl are interested at all i might consider

in conclusion: I LOVE YOU SPIDERNOIR AND PENI PARKER!!!!!

*supposed to be = not in the sense of how miles' spider teleported to another earth, but like, peni!reader was just not meant to be bit, and that spider is not supposed to exist. the dc and marvel universes are parallel, with peni!reader's existence being a small, hairline road between the two.

#saria's 💤 writing#saria 💤 says#batfam x neglected reader#yandere batfam#batfam x reader#batman x reader#bruce wayne x reader#nightwing x reader#jason todd x reader#red hood x reader#damian wayne x reader#cassandra cain x reader#felicia hardy x reader#dc x reader#platonic yandere batfam x reader#dick grayson x reader#yandere dc x reader#platonic yandere batfam#neglected reader#spider reader#spiderman x batman#spiderman x batfam#tim drake x reader#atsv x reader#peter parker x reader#spiderman x reader#spiderverse x reader#miles morales x reader#gwen stacy x reader#mary jane x reader

1K notes


Text

Green energy is in its heyday.

Renewable energy sources now account for 22% of the nation’s electricity, and solar has skyrocketed eight times over in the last decade. This spring in California, wind, water, and solar power energy sources exceeded expectations, accounting for an average of 61.5 percent of the state's electricity demand across 52 days.

But green energy has a lithium problem. Lithium batteries control more than 90% of the global grid battery storage market.

That’s not just cell phones, laptops, electric toothbrushes, and tools. Scooters, e-bikes, hybrids, and electric vehicles all rely on rechargeable lithium batteries to get going.

Fortunately, this past week, Natron Energy launched its first-ever commercial-scale production of sodium-ion batteries in the U.S.

“Sodium-ion batteries offer a unique alternative to lithium-ion, with higher power, faster recharge, longer lifecycle and a completely safe and stable chemistry,” said Colin Wessells — Natron Founder and Co-CEO — at the kick-off event in Michigan.

The new sodium-ion batteries charge and discharge at rates 10 times faster than lithium-ion, with an estimated lifespan of 50,000 cycles.

Wessells said that using sodium as a primary mineral alternative eliminates industry-wide issues of worker negligence, geopolitical disruption, and the “questionable environmental impacts” inextricably linked to lithium mining.

“The electrification of our economy is dependent on the development and production of new, innovative energy storage solutions,” Wessells said.

Why are sodium batteries a better alternative to lithium?

The birth and death cycle of lithium is shadowed in environmental destruction. The process of extracting lithium pollutes the water, air, and soil, and when it’s eventually discarded, the flammable batteries are prone to bursting into flames and burning out in landfills.

There’s also a human cost. Lithium-ion materials like cobalt and nickel are not only harder to source and procure, but their supply chains are also overwhelmingly associated with hazardous working conditions and child labor law violations.

Sodium, on the other hand, is estimated to be 1,000 times more abundant in the earth’s crust than lithium.

“Unlike lithium, sodium can be produced from an abundant material: salt,” engineer Casey Crownhart wrote in the MIT Technology Review. “Because the raw ingredients are cheap and widely available, there’s potential for sodium-ion batteries to be significantly less expensive than their lithium-ion counterparts if more companies start making more of them.”

What will these batteries be used for?

Right now, Natron has its focus set on AI models and data storage centers, which consume hefty amounts of energy. In 2023, the MIT Technology Review reported that one AI model can emit more than 626,000 pounds of carbon dioxide equivalent.

“We expect our battery solutions will be used to power the explosive growth in data centers used for Artificial Intelligence,” said Wendell Brooks, co-CEO of Natron.

“With the start of commercial-scale production here in Michigan, we are well-positioned to capitalize on the growing demand for efficient, safe, and reliable battery energy storage.”

The fast-charging energy alternative also has limitless potential on a consumer level, and Natron is eying telecommunications and EV fast-charging once it begins servicing AI data storage centers in June.

On a larger scale, sodium-ion batteries could radically change the manufacturing and production sectors — from housing energy to lower electricity costs in warehouses, to charging backup stations and powering electric vehicles, trucks, forklifts, and so on.

“I founded Natron because we saw climate change as the defining problem of our time,” Wessells said. “We believe batteries have a role to play.”

-via GoodGoodGood, May 3, 2024

--

Note: I wanted to make sure this was legit (scientifically and in general), and I'm happy to report that it really is! x, x, x, x

#batteries#lithium#lithium ion batteries#lithium battery#sodium#clean energy#energy storage#electrochemistry#lithium mining#pollution#human rights#displacement#forced labor#child labor#mining#good news#hope

3K notes


Text

There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an ai image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence, like Data from Star Trek or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive; instead, the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most technology people call AI.)

Language model (LM; the big ones are called large language models, or LLMs): a probabilistic model of a natural language that can generate probabilities of a series of words, based on the text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion models: models that learn the probability distribution of a given dataset. In image generation, a neural network is trained to denoise images with added Gaussian noise by learning to remove the noise. After training is complete, it can be used for image generation by starting with a random noise image and denoising it. (This is the more common technology behind AI images, including DALL-E and Stable Diffusion. I added this one to the post afterward, when it was brought to my attention that it is now more common than GANs.)
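To make the language model definition a bit more concrete, here is a toy sketch in Python. It's a bigram model, which is vastly simpler than ChatGPT (real LLMs use neural networks trained on enormous corpora and look at far more context than one previous word), but it shows the core idea: count which words follow which in some training text, turn those counts into probabilities, and score a series of words by multiplying them together. The function names and the two-sentence corpus are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count word pairs in a toy corpus and turn the counts into
    conditional probabilities P(next_word | previous_word)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of each sentence
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, nexts in counts.items():
        total = sum(nexts.values())
        model[prev] = {word: count / total for word, count in nexts.items()}
    return model

def sequence_probability(model, sentence):
    """Probability the model assigns to a whole sentence: the product of
    each word's probability given the word right before it."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(words, words[1:]):
        # unseen word pairs get probability 0 in this toy version
        prob *= model.get(prev, {}).get(nxt, 0.0)
    return prob

# hypothetical "training data"
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)

print(model["the"])  # {'cat': 0.25, 'mat': 0.25, 'dog': 0.25, 'rug': 0.25}
print(sequence_probability(model, "the cat sat on the rug"))  # 0.0625
print(sequence_probability(model, "rug the on sat cat the"))  # 0.0
```

Note that "the cat sat on the rug" never appears in the corpus but still gets a nonzero probability, while the scrambled version gets zero. The model is just statistics about which words tend to follow which.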

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.


Text

decided to crack open my skull and pour the contents of my brain onto the keyboard. thought the denizens of tumblr might enjoy it. bon appétit

Mech Pilot Care guide

You never expect it, do you. Even as you see the flashes of pulse-decay fire in the sky, illuminating a scene of violence on the cosmic scale. Planetary defense satellites forming monolithic structures in the sky, their purpose now revealed as they scatter constellations of destruction across the night horizon, drowning out the stars and replacing them with ones born of death. The oxygen in a ship catching fire and burning away in an instant, a flash of light that marks the death of its crew of hundreds. Even if you take your telescope to watch this spectacle, this war in a place without screams, you still feel profoundly disconnected from it.

Even as you see a pilot cleave through a drone hive with a fusion blade, the molten metal glistening in the light of the explosions around it, scattering without gravity to the corners of the universe, even as two mechs dance across the sky, their reactors pouring into the engines enough energy to power the house atop which you sit for ten thousand years, flying in a 3.5-dimensional dance with only one word to the song that can reach across the vacuum: “I Will Kill You.” You don’t feel even the slightest glimpse of what goes on inside their minds. You don’t feel the neurological feedback tearing across the brain-computer interface, filling her mind with more simultaneous pain and elation than an unmodified human could ever experience. You don’t feel it as the pneumatic lance punctures through steel and nanocarbon polymer, the mech AI sending floods of a sensation you could never truly know through the skull and into every corner of the body carried on enhanced nerves for every layer of armor punctured, tearing into the enemy chassis with a desire beyond anything the flesh can provide. Let the stars kill each other. After all, I am safe on earth. No, you don’t expect it when the star is hit with a sub-relativistic projectile, piercing through both engines in an instant. You don’t expect it to fall. You never would have expected it to land, the impact nearly vaporizing the soil and setting trees aflame, on the hill beyond your house, and you would never have expected, beneath the layers of cooling slag, for the life-support indicator light to still be visible.

All the fire extinguishers in your house, your old plasma cutter that you haven’t used in years, and whatever medical supplies you think they might still be able to benefit from. All that on a hoverbike, speeding at 120 kilometers per hour through the valley and up onto the hill, still illuminated by the battle above, unsurprisingly unchanged by this new development. 200 meters. 100 meters. You don’t know how much time you’ve got. It wasn’t exactly covered in school, how long a pilot can survive in an overheating frame. You’ve heard rumors, of course, of what these things that used to be human have become. That they don’t eat and barely need air. That they don’t feel any desire beyond what instructions are pumped directly into their brains. Not so much a person as an attack dog. It’s understandably a bit concerning, as if they are alive, then it’s not guaranteed that you will be. Three fire extinguishers later, the surface of the mech is mostly solid, and the cutter slices through the exterior plating. With a satisfying crunch, the cockpit is forced open, revealing the pilot, and confirming a few of the rumors, while refuting others. Pilots, it seems, are not quite emotionless. In fact, there seems to be genuine fear on its face when it sees you, followed by… a sort of grim certainty as it opens its mouth, moves its jaw into a strange position, and you only have half a second to react before it would have bitten down with all its force on the tooth that seemed to be made of a different material than all the rest.

Your thumb is definitely bleeding, and is caught between a metamaterial-based dental implant, and one containing a military-grade neurotoxin. You’re not sure exactly why you did it. The pilot looks at you for a second, before the tubes that attach to its arms like puppet strings run out of stimulants, and it passes out after who knows how long without sleep. This battle has been going on for weeks already. Has it been fighting that long? Its various frame-tethered implants disconnect easily, the unconscious pilot draped over your shoulder twitching slightly with each one you remove. It’s a much longer ride back to the house. Avoiding having the pilot fall off the bike is the top priority, and the injured thumb stings in the fast-moving air.

An internet search doesn’t turn up many helpful answers to the question “there is a mech pilot on my couch, what do I do?”: a few articles about how easy targets retired pilots are for the “doll sellers,” a few military recruitment ads, and a couple of near-incomprehensible legal documents full of words like “proprietary technology” or “instant termination.” However, there is one link, a few rows down from the top: “Mech Pilot Care Guide.” It’s a detailed list, arranged in numbered steps. The website has no other links on it, just the step-by-step instructions. A quick read reveals that this isn’t going to be easy, but looking at the unconscious pilot, unabsorbed chemicals dripping from the ports in its arms and head onto the mildly bloodstained towel, you come to the conclusion that there’s no other option.

Step one: the first 24 hours.

The first thing you should know is that pilots aren’t used to sleeping. They’re used to being put under for transport and storage, but after the neural augmentations and years of week-long battles sustained by stimulants that would fry the brain of anyone that still has an intact one, they’ve more or less forgotten what real sleep is. If they see you asleep, they’ll think you’re dead, so don’t try to let them stay in your room yet. Once you’ve removed the neurotoxin from the tooth (it breaks easily with a bit of applied pressure, but be careful not to let any fall into their mouth or onto your skin), start by moving them into a chair (preferably a recliner or gaming chair, as the mech seat is about halfway in between), and putting a heavy blanket over them. Don’t worry, they don’t need as much air as normal humans do, and can handle high temperatures up to a point. This is an environment similar to the one they’re used to. It’ll stay like this for about 12 hours: barely breathing, trembling slightly underneath the blanket. Feel free to check if it’s alive every few hours, not that you could help it if it wasn’t. It won’t freak out when it wakes up. In fact, it doesn’t seem like they can. Turn down the lights and remove the blanket from its face. It’ll stare blankly at you, trying to evaluate the situation with a brain that’s not connected to a computer that’s bigger than they are anymore. Coming to terms, if you could call it that, with the fact that it isn’t dead. Don’t expect it to start reacting to things for a while yet; give it a couple of hours.

It’s been a bit, and its eyes are starting to focus on you. The next thing you should know is this: pilots only have two groups into which they can categorize non-pilots, handler and enemy. You need to work on making sure you’re in the right one. Move slowly, standing up and walking toward them, making sure they can see where you’re going to step. Place both hands on their shoulders, then slide one under their arm and carefully pick them up. Don’t be startled by how light they are, or how they still shake slightly as they realize their arms don’t have anything connected to them. Most importantly, don’t break. Don’t reflect on how something can be done to a person so that this is all that’s left. Just focus on rotating them as if you’re inspecting all the brain-computer interface ports, while holding them at half an arm’s length. Set them back down, wrap the blanket around them, then lean in close and say, “Status report.” They won’t say anything, as they usually upload the data via interface, but what’s important is that now they recognise you as their handler. Their entire mind will be focused on the fact that they exist now to do what you want. Now it’s up to you to prove them wrong.

Step two: the first week.

They’re shaking so hard that you’ve had to move them from the chair back to the couch, sweating heavily as they pant like the dog they’ve been trained to think they are. This was to be expected, really. Pilots are constantly being filled with a mix of stimulants, painkillers, and who knows what else, and you’ve just cut them off completely. You’ve woken up several times in the night and rushed to check if they’re still breathing, debating whether you should try to tell them that they’re going to be okay. The guide says they’re not ready for that yet, whatever that means. They’re still wearing the suit you found them in, made from nanofiber mesh and apparently recycling nutrients and water before re-infusing them intravenously. It’s been three days since you tore them out of the lump of metal atop the hill outside. Long enough that the suit’s battery, apparently, has run out. You lift them gently from the couch and carry them to the bathroom. The shower’s been on for the past hour or so, meaning the temperature should be high enough. You set them on their chair, which you’ve rolled there from the living room and covered with a towel. Removing the suit normally isn’t done except in between missions, and it’s only done to exchange it for a new one. Without the proper tools, you’ve opted for a pair of scissors. Cutting through the suit takes a bit of time, but you manage to cut a sizable line from the neck down the front to the bottom of the torso. The pilot recoils slightly from the cold metal against their skin, but you manage to peel off the suit without incident. The steam has brought the room to roughly the same temperature as the suit, and you’ve done your best to minimize air currents. They’ve got a bit more shape to them than you expected of someone who’s been so heavily modified. Perhaps what little fat storage it provides helps on longer missions, or perhaps this is for the purposes of marketing.
Just another recruitment ad that appeals to baser instincts. Either way, it doesn’t matter. Using a cloth with the least noticeable texture possible, you wash off as much sweat and dead skin as you can, avoiding the various interface and IV ports, as you’re not yet sure that they’re waterproof. Embarrassment is the enemy of efficiency, so you’re slightly glad that their eyes never completely focus on you. They shift their weight slightly, however. Despite the difficulty moving with their current symptoms, they lean in the direction opposite the places you wash once you're done, allowing you to more easily access the places you haven’t got to yet. An act of trust that you have a suspicion they weren't “programmed” to do. As they dry out, you prepare for the difficult part. You take the blanket that previously wrapped around their suit, and gently touch a corner of it to their shoulder. Pilots are used to an amount of sensory information that would overload any normal human in an instant, but most rarely experience textures against their skin. After about half an hour, they’re used to it enough that you’re able to replace what’s left of the suit with it, and after another you’re able to wrap them in it again. You carry them back to the couch, and place a few of your old shirts next to their hand. They pick one and touch it with one finger before recoiling slightly. Eventually, they’ll be used to at least one of them enough that they can wear it. It’s slow progress, but it’s progress.

Step 3: food

It goes without saying that it’s usually been at least a year since they’ve eaten anything. The augmentations scooped out much of their knowledge on how to survive as a human, assuming that they would die before ever needing to be one again. Start them off with just flavors. Give them a chance to pick favorites by giving them a wide selection and firmly telling them to try all of them. Avoid anything solid for the first month or so, both because they can’t digest it and because they associate chewing with their self-destruct mechanism. Biting down and surviving might make them think the “mission’s fully compromised” and attempt to improvise. They’ll typically pick out favorites quickly with their enhanced senses, so once they’ve sampled everything, tell them to pick one. Remember it, not to use as a reward or anything, but because the fact that they can still have a “favorite” anything is something you should keep in mind for later.

Use a similar method anytime they become able to handle the next level of solidity. Don’t be alarmed if one of their favorite foods is the meat that’s most similar to humans (such as pork). They’re not going to eat you; they’ve just formed an association between that flavor and the moment they went from being a weapon to living in your house. Don’t worry about your thumb getting infected, by the way. Pilots barely have a microbiome.

Step 4: entertainment

Roll them over to your computer and give them access to your game library. No, really. They need enrichment, and there’s only one activity that they’re able to enjoy at the moment. A simulation of it will make the shift from weapon to guest easier. Start them off with an FPS with a story. Don’t go multiplayer, as your account may get banned for being suspected of using aimbots. Watch as they progress through the story. The military left pilots with just enough of a personality to allow them to improvise, and that should be enough for them to make decisions on this level. They won’t do much character customization, but keep an eye on which starting character body shape they pick. No pilot would consciously think they have enough of a “Self” to still have a gender, but keep track of the ones they pick in the games. As for the one you’ve found, it appears that she’s got a player-character preference. You even saw her nudge one of the appearance sliders before clicking “start game.” Whether this means that a pilot doesn’t think of themselves as “it” or that there’s still enough of their mind left for them to know there’s more to themselves than the body they have, it’s a handy bit of information to know. Some pilots might have had this decision influenced by their handlers having referred to them as “she” the way people refer to boats, but still, on some level they always know that “it” meant that they’re a weapon.

Step 6: outside

There’s a profound difference between experiencing the world through information fed directly into your brain and standing up for the first time, wandering around the room and investigating with hands not made of a half-ton of metal. She’s not used to feeling the air on her skin as she stands in front of the window, visual data coming from two eyes instead of seven cameras. It’ll take a while to get used to it again. New old data, reminiscent of a time before she’s been trained not to remember. It’ll take a while until she’s walking like a human and not a mech, as the muscles used are different, and the ones to hold herself upright haven’t been used in a while. She’s going to fall down at least once. Be sure you’re standing next to her when it happens, as pilots that fall aren’t trained to think they can get back up. It’s worth it, though, when she opens the door herself and strides into the yard, still wobbly but standing. Be careful not to let her look into the sun, partially because it looks nearly identical to the barrel of a pulse-decay blaster milliseconds before it fires. She would get hurt trying to dodge it. It will be somewhat confusing for her, standing on a hill as she once did, but not contained within a 12-meter metal chassis. A feeling of being small and alone without the voices of the computer. This means it’s time for step seven.

Step 7:

All this time, and any idea that she’s still a person has, for her, been subconscious. Any thought of humanity is stopped when it slams into the wall of her handlers and mech AIs reminding her for years before now that she is a weapon. She’ll still ask for your permission before doing just about anything, and that’s just the rare times that she’ll do something you don’t tell her to. Even after you’ve moved her into your room, she’ll still try to sleep on the floor. She still thinks that beds are only for humans. Kneel next to her as she curls into a ball on the ground, assuming that’s what she’s supposed to do. Expect her to try to move down to the foot of the bed after you set her down on it. Gently move her back up until her head’s on the pillow. Sit on the edge of the bed, and hold out your hand to her. After a bit, she’ll take it, wrapping both hands around it and tracing her fingers along the scar on your thumb. Lie down next to her, an arm’s length apart. Place your other hand on her forearm, then slide it up her arm to her shoulder. Don’t move too quickly, and don’t surprise her. Whisper softly but audibly every movement you’re going to make in advance. Move in a bit closer, until you’re wrapped in her arms. Mech pilots aren’t used to this. They aren't used to feeling someone next to them. Not above them, but next to them, getting exactly as much out of this as they are. Even after several months, many won’t admit they deserve it. You wouldn’t waste time lying next to a gun. So why do they feel so strongly that they don’t want you to leave? Why do they hold on tighter? They often feel they’re doing something wrong. Overstepping a boundary. There’s a rift between what they want and what they’re told they can want that nearly tears their mind in half, and it hurts. No normal human will ever know how much it hurts them to think they’ve broken some instruction, that they feel things they aren’t allowed to. 
Nobody said it was easy, learning how to become human again. Tell her it’s okay. That she’s allowed to feel this way. She still won’t know why. It’s time to tell her. The guide can’t tell you what to say, only that you have to say it. It has to come from you. You have to be the one that tells her what she is underneath all the modifications. It’s time, say it.

“Do you feel that? Do you feel your heart start to beat faster as it presses up against mine? Do you feel your own breath against your skin after it reflects off my shoulder? Do you feel your muscles start to tighten as I slide my hand across them, then relax because you know it means that you are safe? It’s because you’re alive. Because despite everything, you’re still alive. Still someone left after all the changes, all the augmentations. And I know you’re someone because you are someone that likes food a bit spicier than most would prefer. Someone that closes her eyes and gets lost in music whenever it’s playing. Someone that added that one piece of customization to her character, even though they would wear a helmet for most of the game and nobody would know it was there but you. Maybe you aren’t the same person you were before. Maybe they did take some things from you that nothing can give back. But you’re still someone. Someone that people can still care about, and I know because I do.”

You can feel her tears drip down onto your neck as she pulls you closer. She tries to say something, but you can’t understand what. You tell her it’s okay. That it’s not easy, and that she doesn’t have to pretend that it is. Not for you, and not for anyone anymore. She doesn’t have to be useful anymore. No need to keep it together. All that matters is that she’s alive.

There’s another battle going on in the night sky outside. The same flashes of light you saw the night you stopped living alone, even if the other person couldn’t admit that they were one yet. She still flinches at the brighter bursts of pulse-decay fire, still stretches out her hand on reflex to prime a pneumatic lance that isn’t there. But she knows it’s not her, it’s just a ghost of the weapon that died when it hit the ground. You can feel her relax as she realizes this, moving her hand back to dry her face before reaching out towards yours. You hadn’t noticed the tears on your own face. You place your hand on hers as she wipes the corner of your eye. Outside and above, the war continues on a cosmic scale, so far apart from where you both are now that you barely notice it. Let the stars kill each other. After all, the one before you has already fallen, and she doesn’t have to return to the sky. Together, you are safe on earth.


Note

Why reblog machine-generated art?

When I was ten years old I took a photography class where we developed black and white photos by projecting light on papers bathed in chemicals. If we wanted to change something in the image, we had to go through a gradual, arduous process called dodging and burning.

When I was fifteen years old I used Photoshop for the first time, and I remember clicking on the clone tool or the blur tool and feeling like I was cheating.

When I was twenty-eight I got my first smartphone. The phone could edit photos. A few taps with my thumb were enough to apply filters and change contrast and even spot correct. I was holding in my hand something more powerful than the huge light machines I'd first used to edit images.

When I was thirty-six, just a few weeks ago, I took a photo class that used Lightroom Classic and again, it felt like cheating. It made me really understand how much the color profiles of popular web images I'd been seeing for years had been pumped and tweaked and layered with local edits to make something that, to my eyes, didn't much resemble photography. To me, photography is light on paper. It's what you capture in the lens. It's not automatic skin smoothing and a local filter to boost the sky. This reminded me a lot more of the photomanipulations my friend used to make on DeviantArt: layered things with unnatural colors that put wings on buildings or turned an eye into a swimming pool. It didn't remake the images to that extent, obviously, but it tipped into the uncanny valley. More real than real, more saturated, more sharp, and more present than the actual world my lens saw. And that was before I found the AI-assisted filters and the tool that would identify the whole sky for you, picking pieces of it out from between leaves.

You know, it's funny, when people talk about artists who might lose their jobs to AI they don't talk about the people who have already had to move on from their photo editing work because of technology. You used to be able to get paid for basic photo manipulation, you know? If you were quick with a lasso or skilled with masks you could get a pretty decent chunk of change by pulling subjects out of backgrounds for family holiday cards or isolating the pies on the menu for a mom and pop. Not a lot, but enough to help. But, of course, you can just do that on your phone now. There's no need to pay a human for it, even if they might do a better job or be more considerate toward the aesthetic of an image.

And they certainly don't talk about all the development labs that went away, or the way that you could have trained to be a studio photographer if you wanted to take good photos of your family to hang on the walls and that digital photography allowed in a parade of amateurs who can make dozens of iterations of the same bad photo until they hit on a good one by sheer volume and luck; if you want to be a good photographer everyone can do that why didn't you train for it and spend a long time taking photos on film and being okay with bad photography don't you know that digital photography drove thousands of people out of their jobs.

My dad told me that he plays with AI the other day. He hosts a movie podcast and he puts up thumbnails for the downloads. In the past, he'd just take a screengrab from the film. Now he tells the Bing AI to make him little vignettes. A cowboy running away from a rhino, a dragon arm-wrestling a teddy bear. That kind of thing. Usually based on a joke that was made on the show, or about the subject of the film and an interest of the guest.

People talk about "well AI art doesn't allow people to create things, people were already able to create things, if they wanted to create things they should learn to create things." Not everyone wants to make good art that's creative. Even fewer people want to put the effort into making bad art for something that they aren't passionate about. Some people want filler to go on the cover of their youtube video. My dad isn't going to learn to draw, and as the person who he used to ask to photoshop him as Ant-Man because he certainly couldn't pay anyone for that kind of thing, I think this is a great use case for AI art. This senior citizen isn't going to start cartooning and at two recordings a week with a one-day editing turnaround he doesn't even really have the time for something like a Fiverr commission. This is a great use of AI art, actually.

I also know an artist who is going Hog Fucking Wild creating AI art of their blorbos. They're genuinely an incredibly talented artist who happens to want to see their niche interest represented visually without having to draw it all themself. They're posting the funny and good results to a small circle of mutuals on socials with clear information about the source of the images; they aren't trying to sell any of the images, they're basically using them as inserts for custom memes. Who is harmed by this person saying "i would like to see my blorbo lasciviously eating an ice cream cone in the is this a pigeon meme"?

The way I use machine-generated art, as an artist, is to proof things. Can I get an explosion to look like this? What would a wall of dead computer monitors look like? Would a ballerina leaping over the Grand Canyon look cool? Sometimes I use AI art to generate copyright-free objects that I can snip for a collage. A lot of the time I use it to generate ideas. I start naming random things and seeing what it shows me and I start getting inspired. I can ask Craiyon for pose reference; I can ask it to show me the interior of spaces from a specific angle.

I profoundly dislike the antipathy that tumblr has for AI art. I understand if people don't want their art used in training pools. I understand if people don't want AI trained on their art to mimic their style. You should absolutely use those tools that poison datasets if you don't want your art included in AI training. I think that's an incredibly appropriate action to take as an artist who doesn't want AI learning from your work.

However I'm pretty fucking aggressively opposed to copyright and most of the "solid" arguments against AI art come down to "the AIs viewed and learned from people's copyrighted artwork and therefore AI is theft rather than fair use" and that's a losing argument for me. In. Like. A lot of ways. Primarily because it is saying that not only is copying someone's art theft, it is saying that looking at and learning from someone's art can be defined as theft rather than fair use.

Also because it's just patently untrue.

But that doesn't really answer your question. Why reblog machine-generated art? Because I liked that piece of art.

It was made by a machine that had looked at billions of images - some copyrighted, some not, some new, some old, some interesting, many boring - and guided by a human and I liked it. It was pretty. It communicated something to me. I looked at an image a machine made - an artificial picture, a total construct, something with no intrinsic meaning - and I felt a sense of quiet and loss and nostalgia. I looked at a collection of automatically arranged pixels and tasted salt and smelled the humidity in the air.

I liked it.

I don't think that all AI art is ugly. I don't think that AI art is all soulless (I actually think that 'having soul' is a bizarre descriptor for art and that lacking soul is an equally bizarre criticism). I don't think that AI art is bad for artists. I think the problem that people have with AI art is capitalism and I don't think that's a problem that can really be laid at the feet of people curating an aesthetic AI art blog on tumblr.

Machine learning isn't the fucking problem; the problem is that massive corporations have been trying hard not to pay artists for as long as massive corporations have existed (isn't that a b-plot in The Shape of Water? the neighbor who draws ads gets pushed out of his job by product photography? did you know that as recently as ten years ago Newegg had in-house photographers who would take pictures of the products so users wouldn't have to rely on the manufacturer photos? I want you to guess what killed that job and I'll give you a hint: it wasn't AI)

Am I putting a human out of a job because I reblogged an AI-generated "photo" of curtains waving in the pale green waters of an imaginary beach? Who would have taken this photo of a place that doesn't exist? Who would have painted this hypersurrealistic image? What meaning would it have had if they had painted it or would it have just been for the aesthetic? Would someone have paid for it or would it be like so many of the things that artists on this site have spent dozens of hours on only to get no attention or value for their work?

My worst ratio of hours to notes is an 8-page hand-drawn detailed ink comic about getting assaulted at a concert and the complicated feelings that evoked that took me weeks of daily drawing after work with something like 54 notes after 8 years; should I be offended if something generated from a prompt has more notes than me? What does that actually get the blogger? Clout? I believe someone said that popularity on tumblr gets you one thing and that is yelled at.

What do you get out of this? Are you helping artists right now? You're helping me, and I'm an artist. I've wanted to unload this opinion for a while because I'm sick of the argument that all Real Artists think AI is bullshit. I'm a Real Artist. I've been paid for Real Art. I've been commissioned as an artist.

And I find a hell of a lot of AI art a lot more interesting than I find human-generated corporate art or Thomas Kinkade (but then, I repeat myself).

There are plenty of people who don't like AI art and don't want to interact with it. I am not one of those people. I thought the gay sex cats were funny and looked good and that shitposting is the ideal use of machine image generation: to make uncopyrightable images to laugh at.

I think that tumblr has decided to take a principled stand against something that most people making the argument don't understand. I think tumblr's loathing for AI has, generally speaking, thrown weight behind a bunch of ideas that I think are going to be incredibly harmful *to artists specifically* in the long run.

Anyway. If you hate AI art and you don't want to interact with people who interact with it, block me.
