#best example of artificial intelligence


Text

Hello @Everyone, Marufu Islam excels in data labelling for startups and businesses of all sizes, using tools like Supervisely, SuperAnnotate, Labelbox, CVAT, and more. Fluent in COCO, XML, JSON, and CSV formats. Ready to enhance your projects? Everyone, please check the gig and let me know if there is anything wrong: https://www.fiverr.com/s/lP63gy @everyone Fiverr and Upwork Community Group link: https://discord.gg/VsVGKYwA Please join

#data annotator#image annotation#annotations#annotating books#ai image#ai artwork#artificial intelligence#ai generated#@data annotation baeldung#@data annotation dependency#@data annotation in spring boot#@data annotation in spring boot example#@data annotation not working#@data annotation not working in spring boot#@data annotation of lombok#@data annotation used for#ai data annotator#ai data annotator jobs#ai data annotator salary#annotating data meaning#annotator for clinical data#apa itu data annotator#appen data annotator#appen data annotator salary#best data annotation companies#best data annotation tools#clinical data annotator#cover letter for data annotator#cpl data annotator#data annotation

0 notes

Text

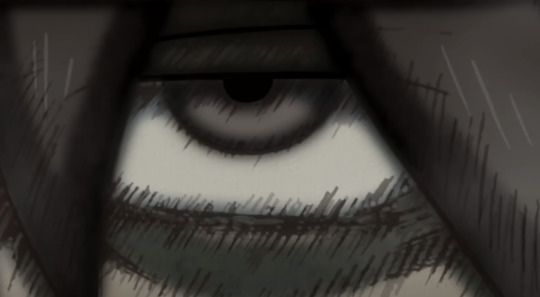

slightly mixed feelings about the angel engine. on the one hand, the characters are nice and the atmosphere is conveyed well. on the other hand, everything is spoiled by the AI. on reddit there are now some arguments that it should be boycotted, but I do not agree with this. AI has its place when it is used well, and at first I did not even realize that it was AI. It produces "3D animation" in different styles. my first thought was actually "wow, how can I adjust my own work with AI so that it looks the same?" so I do not see anything wrong with it, as long as it is done with soul. Using AI properly does not kill art, and I would say the angel engine is the best example of working with artificial intelligence.

(sorry for mistakes or typos in the text. This is not my native language. Correct me if anything)

#art#artists on tumblr#artwork#drawing#digital art#digital illustration#digital painting#арт#illustration#my art#angel engine#the mandela catalogue#mandela catalouge gabriel

187 notes


Text

Zodiark's Tempering

A lot of people have been confused about whether the Unsundered were tempered (they were) and how tempering works.

Long post under the cut.

First, I'm going to point at the exact line from Emet-Selch in Shadowbringers: "He tempered us. It was only natural. There is no resisting such power."

I believe this was said in one of the Ocular cutscenes, and it is stated in no uncertain terms--the Convocation was tempered. This includes the Unsundered. The tempering was, in fact, so powerful that even after having their souls cleansed in the Lifestream, Convocation members still make 'the best servants' according to Emet-Selch.

Zodiark was not only the first primal, but a primal on a scale beyond fathoming. This was half a star's worth of souls, billions of people. I'd argue that we also see what this tempering looks like in practice with Emet-Selch at The Ladder scene in Kholusia, where he is genuinely moved and expresses admiration of both the Warrior of Light and the people of Kholusia coming together only to be railroaded back to 'but the world as it was was better'.

That was not a natural thought pattern. That was tempering. We see further evidence in how Emet-Selch tried repeatedly to live alongside The Sundered and had only the most negative qualities amplified--preventing him from ever finding peace. Hell, it shows in his argument that the qualities of a soul diminish with sundering too. For one, the default quality in a person isn't positive. He frames things in terms of other shards becoming proportionally less intelligent, for example, or less kind--but arguably cruelty should have been diminished as well. The civilizations and inhabitants of other shards are also, notably, not hugely different in personality or intellect from those of The Source--where souls are more dense and would (by Hades' argument) have been more advanced and capable.

What we actually know of unsundered versus sundered souls mechanically is that they are more aetherically dense. Being more aetherically dense, it takes more dynamis to influence them. The ancients absolutely still feel and are vulnerable to Meteion, but the sundered are probably a bit more reactive on the whole. It might also be like an inertia situation where once an unsundered starts to feel something it tends to continue and build. That's speculation though.

Zodiark's tempering appears to be closer to magically enforced mental illness in the sense that it warps thought patterns, elevates some tendencies and minimizes/negates others, prevents certain ideas, twists perception, keeps some memories or experiences at the forefront while diminishing or losing others, etc. Psychological wounds that are useful to the mission are kept open artificially well past the point someone would have naturally started to scar over. There is a reason I've been arguing that it's closer to coercion and an insanity plea in terms of diminished responsibility. The tempered aren't even able to accurately understand the situations they are in due to thought warping, and claims that their position is reasonable amount to a completely psychotic person claiming not to be crazy. It's not as simple as mind control from an external source. It's that the person's own thoughts and tendencies are manipulated in unnatural ways to form a cage forcing them into compliance with the primal's mission.

I'd argue it's also very suspect that Elidibus, the lunar shades, and (IIRC) the despairing post-Terminus ancients Venat encountered all separately repeat the exact phrase wishing for 'a world free from sorrow'. Lahabrea explicitly referring to Zodiark as 'the master' at Praetorium strongly indicates tempering too.

A major source of confusion stems from the following scene:

Creation magics are complex and highly sensitive, requiring a tremendous amount of focus. A single moment of distraction can change the outcome of creation. Hades creating his phantom Amaurot having an idle thought 'Hythlodaeus would know the truth' is enough to make the shade of Hythlodaeus aware, even if it wasn't on purpose. Even if it was a split second.

Zodiark was a creation that involved not only the sacrifice of half a star (so likely billions of people)--it also involved the active participation and focus of those people in the summoning process. We know from the environmental storytelling and evidence at Akademia Anyder that I cited in other analysis that Lahabrea was the mind behind the Zodiark concept. We know that the scale of the creation was enormous to the point that it would not function without elevating one individual to steer it--the Heart. This being Elidibus. But the actual summoning was still extremely complex and on a vast scale involving multitudes of people at different skill levels. Hythlodaeus, while experienced as Chief of the Bureau of the Architect, has very limited abilities in creation himself due to aether deficiency. He still sacrificed himself as one of the participants in Zodiark's summoning ritual.

Faith was necessary to simplify the process across that many people of varying life experiences and skill levels. The Convocation would have been handling the more technical elements and forms the concept would take, and guess who was at the head of the Convocation's efforts?

Lahabrea. Who had recently failed to contain Archaeotania despite his people's every faith in him, who we know to be extremely traumatized, and who had every reason to be terrified not only of the situation but of not performing up to the expectations placed on him. For god's sake, one of the last things Athena said to him involved calling him disappointing after getting full access to his soul.

A single moment of Lahabrea being afraid and hoping everyone would be able to join together to save the star, to be on the same page, would be enough to cause tempering. He's not perfect, but he's been expected to be. He's expected to have perfect composure, impervious to normal human emotions. And of course emotions bled through at a time like that.

The same hope that others would join in to support the mission has bled into every subsequent primal summoning where tempering became a problem.

Venat's summoning technique is different from the summoning technique used by the Ascians. It's also different from the technique used by the Loporrits. Venat used standard creation magic without elevating faith as a tool. She had fewer people to worry about. The Loporrits decided faith would be a useful tool for The Ragnarok insofar as the primals could help fuel its journey, but going off of pure faith rather than the hybrid of faith and strict procedure is dangerous. So they combined the two in a controlled environment knowing the risks.

What Livingway is saying is that using the hybrid technique that is being employed for the first time in that scene, a primal as powerful as Zodiark would cause a slight tug instead of the full force of tempering. Normally there isn't any sense of influence at all with that technique. Zodiark is on a scale and at such a monumental power level that even the safe method would try to influence its summoners along with any bystanders. Zodiark has the most powerful tempering of any primal that has ever existed.

I also want to take a moment to point at what primals are and how they work as distinct from standard creations.

When discussing creations, the shades at Hades' phantom Amaurot mention that souls are gifts from the star and cannot be artificially created. This is part of why Hermes claimed to be so distraught about the way concepts were being handled--there wasn't any accounting for dynamis as a factor.

Livingway mentions that Venat forbade the Loporrits from making anything possessed of a soul (impossible) or similar.

Here I'm going to point you back to the lecture from the ARR quest What Little Gods Are Made Of:

Primals, brought into being with faith rather than as pure technical concepts, have something like a soul. They are archetypes shared by the living and when they are slain, they aren't destroyed because archetypes can't be destroyed. They return to the aetherial sea, like souls, until they are called forth again. These archetypes reflect common human experiences and desires shared across many, many people. It makes sense that Zodiark would be built off of this premise in the first place as a way of creating common ground with that many participants.

It also makes some sense that something resembling a soul is advantageous, since logistically in FFXIV souls are sources of power in their own right. Thordan, Nidhogg, Shinryu, and The Alexandrians can attest to that.

I understand that there are people who prefer not to use tempering as a key factor in characterization of The Unsundered, and disregard tempering from their headcanons. Obviously this is allowed, but it's not canon. The game is explicit on this point and underlines it multiple times in multiple ways. Hades when told about what lies ahead is completely horrified and does not want to go down the path the Warrior describes--not just for his own sake but because he morally disagrees with it. His line about staying true to his principles at Ultima Thule is deliberately ambiguous--is he referring to pursuit of the Ardor? Trying to save his people? Trying to resist tempering as best he could despite being helpless against it? Giving the Warrior of Light an opportunity to mercy kill him? We don't know.

And regarding the memory of Lahabrea saying he can believe he would get lost trying to save his people to the point of becoming something horrific during Anabaseios... it's very, very important to remember that Lahabrea hates himself. Lahabrea just accepted for years that Erichthonios is better off with the idealized memory of his dead, abusive mother rather than the living father who rescued him. Lahabrea has been ready to commit pseudo-suicide throughout Pandaemonium. His entire Savage transformation design reflects that he thinks the only thing he's good for is being used for his DNA and serving to protect people as Lahabrea. He tries to shield his heart with his wings and the left arm representative of his personal self is long/at a distance, anemic, and basically non-functional due to too many joints. He doesn't want to exist as a person because he hates himself and he expects to be hurt.

And that's before everything to do with The Final Days.

Lahabrea is not a reliable narrator when it comes to questions about whether Lahabrea is a good person. He might be the least reliable source you could find. He is a guilt katamari who is ready to think the worst of himself given the slightest opportunity.

A huge part of what makes Zodiark's tempering interesting is that even if any of the Unsundered are freed, it's difficult to definitively answer the question of whether they might have made the same choices organically. Anything in their heads that might have given them tools to make another choice was taken away. And we know the sundered Convocation members were not tempered when they decided to join The Ardor as Ascians. Fandaniel was able to kill Zodiark because of this.

As it stands though, none of the Unsundered were free. They cannot be judged by the standards of people who are.

I hope this helps clear things up!

157 notes


Note

Hello Mr. AI's biggest hater,

How do I communicate to my autism-help-person that it makes me uncomfortable if she asks chatgpt stuff during our sessions?

For example she was helping me to write a cover letter for an internship I'm applying to, and she kept asking chatgpt for help which made me feel really guilty because of how bad ai is for the environment. Also I just genuinely hate ai.

Sincerely, Google didn't have an answer for me and asking you instead felt right.

ok, so there are a couple things you could bring up: the environmental issues with gen ai, the ethical issues with gen ai, and the effect that using it has on critical thinking skills

if (like me) you have issues articulating something like this where you’re uncomfortable, you could send her an email about it, that way you can lay everything out clearly and include links

under the cut are a whole bunch of source articles and talking points

for the environmental issues:

here’s an MIT news article from earlier this year about the environmental impact, which estimates that a single chat gpt prompt uses 5 times more energy than a google search: https://news.mit.edu/2025/explained-generative-ai-environmental-impact-0117

an article from the un environment programme from last fall about ai’s environmental impact: https://www.unep.org/news-and-stories/story/ai-has-environmental-problem-heres-what-world-can-do-about

an article posted this week by time about ai impact, and it includes a link to the 2025 energy report from the international energy agency: https://time.com/7295844/climate-emissions-impact-ai-prompts/

for ethical issues:

an article breaking down what chat gpt is and how it’s trained, including how open ai uses the common crawl data set that includes billions of webpages: https://www.edureka.co/blog/how-chatgpt-works-training-model-of-chatgpt/#:~:text=ChatGPT%20was%20trained%20on%20large,which%20the%20model%20is%20exposed

you can talk about how these data sets contain so much data from people who did not consent. here are two pictures from ‘unmasking ai’ by dr joy buolamwini, which is about her experience working in ai research and development (and is an excellent read), about the issues with this:

baker law has a case tracker for lawsuits about ai and copyright infringement: https://www.bakerlaw.com/services/artificial-intelligence-ai/case-tracker-artificial-intelligence-copyrights-and-class-actions/

for the critical thinking skills:

here’s a recent mit study about how frequent chat gpt use worsens critical thinking skills, and using it when writing causes less brain activity and engagement, and you don’t get much out of it: https://time.com/7295195/ai-chatgpt-google-learning-school/

explain to her that you want to actually practice and get better at writing cover letters, and using chat gpt is going to have the opposite effect

as i’m sure you know, i have a playlist on tiktok with about 150 videos, and feel free to use those as well

best of luck with all of this, and if you want any more help, please feel welcome to send another ask or dm me directly (on here, i rarely check my tiktok dm requests)!

#ai asks#generative ai resources#cringe is dead except for generative ai#generative ai#anti ai#ai is theft

55 notes


Note

i just finished LO and im bothered by so much of it i dont know where to start. persephone is maybe the most inconsistent, all over the board character ive read in any media that i can remember. im incredibly confused as to why a character supposedly brimming with sensitivity and empathy to the point where she doesnt want her rapist harmed (cant relate)...has zero trauma regarding the thousands of largely innocent mortals she inadvertently murdered. twice. wheres that same energy??? wtf

congrats on crossing the finishing line !

So much beyond even just Persephone is inconsistent as all hell, and it's what lends to my belief that LO kind of crumbled under the weight of its own success. Like, the comic was clearly only meant to be a self-indulgent Tumblr fluff piece in the beginning, and that's what it frankly excelled at being - only to suddenly expand into an actual franchise with merch deals, TV licensing, and most of all, actual real expectations from paying readers and Webtoons shareholders that it would pay off as something it was never meant to be in the first place.

Persephone is supposedly guilty about the Act of Wrath, but we never see a shred of guilt throughout the entire first season, not until the Act of Wrath is actually established and she's artificially given the opportunity to show guilt - and if anything, we see more scenes of her being vindictive and terrible to people that are lower class than her than we see of her actually being guilty over the AoW. She couldn't even accept the punishment she got, she acted like she was entitled to getting off without a single consequence and then when she did suffer consequences, she was crying purely over the fact she didn't get to tell Hades she loved him.

Persephone is supposedly blessed with so much beauty and intelligence that she had a secret 'affair' with Ares while she was in the Mortal Realm and she has an entire CVS receipt of accomplishments prior to moving to Olympus - and yet she doesn't know what "sleeping to the top" means and constantly acts dumber than a doorknob.

Hades is supposedly a cruel and cunning womanizer who misinterprets Persephone's requests for help as a booty call and warns her that the neighbors might see them fucking in the kitchen, but then as soon as they're in a relationship and actually interested in having sex with each other, he's bumbling like a high school boy who can't even say the word "penis" without giggling.

Even entire scenes tend to oppose each other - like in the finale, when Hades looks depressed and gets a phone call from Hecate checking in on him, which is juxtaposed against flashbacks that Persephone has to spend a few months out of the year in the Mortal Realm, only to then cut back to Hades who's about to meet up with Persephone because it's time for her to return to the Underworld. Why was he so depressed as if she had just left him ??? 😭😆

And why in the world was the comic's final messaging - the "moral of the story" if you could call it that - summed up and exposited as "true love really does exist"??? Isn't there already a couple in the comic who have gone out of their way to prove that? (cough Eros and Psyche cough) What about Poseidon and Amphitrite? How can you have a plotline that resolves itself as "true love really exists!" in a world where the gods of love also already exist?

I could go on and on and on about all the different examples of inconsistent writing, the plot points and character traits that contradict each other - sometimes as soon as within the same episode they're introduced - and all the loose threads that were left unanswered and, at best, handwaved away by Rachel in the finale's Q&A session.

But the story really does speak for itself just by reading it, especially when there aren't stretches of time between each episode to blur the details. When you can read each episode back to back, you really do notice all the inconsistencies even more, and the lack of explanations for each of them.

Not that I'm planning on reading it, but here's hoping Rachel at least prepares herself better for the next comic she plans on making. She's said in the past that she "thrives in the chaos" but I really hope she knows deep down that she bit off more than she could chew and that chaos was more so to her detriment than her benefit. No one fell in love with LO with the expectation that it would turn into a cutesy Disney film or a hyped up Marvel blockbuster - we just wanted the intimate storytelling that revolved around Hades and Persephone, the storytelling that was lost as the story grew too big for itself to function.

#lore olympus critical#anti lore olympus#anon ask me anything#lo critical#ask me anything#anon ama#ama

94 notes


Text

Writing Notes: Hard Science Fiction

Hard science fiction - a subgenre of science fiction writing that emphasizes scientific accuracy and precise technical detail as part of its world-building.

When these science fiction stories touch on real-world topics like space travel, earth science, computer advancements, and artificial intelligence, they do so with an eye toward accuracy.

Hard science fiction also goes by shortened names like “hard sci-fi” and “hard SF.”

Prominent awards in the hard science fiction space include the Hugo Award, the Arthur C. Clarke Award, the Nebula Award, and the Jupiter Award.

Hard sci-fi contrasts with another type of science fiction writing that can be called soft science fiction.

Soft sci-fi novels and movies deal with topics that do not comport with science as we understand it.

For instance, the recreation of dinosaurs in Jurassic Park and its sequels does not fully overlap with scientific reality, although the book's explanation of DNA technology is largely accurate.

Other scientifically unfeasible topics like time travel or faster-than-light spaceships similarly qualify a book as soft sci-fi.

Examples of Hard Science Fiction Books

There is no shortage of science fiction novels, short stories, movies, and TV shows that lean into hard science as part of their world-building. Explore some of the influential hard science fiction books of the past few decades.

Foundation by Isaac Asimov (1951): This book, which kicked off a long series by Asimov, takes place in a distant future but is anchored by concepts in real-world mathematics, holographs, and psychology.

The Sentinel by Arthur C. Clarke (1951): Clarke adapted his short story into the Stanley Kubrick-directed cinematic space opera 2001: A Space Odyssey. Between the initial short story and the movie, Clarke deals with topics of evolution, space travel, starships, artificial intelligence, and the first contact with alien life forms.

Mission of Gravity by Hal Clement (1953): This early standard of hard science fiction novels takes place on a disk-shaped planet called Mesklin where human life cannot survive, but human-made probes can encounter aliens. As Clement describes this alien new world, he grounds his science in proven truths about chemistry and physics.

The Andromeda Strain by Michael Crichton (1969): Crichton is best known for Jurassic Park, but in the first novel published under his own name, he tackled the subject of a deadly extraterrestrial microorganism and the scientists racing to contain it.

Tau Zero by Poul Anderson (1970): This hard science fiction novel is a thrill ride in both the figurative and literal sense, as it imagines a near-light-speed vehicle that careens out of control. While most physicists strongly doubt a future where humans could travel anywhere near the speed of light, Anderson does anchor a great deal of the novel in real-world physics.

Ringworld by Larry Niven (1970): Niven's novel is about aliens and massive artificial worlds, but it is based on the hard science of Newtonian physics and Mendelian biology.

Rendezvous with Rama by Arthur C. Clarke (1973): Clarke followed up his work on 2001: A Space Odyssey by penning this tale where a strange spaceship called Rama enters the solar system. The book is packed with scientifically accurate descriptions of mechanics and astrophysics.

Dragon's Egg by Robert L. Forward (1980): This hard sci-fi book drew rave reviews from genre masters Isaac Asimov and Arthur C. Clarke for its tale of neutron stars and extraterrestrial life.

Neuromancer by William Gibson (1984): Gibson’s dystopian novel, which bears some resemblance to Blade Runner (which came out two years prior), deals with computer science, cyberpunk culture, and the rise of artificial intelligence.

Red Mars by Kim Stanley Robinson (1992): This novel, which kicks off Robinson's Mars trilogy, deals with the colonization of Mars in a world where Earth has become uninhabitable. Robinson starts with a foundation of real-world astrophysics and geopolitics and then builds from there.

A Fire Upon the Deep by Vernor Vinge (1992): This dystopian novel about future wars with alien races bases its science on real-life military technology, artificial intelligence, astrophysics, and cognitive science.

Starfish by Peter Watts (1999): This hard science fiction novel takes place under the sea. It deals with marine biology and bioengineering but also psychology and mental illness, topics that set it apart from many other hard sci-fi works.

Revelation Space by Alastair Reynolds (2000): Reynolds holds a Ph.D. in astronomy and he uses it to great effect in this interstellar thriller that combines the quiet dread of Arthur C. Clarke with the astronomical wonder of Carl Sagan.

Schild's Ladder by Greg Egan (2002): Egan bases this novel on the work of real-life mathematician Alfred Schild and his contributions to differential geometry.

The Three-Body Problem by Cixin Liu (2008): This novel by sci-fi author Cixin Liu takes its title from a real concept in orbital mechanics. It imagines a near future where the human race awaits an interstellar invasion from beyond our solar system. Its characters discover a planet that belongs to three different suns, and while this may not be scientifically possible, it is still grounded in elements of real-world physics.

The Martian by Andy Weir (2012): Weir's debut novel, which was later the basis of a Hollywood blockbuster, involves an astronaut stranded on Mars. His survival techniques—and the techniques of his earthbound comrades trying to rescue him—are grounded in real-world scientific facts and discoveries.

Source ⚜ More: References ⚜ Writing Resources PDFs

#science fiction#writing notes#writeblr#literature#writers on tumblr#writing reference#dark academia#spilled ink#writing prompt#creative writing#writing inspiration#writing ideas#light academia#writing resources

70 notes


Text

If you’re intent on using tools like ChatGPT to write, I’m probably not going to be able to convince you not to. I do, however, want to say one thing, which is that you have absolutely nothing to gain from doing so.

A book that has been generated by something like ChatGPT will never be the same as a book that has actually been written by a person, for one key reason; ChatGPT doesn’t actually write.

A writer is deliberate; They plot events in the order that they have determined is best for the story, place the introduction of certain elements and characters where it would be most beneficial, and add symbolism and metaphors throughout their work.

The choices the author makes are what create the book; It would not exist without deliberate actions taken over a long period of thinking and planning. Everything that’s in a book is there because the author put it there.

ChatGPT is almost the complete opposite to this. Despite what many people believe, humanity hasn’t technically invented Artificial Intelligence yet; ChatGPT and similar models don’t think like humans do.

ChatGPT works by scraping the internet to see what other people and sources have to say on a given topic. If you ask it a question, there’s not only a good chance it will give you the wrong answer, but that you’ll get a hilariously wrong answer; These occurrences are due to the model pulling from sources like Reddit and other social media, often from comments meant as jokes, and incorporating them into its database of knowledge.

(A major example of this is Google’s new “AI Overview” feature; Look up responses and you’ll see the infamous machine telling you to add glue to your pizza, eat rocks, and jump off the Golden Gate Bridge if you’re feeling suicidal)

Anything “written” by ChatGPT, for example, would be cobbled together from multiple different sources, a good portion of which would probably conflict with one another; If all you’re telling the language model to do is “write me a book about [x]”, it’s going to pull from a variety of different novels and put together what it has determined makes a good book.

Have you ever read a book that you thought felt clunky at times, and later found out that it had multiple different authors? That’s the best comparison I can make here; A novel “written” by a language model like ChatGPT would resemble a novel cowritten by a large group of people who didn’t adequately communicate with one another, with the “authors” in this case being multiple different works that were never meant to be stitched together.

So, what do you get in the end? A not-very-good, clunky novel that you yourself had no hand in making beyond the base idea. What exactly do you have to gain from this? You didn’t get any practice as a writer (To do that, you would have to have actually written something), and you didn’t get a very good book, either.

Writing a book is hard. It’s especially hard when you’re new to the craft, or have a busy schedule, or don’t even know what it is you want to write. But it’s incredibly rewarding, too.

I like to think of writing as a reflection of the writer; By writing, we reveal things about ourselves that we often don’t even understand or realize. You can tell a surprising amount about a person based on their work; I fail to see what you could realize about a prompter when reading a GPT-generated novel besides what works it pulled from.

If you really want to use ChatGPT to generate your novel for you, then I can’t stop you. But by doing so, you’re losing out on a lot; You’re also probably losing out on what could be an amazing novel if you would actually take the time to write it yourself.

Delete the app and add another writer to the world; You have nothing to lose.

#writing#writeblr#writer#writers#aspiring author#writers on tumblr#author#writing advice#chatgpt#anti chatgpt#ai#anti ai#fuck ai#generative ai#anti genai#anti generative ai#genai#anti gen ai#gen ai#ai writing#anti ai writing#fuck generative ai#fuck genai#long post#ams

56 notes


Note

deep processing layer acts a lot like an "organic algorithm" based off the patterns, and I think slime molds could be a good comparison alongside the conways game of life and bacterial colony simulations. either way, its like a math process but organic...

Oh yeah definitely. It could be a massive array of bioluminescent microorganisms that behave very similar to a cellular automaton, or a similar "organic algorithm" like you said.

(Left: Deep Processing, Right: Conway's Game of Life)
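For anyone curious what a cellular automaton actually does, here is a minimal Python sketch of one generation of Conway's Game of Life. The function name `life_step` and the set-of-coordinates grid are just illustrative choices, not taken from any particular library, and this says nothing about how Deep Processing itself would work.

```python
from collections import Counter

def life_step(live):
    """Advance one generation. `live` is a set of (x, y) cells that are alive."""
    # Count how many live neighbours every nearby cell has.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A cell is alive next turn if it has exactly 3 live neighbours,
    # or if it has 2 live neighbours and is already alive.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}

# A "blinker": three cells in a row that flip between horizontal and vertical.
blinker = {(0, 1), (1, 1), (2, 1)}
print(sorted(life_step(blinker)))  # [(1, 0), (1, 1), (1, 2)]
```

Each cell only ever looks at its immediate neighbours, which is why the comparison to a colony of simple organisms works so well: the large patterns come from purely local rules.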

Slime molds in particular behave like a heuristic: they "search" for a good solution rather than working out a provably optimal one. It may not be the "best" solution, but often it can come close. One of the most commonly cited examples of using slime molds in this way is in the optimization of transit systems:

Physarum polycephalum network grown in a period of 26 hours (6 stages shown) to simulate greater Tokyo's rail network (Wikipedia)

Another type of computing based on biology is the neural network, a type of machine learning model. These models are loosely based on the way neurons are arranged in the brain: mathematical nodes are connected in layers, much as neurons are connected by synapses.

[1] [2]
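As a rough illustration of "nodes connected in layers", here is a tiny Python sketch of a forward pass through a layered network. The weights and biases are made-up numbers chosen only for the example, and the helper names `layer` and `forward` are hypothetical; real networks learn their weights from data.

```python
import math

def layer(inputs, weights, biases):
    """One fully connected layer: each node sums its weighted inputs,
    adds a bias, and squashes the result with a sigmoid."""
    return [
        1.0 / (1.0 + math.exp(-(sum(w * x for w, x in zip(row, inputs)) + b)))
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Feed the inputs through each layer in turn."""
    for weights, biases in layers:
        inputs = layer(inputs, weights, biases)
    return inputs

# Made-up example network: 2 inputs -> 3 hidden nodes -> 1 output node.
network = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]
print(forward([1.0, 0.0], network))
```

Every "connection" here is just one number in a weight row, which plays the role the synapse plays in the biological comparison.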

I know very little about this form of computation (the most I know about it is from the first few chapters of How to Create a Mind by Ray Kurzweil, a very good book about artificial intelligence which I should probably finish reading at some point), but I imagine the cognitive structure of iterators is arranged in a very similar way.

I personally think that the neuronal structure of iterators closely resembles networks of fungal mycelia, which can transmit electrical signals similar to networks of neurons. The connections between their different components might resemble a mycorrhizal network, the connections between fungal mycelia and plant roots.

Iterators, being huge bio-mechanical computers, probably use some combination of the above, in addition to more traditional computing methods.

Anyway... this ask did lead to me looking at the Wikipedia articles for a couple of different cellular automata, and this one looks a LOT like the memory conflux lattices...

54 notes

Note

honestly, the whole ai fight or disagreement thing is kinda insane. we’re seeing the same pattern that happened when the first advanced computers and laptops came out. people went on the theory that they’d replace humans, but in the end, they just became tools. the same thing happened in the arts. writing, whether through books or handwritten texts, has survived countless technological revolutions from ancient civilizations to our modern world.

you’re writing and sharing your work through a phone, so being against ai sounds a little hypocritical. you might as well quit technology altogether and go 100 percent analog. it’s a never ending cycle. every time there’s a new tech revolution, people act like we’re living in the terminator movies even though we don’t even have flying cars yet. ai is just ai and it’s crappy. people assume the worst but like everything before it it will probably just end up being another tool because people is now going to believe anything, nowadays.

Okay so...no. It's never that black and white. Otherwise I could argue that you might as well go 100% technological and never touch grass again. Which sounds just as silly. There are many problems with AI and it's more than just 'robots taking over'. It's actually a deeper conversation about equity, ethics, environmentalism, corruption and capitalism. That's an essay I'm not sure a lot of people are willing to read, otherwise they would be doing their own research on this. I'll sum it up the best I can.

DISCLAIMER As usual I am not responsible for my grammar errors, this was written and posted in one go and I did not look back even once. I'm not a professional source. I just want to explain this and put this discussion to rest on my blog. Please do your own research as well.

There are helpful advancements and there are harmful advancements. I would argue that AI falls into the latter for a few reasons.

It's not 'just AI', it's a tool weaponised for more harm than good: Obvious examples include deep fakes and scamming, but here's more in case you're interested.

A more common nuisance is that humans now have to prove that they are not AI. More specifically, writers and students are at risk of being accused of using AI when their work reads as more advanced than basic writing criteria. I dealt with this just last year actually. I had to prove that the essay I dedicated weeks of my time researching, writing and gathering citations for was actually mine.

I have mutuals that have been accused of using AI because their writing seems 'too advanced' or whatever bs. Personally, I feel that an AI accusation is more valid when the words are more hollow and lack feeling (as AI ≠ emotional intelligence), not when a writer 'sounds too smart'.

"You're being biased."

Okay, here is an unbiased article for you. Please don't forget to take note of the fact that the negative is all stuff that can genuinely ruin lives and the positive is stuff that makes tasks more convenient. This is the trend in every article I've read.

Equity, ethics, corruption, environmentalism and capitalism:

Maybe there could be a world where AI is able to improve and truly help humans, but in this capitalistic world I don't see it being a reality. AI is not the actual problem in my eyes, this is. Resources are finite and lacking amongst humans. The wealthy hoard them for personal comfort and selfish innovations leading to more financial gain, instead of sharing them according to need. Capitalism is another topic of its own and I want to keep my focus on AI specifically so here are some sources on this topic. I highly recommend skimming through them at least.

> Artificial Intelligence and the Black Hole of Capitalism: A More-than-Human Political Ethology

> Exploiting the margin: How capitalism fuels AI at the expense of minoritized groups

> Rethinking of Marxist perspectives on big data, artificial intelligence (AI) and capitalist economic development

I want to circle back to your first paragraph and just dissect it really quick.

"we’re seeing the same pattern that happened when the first advanced computers and laptops came out. people went on the theory that they’d replace humans, but in the end, they just became tools."

One quick Google search gives you many articles explaining that history and why the comparison does not apply to this discussion. I think this was more a case of inexperience with the internet and online data. The generations since are more experienced/familiar with this sort of technology. You may have heard of 'once it's out there it can never be deleted' pertaining to how nothing can be deleted off the internet. I do not think you're stupid anon, I think you understand this and how dangerous it truly is. Especially with the rise in weaponisation of AI. I'm going to link some Quora and Reddit posts (horrible journalism ik but luckily I'm not a journalist), because taking personal opinions from people who experienced that era feels important.

> Quora | When the internet came out, were people afraid of it to a similar degree that people are afraid of AI?

> Reddit | Were people as scared of computers when they were a new thing, as they are about AI now?

> Reddit | Was there hysteria surrounding the introduction of computers and potential job losses?

"the same thing happened in the arts. writing, whether through books or handwritten texts, has survived countless technological revolutions from ancient civilizations to our modern world."

I think this is a logical guess based on pattern recognition. I cannot find any sources to back this up. Either that or you mean to say that artists and writers are not being harmed by AI. Which would be a really ignorant statement.

We know about stolen content from creatives (writers, artists, musicians, etc) to train AI. Everybody knows exactly why this is wrong even if they're not willing to admit it to themselves.

Let's use writers for example. The work writers put out there is used without their consent to train AI for improvement. This is stealing. Remember the very recent issue of writers having to state that they do not consent to their work being uploaded or shared anywhere else because of those apps stealing it and putting it behind a paywall?

I shouldn't have to expand further on why this is a problem. Everybody knows exactly why this is wrong even if they're not willing to admit it to themselves. If you still want to argue, it's not going to be with me, but here are some sources to help you out.

> AI, Inspiration, and Content Stealing

> ‘Biggest act of copyright theft in history’: thousands of Australian books allegedly used to train AI model

> AI Detectors Get It Wrong. Writers Are Being Fired Anyway

"you’re writing and sharing your work through a phone, so being against ai sounds a little hypocritical. you might as well quit technology altogether and go 100 percent analog."

...

"it’s a never ending cycle. every time there’s a new tech revolution, people act like we’re living in the terminator movies even though we don’t even have flying cars yet."

Yes, there is usually a general fear of the unknown. Take COVID for example and how people were mass buying toilet paper. The reason this statement cannot be applied here is that there is evidence of AI being an actual issue. You can see AI's effects every single day. Think about AI generated videos on Facebook (from harmless hope core videos to propaganda) that older generations easily fall for. With recent developments, it's actually becoming harder for experienced technology users to differentiate between the real and fake content too. Do I really need to explain why this is a major, major problem?

> AI-generated images already fool people. Why experts say they'll only get harder to detect.

> Q&A: The increasing difficulty of detecting AI- versus human-generated text

> New results in AI research: Humans barely able to recognize AI-generated media

"ai is just ai and it’s crappy. people assume the worst but like everything before it it will probably just end up being another tool because people is now going to believe anything, nowadays."

AI is man-made. It only knows what it has been fed from us. Its intelligence is currently limited to what humans know. And it's definitely not as intelligent as humans because of the lack of emotional intelligence (which is a lot harder to program because it's more than math, repetition and coding). At this stage, I don't think AI is going to replace humans. Truthfully I don't know if it ever can. What I do know is that even if you don’t agree with everything else, you can’t disagree with the environmental factor. We can't really have AI without the resources to help run it.

Which leads us back to: finite number of resources. I'm not sure if you're aware of how much water and energy go into running even generative AI, but I can tell you that it's not sustainable. This is important because we're already in an irrevocable stage of the climate crisis and scientists are unsure if Earth as we know it can last another decade, let alone century. AI does not help in the slightest. It actually adds to the crisis, we're just uncertain to what degree at this point. It's not looking good though.

I would not be against AI being used as a tool if it were sustainable. You can refute all my other arguments, but you can't refute this. It's a fact and your denial or lack of care won't change the outcome.

My final and probably the most insignificant reason on this list but it matters to me: It’s contributing to humans becoming dumber and lazier.

It's no secret that humans are declining in intelligence. What makes AI so attractive is its ability to provide quick solutions. It gathers the information we're looking for at record speed and saves us the time of having to do the work ourselves.

And I suppose that is the point of invention, to make human life easier. I am of the belief that too much of anything is never good, though. Too much hardship is not good but neither is everything being too easy. Problem solving pushes intellectual growth, but it can't happen if we never solve our own problems.

Allowing humans to believe that they can stop learning to do even basic tasks (such as writing an email, learning to cite sources, etc) because 'AI can do it for you' is not helping us. This is really just more of a personal grievance and therefore does not matter. I just wanted to say it.

"What about an argument for instances where AI is more helpful than harmful?"

I would love for you to write about it and show me because unfortunately in all my research on this topic, the statistics do not lean in favour of that question. Of course there are always pros and cons to everything. Including phones, computers, the internet, etc. There are definitely instances of AI being helpful. Just not to the scale or same level of impact of all the negatives. And when the bad outweighs the good it's not something worth keeping around in my opinion.

In a perfect world, AI would take over the boring corporate tasks and stuff so that humans can enjoy life– recreation, art and music– as we were meant to. However in this capitalist world, that is not a possibility and AI is killing joy and abolish AI and AI users DNI and I will probably not be talking about this anymore and if you want to send hate to my inbox on this don't bother because I'll block your anon and you won't get a response to feed your eristicism and you can never send anything anonymous again💙

66 notes

Text

Jasper's Guide To Energyforms

The various categories that any given energyform may fall under.

There are innumerable names and ideas for energyforms. This will merely go over my own categorizations and definitions of them. These are not universal, but they’ll hopefully provide a good starting point for your own understanding! Ultimately, this is all my own understanding, and all “sources” are meant to encourage you to look around and explore this subject on your own!

What Is An Energyform?

An energyform is any entity made of energy, often your own. I use the term as the catch-all category for servitors, thoughtforms, and so forth, because having a catch-all term for them is very helpful to me. The term is derived from “manaform” from Magic: The Gathering, which is a being that is made of pure mana. [1] I altered it to energyform for my own practice.

Types Of Energyforms

Egregore: An energyform created by an entire group of magical practitioners, typically four or more. Three or fewer magical practitioners working together on an energyform will still produce a servitor. An example of an egregore is GOFLOWOLFOG. [2]

Pop Culture Entities: One theory for the validity of pop culture magic is that the pop culture entities – including deities, heroes, spirits, and more – are egregores. This is not a universal approach to pop culture magic.

Godform: The image or incarnation of a god. [3] Not quite an energyform of its own, but an energyform can be made to be a godform or to channel parts of a god’s power. [4] A godform energyform can fall under any other category.

Living Spell: An extremely basic energyform that is only a step above the basic spell by way of being given a form to deliver the said spell to its intended target. These typically fade away or are automatically destroyed once they accomplish their goal.

Servitor: A servitor is the “default” type of energyform, lacking in “sentience” and being focused on one or a handful of very specific goals. Extremely simple to make and often look more like “people” (humans, animals, demons/angels, et cetera) than a living spell. Most servitors are best described as magical computer programs.

Viral Servitors [5]: Servitors that have automatic ways built into them to copy themselves to continue doing their job so that there are many of them. One example of a viral servitor is Fotamecus. [6]

Sigil: Most sigils are symbols created for particular purposes and are more often connected to energyforms rather than being energyforms in and of themselves. However, certain animist approaches [7] may see sigils as spirits in and of themselves!

Chao-Mines [5], Energy Store-House Entities [4], Linking Sigils [8]: Functionally the same thing, these are particular sigils or energy points that you can use to draw energy from a place, another energyform, or a thing.

Hypersigils: The term was coined by Grant Morrison and elaborated upon by Aidan Wachter. It is a work of art, such as a novel, journal, or piece of art, that functions as an elaborate sigil. [9]

Sigil Shoals: A collection of sigils that are led by one that is guaranteed to happen, thus forcing the rest of them to come true as well. [10]

Thoughtform: A thoughtform is a “sentient” energyform, though the definition of “sentient” is up for debate. Typically, thoughtforms can think for themselves and do not need to be specifically directed around obstacles keeping them from accomplishing their goals. Thoughtforms are often compared to the type of “artificial intelligence” found in science fiction.

Citations, Resources, And Further Reading

[1] “Mana” on the MTG wiki, compiled by Fandom users, through a Breezewiki mirror: https://antifandom.com/mtg/wiki/Mana

[2] “GOFLOWOLFOG” on the Paranormal wiki, compiled by para.wiki users: https://para.wiki/w/GOFLOWOLFOG

[3] “Godform” on Wiktionary: https://en.wiktionary.org/wiki/godform

[4] Creating Magickal Entities: A Complete Guide to Entity Creation by David Michael Cunningham with contributions by Taylor Ellwood and T. Amanda R. Wagener. “Servitors as Links” specifically can be found here: https://jasper-grimoire.tumblr.com/post/763362873038782464 “Energy Store-House Entities (ESHEs)” specifically can be found here: https://jasper-grimoire.tumblr.com/post/763362842589151232

[5] Condensed Chaos: an introduction to chaos magic by Phil Hine. “Chao-Mines” specifically can be found here: https://jasper-grimoire.tumblr.com/post/763430369816182784

[6] “Fotamecus” by Fenwick Rysen: www.chaosmatrix.org/library/chaos/texts/fotamec1.html

[7] “Sigils: Scribbles to Forget or Spirits to Remember” by witchofsouthernlight on Tumblr: https://jasper-grimoire.tumblr.com/post/762793274388938752

[8] “Create Your Own Linking Sigil” by Jareth Tempest on The Shadow Binder: https://theshadowbinder.com/2019/02/22/create-your-own-linking-sigil/

[9] “Hypersigil” by writingdirty on Tumblr: https://jasper-grimoire.tumblr.com/post/710434325688123392 “Hypersigil” by windvexer on Tumblr: https://jasper-grimoire.tumblr.com/post/700376884034355200/hello-chicken-can-you-share-your-thoughts-on

[10] “Sigil Shoaling: A Chaos Magic Tool” by Cristina Farella on a personal website: https://www.cristinafarella.com/astrological-magic-tools/sigil-shoaling-a-chaos-magic-tool “Sigil Shoals and Robofish” by Mahigan on Kitchen Toad: https://www.kitchentoad.com/blog/sigil-shoals-and-robofish

“Jasper’s Servitor/Thoughtform Resource Post”, compiled on Tumblr by jasper-pagan-witch: https://jasper-pagan-witch.tumblr.com/post/762988504970100736/jaspers-servitorthoughtform-resource-post

93 notes

Text

A Quick Chat About AZ

Which won't be quick at all.

I've talked a little about coming to understand Lysandre, and now I'd like to talk about AZ, who is still somewhat of a mystery to me. We know of his backstory, but what I'm missing is what defines his personality. We don't speak with him enough in game to know it, so I had to do some digging around so I could form some assumptions. Most of this post will be me using Canon and Non-Canon [But still official] sources to get a grasp on what kind of man AZ is, just in case we don't get more information about him in Legends ZA.

-I want to know what he's like, because I want to make more artwork with him. ^^'-

Before I get into what I've found, I want to first talk about a character who I think is clearly defined, by his sheer simplicity. That's right,

It's Larry.

Larry, for example, has very clear likes and dislikes. He's an overworked, professional, brooding, middle-aged man, who has respect for rules and simplicity. He dresses plainly, and uses relatively ordinary or normal type pokemon. He's vocal and assertive of his preferred lifestyle, to the point of stubbornness [which is only thwarted by his desire for his paycheck]. He also loves food and the pursuit of an extraordinary meal. Despite his introvert-like demeanor, he's shown to be friendly, deeply contemplative, and hiding a quirky, dad joke-like sense of humor.

With all of this, I can extrapolate what kind of decisions Larry would make if I were to put him in a new non-canonical situation. And, I can also define where I'd like to bend or add on to his personality in my own form of fandom play.

--

Now, back to the main topic. All of this is to be taken with a grain of salt. I also apologize in advance if I hop around a little between sources.

AZ, I can only assume, is underutilized because of his grand age. 3,000 years old means 3,000 years of knowledge, or a direct eyewitness to history. He wandered in search of his best friend, gradually witnessing the world transition from ancient to modern. Chances are, he can answer regional mysteries that Game Freak wouldn't want to touch upon. So, he's here one moment, and then gone the next after serving his key purpose in the game narrative.

Which brings me to all of the other official items I looked into and some thoughts on his intelligence. I watched his appearance in the Pokemon Generations Episode 18: The Redemption. [ no one asked but i think i prefer the japanese voice much more ] And I was also given a data bank to look through the Pokemon XY game script.

AZ built the ultimate weapon. Though, if he had any assistance with it, it's unspecified. IF I RECALL CORRECTLY, in the recent XY development leaks, Sycamore, Lysandre, and AZ were all the same character, before the role was properly divided into three. Still, I'm under the impression that AZ wasn't just a king, but a well-respected researcher.

There’s research material on the bookshelf [In Lysandre Labs] “The king was proud of the technology he had used to bring Kalos prosperity, but he couldn’t help but use it in a way that had never been intended... AZ, the man who was king, disappeared.”

I think, AZ being keenly intelligent, is an easy assertion to make. He could build and operate complicated machinery, and probably still can. There are even more side notes I can make about his more complex understanding of pokemon. I don't think I have the clarity of mind to pull out even more examples, so I'll use just this one:

AZ does have a Golurk of unspecified age on his small team. I wonder...is it possible he built his Golurk himself? There are many Pokedex entries stating the creation of, and ancient use of pokemon in these old cities. AZ appears to understand the infinite energy that dwells within pokemon well enough to contribute to the society he ruled over. I don't think 'artificial' pokemon construction is beyond his understanding, if he knew well enough that he could bring one back to life.

---

Moving along.

After building the weapon to revive his friend:

"...his rage still had not subsided."

I absolutely love this flashback sequence. I love how they portrayed the rawness of AZ's emotions. The unnerving look in his eye as his horrific choice forms. You get the sense that he truly did just...snap.

Which Makes Me Wonder: How tethered is AZ to his emotions? Is he like Lysandre, who appears to allow himself to freely feel his own anger and frustration, letting it drive him to obsession? Does he have a slight sense of entitlement, too? Entitled to take the world's problems and other lives in his hands. If so, did he leave that wicked part of himself behind?

AZ is royalty. He's a former -literal- king during a time of war, unlike Lysandre who's a more metaphorical king during a time of general peace. That may be an excuse for him so readily taking on harrowing, critically important decisions. I wonder if this was the most difficult point in his reign. That aside, AZ doesn't seem to be concerned with that title while living in the modern day.

He doesn't demand that he should be treated like his former title. I'm going to make another assumption that he has let that go a long time ago. He struggles with being forgiven, maybe even struggles with caring about himself. He's traded his old royal regalia, a robe, golden arm cuffs, and golden neck piece, for old, worn, patchy clothes. He doesn't care about his royalty, or his clothes, and AZ never makes any mention that I can remember about his own height.

None of it appears to matter to him. Only "where is she?"

---

Speaking of.

AZ's ability to hold on to hope is...something.

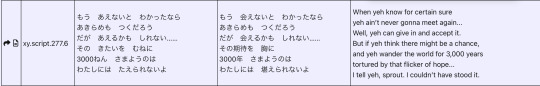

When yeh know for certain sure yeh ain’t never gonna meet again... Well, yeh can give in and accept it. But if yeh think there might be a chance, and yeh wander the world for 3,000 years tortured by that flicker of hope... I tell yeh, sprout. I couldn’t have stood it.

I don't think I could have stood it either. To not give up on his Floette for 3,000 years, to muscle through that torture until finally you meet again. What would you call the kind of 'grit' that would make you endure something like this? In the XY manga, he's plagued by nightmares of his past. He described his ordeal officially in the game as 'endless suffering'. Is it a certain kind of stubbornness? A kind of unconditional love? I'm not sure... AZ, in another one of my opinions, has got to be one of the series' most strong willed characters. You can't survive 3,000 years with weak resolve. He can't die of old age, but..well...

...

Despite the horrors he's capable of, he's got a gentle quality to him. I like the contrast, between a giant and a pokemon so delicate and tiny. I'm sure the juxtaposition of AZ and his Floette is purposeful, and in itself helps inform of his character.

This is from the Pokemon Adventure XY Manga, and isn't canonical, but...look at him. I found him greeting Trevor's Flabebe so sweet. He's respectful to the children also, and doesn't belittle them in the slightest.

His smile. He calls her beautiful, and she is! He has some stony expressions, but also some very softened ones in Anime, Game, and Manga. He hasn't lost his ability to smile after all this time. Which is nice...

OOF, I've been writing this for a long while, so I'll wrap things up. I can't trust myself to write a comprehensive summary, like Larry, at this time, but I hope to have one later. Again, I'm hoping Legends ZA will provide more before I start my true 'blorbo madness'.

Here are all of my assumptions in a list. AZ is:

Extraordinarily Intelligent, capable of making and operating dangerous technology. I believe he wasn't just a King, but a contributing engineer/ researcher.

Deeply emotional, allowing himself to openly cry, feel anger, and sorrow. Despite his intelligence, his emotions can cloud his judgement. THOUGH, he may have much more emotional maturity now. [ i find it interesting both he and lysandre are allowed to shed tears ]

Strong of will, or is a person of unwavering conviction.

Stern, somewhat of a languisher, but gentle.

That's all I have for now. Let me know if anyone else has thoughts!

76 notes

Text

Steve DeCanio, an ex-Berkeley activist now doing graduate work at M.I.T., is a good example of a legion of young radicals who know they have lost their influence but have no clear idea how to get it back again. “The alliance between hippies and political radicals is bound to break up,” he said in a recent letter. “There’s just too big a jump from the slogan of ‘Flower Power’ to the deadly realm of politics. Something has to give, and drugs are too ready-made as opiates of the people for the bastards (the police) to fail to take advantage of it.” DeCanio spent three months in various Bay Area jails as a result of his civil rights activities and now he is lying low for a while, waiting for an opening. “I’m spending an amazing amount of time studying,” he wrote. “It’s mainly because I’m scared; three months on the bottom of humanity’s trash heap got to me worse than it’s healthy to admit. The country is going to hell, the left is going to pot, but not me. I still want to figure out a way to win.”

Re-reading Hunter S. Thompson's 1967 article about Haight-Ashbury, I thought: "huh, this guy sounds like he's going places. I wonder whether he ever did 'figure out a way to win'?"

So I web searched his name, and ... huh!

My current research interests include Artificial Intelligence, philosophy of the social sciences, and the economics of climate change. Several years ago I examined the consequences of computational limits for economics and social theory in Limits of Economic and Social Knowledge (Palgrave Macmillan, 2013). Over the course of my academic career I have worked in the fields of global environmental protection, the theory of the firm, and economic history. I have written about both the contributions and misuse of economics for long-run policy issues such as climate change and stratospheric ozone layer protection. An earlier book, Economic Models of Climate Change: A Critique (Palgrave Macmillan, 2003), discussed the problems with conventional general equilibrium models applied to climate policy. From 1986 to 1987 I served as Senior Staff Economist at the President’s Council of Economic Advisers. I have been a member of the United Nations Environment Programme’s Economic Options Panel, which reviewed the economic aspects of the Montreal Protocol on Substances that Deplete the Ozone Layer, and I served as Co-Chair of the Montreal Protocol’s Agricultural Economics Task Force of the Technical and Economics Assessment Panel. I participated in the Intergovernmental Panel on Climate Change that shared the 2007 Nobel Peace Prize, and was a recipient of the Leontief Prize for Advancing the Frontiers of Economic Thought in 2007. In 1996 I was honored with a Stratospheric Ozone Protection Award, and in 2007 a “Best of the Best” Stratospheric Ozone Protection Award from the U.S. Environmental Protection Agency. I served as Director of the UCSB Washington Program from 2004 to 2009.

I don't know whether this successful academic career would count as "winning" by his own 1967 standards. But it was a pleasant surprise to find anything noteworthy about the guy at all, given that he was quoted as a non-public figure in a >50-year-old article.

82 notes

Text

you don’t hate Howard, you hate fatphobic tropes

Here at Mr Fart Powered Dot Com, I’m a long-time hater of the “fat best friend” trope and a long-time lover of jerkass characters, so I think I’m uniquely qualified to comment on this LOL

The biggest critiques I see of Howard are as follows: he’s gross, he’s stupid, he’s selfish, he’s lazy. Below the cut, I deconstruct each of these four criticisms not as faults of Howard, but faults of the writing, largely as a result of fatphobia.

These are all traits associated with the fat idiot trope, popularized by Homer Simpson and Peter Griffin. Think about any other character who possesses all of the above characteristics. Far more often than not, they’re a fat character. Plenty of non-fat characters possess any of those traits individually — selfishness, stupidity, laziness, and grossness are not exclusive to fat characters. Nor do they inherently make a character 'bad,' irredeemable, or otherwise unlikeable! But all too often, especially in dated media, we see this flimsy, weak writing apply to the fat villain...or the fat comic relief...or the fat best friend.

Howard falls victim to these ugly, annoying 'fat guy' tropes whenever the writing is in need of a cheap laugh, or when they need to make Randy look extra good. Howard does have unique, interesting traits, but they are painfully underutilized in exchange for role fulfillment as the comic relief.

Stupidity

Contrary to what the show wants us to believe, Howard is not a complete idiot. His intelligence may not be of the academic variety (and even this is debatable), but I would argue he is more clever than Randy. Of the two of them, Howard's got more common sense. Randy misinterprets almost every lesson the Nomicon gives him, while H quickly understands each riddle he gets the chance to know about. (See “a ninja’s choice must be chosen by his own choosing,” “don’t go in someone else’s house,” “when facing an unfamiliar foe, seek an unlikely ally.”)

You could argue against this point in Shloomp! There It Is, where he literally gets to see the lesson as it is presented in the Nomicon and doesn’t get it. But I’d argue that this was purposeful mischaracterization in order to further the plot, a point which will unfortunately recur in this essay. The writers care more about Howard as a tool than as a character, but instead of using the capabilities they build within him, they default to stereotypes.

Where conventional academics are concerned, we have one concrete example of his abilities: Howard is incredible at chess. It’s the iconic nerd game; it requires strategy, careful thinking, and the ability to predict your opponent’s moves. Who cares that he doesn't know the pieces' names? Who cares that he doesn’t abide by typical strategies? He can kick artificially-intelligent ass at the game, not to mention follow someone else's plays the way most people follow a football game.

And he's got street smarts that save Randy's ass on multiple occasions. He's more sociable, a better liar, and a quick thinker in stressful situations. Much of this particular point is pulled from the brain of @cunningweiner, who pointed out that Howard is really well-received by crowds (Heidi’s MeCast, the talent show, the Tummynator). Another interesting instance of this is Howard’s time as the Ninja: both the fake monster drill ninja, and the actual Ninja. He may not have accomplished his duties as a hero, but the onlookers Absolutely Ate Up his crowd work. He’s not the most physically willing guy around, but he knows how to appeal to an audience. His major flaw in remaining a well-liked public figure is that his ego gets real damn big, real damn fast. But he’s 15! If you blame a teenager for having empathy and esteem issues, I don’t know what to tell you.

Despite his emotional immaturity, Howard is wise beyond his years as a businessman. Before we move forward, I need to tell you: look at this section purely from a business standpoint. You have to forget morals, you have to forget standards, this is Disney XD meta and we are analyzing a man named Weiner, okay?

Okay. Howard embarks on a total of three business endeavors throughout this show, and each one is highly successful. Ninja Agent, weapon reseller, and McFist-o-plex manager. He embodied “work smarter, not harder” every time. Being an agent takes social skill and smooth talking, and clearly he appealed to a wide range of clients (not to mention earned their trust! What would you say if someone called you up and said “yeah, I manage Superman. Want him to appear in a commercial for you?”). Being a manager requires delegation skills and good memory. Reselling Ninja weapons is honestly just genius and I can’t believe he’s the first guy to do it.

Everyone around Howard, and Big H himself, views him as a dumbass. But time and time again, the episodes show us his mental capabilities! Imagine how much fun the writers could’ve had if they’d leaned on a lazy genius trope instead of a fat idiot.

Grossness

I don’t know about you guys, but I can’t think of a single thin character who relies on gross-out humor. Take, for example, Total Drama, a franchise with a bodily diverse cast and a heavy emphasis on gross-out humor. I mean, there’s an entire episode in the original season where every single character pukes onscreen. TD overall utilizes irreverent humor, but while grossness is a major player, it is not the only source of comedy.

And then you’ve got Owen, the only fat character in the original cast. His whole shtick is being fat, greedy, and nasty. Other characters will fart and burp and overeat — all things that Owen does frequently — but they also have other gags. Maybe they’re bitchy, or they’re geeky, or they’re a literal convict. Owen does not enjoy the luxury of character depth. He is only good for grossing out the audience. (Side tangent: Owen has notably made me laugh out loud a handful of times over the course of the four seasons he featured in. But guess what! Every single one of those laughs was begotten from a rare moment when, instead of farting or burping or eating something he shouldn’t, the writers stepped outside the ‘Owen zone’ and gave him a joke unrelated to his fatness. Fatphobic humor is truly a plague.)

I know I’m being a bit heavy-handed, but I want to emphasize how similar that is to RC9GN! Randy does schnasty shit too sometimes, but he gets to be funny in other ways. Grossness is Howard’s primary mode of comedy. During my first watch-through of the show, I remember being outraged at Howard’s tendency to eat Randy’s food, which, of course, was followed by digestion noises or farts. I was too angry to write down which episodes, but I counted four separate instances where they used that exact convention specifically to get Randy angry at Howard, thus catalyzing the episode’s storyline. (At some point I will have to go back and fact-check that, but we’re 900 words deep at this point and this has been in my drafts for over a month, so we move forward for now okay!!)

We do get to see flashes of other humor from Howard, especially into Season 2! His cleverness and apathy make for hilarious setups. But even these instances are undercut by something foul. An example that comes to mind is Fear Factor, a perfectly fine episode — one that I love quite a lot — except for the very last gag. Really? Howard gets to be normal-funny the entire episode, until the last minute? The idea that his biggest fear is running out of food literally only works because he is fat. Had this joke been given to any other character, it probably wouldn’t have even made it to storyboards. Even worse, if Howard had not been fat, this joke would never have been conceptualized in the first place. It is almost as if the writers are trying to hit a quota of gross-out jokes for Howard. At a certain point, my anger morphed to pure disappointment. That’s how disheartening it is to see.

Selfishness

Okay, Howard Weinerman is selfish. I'll give you that. But just because he's self-centered does not make him a bad person. May I bring to mind Gumball Watterson, Marcy Wu, Louise Belcher? All are textbook examples of selfish characters, and frequently act in their own best interest, but are ultimately good people. I mention them as proof that characters can have negative defining traits without sacrificing the audience’s sympathy.

Here's where I really get frustrated with RC9GN’s writing... They want to portray Howard as a jerk with a heart of gold — such as in Debbie Meddle — but they always undercut his few selfless moments with a gross-out gag, or a rude offhand comment, usually directed at Randy. Sometimes, Randy will reciprocate, in which case I give it a pass. There, the grossness or general assholery showcases their friendship, instead of putting Howard down for a stale laugh.

But like I said, that’s the ‘sometimes.’ The ‘often’ is every time we see him almost embody the ‘heart of gold’ part of his attempted archetype, only for it to be thrown out the window for a lame gag. A specific example is in “Bro Money Bro Problems,” where Howard has cash to spare for once. He immediately opts to spend it on Randy!... until Randy shloomps into the Nomicon, then comes out to find that Howard spent everything he had on the Food Hole’s dinner menu. Sure, this was used to set the rest of the episode in motion. They run out of money, but they need more, so they go out and sell ninja weapons. But here’s the thing: for the rest of the episode, Howard spends his money on both himself and Randy, rather than just himself, effectively making that dinner menu joke inconsistent with his characterization.

“Well how else would they set the episode in motion?” They could spend it all on arcade games. Or they spend it all at the boardwalk both times. OR, they are just excitable teenagers who realize, hey, this shit is lucrative! Let’s go get rich! Boom. Fixed your episode, fixed your Howard, fixed your fatphobia.

Laziness

Over and over again, the show tries to tell us that Howard is a lazy piece of shit. Other characters regard him as such, and honestly, so does Howard himself. But I would argue that he is no lazier than your average teenager — not to mention, no lazier than Randy! The difference is that for Howard, the writers intertwine his laziness with his alleged stupidity. They try to convince the audience that Howard is too stupid to care what’s going on.

However, this trait differs from the other three, because I think this one manages to give him depth. Or at least, in my heart of hearts, it has the potential to do so. This characteristic lends itself to Howard’s most clever jokes, I think, because ultimately:

Howard is capable, but apathetic.

From the earliest episodes, it is established that he aims for minimum effort, maximum benefit. There’s the bit where Randy asks Howard to come up with the plan for once, and they both laugh at the idea of Howard doing the heavy lifting. Or even all the way into “Mort-al Kombat,” he says people are ‘really handing him the answers today’ when Randy puts in the work to get Howard ungrounded.

But just because Howard prefers not to do any work, doesn’t mean he won’t! And when he does put in effort, the results show that he is damn good at what he does. His time as Le Beret more than proves this point: from his ability to work under the radar, to the plans he forms, to the knowledge he has about Mort’s job & McFist Industries that allows him to get all the cool equipment he uses. We also see his skills and capability in “Debbie Meddle” (the ninja dummy), “Viva El Nomicon” (learning Spanish quickly), “Secret Stache” (commitment to the bit), “The Ninja Identity/Supremacy,” and more.

He very much operates under the mindset of ‘work smarter, not harder.’ He’ll get the job done if he has to. He’ll excel at the job if it benefits him. This is a really interesting character mechanic that would have been so much fun to explore. Like I said so many times above, though, the writers most often choose to undercut his abilities for the sake of a joke at his expense.

Conclusion

Howard, in comparison to Randy, is obviously a lot harder to root for. Overall, Randy is a more conventional character with conventional flaws. Like most duos in media, the sidekick juxtaposes the hero — I would even argue that Howard, in some ways, is Randy in reverse. Randy is highly moral, but still has a lot of learning to do skill-wise; Howard is already extremely capable, but also very amoral. Because of this, the narrative places Randy at a higher value than Howard — which, yknow, fair enough! He is the protag, and that’s a great setup for a protagonist. But simply by virtue of being fat, Howard is not treated with the same level of respect as other sidekick/best friend characters.

For all his quirks and flaws, Howard is not a supremely unique character. His basic core aligns with so many other characters. But because the writers lean on his fatness, instead of leaning into his potential and his complexities, it is much harder to root for him — and it strips him of originality. I love this show with all my heart, but I would be lying if I said I didn’t mind the way it treated Howard. He had so much potential, even as the show was airing, and I will forever be upset that the crew squandered it on fatphobic tropes.

#howard weinerman#rc9gn#randy cunningham 9th grade ninja#character analysis#rc9gn meta#also I HAAAATED howard for most of s1 it took me a long time to warm up to him#trust that I have really mulled this over#this isnt randy hate either btw. dont anyone go putting words in my mouth#UPDATE: dudes…..I’ve been working on this for over two months now#on and off#and I’m STILL not happy w it 😭😭#but I have GOT to post it so. PLS lmk ur thoughts ur comments ur questions….#I loooove ninja meta. teehee#ninj-originals

74 notes


Note

What's your guys' opinion on artificial intelligence? I wanna know because I have strong opinions on it and I need to hear your opinions too 👻

JF: at first it was equal parts fascination and annoyance, and it hasn't changed too much. Of course this is early days, and ultimately they will probably replace our relatives with AI, but right now it seems very useful for coders, problematic for scholarship, and--based on some clearly AI-generated articles I've tripped over in recent days--capable of the dullest, most anodyne prose writing imaginable.

Like everyone else, I am very curious what AI-generated art or music could be. While I don't think very much about plagiarism in general, I have noticed that the best examples seem to be generated out of the most narrow prompts (veering closest to simply reshuffling the quite specific creative decisions found in pre-existing artists' work). Just seems a little weak as an artistic statement. But right here is where some folk--and folks I know--have a very negative response, because they recognize how directly derivative the output is, and feel like it's just an escalation of the wholesale theft of material introduced by the internet so long ago (and that is another too-long conversation).

On a personal level--I have experimented with inputting short bits of lyrics into these free "song-generating" sites, and found that no matter what prompts were given for the style of song, if the lyrics inputted have an additional or contrary point of view (like, say, an uptempo disco song with an "I'm falling asleep" lyric) the AI really can't navigate the difference, and uses the lyric as a guide as if you need to unify all the ideas in a song. In this way it's hard for me not to think the driving "idea" the AI has is to make an object in the (preexisting) shape of a song, where human writers' ideas for songs are to make things that are in new shapes.

122 notes


Text