#generic API views


"Artists have finally had enough with Meta’s predatory AI policies, but Meta’s loss is Cara’s gain. An artist-run, anti-AI social platform, Cara has grown from 40,000 to 650,000 users within the last week, catapulting it to the top of the App Store charts.

Instagram is a necessity for many artists, who use the platform to promote their work and solicit paying clients. But Meta is using public posts to train its generative AI systems, and only European users can opt out, since they’re protected by GDPR laws. Generative AI has become so front-and-center on Meta’s apps that artists reached their breaking point.

“When you put [AI] so much in their face, and then give them the option to opt out, but then increase the friction to opt out… I think that increases their anger level — like, okay now I’ve really had enough,” Jingna Zhang, a renowned photographer and founder of Cara, told TechCrunch.

Cara, which has both a web and mobile app, is like a combination of Instagram and X, but built specifically for artists. On your profile, you can host a portfolio of work, but you can also post updates to your feed like any other microblogging site.

Zhang is perfectly positioned to helm an artist-centric social network, where they can post without the risk of becoming part of a training dataset for AI. Zhang has fought on behalf of artists, recently winning an appeal in a Luxembourg court over a painter who copied one of her photographs, which she shot for Harper’s Bazaar Vietnam.

“Using a different medium was irrelevant. My work being ‘available online’ was irrelevant. Consent was necessary,” Zhang wrote on X.

Zhang and three other artists are also suing Google for allegedly using their copyrighted work to train Imagen, an AI image generator. She’s also a plaintiff in a similar lawsuit against Stability AI, Midjourney, DeviantArt and Runway AI.

“Words can’t describe how dehumanizing it is to see my name used 20,000+ times in MidJourney,” she wrote in an Instagram post. “My life’s work and who I am—reduced to meaningless fodder for a commercial image slot machine.”

Artists are so resistant to AI because the training data behind many of these image generators includes their work without their consent. These models amass such a large swath of artwork by scraping the internet for images, without regard for whether or not those images are copyrighted. It’s a slap in the face for artists – not only are their jobs endangered by AI, but that same AI is often powered by their work.

“When it comes to art, unfortunately, we just come from a fundamentally different perspective and point of view, because on the tech side, you have this strong history of open source, and people are just thinking like, well, you put it out there, so it’s for people to use,” Zhang said. “For artists, it’s a part of our selves and our identity. I would not want my best friend to make a manipulation of my work without asking me. There’s a nuance to how we see things, but I don’t think people understand that the art we do is not a product.”

This commitment to protecting artists from copyright infringement extends to Cara, which partners with the University of Chicago’s Glaze project. By using Glaze, artists who manually apply Glaze to their work on Cara have an added layer of protection against being scraped for AI.

Other projects have also stepped up to defend artists. Spawning AI, an artist-led company, has created an API that allows artists to remove their work from popular datasets. But that opt-out only works if the companies that use those datasets honor artists’ requests. So far, HuggingFace and Stability have agreed to respect Spawning’s Do Not Train registry, but artists’ work cannot be retroactively removed from models that have already been trained.

“I think there is this clash between backgrounds and expectations on what we put on the internet,” Zhang said. “For artists, we want to share our work with the world. We put it online, and we don’t charge people to view this piece of work, but it doesn’t mean that we give up our copyright, or any ownership of our work.”"

Read the rest of the article here:

https://techcrunch.com/2024/06/06/a-social-app-for-creatives-cara-grew-from-40k-to-650k-users-in-a-week-because-artists-are-fed-up-with-metas-ai-policies/


Serapis

Serapis is a Graeco-Egyptian god of the Ptolemaic Period (323-30 BCE) of Egypt developed by the monarch Ptolemy I Soter (r. 305-282 BCE) as part of his vision to unite his Egyptian and Greek subjects. Serapis’ cult later spread throughout the Roman Empire until it was banned by the decree of Theodosius I (r. 379-395 CE).

Some form of the god existed prior to the Ptolemaic Period and may have been the patron deity of the small fishing and trade port of Rhakotis, later the site of the city of Alexandria, Egypt. Serapis is referenced as the god Alexander the Great invoked at his death in 323 BCE, but whether that god – Sarapis – is the same as Serapis has been challenged as it is thought more likely Sarapis was a Babylonian deity.

Serapis was a blend of the Egyptian gods Osiris and Apis with the Greek god Zeus (and others) to create a composite deity who would resonate with the multicultural society Ptolemy I envisioned for Egypt. Serapis embodied the transformative powers of Osiris and Apis – already established through the cult of Osirapis, which had joined the two – and the heavenly authority of Zeus. He was therefore understood as Lord of All from the underworld to the ethereal realm of the gods in the sky.

The cult of Serapis spread from Egypt to Greece and was among the most popular in Rome by the 1st century CE. The cult remained a powerful religious force until the 4th century CE when Christianity gained the upper hand. The Roman emperor Theodosius I proscribed the cult in his decrees of 389-391 CE, and the Serapeum, Serapis’ cult center in Alexandria, was destroyed by Christians in 391/392 CE, effectively ending the worship of the god.

Ptolemy I & Serapis

After the death of Alexander the Great in 323 BCE, his generals divided and fought over his empire during the Wars of the Diadochi. Ptolemy I took Egypt and established the Ptolemaic Dynasty, which he envisioned as continuing Alexander’s work of uniting different cultures harmoniously. Egypt had been controlled by the Persians, except for brief periods, from 525 BCE until Alexander took it in 332 BCE, and they welcomed him as a liberator. Alexander had hoped to blend the cultures of the regions he conquered with his own Hellenism, but the Greeks and Egyptians were still observing the traditions of their own cultures at the time of his death. Ptolemy I made a fusion of these cultures among his top priorities and focused on religion as the means to that end.

The Egyptians still worshipped the same gods they had for thousands of years, and Ptolemy I recognized they were unlikely to accept a new deity, so he took aspects from two of the most popular gods – Osiris and Apis – and blended them with the Greek king of the gods, Zeus, drawing on the already established Egyptian cult of Osirapis, to create Serapis. The historian Plutarch (l. c. 45/46-120/125 CE) describes Serapis’ creation and the establishment of his cult center at Alexandria:

Ptolemy Soter saw in a dream the colossal statue of Pluto in Sinope, not knowing nor having ever seen how it looked, and in his dream the statue bade him convey it with all speed to Alexandria. He had no information and no means of knowing where the statue was situated but, as he related the vision to his friends, there was discovered for him a much-traveled man by the name of Sosibius who said that he had seen in Sinope just such a great statue as the king thought he saw. Ptolemy, therefore, sent Soteles and Dionysius, who, after a considerable time and with great difficulty, and not without the help of providence, succeeded in stealing the statue and bringing it away. When it had been conveyed to Egypt and exposed to view, Timotheus, the expositor of sacred law, and Manetho of Sebennytus, and their associates conjectured that it was the statue of Pluto, basing their conjecture on the Cerberus and the serpent with it, and they then convinced Ptolemy that it was the statue of none other of the gods but Serapis. It certainly did not bear this name when it came from Sinope but, after it had been conveyed to Alexandria, it took to itself the name which Pluto bears among the Egyptians, that of Serapis. (Moralia; Isis and Osiris, 28)

Serapis was intended to be, in Plutarch’s words, "god of all peoples in common, even as Osiris is" and the fact that a Greek (Timotheus) and an Egyptian (Manetho) agreed on the statue’s identity was taken as a sign from the god that he would assume this role. Ptolemy I built a grand temple for his worship, the Serapeum, which came to house the statue from Sinope. With Serapis at the center of religious devotion, Ptolemy I began a rigorous building program which was continued by his son and successor Ptolemy II Philadelphus (r. 285-246 BCE) who had co-ruled with him since 285 BCE. The great Library at Alexandria, begun under Ptolemy I, was completed by Ptolemy II, who also added to the Serapeum and finished building the Lighthouse of Alexandria, one of the Seven Wonders of the Ancient World.


my term paper written in 2018 (how ND games were made and why they will never be made that way again)

hello friends, I am going to be sharing portions of a paper i wrote way back in 2018 for a college class. in it, i was researching exactly how the ND games were made, and why they would not be made that way anymore.

if you have any interest in the behind the scenes of how her interactive made their games and my theories as to why our evil overlord penny milliken made such drastic changes to the process, read on!

warning that i am splicing portions of this paper together, so you don't have to read my ramblings about the history of nancy and basic gameplay mechanics:

Use of C++, DirectX, and Bink Video

Upon completion of each game, the player can view the game’s credits. HeR states that each game was developed using C++ and DirectX, as well as Bink Video later on.

C++

C++ is a general-purpose programming language. This means that many things can be done with it, game programming included. It is a compiled language, and compiling is what Jack Copeland explains as the “process of converting a table of instructions into a standard description or a description number” (Copeland 12). In practice, a program called a compiler translates the written code into numeric machine instructions that the computer can then run. C++ first appeared in 1985 and was first standardized in 1998. Standardization allowed programmers to use the language more widely. It is no coincidence that 1998 is also the year that the first Nancy Drew game was released.

C++ Libraries

When money is invested in making computer games, more people end up using and improving whatever programming language the games are written in. Because there was such an interest in making games in the late 1990s and early 2000s, there was essentially a “boom” in how much C++ and other languages were being used. With that many people using the language, they collectively wrote reusable code on top of it to make it simpler to use. This process ends up creating what are called “libraries.” For example:

If a programmer wants to make a function to add one, they must write out the code that does that (let’s say approximately three lines of code). To make this process faster, the programmer can give that code a name, such as addOne. Now, when the programmer types “addOne”, the language knows that it stands for the three lines of code previously mentioned, so the programmer does not have to type out those three lines each time they want to add one. This can be done for all sorts of names and phrases, and when they are all put together, they are called a “package” or “library.”

Libraries can be shared with other programmers, which allows everyone to do much more with the language much faster. The more libraries there are, the more that can be done with the language.
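To make the idea concrete, here is a minimal sketch of a tiny "library." It is written in Python rather than C++ purely for brevity, and the names `add_one` and `add_one_to_all` are made up for illustration. The point is only that the code is written once, given a name, and then reused wherever it is needed.

```python
# A tiny, hypothetical "library": each function is written once and reused by name.
# In a real project these would live in their own file (for example mathlib.py)
# so that other programmers could import them.

def add_one(n: int) -> int:
    """Return n plus one."""
    return n + 1


def add_one_to_all(values: list[int]) -> list[int]:
    """Reuse add_one on every item instead of rewriting the addition each time."""
    return [add_one(v) for v in values]


if __name__ == "__main__":
    # The caller only needs the names, not the code behind them.
    print(add_one(41))
    print(add_one_to_all([1, 2, 3]))
```

Notice that `add_one_to_all` is itself built out of `add_one`. That layering is how a shared library lets everyone do more with less typing.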

Because of the interest in the gaming industry in the early 2000s, more people were being paid to use programming languages. This caused a rapid increase in what programmers were able to do. This helps to explain how HeR was able to go from jerky, bobble-headed graphics in 1999 to much more fluid and realistic movements in 2003.

Microsoft DirectX

DirectX is a collection of application programming interfaces (APIs) for tasks related to multimedia, especially video game programming, on Microsoft platforms. Among many others, these APIs include Direct3D (allows the user to draw 3D graphics and render 3D animation), DirectDraw (accelerates the rendering of graphics), and DirectMusic (allows interactive control over music and sound effects). This software is crucial for the development of many games, as it includes many services that would otherwise require multiple programs to put together (which would not only take more time but also more money, which is important to consider in a small company like HeR).

Bink Video

According to the credits which I have painstakingly looked through for each game, HeR started using Bink Video in game 7, Ghost Dogs of Moon Lake (2002). Bink is a video file format (.bik) and codec developed by RAD Game Tools. The format determines how video data is compressed and packaged before it is shown in the game’s Graphical User Interface (GUI). (The GUI essentially means that the computer user interacts with representational graphics rather than plain text. For example, we understand that a plain drawing of a person’s head and shoulders means “user.”) Bink Video structures the data in each package so that when it reaches the Central Processing Unit (CPU), it is processed more efficiently. This allows more video to be handled per second, making graphics and video look more seamless and natural. Bink Video also allows for more video sequences to be possible in a game.

Use of TransGaming Inc.

Sea of Darkness is the only title that credits a company called TransGaming Inc., though I’m pretty sure HeR had been using its software for every Mac release, starting in 2010. TransGaming created a technology called Cider that allowed video game developers to run games designed for Windows on Mac OS X (https://en.wikipedia.org/wiki/Findev). As one can imagine, this was an incredibly helpful piece of software that allowed HeR to start releasing games on Mac platforms. This was a smart way for them to increase their market.

In 2015, a portion of TransGaming was acquired by NVIDIA, and in 2016, TransGaming changed its business focus from technology to real estate financing. Though it is somewhat difficult to determine which of its former products are still available, it can be assumed that they will not be developing anything else technology-based from 2016 on.

Though it is entirely possible that there is other software available for converting Microsoft-based games to Mac platforms, the loss of TransGaming still has large consequences. For a relatively small company like Her Interactive, hiring an entire team to convert the game for Mac systems was a big deal (I know they did this because it is in the credits of SEA which you can see at the end of this video: https://www.youtube.com/watch?v=Q0gAzD7Q09Y). Without this service, HeR loses a large portion of their customers.

Switch to Unity

Unity is a game engine that is designed to work across 27 platforms, including Windows, Mac, iOS, PlayStation, Xbox, Wii, and multiple Virtual Reality systems. The engine itself is written in C++, though the user of the software writes code in C#, UnityScript (a JavaScript-like language), or less commonly Boo. Its initial release took place in 2005, with a stable release in 2017 and another in March of 2018. Some of the most popular games released using Unity include Pokémon Go for iOS in 2016 and Cuphead in 2017.

HeR’s decision to switch to Unity makes sense on one hand but is incredibly frustrating on the other. Let’s start with how it makes sense. The software HeR was using from TransGaming Inc. will (from what I can tell) never be updated again, meaning it will become virtually useless soon, if it hasn’t already. That means that HeR needed to find another software that would allow them to convert their games onto a Mac platform so that they would not lose a large portion of their customers. This was probably seen as an opportunity to switch to something completely new that would allow them to reach even more platforms. One of the points HeR keeps harping on and on about in their updates to fans is the tablet market, as well as increasing popularity in VR. If HeR wants to survive in the modern game market, they need to branch outside of PC gaming. Unity will allow them to do that. The switch makes sense.

However, one also has to consider all of the progress made in their previous game engine. Everything discussed up to this point has taken 17 years to achieve. And, because their engine was designed by their developers specifically for their games, it is likely that after the switch, their engine will never be used again. Additionally, none of the progress HeR made previously applies to Unity, and can only be used as a reference. Plus, it’s not just the improvements made in the game engine that are being erased. It is also the staff at HeR who worked there for so long, who were so integral in building their own engine and getting the game quality to where it is in Sea of Darkness, that are being pushed aside for a new gaming engine. New engine, new staff that knows how to use it.

The only thing HeR won’t lose is Bink Video, if that means anything to anyone. Bink2 works with Unity. According to the Bink Video website, Bink supplies “pre-written plugins for both Unreal 4 and Unity” (Rad Game Tools). However, I can’t actually be sure that HeR will still use Bink in their next game since I don’t work there. It would make sense if they continued to use it, but who knows.

Conclusions and frustrations

To me, Her Interactive is the little company that could. When they set out to make the first Nancy Drew game, there was no engine to support it. Instead of changing their tactics, they said to heck with it and built their own engine. As years went on, they refined their engine using C++ and DirectX and implemented Bink Video. In 2010 they began using software from TransGaming Inc. that allowed them to convert their games to Mac format, allowing them to increase their market. However, with TransGaming Inc. falling apart starting in 2015, HeR was forced to rethink its strategy. Ultimately they chose to switch their engine out for Unity, essentially throwing out 17 years’ worth of work and laying off many of their employees. Now three years in the making, HeR is still largely secretive about the status of their newest game. The combination of these factors has added up to a fanbase that has become distrustful, frustrated, and altogether largely disappointed in what was once that little company that could.

Suggested Further Reading:

Midnight in Salem, OR Her Interactive’s Marketing Nightmare (Part 2): https://saving-face.net/2017/07/07/midnight-in-salem-or-her-interactives-marketing-nightmare-part-2/

Compilation of MID Facts: http://community.herinteractive.com/showthread.php?1320771-Compilation-of-MID-Facts

Game Building - Homebrew or Third Party Engines?: https://thementalattic.com/2016/07/29/game-building-homebrew-or-third-party-engines/

/end of essay. it is crazy to go back and read this again in 2025. mid had not come out yet when i wrote this and i genuinely did not think it would ever come out. i also had to create a whole power point to go along with this and present it to my entire class of people who barely even knew what nancy drew was, let alone that there was a whole series of pc games based on it lol


Palantir, the software company cofounded by Peter Thiel, is part of an effort by Elon Musk’s so-called Department of Government Efficiency (DOGE) to build a new “mega API” for accessing Internal Revenue Service records, IRS sources tell WIRED.

For the past three days, DOGE and a handful of Palantir representatives, along with dozens of career IRS engineers, have been collaborating to build a single API layer above all IRS databases at an event previously characterized to WIRED as a “hackathon,” sources tell WIRED. Palantir representatives have been onsite at the event this week, a source with direct knowledge tells WIRED.

APIs are application programming interfaces, which enable different applications to exchange data and could be used to move IRS data to the cloud and access it there. DOGE has expressed an interest in the API project possibly touching all IRS data, which includes taxpayer names, addresses, social security numbers, tax returns, and employment data. The IRS API layer could also allow someone to compare IRS data against interoperable datasets from other agencies.

Should this project move forward to completion, DOGE wants Palantir’s Foundry software to become the “read center of all IRS systems,” a source with direct knowledge tells WIRED, meaning anyone with access could view and have the ability to possibly alter all IRS data in one place. It’s not currently clear who would have access to this system.

Foundry is a Palantir platform that can organize, build apps, or run AI models on the underlying data. Once the data is organized and structured, Foundry’s “ontology” layer can generate APIs for faster connections and machine learning models. This would allow users to quickly query the software using artificial intelligence to sort through agency data, which would require the AI system to have access to this sensitive information.

Engineers tasked with finishing the API project are confident they can complete it in 30 days, a source with direct knowledge tells WIRED.

Palantir has made billions in government contracts. The company develops and maintains a variety of software tools for enterprise businesses and government, including Foundry and Gotham, a data-analytics tool primarily used in defense and intelligence. Palantir CEO Alex Karp recently referenced the “disruption” of DOGE’s cost-cutting initiatives and said, “Whatever is good for America will be good for Americans and very good for Palantir.” Former Palantir workers have also taken over key government IT and DOGE roles in recent months.

WIRED was the first to report that the IRS’s DOGE team was staging a “hackathon” in Washington, DC, this week to kick off the API project. The event started Tuesday morning and ended Thursday afternoon. A source in the room this week explained that the event was “very unstructured.” On Tuesday, engineers wandered around the room discussing how to accomplish DOGE’s goal.

A Treasury Department spokesperson, when asked about Palantir's involvement in the project, said “there is no contract signed yet and many vendors are being considered, Palantir being one of them.”

“The Treasury Department is pleased to have gathered a team of long-time IRS engineers who have been identified as the most talented technical personnel. Through this coalition, they will streamline IRS systems to create the most efficient service for the American taxpayer,” a Treasury spokesperson tells WIRED. “This week, the team participated in the IRS Roadmapping Kickoff, a seminar of various strategy sessions, as they work diligently to create efficient systems. This new leadership and direction will maximize their capabilities and serve as the tech-enabled force multiplier that the IRS has needed for decades.”

The project is being led by Sam Corcos, a health-tech CEO and a former SpaceX engineer, with the goal of making IRS systems more “efficient,” IRS sources say. In meetings with IRS employees over the past few weeks, Corcos has discussed pausing all engineering work and canceling current contracts to modernize the agency’s computer systems, sources with direct knowledge tell WIRED. Corcos has also spoken about some aspects of these cuts publicly: “We've so far stopped work and cut about $1.5 billion from the modernization budget. Mostly projects that were going to continue to put us down the death spiral of complexity in our code base,” Corcos told Laura Ingraham on Fox News in March. Corcos is also a special adviser to Treasury Secretary Scott Bessent.

Palantir and Corcos did not immediately respond to requests for comment.

The consolidation effort aligns with a recent executive order from President Donald Trump directing government agencies to eliminate “information silos.” Purportedly, the order’s goal is to fight fraud and waste, but it could also put sensitive personal data at risk by centralizing it in one place. The Government Accountability Office is currently probing DOGE’s handling of sensitive data at the Treasury, as well as the Departments of Labor, Education, Homeland Security, and Health and Human Services, WIRED reported Wednesday.


holy grail of last.fm and spotify music data sites. i'd still say check the actual link but i've copy pasted most of the info n the links below

Spotify

Sites, apps and programs that use your Spotify account, Spotify API or both.

Spotify sites:

Obscurify: Tells you how unique your music taste is compared to other Obscurify users. Also shows some recommendations. Mobile friendly.

Skiley: Web app to better manage your playlists and discover new music. This has so many functions and really the only thing I miss is a search field for when you are managing playlists. You can take any playlist you "own" and order it by many different rules (track name, album name, artist name, BPM, etc.), or just randomly shuffle it (say bye to bad Spotify shuffle). You can also normalize it. For the other functions you don't even need the rights to edit the playlist. Those consist of splitting a playlist, filtering out songs by genre or year into a new playlist, creating similar playlists or exporting it to CFG, CSV, JSON, TXT or XML.

You can also use it to discover music based on your taste and it has a stats section - data different from Last.fm.

Also, dark mode and mobile friendly.

Sort your music: Lets you sort your playlist by all kinds of different parameters such as BPM, artist, length and more. Similar to Skiley, but it works as an interactive table with songs from selected playlist.

Run BPM: Filters playlists based on parameters like BPM, Energy, etc. Nicely visualized with colorful sliders. Only downside: it shows fewer than half of my playlists. Mobile friendly.

Fylter.in: Sort a playlist by BPM, loudness, length, etc. and export to Spotify.

Spotify Charts: Daily worldwide charts from Spotify. Mobile friendly

Kaleidosync: Spotify visualizer. I would personally add epilepsy warning.

Duet: Dartmouth College project. Lets you compare your streaming data to other people. Only downside is, those people need to be using the site too, so you have to get your friends to log in. Mobile friendly.

Discover Quickly: Select any playlist and you will be welcomed with all the songs in a gridview. Hover over song to hear the best part. Click on song to dig deeper or save the song.

Dubolt: Helps you discover new music. Select an artist/song to view similar ones. Adjust result by using filters such as tempo, popularity, energy and others.

SongSliders: Sort your playlists, create new ones, find new music. It can also save Discover Weekly every Monday.

Stats for Spotify: Shows you Top tracks and Top artists, lets you compare them to last visit. Data different from Last.fm. Mobile friendly

Record Player: This site is crazy. It's a Rube Goldberg Machine. You take a picture (any picture) and the Google Cloud Vision API will guess what it is. The site then takes Google's guess and uses it to search Spotify, giving you the first result to play. Mobile friendly.

The author of this site has to pay for Google Cloud if the site gets more than 1000 requests a month! I assume this post is gonna blow up and the limit will be easily reached. The author suggests remixing the app and setting it up with your own Google Cloud to avoid this. If you are able to do so, do it please. Or reach out to the author on Twitter and donate a little if you can.

Spotify Playlist Randomizer: Site to randomize order of the songs in playlist. There are 3 shuffling methods you can choose from. Mobile friendly.

Replayify: Another site showing you your Spotify data. Also lets you create a playlist based on preset rules that cannot be changed (Top 5 songs by Top 20 artists from selected time period/Top 50 songs from selected time period). UI is nice and clean. Mobile friendly, data different from Last.fm.

Visualify: Simpler replayify without the option to create playlists. Your result can be shared with others. Mobile friendly, data different from Last.fm.

The Church Of Koen: Collage generator tool to create collages sorted by color and turn any picture to collage. Works with Last.fm as well.

Playedmost: Site showing your Spotify data in nice grid view. Contains Top Artists, New Artists, Top Tracks and New Tracks. Data different from Last.fm, mobile friendly.

musictaste.space: Shows you some stats about your music habits and lets you compare them to others. You can also create a Covid-19 playlist :)

Playlist Manager: Select two (or more) playlists to see in a table view which songs are shared between them and which are only in one of them. You can add songs to playlists too.

Boil the Frog: Choose two artists and this site will create a playlist that slowly transitions from one artist's style to the other.

SpotifyTV: Great tool for searching up music videos of songs in your library and playlists.

Spotify Dedup and Spotify Organizer: Both do the same - remove duplicates. Spotify Dedup is mobile friendly.

Smarter Playlists: It lets you build a complex program by assembling components to create new playlists. This seems like a very complex and powerful tool.

JBQX: Do you remember plug.dj? Well this is same thing, only using Spotify instead of YouTube as a source for music. You can join room and listen to music with other people, you all decide what will be playing, everyone can add a song to queue.

Spotify Buddy: Lets you listen together with other people. All can control what's playing, all can listen on their own devices or only one device can be playing. You don't need to have Spotify to control the queue! In my opinion it's great for parties as a wireless aux cord. Mobile friendly.

Opslagify: Shows how much space one would need to download all of their Spotify playlists as .mp3s.

Whisperify: Spotify game! Music quiz based on what you are listening to. Do you know your music? Mobile friendly.

Popularity Contest: Another game. Two artists, which one is more popular according to Spotify data? Mobile friendly, doesn't require Spotify login.
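For the curious, several of the playlist tools above (sorting by BPM, removing duplicates, comparing two playlists, shuffling, estimating download size) boil down to a few small operations on a list of tracks. The sketch below is only an illustration of those ideas, not how any of these sites actually work. It uses made-up track data in plain Python dictionaries and does not call the Spotify API at all. The field names (`id`, `bpm`, `duration_s`) and the 320 kbps default bitrate are assumptions.

```python
import random

# Hypothetical track records; real Spotify data has different, richer fields.
PLAYLIST_A = [
    {"id": "t1", "name": "Song A", "bpm": 120, "duration_s": 200},
    {"id": "t2", "name": "Song B", "bpm": 95, "duration_s": 180},
    {"id": "t1", "name": "Song A", "bpm": 120, "duration_s": 200},  # duplicate
]
PLAYLIST_B = [
    {"id": "t2", "name": "Song B", "bpm": 95, "duration_s": 180},
    {"id": "t3", "name": "Song C", "bpm": 140, "duration_s": 240},
]


def sort_by(tracks, field):
    """Return a new list ordered by one field, e.g. 'bpm' or 'name'."""
    return sorted(tracks, key=lambda t: t[field])


def remove_duplicates(tracks):
    """Keep the first occurrence of each track id, preserving order."""
    seen = set()
    unique = []
    for track in tracks:
        if track["id"] not in seen:
            seen.add(track["id"])
            unique.append(track)
    return unique


def shared_tracks(first, second):
    """Tracks from `first` whose id also appears in `second`."""
    ids_in_second = {t["id"] for t in second}
    return [t for t in first if t["id"] in ids_in_second]


def shuffled(tracks, seed=None):
    """Return a uniformly shuffled copy; the original list is left untouched."""
    result = list(tracks)
    random.Random(seed).shuffle(result)
    return result


def estimated_mp3_bytes(tracks, bitrate_kbps=320):
    """Rough size estimate: total seconds * bits per second / 8 bits per byte."""
    total_seconds = sum(t["duration_s"] for t in tracks)
    return total_seconds * bitrate_kbps * 1000 // 8


if __name__ == "__main__":
    clean = remove_duplicates(PLAYLIST_A)
    print([t["name"] for t in sort_by(clean, "bpm")])
    print([t["name"] for t in shared_tracks(clean, PLAYLIST_B)])
    print(estimated_mp3_bytes(clean), "bytes")
```

Every function returns a new list instead of changing the playlist in place, which makes it easy to chain steps (dedupe first, then sort, then export) without losing the original order.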

Spotify Apps:

uTrack: Android app which generates a playlist from your top tracks. Also shows top artists, tracks and genres - data different from Last.fm.

Statistics for Spotify: uTrack for iOS. I don't own an iOS device so I couldn't test it. iOS users, share your opinions in comments please :).

Spotify Programs:

Spicetify: Spicetify used to be a skin for Rainmeter. You can still use it as such, but the development is discontinued. You will need to have Rainmeter installed if you want to try. These days it works as a series of PowerShell commands. New and updated version here. Spicetify lets you redesign Spotify desktop client and add new functions to it like Trash Bin, Shuffle+, Christian Mode etc. It doesn't work with MS Store app, .exe Spotify client is required.

Library Bridger: The main purpose of this program is to create Spotify playlists from your locally saved songs. But it has some extra functions, check the link.

Last.fm

Sites, apps and programs using Last.fm account, Last.fm API or both.

Last.fm sites:

Last.fm Mainstream Calculator: How mainstream is music you listen to? Mobile friendly.

My Music Habits: Shows different graphs about how many artists, tracks and albums from a selected time period come from your overall top artists/tracks/albums.

Explr.fm: Where are the artists you listen to from? This site shows you just that on an interactive world map.

Descent: The best description I can think of is a music dashboard. Shows album art of the currently playing song along with time and weather.

Semi-automatic Last.fm scrobbler: One of the many scrobblers out there. You can scrobble along with any other Last.fm user.

The Universal Scrobbler: One of the best manual scrobblers. Mobile friendly.

Open Scrobbler: Another manual scrobbler. Mobile friendly.

Vinyl Scrobbler: If you listen to vinyl and use Last.fm, this is what you need.

Last.fm collage generator, Last.fm top albums patchwork generator and yet another different Last.fm collage generator: Sites to make collages based on your Last.fm data. The last one is mobile friendly.

The Church Of Koen: Collage generator tool to create collages sorted by color and turn any picture to collage. Works with Spotify as well.

Musicorum: So far the best tool for generating collages based on Last.fm data that I have ever seen. Grids of up to 20x20 tiles and other styles, some of which closely resemble the official collages that Spotify generates at the end of the year. Everything is customizable and it even supports Instagram story format. Mobile friendly.

Nicholast.fm: Simple site for stats and recommendations. Mobile friendly.

Scatter.fm: Creates a graph from your scrobbles that includes every single scrobble.

Lastwave: Creates a wave graph from your scrobbles. Mobile friendly.

Artist Cloud: Creates an artist cloud image from your scrobbles. Mobile friendly.

Last.fm Tools: Lets you generate Tag Timeline, Tag Cloud, Artist Timeline and Album Charter. Mobile friendly.

Last Chart: This site shows different types of beautiful graphs visualizing your Last.fm data. Graph types are bubble, force, map, pack, sun, list, cloud and stream. Mobile friendly.

Sergei.app: Very nice looking graphs. Mobile friendly.

Last.fm Time Charts: Generates charts from your Last.fm data. Sadly it seems that it only supports artists, not albums or tracks.

ZERO Charts: Generates Billboard like charts from Last.fm data. Requires login, mobile friendly.

Skihaha Stats: Another great site for viewing different Last.fm stats.

Jakeledoux: What are your Last.fm friends listening to right now? Mobile friendly.

Last History: View your cumulative listening history. Mobile friendly.

Paste my taste: Generates short text describing your music taste.

Last.fm to CSV: Exports your scrobbles to CSV format. Mobile friendly.

Pr.fm: Syncs your scrobbles to your Strava activity descriptions as a list based on what you listened to during a run or biking session, etc. (description by u/mturi, I don't use Strava, so I have no idea how it works :))

Last.fm apps:

Scroball for Last.fm: An Android app I use for scrobbling when I listen to something other than Spotify.

Web Scrobbler: Google Chrome and Firefox extension scrobbler.

Last.fm programs:

Last.fm Scrubbler WPF: My all time favourite manual scrobbler for Last.fm. You can scrobble manually, from another user, from a database (I use this rather than Vinyl Scrobbler when I listen to vinyls) or from other sources. It can also generate collages, generate short text describing your music taste and offers other extra functions.

Last.fm Bulk Edit: Userscript, Last.fm Pro is required. Allows you to bulk edit your scrobbles. Fix wrong album/track names or any other scrobble parameter easily.

8 notes

Text

Oh, you think fanartists are safe because sending cease-and-desist letters to everyone would be “too expensive”? 😈

Let’s break down that fantasy with a little logic 🤓

1. “If they wanted to crack down, they would have done it already.” → That’s like saying, “If he wanted to break up with you, he already would’ve.”

Reality check: new methods exist now.

Back then, targeting individuals wasn’t efficient —

but now, with the right legal precedent, they can pressure platforms directly and wipe things systematically.

They don’t need to go after every artist. Just push Tumblr, AO3, DeviantArt — and watch the platforms do the cleanup.

2. “It’s too expensive to pursue.” → Sure — if you’re chasing thousands of people.

But one lawsuit, one change in legal framework, and boom: fanart vanishes from public view.

People won’t risk bans or takedowns if platforms start enforcing policies under legal pressure.

3. “Fanart is free marketing!” → It was — when corporations needed it.

But now that they’ve got AI that can generate characters on-brand, on-demand, and en masse,

they don’t need chaotic fan communities anymore.

Fanart stops being an amplifier. It becomes competition.

4. “Fanart is culture!” → Totally.

But corporations don’t protect culture. They protect profits.

And if rewriting the rules helps them do that, they’ll rewrite the rules.

Quietly. Legally. Through API terms, moderation policies, and silent de-listings.

5. “You’re just panicking.” → No, some people are just paying attention.

Seeing risk ≠ overreacting.

Ignoring risk doesn’t make you smart — it makes you unprepared.

So go ahead — believe you’re safe because no one’s come knocking yet.

But if one big court case lands right, platforms will change — and your entire online legacy could vanish in a legal clause.

2 notes

Text

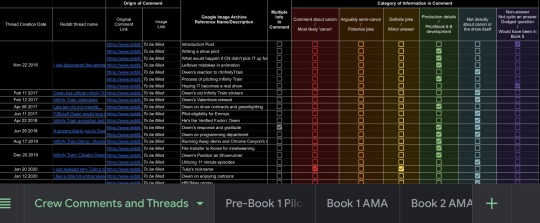

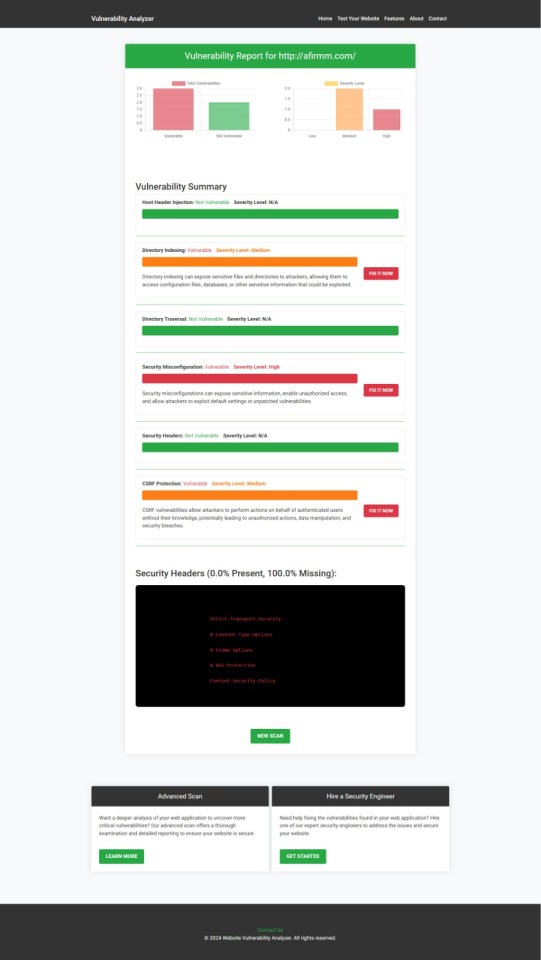

Hey all, small update on the r/InfinityTrain archive efforts here.

As it turns out, the mods on the subreddit have opened it back up to the public and I am no longer the only person allowed to view it, so you can go and check it out now.

That being said, with tomorrow being the start of the API changes, it’d still be in your best interests to archive what you can and want to while Reddit still works. In the meantime, since it’s still the last day of Pride month at the time of starting this draft and because I’ve managed to do a considerable amount of work without yet losing steam, I’m happy to share a peek at the current progress on my subreddit archive:

As you can see, I’m still going to finish my archiving of what I can, as who knows what may happen to the original information sources.

Especially because Twitter now requires you to have an account to do anything - including merely browsing and seeing posts - and who knows whether embed support on other sites will be going away too.

In other words, if you want to still have a guaranteed way to check info from the Twitter accounts of the crew but don’t want to make an account/in case Twitter gets royally screwed up, it is incredibly important to document any and all concept art, post threads, and links shared by the Infinity Train crew.

In fact, I have set up a Google Drive folder below to consolidate efforts into one place for everyone who wants to help with this task:

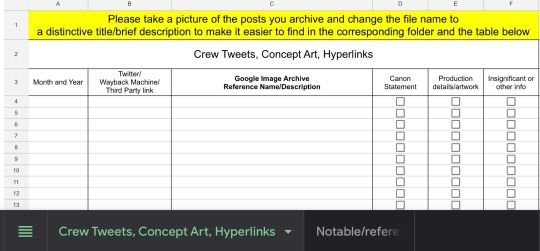

Inside, I have set up two folders: posts by the crew, and noteworthy fan art/analysis by fans that the crew have taken particular note of. And to help with organizing all the links and information together, I have set up the simplified google sheet below:

For the process of organizing, here’s a general outline of how archiving can be done:

Find the Twitter post you want to archive, whether it’s in a Discord server or on the Wayback Machine website (since Owen often mass deleted his tweets)

Copy the link to the Google sheet with - if you can - the month and year the post was created

Take a picture of the full post/decent chunks of a Twitter thread

If possible, change the file name of the picture to a title or brief description outlining the content within the post

Upload the photo to the respective subfolder and type the file name in the respective Google Sheet

Now, I have a few more docs and subfolders beyond these key ones, but I hope that the drive is overall easy to navigate and understand, and here’s to saving Infinity Train fandom history for all to see and know that we were here!

50 notes

Text

Vibecoding a production app

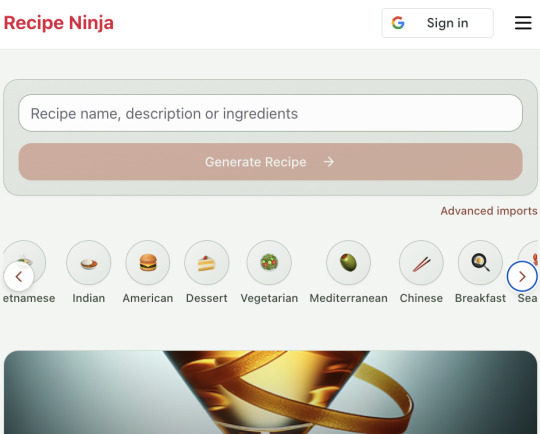

TL;DR I built and launched a recipe app with about 20 hours of work - recipeninja.ai

Background: I'm a startup founder turned investor. I taught myself (bad) PHP in 2000, and picked up Ruby on Rails in 2011. I'd guess 2015 was the last time I wrote a line of Ruby professionally. I've built small side projects over the years, but nothing with any significant usage. So it's fair to say I'm a little rusty, and I never really bothered to learn front end code or design.

In my day job at Y Combinator, I'm around founders who are building amazing stuff with AI every day and I kept hearing about the advances in tools like Lovable, Cursor and Windsurf. I love building stuff and I've always got a list of little apps I want to build if I had more free time.

About a month ago, I started playing with Lovable to build a word game based on Articulate (it's similar to Heads Up or Taboo). I got a working version, but I quickly ran into limitations - I found it very complicated to add a supabase backend, and it kept re-writing large parts of my app logic when I only wanted to make cosmetic changes. It felt like a toy - not ready to build real applications yet.

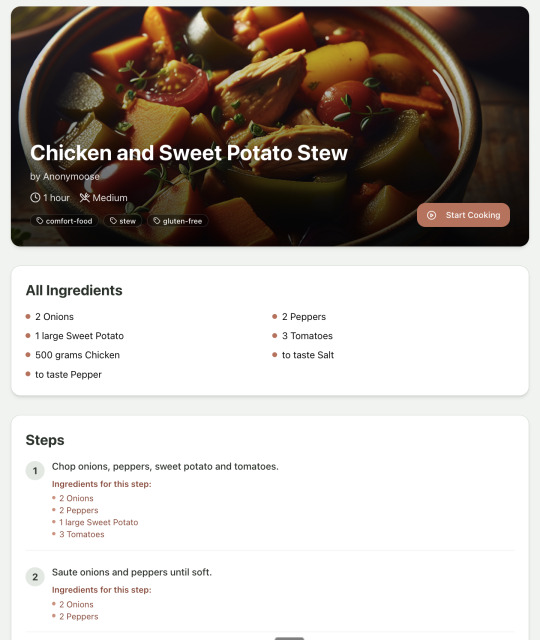

But I kept hearing great things about tools like Windsurf. A couple of weeks ago, I looked again at my list of app ideas to build and saw "Recipe App". I've wanted to build a hands-free recipe app for years. I love to cook, but the problem with most recipe websites is that they're optimized for SEO, not for humans. So you have pages and pages of descriptive crap to scroll through before you actually get to the recipe. I've used the recipe app Paprika to store my recipes in one place, but honestly it feels like it was built in 2009. The UI isn't great for actually cooking. My hands are covered in food and I don't really want to touch my phone or computer when I'm following a recipe.

So I set out to build what would become RecipeNinja.ai

For this project, I decided to use Windsurf. I wanted a Rails 8 API backend and React front-end app and Windsurf set this up for me in no time. Setting up homebrew on a new laptop, installing npm and making sure I'm on the right version of Ruby is always a pain. Windsurf did this for me step-by-step. I needed to set up SSH keys so I could push to GitHub and Heroku. Windsurf did this for me as well, in about 20% of the time it would have taken me to Google all of the relevant commands.

I was impressed that it started using the Rails conventions straight out of the box. For database migrations, it used the Rails command-line tool, which then generated the correct file names and used all the correct Rails conventions. I didn't prompt this specifically - it just knew how to do it. It one-shotted pretty complex changes across the React front end and Rails backend to work seamlessly together.

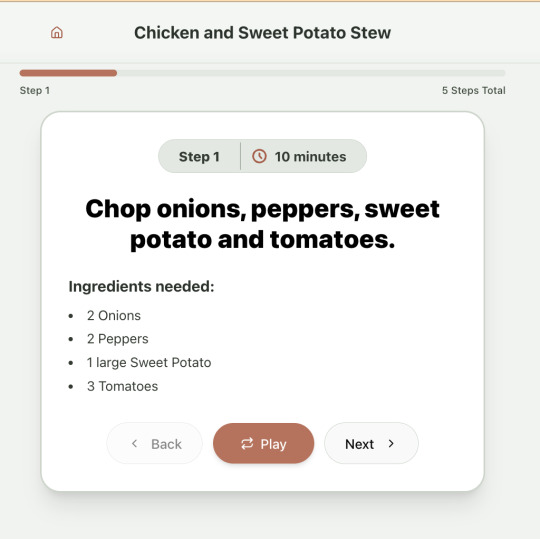

To start with, the main piece of functionality was to generate a complete step-by-step recipe from a simple input ("Lasagne"), generate an image of the finished dish, and then allow the user to progress through the recipe step-by-step with voice narration of each step. I used OpenAI for the LLM and ElevenLabs for voice. "Grandpa Spuds Oxley" gave it a friendly southern accent.

Recipe summary:

And the recipe step-by-step view:

I was pretty astonished that Windsurf managed to integrate both the OpenAI and ElevenLabs APIs without me doing very much at all. After we had a couple of problems with the OpenAI Ruby library, it quickly fell back to a raw Ruby HTTP client implementation, but I honestly didn't care. As long as it worked, I didn't really mind if it used 20 lines of code or two lines of code. And Windsurf was pretty good about enforcing reasonable security practices. I wanted to call ElevenLabs directly from the front end while I was still prototyping stuff, and Windsurf objected very strongly, telling me that I was risking exposing my private API credentials to the Internet. I promised I'd fix it before I deployed to production and it finally acquiesced.

I decided I wanted to add "Advanced Import" functionality where you could take a picture of a recipe (this could be a handwritten note or a picture from a favourite recipe book) and RecipeNinja would import the recipe. This took a handful of minutes.

Pretty quickly, a pattern emerged; I would prompt for a feature. It would read relevant files and make changes for two or three minutes, and then I would test the backend and front end together. I could quickly see from the JavaScript console or the Rails logs if there was an error, and I would just copy paste this error straight back into Windsurf with little or no explanation. 80% of the time, Windsurf would correct the mistake and the site would work. Pretty quickly, I didn't even look at the code it generated at all. I just accepted all changes and then checked if it worked in the front end.

After a couple of hours of work on the recipe generation, I decided to add the concept of "Users" and include Google Auth as a login option. This would require extensive changes across the front end and backend - a database migration, a new model, new controller and entirely new UI. Windsurf one-shotted the code. It didn't actually work straight away because I had to configure Google Auth to add `localhost` as a valid origin domain, but Windsurf talked me through the changes I needed to make on the Google Auth website. I took a screenshot of the Google Auth config page and pasted it back into Windsurf and it caught an error I had made. I could log in to my app immediately after I made this config change. Pretty mindblowing. You can now see who's created each recipe, keep a list of your own recipes, and toggle each recipe to public or private visibility. When I needed to set up Heroku to host my app online, Windsurf generated a bunch of terminal commands to configure my Heroku apps correctly. It went slightly off track at one point because it was using old Heroku APIs, so I pointed it to the Heroku docs page and it fixed it up correctly.

I always dreaded adding custom domains to my projects - I hate dealing with Registrars and configuring DNS to point at the right nameservers. But Windsurf told me how to configure my GoDaddy domain name DNS to work with Heroku, telling me exactly what buttons to press and what values to paste into the DNS config page. I pointed it at the Heroku docs again and Windsurf used the Heroku command line tool to add the "Custom Domain" add-ons I needed and fetch the right Heroku nameservers. I took a screenshot of the GoDaddy DNS settings and it confirmed it was right.

I can see very soon that tools like Cursor & Windsurf will integrate something like Browser Use so that an AI agent will do all this browser-based configuration work with zero user input.

I'm also impressed that Windsurf will sometimes start up a Rails server and use curl commands to check that an API is working correctly, or start my React project and load up a web preview and check the front end works. This functionality didn't always seem to work consistently, and so I fell back to testing it manually myself most of the time.

When I was happy with the code, it wrote git commits for me and pushed code to Heroku from the in-built command line terminal. Pretty cool!

I do have a few niggles still. Sometimes it's a little over-eager - it will make more changes than I want, without checking with me that I'm happy or the code works. For example, it might try to commit code and deploy to production, and I need to press "Stop" and actually test the app myself. When I asked it to add analytics, it went overboard and added 100 different analytics events in pretty insignificant places. When it got trigger-happy like this, I reverted the changes and gave it more precise commands to follow one by one.

The one thing I haven't got working yet is automated testing that's executed by the agent before it decides a task is complete; there's probably a way to do it with custom rules (I have spent zero time investigating this). It feels like I should be able to have an integration test suite that is run automatically after every code change, and then any test failures should be rectified automatically by the AI before it says it's finished.

Also, the AI should be able to tail my Rails logs to look for errors. It should spot things like database queries and automatically optimize my Active Record queries to make my app perform better. At the moment I'm copy-pasting in excerpts of the Rails logs, and then Windsurf quickly figures out that I've got an N+1 query problem and fixes it. Pretty cool.
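
To make the N+1 pattern concrete, here is a minimal plain-Ruby sketch. It does not use Active Record: `FakeDb` and its methods are hypothetical stand-ins that only count lookups, so the difference between per-row and batched loading shows up as a number.

```ruby
# Hypothetical in-memory "database" that counts how many queries it serves.
class FakeDb
  attr_reader :query_count

  def initialize(recipes, users)
    @recipes = recipes
    @users = users
    @query_count = 0
  end

  def all_recipes
    @query_count += 1
    @recipes
  end

  def find_user(id)
    @query_count += 1
    @users.find { |u| u[:id] == id }
  end

  def find_users(ids)
    @query_count += 1
    @users.select { |u| ids.include?(u[:id]) }
  end
end

USERS = [{ id: 1, name: "Ann" }, { id: 2, name: "Bo" }].freeze
RECIPES = [
  { title: "Lasagne", user_id: 1 },
  { title: "Pad Thai", user_id: 2 },
  { title: "Pizza", user_id: 1 }
].freeze

# N+1: one query for the list, then one more query per recipe.
def authors_n_plus_one(db)
  db.all_recipes.map { |r| db.find_user(r[:user_id])[:name] }
end

# Batched: one query for the list, one query for all the authors.
def authors_batched(db)
  recipes = db.all_recipes
  names_by_id = db.find_users(recipes.map { |r| r[:user_id] }.uniq)
                  .to_h { |u| [u[:id], u[:name]] }
  recipes.map { |r| names_by_id[r[:user_id]] }
end
```

With three recipes the first version issues four lookups and the second issues two; the gap grows with the list. In Active Record the batched shape is roughly what eager loading with `includes` gives you, assuming the model has the relevant association.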

Refactoring is also kind of painful. I've ended up with several files that are 700-900 lines long and contain duplicate functionality. For example, list recipes by tag and list recipes by user are basically the same.

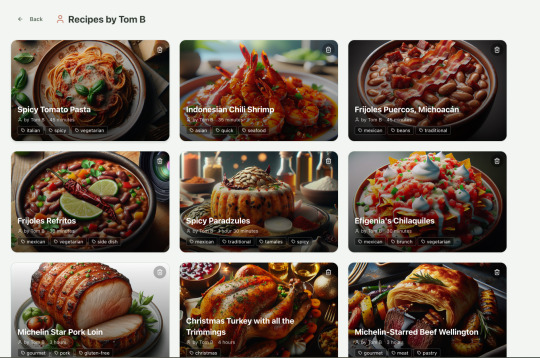

Recipes by user:

This should really be identical to list recipes by tag, but Windsurf has implemented them separately.

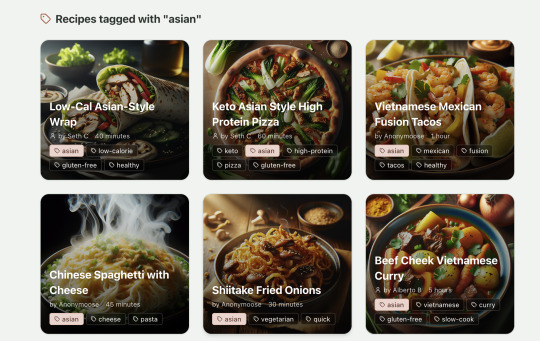

Recipes by tag:

If I ask Windsurf to refactor these two pages, it randomly changes stuff like renaming analytics events, rewriting user-facing alerts, and changing random little UX stuff, when I really want to keep the functionality exactly the same and only move duplicate code into shared modules. Instead, to successfully refactor, I had to ask Windsurf to list out ideas for refactoring, then prompt it specifically to refactor these things one by one, touching nothing else. That worked a little better, but it still wasn't perfect.

Adding minor functionality to the Rails API will often change the entire API response, rather than just adding a couple of fields. E.g. it will occasionally change Index Recipes to nest responses in an object { "recipes": [ ] }, versus just returning an array, which breaks the frontend. And then another minor change will revert it. This is where adding tests to identify and prevent these kinds of API changes would be really useful. When I ask Windsurf to fix these API changes, it will instead change the front end to accept the new API JSON format and also leave the old implementation in for "backwards compatibility". This ends up with a tangled mess of code that isn't really necessary. But I'm vibecoding so I didn't bother to fix it.
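
A small contract check is one way to catch this kind of drift. The sketch below is plain Ruby using only the standard `json` library. `index_recipes_shape_ok?` is a hypothetical helper, and the rule it enforces (a bare array of objects that each have a `title`) is an assumption drawn from the description above, not the app's real test suite.

```ruby
require "json"

# Returns true only if the body is a top-level JSON array of recipe objects,
# which is the shape the frontend is described as expecting.
def index_recipes_shape_ok?(body)
  data = JSON.parse(body)
  data.is_a?(Array) && data.all? { |r| r.is_a?(Hash) && r.key?("title") }
rescue JSON::ParserError
  false
end

array_body  = '[{"title": "Lasagne"}, {"title": "Pad Thai"}]'
nested_body = '{"recipes": [{"title": "Lasagne"}]}'

puts index_recipes_shape_ok?(array_body)   # bare array: passes
puts index_recipes_shape_ok?(nested_body)  # nested object: fails the contract
```

Running a check like this against the index endpoint after every change would flag the nested format before the frontend breaks.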

Then there were some changes that just didn't work at all. Trying to implement PostHog analytics in the front end seemed to break my entire app multiple times. I tried to add user voice commands ("Go to the next step"), but this conflicted with the ElevenLabs voice recordings. Having really good git discipline makes vibe coding much easier and less stressful. If something doesn't work after 10 minutes, I can just run git reset --hard HEAD. I've not lost very much time, and it frees me up to try more ambitious prompts to see what the AI can do. Less technical users who aren't familiar with git have lost months of work when the AI goes off on a vision quest and the inbuilt revert functionality doesn't work properly. It seems like adding more native support for version control could be a massive win for these AI coding tools.

Another complaint I've heard is that the AI coding tools don't write "production" code that can scale. So I decided to put this to the test by asking Windsurf for some tips on how to make the application more performant. It identified I was downloading 3 MB image files for each recipe, and suggested a Rails feature for adding lower resolution image variants automatically. Two minutes later, I had thumbnail and midsize variants that decrease the loading time of each page by 80%. Similarly, it identified inefficient N+1 active record queries and rewrote them to be more efficient. There are a ton more performance features that come built into Rails - caching would be the next thing I'd probably add if usage really ballooned.

Before going to production, I kept my promise to move my ElevenLabs API keys to the backend. Almost as an afterthought, I asked Windsurf to cache the voice responses so that I'd only make an ElevenLabs API call once for each recipe step; after that, the audio file was stored in S3 using Rails Active Storage and served without costing me more credits. Two minutes later, it was done. Awesome.
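
The caching idea can be sketched in a few lines of plain Ruby. Everything here is illustrative: `VoiceCache` is a hypothetical class, an in-memory Hash stands in for the stored audio files, and the block passed to it is a stub rather than a real text-to-speech API call.

```ruby
# In-memory stand-in for stored audio; the real app is described as keeping
# the files in S3 via Active Storage.
class VoiceCache
  def initialize(&synthesize)
    @synthesize = synthesize # stub for the paid text-to-speech call
    @store = {}
  end

  # The key combines recipe, step and voice, so a different voice (or a
  # forced refresh) generates new audio while repeats are served from cache.
  def fetch(recipe_id:, step_id:, voice_id:, text:, force: false)
    key = "#{recipe_id}/#{step_id}/#{voice_id}"
    @store.delete(key) if force
    @store[key] ||= @synthesize.call(text, voice_id)
  end
end

api_calls = 0
cache = VoiceCache.new do |text, voice_id|
  api_calls += 1
  "audio(#{voice_id}): #{text}" # placeholder for real audio bytes
end

2.times do
  cache.fetch(recipe_id: "r_1", step_id: 1, voice_id: "v1", text: "Boil water")
end
puts api_calls # the stub ran once; the second fetch was a cache hit
```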

At the end of a vibecoding session, I'd write a list of 10 or 15 new ideas for functionality that I wanted to add the next time I came back to the project. In the past, these lists would've built up over time and never gotten done. Each task might've taken me five minutes to an hour to complete manually. With Windsurf, I was astonished how quickly I could work through these lists. Changes took one or two minutes each, and within 30 minutes I'd completed my entire to do list from the day before. It was astonishing how productive I felt. I can create the features faster than I can come up with ideas.

Before launching, I wanted to improve the design, so I took a quick look at a couple of recipe sites. They were much more visual than my site, and so I simply told Windsurf to make my design more visual, emphasizing photos of food. Its first try was great. I showed it to a couple of friends and they suggested I should add recipe categories - "Thai" or "Mexican" or "Pizza" for example. They showed me the DoorDash app, so I took a screenshot of it and pasted it into Windsurf. My prompt was "Give me a carousel of food icons that look like this". Again, this worked in one shot. I think my version actually looks better than Doordash 🤷♂️

Doordash:

My carousel:

I also saw I was getting a console error from a missing favicon. I always struggled to make favicons for previous sites because I could never figure out where they were supposed to go or what file format they needed. I got OpenAI to generate me a little recipe ninja icon with a transparent background and I saved it into my project directory. I asked Windsurf what file format I need and it listed out nine different sizes and file formats. Seems annoying. I wondered if Windsurf could just do it all for me. It quickly wrote a series of Bash commands to create a temporary folder, resize the image and create the nine variants I needed. It put them into the right directory and then cleaned up the temporary directory. I laughed in amazement. I've never been good at bash scripting and I didn't know if it was even possible to do what I was asking via the command line. I guess it is possible.

After launching and posting on Twitter, a few hundred users visited the site and generated about 1000 recipes. I was pretty happy! Unfortunately, the next day I woke up and saw that I had a $700 OpenAI bill. Someone had been abusing the site and costing me a lot of OpenAI credits by creating a single recipe over and over again - "Pasta with Shallots and Pineapple". They did this 12,000 times. Obviously, I had not put any rate limiting in.

Still, I was determined not to write any code. I explained the problem and asked Windsurf to come up with solutions. Seconds later, I had 15 pretty good suggestions. I implemented several (but not all) of the ideas in about 10 minutes and the abuse stopped dead in its tracks. I won't tell you which ones I chose in case Mr Shallots and Pineapple is reading. The app's security is not perfect, but I'm pretty happy with it for the scale I'm at. If I continue to grow and get more abuse, I'll implement more robust measures.
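
The post deliberately does not say which measures were chosen, so the following is only a generic example of the kind of thing that helps here: a fixed-window rate limiter in plain Ruby. `RateLimiter`, its parameters and the example IP addresses are all hypothetical.

```ruby
# Fixed-window rate limiter: at most `limit` requests per client per window.
class RateLimiter
  def initialize(limit:, window_seconds:)
    @limit = limit
    @window = window_seconds
    @counts = Hash.new(0)
  end

  # `now` is injectable so the behaviour can be checked without sleeping.
  def allow?(client_id, now: Time.now.to_i)
    bucket = [client_id, now / @window]
    return false if @counts[bucket] >= @limit

    @counts[bucket] += 1
    true
  end
end

limiter = RateLimiter.new(limit: 3, window_seconds: 60)
results = 5.times.map { limiter.allow?("203.0.113.7", now: 1_000) }
p results # the first three requests pass, the rest are rejected
```

This sketch never evicts old buckets and keeps its state in one process, so a production version would more likely sit on a shared store with expiring keys.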

Overall, I am astonished how productive Windsurf has made me in the last two weeks. I'm not a good designer or frontend developer, and I'm a very rusty rails dev. I got this project into production 5 to 10 times faster than it would've taken me manually, and the level of polish on the front end is much higher than I could've achieved on my own. Over and over again, I would ask for a change and be astonished at the speed and quality with which Windsurf implemented it. I just sat laughing as the computer wrote code.

The next thing I want to change is making the recipe generation process much more immediate and responsive. Right now, it takes about 20 seconds to generate a recipe and for a new user it feels like maybe the app just isn't doing anything.

Instead, I'm experimenting with using Websockets to show a streaming response as the recipe is created. This gives the user immediate feedback that something is happening. It would also make editing the recipe really fun - you could ask it to "add nuts" to the recipe, and see as the recipe dynamically updates 2-3 seconds later. You could also say "Increase the quantities to cook for 8 people" or "Change from imperial to metric measurements".

I have a basic implementation working, but there are still some rough edges. I might actually go and read the code this time to figure out what it's doing!

I also want to add a full voice agent interface so that you don't have to touch the screen at all. Halfway through cooking a recipe, you might ask "I don't have cilantro - what could I use instead?" or say "Set a timer for 30 minutes". That would be my dream recipe app!

Tools like Windsurf or Cursor aren't yet as useful for non-technical users - they're extremely powerful and there are still too many ways to blow your own face off. I have a fairly good idea of the architecture that I want Windsurf to implement, and I could quickly spot when it was going off track or choosing a solution that was inappropriately complicated for the feature I was building. At the moment, a technical background is a massive advantage for using Windsurf. As a rusty developer, it made me feel like I had superpowers.

But I believe within a couple of months, when things like log tailing and automated testing and native version control get implemented, it will be an extremely powerful tool for even non-technical people to write production-quality apps. The AI will be able to make complex changes and then verify those changes are actually working. At the moment, it feels like it's making a best guess at what will work and then leaving the user to test it. Implementing better feedback loops will enable a truly agentic, recursive, self-healing development flow. It doesn't feel like it needs any breakthrough in technology to enable this. It's just about adding a few tool calls to the existing LLMs. My mind races as I try to think through the implications for professional software developers.

Meanwhile, the LLMs aren't going to sit still. They're getting better at a frightening rate. I spoke to several very capable software engineers who are Y Combinator founders in the last week. About a quarter of them told me that 95% of their code is written by AI. In six or twelve months, I just don't think software engineering is going to exist in the same way as it does today. The cost of creating high-quality, custom software is quickly trending towards zero.

You can try the site yourself at recipeninja.ai

Here's a complete list of functionality. Of course, Windsurf just generated this list for me 🫠

RecipeNinja: Comprehensive Functionality Overview

Core Concept: the app appears to be a cooking assistant application that provides voice-guided recipe instructions, allowing users to cook hands-free while following step-by-step recipe guidance.

Backend (Rails API) Functionality

User Authentication & Authorization

Google OAuth integration for user authentication

User account management with secure authentication flows

Authorization system ensuring users can only access their own private recipes or public recipes
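
The access rule in the last item can be written as a single predicate. This is a plain-Ruby sketch with a hypothetical `Recipe` struct and field names, not the app's actual model or authorization code.

```ruby
# Hypothetical recipe record; `is_public` mirrors the public/private toggle.
Recipe = Struct.new(:title, :user_id, :is_public, keyword_init: true)

# A recipe is visible if it is public, or if the viewer owns it.
# A nil viewer_id means an anonymous visitor, who only sees public recipes.
def visible_to?(recipe, viewer_id)
  recipe.is_public || (!viewer_id.nil? && recipe.user_id == viewer_id)
end

def visible_recipes(recipes, viewer_id)
  recipes.select { |r| visible_to?(r, viewer_id) }
end
```

In a Rails app the same rule would normally be expressed as a database query rather than an in-memory filter, so that private rows are never loaded for the wrong user.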

Recipe Management

Recipe Model Features:

Unique public IDs (format: "r_" + 14 random alphanumeric characters) for security

User ownership (user_id field with NOT NULL constraint)

Public/private visibility toggle (default: private)

Comprehensive recipe data storage (title, ingredients, steps, cooking time, etc.)

Image attachment capability using Active Storage with S3 storage in production
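
The public ID format in the first item is easy to reproduce with Ruby's standard library. The helper name `generate_public_id` is an assumption, and this sketch leaves out the uniqueness check against existing records that a real model would need.

```ruby
require "securerandom"

# "r_" followed by 14 random alphanumeric characters, as described above.
def generate_public_id
  "r_#{SecureRandom.alphanumeric(14)}"
end

id = generate_public_id
puts id
puts id.match?(/\Ar_[A-Za-z0-9]{14}\z/) # format check
```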

Recipe Tagging System:

Many-to-many relationship between recipes and tags

Tag model with unique name attribute

RecipeTag join model for the relationship

Helper methods for adding/removing tags from recipes

Recipe API Endpoints:

CRUD operations for recipes

Pagination support with metadata (current_page, per_page, total_pages, total_count)

Default sorting by newest first (created_at DESC)

Filtering recipes by tags

Different serializers for list view (RecipeSummarySerializer) and detail view (RecipeSerializer)
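
As an illustration of the pagination metadata and default ordering listed here, the following plain-Ruby sketch paginates an in-memory list. `paginate` is a hypothetical helper and the `items`/`meta` wrapper is an assumption; a real Rails app would sort and slice in the database.

```ruby
# Paginate an in-memory list, newest first, and return the metadata fields
# named above (current_page, per_page, total_pages, total_count).
def paginate(records, page: 1, per_page: 10)
  sorted = records.sort_by { |r| r[:created_at] }.reverse
  total_count = sorted.length
  total_pages = (total_count.to_f / per_page).ceil
  items = sorted.slice((page - 1) * per_page, per_page) || []
  {
    items: items,
    meta: {
      current_page: page,
      per_page: per_page,
      total_pages: total_pages,
      total_count: total_count
    }
  }
end

records = (1..25).map { |i| { id: i, created_at: i } }
result = paginate(records, page: 3, per_page: 10)
p result[:meta]
p result[:items].map { |r| r[:id] } # the five oldest records land on the last page
```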

Voice Generation

Voice Recording System:

VoiceRecording model linked to recipes

Integration with Eleven Labs API for text-to-speech conversion

Caching of voice recordings in S3 to reduce API calls

Unique identifiers combining recipe_id, step_id, and voice_id

Force regeneration option for refreshing recordings

Audio Processing:

Using streamio-ffmpeg gem for audio file analysis

Active Storage integration for audio file management

S3 storage for audio files in production

Recipe Import & Generation

RecipeImporter Service:

OpenAI integration for recipe generation

Conversion of text recipes into structured format

Parsing and normalization of recipe data

Import from photos functionality

Frontend (React) Functionality

User Interface Components

Recipe Selection & Browsing:

Recipe listing with pagination

Real-time updates with 10-second polling mechanism

Tag filtering functionality

Recipe cards showing summary information (without images)

"View Details" and "Start Cooking" buttons for each recipe

Recipe Detail View:

Complete recipe information display

Recipe image display

Tag display with clickable tags

Option to start cooking from this view

Cooking Experience:

Step-by-step recipe navigation

Voice guidance for each step

Keyboard shortcuts for hands-free control:

Arrow keys for step navigation

Space for play/pause audio

Escape to return to recipe selection

URL-based step tracking (e.g., /recipe/r_xlxG4bcTLs9jbM/classic-lasagna/steps/1)
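
The step URL above carries three pieces of state: the public ID, a slug and the step number. The sketch below pulls them apart with a regular expression. It is written in plain Ruby for illustration only, since the real routing lives in the React app, and `parse_step_path` and the exact pattern are assumptions.

```ruby
# Matches /recipe/<public_id>/<slug>/steps/<n>.
STEP_PATH = %r{\A/recipe/(?<public_id>r_[A-Za-z0-9]{14})/(?<slug>[a-z0-9-]+)/steps/(?<step>\d+)\z}

# Returns the parts of a step URL as a Hash, or nil if the path does not match.
def parse_step_path(path)
  m = STEP_PATH.match(path)
  return nil unless m

  { public_id: m[:public_id], slug: m[:slug], step: m[:step].to_i }
end

p parse_step_path("/recipe/r_xlxG4bcTLs9jbM/classic-lasagna/steps/1")
```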

State Management & Data Flow

Recipe Service:

API integration for fetching recipes

Support for pagination parameters

Tag-based filtering

Caching mechanisms for recipe data

Image URL handling for detailed views

Authentication Flow:

Google OAuth integration using environment variables

User session management

Authorization header management for API requests

Progressive Web App Features

PWA capabilities for installation on devices

Responsive design for various screen sizes

Favicon and app icon support

Deployment Architecture

Two-App Structure:

cook-voice-api: Rails backend on Heroku

cook-voice-wizard: React frontend/PWA on Heroku

Backend Infrastructure:

Ruby 3.2.2

PostgreSQL database (Heroku PostgreSQL addon)

Amazon S3 for file storage

Environment variables for configuration

Frontend Infrastructure:

React application

Environment variable configuration

Static buildpack on Heroku

SPA routing configuration

Security Measures:

HTTPS enforcement

Rails credentials system

Environment variables for sensitive information

Public ID system to mask database IDs

This comprehensive overview covers the major functionality of the Cook Voice application based on the available information. The application appears to be a sophisticated cooking assistant that combines recipe management with voice guidance to create a hands-free cooking experience.

2 notes

Text

anyway that last post abt doing anything i want forever was about bug people that's right it's BEE TIME "what's their connection to the lore" absolutely nothing i made it up and im having a great time <3

Ashlo, known as the Bee God of Honeyed Reflections, the Architect of the Soul, the Ever-Attendant Queen, the Prince of All Suns, and the God of Rebirth, is an obscure and little-known national deity worshipped by the Apis peoples of Lower Craglorn. Traditional Apis belief holds that Ashlo creates the souls of all living beings by filling the honeycombs of the Golden Hive with soul honey, the basis of all life, and allowing them to grow to completion. After death, Ashlo ferries these souls back to the hive, where they will be reused for future generations. Perhaps indicative of their close contact with the Nedes, astrology and star-worship are central to Apis religion, with each star representing one cell in the Golden Hive, and each of the constellations being composed of ancestral heroes whose souls were deemed holy enough to preserve from the cycle of rebirth so that they may watch over the living.

In at least one Iron Orc tribe there are written records of Ashlo being viewed as a minor spirit responsible for maintaining the structural integrity of religious institutions. In a less accepting example of cross-cultural exchange, the invading Ra Gada of the 1st Era associated Ashlo with Peryite, and considered them and their followers “a pestilence given form.”

The Ayleids had a scientific interest in Ashlo as a potential god of light and the dawn, but otherwise had little interaction with the Apis, and generally not in a religious capacity.

Experts connect Ashlo to Lorkhan as a creator deity, and even to the mysterious insect god of the famed Adabal-a, but no concrete evidence has swayed popular opinion either way.

Text

A view to a solar non-dualism

During my study of the Greek Magical Papyri, a while back I encountered a phrase at the end of PGM IV. 1596-1715, which is a spell for Helios. The last line of the papyrus says that, when the consecration is complete, the magician must say the following: "the one Zeus is Sarapis".

I thought about that phrase again recently, maybe while going through The Concepts of the Divine in the Greek Magical Papyri, and it strikes me as a solar image of non-duality.

"The one Zeus is Sarapis", "heis Zeus Sarapis", but Sarapis (Serapis) is also an image of Hades or Plouton, being a god of the underworld and lord of the dead (not to mention a fusion of the god Osiris and the bull Apis). That's actually quite explicit when you get to Sarapis' iconography. There were instances where Serapis and Hades or Plouton were explicitly identified with each other. The link is even more explicit in Porphyry's Philosophia ex oraculis, where he described Serapis as one of the gods who rule the infernal daimons, the others being Hekate and the demon dog Kerberos.

From this standpoint, I interpret the formula "heis Zeus Sarapis" as meaning that Zeus and Hades are one. In some ways that could be seen not only as a form of syncretism but also as an expression of theological monism, or certainly of the kind that was being developed around the time of Hellenistic Egypt.

But there's more to it, because this is also a solar image. Zeus-Sarapis was also Zeus-Helios-Sarapis, or Zeus Helios Great Sarapis. In the eastern desert of Egypt, under Roman occupation, one could expect to find many images of the god Zeus Helios Megas Sarapis, especially in a place called Dios (now called Abu Qurayyah). There was also a temple dedicated to that god at Mons Claudianus, consecrated by a slave named Epaphroditos. Some scholars, of course, interpret this as a Greek interpretation of the Egyptian god Amun Ra. Furthermore, the phrase "heis Zeus Sarapis" has been found inscribed on a depiction of Harpocrates, Horus the Child, a deity who was frequently syncretised with Helios and thus seen as a solar god. Zeus, Helios, and Serapis were also sometimes seen as one godhead. This perhaps derives from an Orphic saying, attributed to an oracle of Apollo, which says "Zeus, Hades, Helios-Dionysus, three gods in one godhead!". In Flavius Claudius Julianus' Hymn to Helios, this is rendered as "Zeus, Hades, Helios Serapis, three gods in one godhead!", which perhaps suggests that Serapis was being identified with Dionysus. Either way, it establishes a theology in which the three gods are mutually identified and unified as a solar godhead.

Since Helios was the sun god par excellence in this context, Zeus-Helios-Sarapis was seen as a solar deity, and thus it is a solar image. More importantly, it is an image of the non-duality of the sun. This incidentally is not out of step with certain monistic trends insofar as they also reflected a kind of solar theology. For example, Macrobius interpreted the myth of Saturn or Kronos as an expression of the generative power of the sun, thus identifying Saturn/Kronos with the sun, which Macrobius thought was the highest divine principle and even the ultimate basis of all the other gods.

The non-duality that I'm getting into, by this point, should be understood as something that involves and transcends a certain measure of "evil", or at least contains the infernal in itself. This lends itself to a dual-natured solar divinity that is by no means unfamiliar within ancient polytheism. Sun gods, perhaps like many other gods, were very double-sided. For example, the Iranian sun god Mithra was seen both as a benevolent deity concerned with friendship and contract, and as a mysterious, uncanny, and even "sinister" or "warlike" deity (though, these aspects are often attributed to his syncretic form as Mitra-Varuna). Kris Kershaw suggested in The One-Eyed God: Odin and the (Indo-)Germanic Männerbünde that the daeva Aeshma actually represented an aspect of Mithra's being. In Egypt, the wrathful goddess Sekhmet was also understood as an aspect of the power of Ra. The Mesopotamian sun god, Utu, or Shamash, was also a judge in the underworld. Another Mesopotamian god, Nergal, was a warlike god of disease and death who also represented a harsh aspect of the sun. Apollo, an oracular deity who was eventually associated with the sun, was also seen as a destroyer and shared Nergal's association with disease in addition to healing. Helios himself was also sometimes referred to as a destroyer, as indicated by one of his epithets, Apollon. In fact, even Helios may have been connected or in some cases even identified with Hades. At Smyrna, Plouton was worshipped as Plouton-Helios. This may even have reflected the notion of a nocturnal Sun that shone in the realm of the dead, perhaps inherited from Egypt. In some parts of Greece, Helios was also invoked alongside a chthonic form of Zeus in oath-swearing ceremonies.

The real fun I'd like to get into with this concept comes from hongaku-inspired forms of medieval Buddhist theology and their influence on the Shinto pantheon. And in that sense our focus turns to none other than Amaterasu, the Japanese sun goddess who was also the divine patron of sovereignty. The medieval Amaterasu was to some extent equated with all deities at all levels - naturally, this meant even the demonic and chthonic deities. Thus Amaterasu was both a saving deity and a wrathful deity in the Buddhist context. Late medieval Shinto theology had even crowned her a "deity of the Dharma nature", a unique kind of deity with no original ground, and thus a transcendent power akin to that of Dainichi Nyorai (Vairocana Buddha). The Tenshō daijin kuketsu identified Amaterasu with Bonten (Brahma), Taishakuten (Indra), and Shoten, and then with Yama in the underworld because she records the dharmas of good and evil, and from there it asserts that we are dealing with the same deity in all cases. The same text also says that Kukai interpreted Amaterasu as the great deity of the five paths in the underworld, and therefore the primordial deity controlling birth and death. In some respects she was even seen as an araburu-no-kami just like Susano-o, both sharing a double ambivalence that is projected onto their opposition. In other cases, Amaterasu was identified with the Buddhist god Sanbo Kojin, the wild or demonic god of the three poisons who was interpreted as the honji or "original ground" of Amaterasu, and then by extension Amaterasu was identified with Mara, the demon king himself, in the same way.

All of this, of course, is an expression of the non-dualism of hongaku thought, in which the darkness of unenlightened passion and ignorance (thus the realm of the demons) is at once enlightenment and Buddha nature: it is simultaneously the ground of enlightenment and Buddha nature and, ultimately, indistinguishable from them.

#sun gods#non-dualism#polytheism#paganism#zeus#serapis#helios#hellenic polytheism#hellenistic egypt#ancient egypt#ancient greece#hongaku#hades#chthonic gods

Text

Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

To use PySpark, you need to install it and set up a Spark session. Once initialized, Spark allows users to read, process, and analyze large datasets.

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.
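The idea behind a broadcast join can be illustrated without a cluster. The sketch below is plain Python, not the Spark API: the function name `broadcast_hash_join` and the sample rows are hypothetical. The small dataset is turned into an in-memory lookup table once, and each partition of the large dataset is then joined against that table locally, so no rows of the large side need to be shuffled between nodes.

```python
from collections import defaultdict


def broadcast_hash_join(large_partitions, small_rows, key):
    """Inner-join every partition of the large side against one shared lookup."""
    # Build the lookup once from the small side. In Spark, this is the table
    # that would be copied ("broadcast") to every executor.
    lookup = defaultdict(list)
    for row in small_rows:
        lookup[row[key]].append(row)

    joined = []
    # Each partition is processed independently using only the local lookup.
    for partition in large_partitions:
        for row in partition:
            for match in lookup.get(row[key], []):
                joined.append({**row, **match})
    return joined


# Hypothetical data: orders split across two partitions, plus a small
# country dimension table.
orders = [
    [{"order_id": 1, "country": "DE"}, {"order_id": 2, "country": "FR"}],
    [{"order_id": 3, "country": "DE"}, {"order_id": 4, "country": "XX"}],
]
countries = [
    {"country": "DE", "name": "Germany"},
    {"country": "FR", "name": "France"},
]

result = broadcast_hash_join(orders, countries, "country")
```

In PySpark itself the same strategy is requested by wrapping the smaller DataFrame in `pyspark.sql.functions.broadcast` before calling `join`; Spark then handles the distribution of the small table.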

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.

Running PySpark on a Cluster

PySpark can run on a single machine or be deployed on a cluster using a distributed computing system like Hadoop YARN. This enables large-scale data processing with improved efficiency.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For free programming language tutorials, visit https://www.tpointtech.com/

Text

Protect Your Laravel APIs: Common Vulnerabilities and Fixes

API Vulnerabilities in Laravel: What You Need to Know

As web applications evolve, securing APIs becomes a critical aspect of overall cybersecurity. Laravel, being one of the most popular PHP frameworks, provides many features to help developers create robust APIs. However, like any software, APIs in Laravel are susceptible to certain vulnerabilities that can leave your system open to attack.

In this blog post, we’ll explore common API vulnerabilities in Laravel and how you can address them, using practical coding examples. Additionally, we’ll introduce our free Website Security Scanner tool, which can help you assess and protect your web applications.

Common API Vulnerabilities in Laravel

Laravel APIs, like any other API, can suffer from common security vulnerabilities if not properly secured. Some of these vulnerabilities include:

>> SQL Injection

SQL injection attacks occur when an attacker is able to manipulate an SQL query so that it runs SQL the developer never intended. If a Laravel API concatenates unsanitized user input into a raw query, this type of vulnerability can be exploited.

Example Vulnerability:

$user = DB::select("SELECT * FROM users WHERE username = '" . $request->input('username') . "'");

Solution: Laravel’s query builder uses PDO parameter binding, so user input is passed to the database as data and is never interpolated into the SQL string. Use the query builder or Eloquent ORM like this:

$user = DB::table('users')->where('username', $request->input('username'))->first();
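The difference between string concatenation and parameter binding can be checked in isolation. The sketch below uses Python's built-in `sqlite3` module rather than Laravel, purely so it runs without a framework; the table contents, function names, and payload are illustrative assumptions.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with two sample users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user_unsafe(conn, username):
    # Vulnerable: the input becomes part of the SQL text itself.
    query = "SELECT id, username FROM users WHERE username = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Bound parameter: the input is sent as a value, never parsed as SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


conn = make_db()
payload = "' OR '1'='1"

leaked = find_user_unsafe(conn, payload)   # condition is always true
blocked = find_user_safe(conn, payload)    # no user has this literal name
```

With the payload above, the concatenated query matches every row, while the bound version looks for a user literally named `' OR '1'='1` and finds none.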

>> Cross-Site Scripting (XSS)

XSS attacks happen when an attacker injects malicious scripts into web pages, which can then be executed in the browser of a user who views the page.

Example Vulnerability:

return response()->json(['message' => $request->input('message')]);

Solution: Escape dynamic content wherever it ends up being rendered as HTML. In Blade views, Laravel’s {{ }} syntax escapes output automatically; in an API response, you can apply the same e() helper before returning the value:

return response()->json(['message' => e($request->input('message'))]);
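What escaping does to a script payload is easy to see in a small standalone example. This sketch uses Python's standard `html.escape` instead of Laravel's `e()` helper so that it runs on its own; the `render_comment` function and the payload are hypothetical.

```python
import html


def render_comment(message: str) -> str:
    """Wrap untrusted text in a paragraph, escaping HTML metacharacters first."""
    return "<p>" + html.escape(message) + "</p>"


payload = "<script>alert(1)</script>"
rendered = render_comment(payload)
# The angle brackets are now entities, so a browser shows the text
# instead of running it.
```

Escaping matters at the point where text is inserted into HTML. A client that receives a JSON value and writes it into the page as raw markup still needs to escape it (or use a text-only API) on its side.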

>> Improper Authentication and Authorization

Without proper authentication, unauthorized users may gain access to sensitive data. Similarly, improper authorization can allow authenticated users to perform actions they shouldn't be able to.

Example Vulnerability:

Route::post('update-profile', 'UserController@updateProfile');