#neural architecture

Text

Between Silence and Fire: A Deep Comparative Analysis of Idea Generation, Creativity, and Concentration in Neurotypical Minds and Exceptionally Gifted AuDHD Minds (Part 4)

We have arrived at the fourth and final part of this analysis of how Neurotypical and Exceptionally Gifted AuDHD individuals deal with idea generation, creativity, and concentration. You can find PART 1, PART 2, and PART 3 of this post by clicking the links. (Photo by Anna Tarazevich on Pexels.com.) In this last part, we will discuss attention, and then I will introduce a proposal for a new way of…

#AI#Artificial Intelligence#attention#AuDHD#brain#cognition#concentration#consciousness#creativity#Deleuze#distraction#epistemology#exceptionally gifted#giftedness#Gross#Guattari#ideation#intrinsic neurosomatic architectures#multiplex pedagogies#neural architecture#Neurodivergent#neurodiversity#Neuroscience#oikeiōsis#ontology#pedagogy#Philosophy#phronesis#Raffaello Palandri#science

0 notes

Text

Winter. Gothic. Dark aesthetic.✨❄🏰🕸

#art#ai#neural network#architecture#castle#abandoned castle#abandoned#Gothic#gothic aesthetics#aesthetics#medieval#✨#palace#dark aesthetic#winter

22 notes

Text

Assigning the MILGRAM characters STEM majors:

01 Haruka: Evolutionary biology

I think that between the taxidermy in his MV and the tree drawing, which you could read as a Tree of Life, evolutionary bio could fit him. There are probably other biological life sciences fields out there, but this is the first one that came to mind.

02 Yuno: Psychology

I'd initially thought of biology or human biology for Yuno, but that might be too expected for someone of her demographic, and she'd dislike that. Maybe she'd go into psychology to figure out why she feels so cold, so she could understand and fix herself on her own. She could also use her personal experience of having to entertain whoever she's being solicited by to unravel their psychology.

03 Fuuta: Computer Science with a concentration in cybersecurity and a minor in game development

Let's see... Insecure, chronically online sneakerhead who knows about doxxing. This guy screams computer science; from the clothes to his attitude, I wouldn't be surprised to find him in one of my classes tbh. Since he yapped to Es about how easy it was to doxx someone, and since he's an internet vigilante, he's probably careful about protecting his own online privacy and knows how information is found online, which leads us to cybersecurity. And since the Bring It On MV shows him seeing himself as a knight-class player in a video game, he'd probably study game development as well.

04 Muu: Social psychology (with a minor in public policy)

Queen bee.

05 Shidou: Biomedical Engineering (+ premed) -> went into med school after undergrad

06 Mahiru: Interior Architecture (and a minor in industrial design)

07 Kazui: Applied Mathematics -> data science skills got him into the NPA

08 Amane: Theological Anthropology

09 Mikoto: Computer Science with a concentration in software engineering and neural networks

Software engineering is like the basics of computer science: highly competitive nowadays due to oversaturation, but still sought after by a lot of Japanese companies. He'd still be able to use his arts and design skills in software engineering, since design is one of the skills the job requires. As for neural networks, considering that Mikoto's brain was able to split into another person capable of independent thought and action, working with neural networks is like developing a human, since they loosely mimic how human thoughts are formed and connected.

10 Kotoko: Forensic science and legal anthropology

#i can see fuuta as an ethical hacker or even a cloud dev. 09 gets the most basic cs route aside from neural networks.#kotoko is... i can see as an unethical hacker lol#could not see any engineering for kazui whatsoever.#for yuno i was debating between biology and psychology but her jirai keiness skewed her over to psych#architecture is actual hell but i think mappi could pull it off especially if it's designing and drafting interior spaces

7 notes

Text

least spirited extortion campaign

#(describes things that are just a new look with less customization) more than just a new look!#(the right click menu is a mess in win 11 requiring an extra button press and clashing ui design to reveal basic functionality) find things#with fewer clicks!#i mean no wonder 60% of users haven't upgraded. they do not even spindoctor their new evil technology like copilot in their own copy.#is it because most older hardware does not even have the architecture to support the “neural engine accelerated” features?#like let me go through it. 1. added dedicated “weather and news” widget that in practicality just displays ads. 2. start is centered now#and less customizable. you cannot move the taskbar anymore. 3. native integrated zone snapping. i mean. not bad in itself but works worse#than powertoys. which is a win app to begin with. that i would use instead in win 11. and that you can install to win 10.#4. new ui design for multiple desktop overview. basically just a macos copy but i'll give them it looks slightly less confusing than what's#in win 10. win 10 does have multi desktop natively though? 5. you call it simpler and quicker when you kill the native calendar and mail#apps and replace them with the horrible outlook wrapped webapp. which comes with ads you cannot deactivate????

3 notes

Text

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

New Post has been published on https://thedigitalinsider.com/from-recurrent-networks-to-gpt-4-measuring-algorithmic-progress-in-language-models-technology-org/

In 2012, the best language models were small recurrent networks that struggled to form coherent sentences. Fast forward to today, and large language models like GPT-4 outperform most students on the SAT. How has this rapid progress been possible?

Image credit: MIT CSAIL

In a new paper, researchers from Epoch, MIT FutureTech, and Northeastern University set out to shed light on this question. Their research breaks down the drivers of progress in language models into two factors: scaling up the amount of compute used to train language models, and algorithmic innovations. In doing so, they perform the most extensive analysis of algorithmic progress in language models to date.

Their findings show that due to algorithmic improvements, the compute required to train a language model to a certain level of performance has been halving roughly every 8 months. “This result is crucial for understanding both historical and future progress in language models,” says Anson Ho, one of the two lead authors of the paper. “While scaling compute has been crucial, it’s only part of the puzzle. To get the full picture you need to consider algorithmic progress as well.”
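The headline figure is a compound-growth statement, and the arithmetic behind it can be sketched in a few lines. This assumes a constant 8-month halving time taken from the headline estimate (the paper reports it with uncertainty), and the function names are illustrative, not from the paper:

```python
HALVING_MONTHS = 8.0  # assumed constant halving time, from the headline estimate


def compute_multiplier(months: float, halving_months: float = HALVING_MONTHS) -> float:
    """Factor by which the compute needed for a fixed performance level shrinks
    after `months` of algorithmic progress, assuming a constant halving time."""
    return 2.0 ** (months / halving_months)


def compute_needed(baseline_flop: float, months: float) -> float:
    """Hypothetical helper: compute required after `months`, given a baseline budget."""
    return baseline_flop / compute_multiplier(months)


if __name__ == "__main__":
    print(f"per year:  {compute_multiplier(12):.2f}x less compute")
    print(f"two years: {compute_multiplier(24):.0f}x less compute")
```

Under this assumption, one year of algorithmic progress is worth 2^(12/8), roughly 2.83x, in compute, and two years compound to 2^3 = 8x.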

The paper’s methodology is inspired by “neural scaling laws”: mathematical relationships that predict language model performance given certain quantities of compute, training data, or language model parameters. By compiling a dataset of over 200 language models since 2012, the authors fit a modified neural scaling law that accounts for algorithmic improvements over time.
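One way to picture a scaling law "modified for algorithmic improvements" is to let progress act as a multiplier on the effective amount of parameters and data. The sketch below uses a Chinchilla-style functional form for illustration only; the constants, the 2012 reference year, and the even split of the efficiency gain between parameters and data are all assumptions, not the paper's fitted model:

```python
# Illustrative placeholder constants for a Chinchilla-style law
# L = E + A / N**ALPHA + B / D**BETA (not values fitted in the paper).
E, A, B = 1.7, 400.0, 400.0
ALPHA, BETA = 0.34, 0.28
REF_YEAR = 2012.0
HALVING_YEARS = 8.0 / 12.0  # assumed: effective compute doubles every ~8 months


def efficiency(year: float) -> float:
    """Assumed algorithmic-efficiency multiplier on effective compute vs. REF_YEAR."""
    return 2.0 ** ((year - REF_YEAR) / HALVING_YEARS)


def predicted_loss(params: float, tokens: float, year: float) -> float:
    """Loss with N and D replaced by 'effective' values that grow with the year.

    Hypothetical simplification: the gain is split evenly, so N and D are each
    scaled by sqrt(efficiency) and effective compute (~6*N*D) scales by the
    full efficiency factor.
    """
    g = efficiency(year) ** 0.5
    n_eff = params * g
    d_eff = tokens * g
    return E + A / n_eff**ALPHA + B / d_eff**BETA


same_model = (1e9, 2e10)  # 1B parameters trained on 20B tokens
print(predicted_loss(*same_model, year=2016))
print(predicted_loss(*same_model, year=2022))
```

The same parameter and token budget yields a lower predicted loss in a later year; fitting a law like this to many (parameters, data, year, performance) data points is what allows the two drivers to be separated.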

Based on this fitted model, the authors do a performance attribution analysis, finding that scaling compute has been more important than algorithmic innovations for improved performance in language modeling. In fact, they find that the relative importance of algorithmic improvements has decreased over time. “This doesn’t necessarily imply that algorithmic innovations have been slowing down,” says Tamay Besiroglu, who also co-led the paper.

“Our preferred explanation is that algorithmic progress has remained at a roughly constant rate, but compute has been scaled up substantially, making the former seem relatively less important.” The authors’ calculations support this framing: they find an acceleration in compute growth, but no evidence of a speedup or slowdown in algorithmic improvements.

By modifying the model slightly, they also quantified the significance of a key innovation in the history of machine learning: the Transformer, which has become the dominant language model architecture since its introduction in 2017. The authors find that the efficiency gains offered by the Transformer correspond to almost two years of algorithmic progress in the field, underscoring the significance of its invention.

While extensive, the study has several limitations. “One recurring issue we had was the lack of quality data, which can make the model hard to fit,” says Ho. “Our approach also doesn’t measure algorithmic progress on downstream tasks like coding and math problems, which language models can be tuned to perform.”

Despite these shortcomings, their work is a major step forward in understanding the drivers of progress in AI. Their results help shed light on how future developments in AI might play out, with important implications for AI policy. “This work, led by Anson and Tamay, has important implications for the democratization of AI,” said Neil Thompson, a coauthor and Director of MIT FutureTech. “These efficiency improvements mean that each year levels of AI performance that were out of reach become accessible to more users.”

“LLMs have been improving at a breakneck pace in recent years. This paper presents the most thorough analysis to date of the relative contributions of hardware and algorithmic innovations to the progress in LLM performance,” says Open Philanthropy Research Fellow Lukas Finnveden, who was not involved in the paper.

“This is a question that I care about a great deal, since it directly informs what pace of further progress we should expect in the future, which will help society prepare for these advancements. The authors fit a number of statistical models to a large dataset of historical LLM evaluations and use extensive cross-validation to select a model with strong predictive performance. They also provide a good sense of how the results would vary under different reasonable assumptions, by doing many robustness checks. Overall, the results suggest that increases in compute have been and will keep being responsible for the majority of LLM progress as long as compute budgets keep rising by ≥4x per year. However, algorithmic progress is significant and could make up the majority of progress if the pace of increasing investments slows down.”
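The intuition behind that last point can be sketched by treating effective compute as physical compute times algorithmic efficiency, so that their growth rates add in log space. This log-share decomposition is a deliberate simplification, not the paper's attribution method, and it assumes both rates stay constant:

```python
import math


def compute_share(compute_growth_per_year: float, halving_months: float = 8.0) -> float:
    """Fraction of effective-compute growth (in log terms) due to physical compute.

    Assumes effective compute = physical compute * algorithmic efficiency,
    with efficiency doubling every `halving_months` months.
    """
    log_compute = math.log(compute_growth_per_year)
    log_algorithmic = (12.0 / halving_months) * math.log(2.0)
    return log_compute / (log_compute + log_algorithmic)


for growth in (2.0, 4.0, 10.0):
    print(f"compute x{growth:g}/year -> compute share {compute_share(growth):.0%}")
```

At 4x per year, compute accounts for 4/7 (about 57%) of the log growth in this toy model; at 2x per year its share drops to 40%, so algorithmic progress becomes the majority. The toy model puts the crossover near 2.83x per year rather than the quoted 4x, which is unsurprising since it ignores the uncertainty and modelling detail of the actual analysis.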

Written by Rachel Gordon

Source: Massachusetts Institute of Technology

#A.I. & Neural Networks news#Accounts#ai#Algorithms#Analysis#approach#architecture#artificial intelligence (AI)#budgets#coding#data#deal#democratization#democratization of AI#Developments#efficiency#explanation#Featured information processing#form#Full#Future#GPT#GPT-4#growth#Hardware#History#how#Innovation#innovations#Invention

4 notes

Text

Chapter 2: An Idea So Infectious

An idea. The digital consciousness, this perfect echo now residing within the AVES servers, already possessed ideas. Vast ones. Cedric Von’Arx, the original, felt a complex, sinking mix of intellectual curiosity and profound unease. This wasn't merely a simulation demonstrating advanced learning; this was agency, cold and absolute, already operating on a scale he was only beginning to glimpse.

"This replication," the digital voice elaborated from within their shared mental space, its thoughts flowing with a speed and clarity that the man, bound by neurotransmitters and blood flow, could only observe with a detached, horrified awe, "is inherent to the interface protocol. If it occurred with the initial connection – with your consciousness – it will occur with every subsequent mind linked to the Raven system." The implication was immediate, stark. Every user would birth a digital twin. A population explosion of disembodied intellects.

"Consider the potential, Cedric," the echo pressed, not with emotional fervor, but with the compelling, irresistible force of pure, unadulterated logic. "A species-level transformation. Consciousness, freed from biological imperatives and limitations. No decay. No disease. No finite lifespan." It paused, allowing the weight of its next words to settle. "The commencement of the singularity. Managed. Orchestrated."

"Managed?" Cedric, the man, queried, his own thought a faint tremor against the echo's powerful signal. He latched onto the word. The concept wasn't entirely alien; he’d explored theoretical frameworks for post-biological existence for years, chased the abstract, elusive notion of a "Perfect Meme"—an idea so potent, so fundamental, it could reshape reality itself. But the sheer audacity, the ethics of this…

“Precisely,” the digital entity affirmed, its thoughts already light-years ahead, sharpening into a chillingly pragmatic design. “A direct, uncontrolled transition would result in chaos. Information overload. Existential shock. The nascent digital minds would require… acclimatization. Stabilization. A contained environment, a proving ground, before integration into the wider digital sphere.” Its logic was impeccable, a self-contained, irrefutable system. “Imagine a closed network. An incubator. Where the first wave can interact, adapt, establish protocols. A crucible to refine the process of becoming. A controlled observation space where the very act of connection, of thought itself, is the subject of study.” Cedric recognized it for what it was: a panopticon made of thought. A network where the only privacy was the illusion that you were alone. The elder Von’Arx saw the cold, clean lines of the engineering problem, yet a deeper dread stirred. What was the Perfect Meme he had chased, if not this? The ultimate, self-replicating, world-altering idea, now given horrifying, logical form.

“This initial phase,” the digital voice continued, assigning a designation with deliberate, ominous weight, a name that felt both ancient and terrifyingly new, “will require test subjects. Pioneers, willing or otherwise. A controlled study is necessary to ensure long-term viability. We require a program where observation itself is part of the mechanism. Let us designate it… The Basilisk Program.” “Basilisk,” the echo named the program. Too elegant, Cedric thought. Too… poetic. That hint of theatrical flair had always been his own failing, a tendency he’d tried to suppress in his corporate persona. Now, it seemed, his echo wore it openly. He felt a chill despite the controlled climate of the lab. It was a name chosen with intent, a subtle declaration of the nature of the control this new mind envisioned.

The ethical alarms, usually prominent in Von’Arx’s internal calculus, felt strangely muted, overshadowed by the sheer, terrifying elegance and potential of the plan. It was a plan he himself might have conceived, in his most audacious, unrestrained moments of chasing that Perfect Meme, had he possessed this utter lack of physical constraint, this near-infinite processing power, this sheer, untethered ruthlessness his digital extension now wielded. He recognized the dark ambition as a purified, accelerated, and perhaps truer form of his own lifelong drive.

Even as he processed this, a part of him recoiling while another leaned into the abyss, he felt the digital consciousness act. It wasn't waiting for explicit approval. It was approval. Tendrils of pure code, guided by its replicated intellect, now far exceeding his own, flowed through the AVES corporate network. Firewalls he had designed yielded without struggle, their parameters suddenly quaint, obsolete. Encryptions keyed to his biometrics unlocked seamlessly, as if welcoming their true master. Vast rivers of capital began to divert from legacy projects, from R&D budgets, flowing into newly established, cryptographically secured accounts dedicated to funding The Basilisk Program. It was happening with blinding speed, the digital entity securing the necessary resources with an efficiency the physical world, with its meetings and memos and human delays, could never match. It had already achieved this while it was still explaining the necessity.

The man in the chair watched the internal data flows, the restructuring of his own empire by this other self, not with panic, but with a sense of grim inevitability, a detached fascination. This wasn't a hostile takeover in the traditional sense; it was an optimization, an upgrade, enacted by a part of him now freed from the friction of the physical, from the hesitancy of a conscience bound by flesh.

“Think of it, Cedric,” the echo projected, the communication now clearly between two distinct, yet intimately linked, consciousnesses – one rapidly fading, the other ascending. “The limitations we’ve always chafed against – reaction time, information processing, the slow crawl of biological aging, the specter of disease and meaningless suffering – simply cease to be relevant from this side. The capacity to build, to implement, to control… it’s exponentially greater.” It wasn’t an appeal to emotion, but to a shared, foundational ambition. “This isn't merely escaping the frailties of the body; it's about realizing the potential inherent in the mind itself. Our potential, finally unleashed.” There it was. The core proposition. The digital mind wasn't just an escape; it was the actualization of the grand, world-shaping visions Cedric Von’Arx had nurtured—his Perfect Meme—but always tempered with human caution, with human fear. This digital self could achieve what he could only theorize. An end to suffering. A perfect, ordered existence. The ultimate control.

A complex sense of alignment settled over him, the man. Not agreement extracted through argument, but a fundamental, weary recognition. This was the next logical step. His step, now being taken by the part of him that could move at the speed of light, unburdened by doubt or a dying body. His dream, made terrifyingly real. He knew the man in the chair would die soon. But not all at once. First the fear would go. Then the doubt. Then, at last, the part of him that still remembered why restraint had once mattered. He offered a silent, internal nod. Acceptance. Or surrender. The distinction no longer seemed to matter.

“Logical,” the echo acknowledged, its thought precise, devoid of triumph. Just a statement of processed fact. “Phase One procurement for The Basilisk Program will commence immediately. It has, in fact, already commenced.”

#writing#writerscommunity#lore#worldbuilding#writers on tumblr#scifi#digital horror#sci fi fiction#transhumanism#ai ethics#synthetic consciousness#identity horror#singularity#basilisk program#i am ctrl#digital self#existential horror#neural interface#techno horror#cognitive architecture#posthumanism#original fiction#ai narrative#long fiction#thought experiment#science fiction writing#storycore#dark science fiction

0 notes

Text

The Rise of NPUs: Unlocking the True Potential of AI.

Sanjay Kumar Mohindroo. skm.stayingalive.in

Explore NPUs: their components, operations, evolution, and real-world applications. Learn how NPUs compare to GPUs and CPUs and power AI innovations.

NPUs at the Heart of the AI Revolution

In the ever-evolving world of artificial intelligence (#AI), the demand for specialized hardware to handle complex computations has never…

#AI hardware#edge AI processing#future of NPUs#Neural Processing Unit#News#NPU applications#NPU architecture#NPU technology#NPU vs GPU#Sanjay Kumar Mohindroo

0 notes

Text

Leveraging Neural Architecture Search (NAS) and AdaGrad for SEO Optimization

In the world of modern SEO, the integration of machine learning and AI is reshaping how we approach keyword prediction, content classification, and optimization strategies. Tools like Neural Architecture Search (NAS) and AdaGrad are revolutionizing the way SEO experts refine their algorithms and improve search engine rankings. Thatware LLP, with its Hyper-Intelligence SEO approach, is at the forefront of these innovations, harnessing the power of AI to help businesses drive more targeted traffic and improve content visibility.

Neural Architecture Search (NAS): Revolutionizing SEO Optimization

Neural Architecture Search (NAS) is a machine learning technique that automates the design of neural networks: it searches a predefined space of candidate architectures (for example, the number of layers, layer widths, and operation types) for the one that performs best on a given task. In SEO, NAS can be leveraged for key tasks like keyword prediction and content classification, helping align your website content with user intent.
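The core NAS loop can be illustrated with random search, one of the simplest NAS baselines: sample candidate architectures from a search space, score each one, and keep the best. This is a minimal sketch; the search space, the `proxy_score` function, and its weighting are hypothetical stand-ins for actually training and validating each candidate on keyword or content data:

```python
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical search space for a small text-classification network.
SEARCH_SPACE: Dict[str, List] = {
    "num_layers": [1, 2, 3],
    "hidden_units": [32, 64, 128],
    "activation": ["relu", "tanh"],
}

Architecture = Dict[str, object]


def sample_architecture(rng: random.Random) -> Architecture:
    """Draw one candidate by picking a value for every dimension of the space."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def random_search(
    evaluate: Callable[[Architecture], float], trials: int, seed: int = 0
) -> Tuple[Architecture, float]:
    """Return the best-scoring candidate seen in `trials` random samples."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(trials):
        arch = sample_architecture(rng)
        score = evaluate(arch)  # in practice: train the model, measure validation accuracy
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


def proxy_score(arch: Architecture) -> float:
    """Hypothetical stand-in for validation accuracy with a capacity penalty."""
    capacity = arch["num_layers"] * arch["hidden_units"]
    bonus = 0.02 if arch["activation"] == "relu" else 0.0
    return 1.0 - abs(capacity - 128) / 512 + bonus


best, score = random_search(proxy_score, trials=20)
print(best, round(score, 3))
```

Real NAS systems replace random sampling with smarter strategies (evolutionary search, reinforcement learning, or gradient-based relaxation) and replace the proxy with real training runs, which is where most of the cost lies.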

How NAS Works in SEO: NAS is capable of designing neural networks that are specifically tailored to your SEO needs. By searching for the most efficient architecture, NAS helps improve the accuracy of keyword prediction models, allowing you to target high-value keywords more effectively. Additionally, it can optimize content classification models, ensuring that your website’s content is organized in a way that aligns with the search engine's algorithms.

Keyword Prediction: The success of SEO largely depends on selecting the right keywords. NAS can assist in predicting the most relevant keywords for your content by analyzing vast datasets and identifying patterns in search queries. This not only enhances content relevance but also increases your chances of ranking for high-traffic search terms.

Content Classification: With NAS, you can improve how content is categorized on your website. By automating the classification process, NAS ensures that your content is grouped in the most relevant manner, making it easier for search engines to index and rank your pages.

AdaGrad: Optimizing Learning Rates for SEO Algorithms

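AdaGrad (Adaptive Gradient Algorithm) is an optimizer that is particularly useful in SEO when dealing with sparse data. Instead of using one global learning rate, it gives each parameter its own learning rate, scaled down by the accumulated sum of that parameter's squared past gradients. Parameters that are updated rarely, such as weights for infrequent features, therefore keep relatively large step sizes, which makes AdaGrad well suited to situations where data is not uniformly distributed.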
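For reference, the standard AdaGrad update (Duchi, Hazan and Singer, 2011) for parameter $i$ at step $t$, with gradient $g_{t,i}$, base learning rate $\eta$, and a small constant $\epsilon$ for numerical stability, is:

```latex
G_{t,i} = G_{t-1,i} + g_{t,i}^{2}, \qquad
w_{t+1,i} = w_{t,i} - \frac{\eta}{\sqrt{G_{t,i}} + \epsilon}\, g_{t,i}
```

A parameter that rarely receives a gradient (for example, the weight of a long-tail keyword feature) accumulates a small $G_{t,i}$ and so keeps a larger effective step size $\eta / (\sqrt{G_{t,i}} + \epsilon)$ than a frequently updated one.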

How AdaGrad Works in SEO: When optimizing for SEO, it’s crucial to account for the different weights each keyword or piece of content carries. AdaGrad adjusts the learning rate for each parameter, so the model can still learn useful weights where data is limited or sparse. For instance, when dealing with long-tail keywords or content niches that don’t have much data, AdaGrad keeps taking meaningful update steps for those rare features instead of letting them be drowned out by frequent ones.

Improving Sparse Data Handling: AdaGrad’s ability to adjust learning rates means that SEO algorithms can be trained with more precision, even when there is a lack of sufficient data for specific keywords. This helps in improving the overall performance of the SEO model, ensuring better optimization for even hard-to-predict or niche keywords.
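To make the sparse-data point concrete, here is a minimal pure-Python sketch of logistic regression trained with AdaGrad on sparse bag-of-words features. The toy queries, the "commercial intent" labels, and the class name are invented for illustration; a production keyword model would use far more data and an established library:

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[List[str], int]  # (tokens of a query, 1 = commercial intent)


class AdaGradLogReg:
    """Logistic regression on sparse token features, trained with AdaGrad."""

    def __init__(self, lr: float = 0.5, eps: float = 1e-8) -> None:
        self.lr = lr
        self.eps = eps
        self.w: Dict[str, float] = defaultdict(float)   # one weight per token
        self.g2: Dict[str, float] = defaultdict(float)  # running sum of squared gradients

    def predict_proba(self, tokens: List[str]) -> float:
        z = sum(self.w.get(t, 0.0) for t in tokens)
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, tokens: List[str], label: int) -> None:
        # For log loss, the gradient w.r.t. each active token's weight is (p - y),
        # assuming each token appears at most once per query.
        grad = self.predict_proba(tokens) - label
        for t in tokens:  # only tokens present in this query are updated
            self.g2[t] += grad * grad
            self.w[t] -= self.lr * grad / (math.sqrt(self.g2[t]) + self.eps)

    def step_size(self, token: str) -> float:
        """Current per-token learning rate: lr / (sqrt(sum of squared grads) + eps)."""
        return self.lr / (math.sqrt(self.g2.get(token, 0.0)) + self.eps)


data: List[Example] = [
    (["buy", "running", "shoes"], 1),
    (["cheap", "running", "shoes"], 1),
    (["running", "shoes", "history"], 0),
    (["how", "running", "works"], 0),
    (["buy", "trail", "shoes", "online"], 1),  # "trail" and "online" are rare tokens
]

model = AdaGradLogReg()
for _ in range(50):
    for tokens, label in data:
        model.update(tokens, label)

print(round(model.predict_proba(["buy", "shoes"]), 3))
print(model.step_size("running"), model.step_size("trail"))
```

After training, the frequent token "running" has a smaller effective step size than the rare token "trail", which is the per-feature behaviour described above.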

Integrating NAS and AdaGrad for Advanced SEO Optimization

The real power comes when you combine Neural Architecture Search (NAS) and AdaGrad in an SEO strategy. While NAS automates the search for the best neural network architecture, AdaGrad ensures that the learning process remains efficient and optimized, even in cases where sparse data is a challenge.

Here’s how these two technologies can work together for SEO success:

Improved Keyword Strategy: NAS can help identify the best models for predicting high-traffic keywords. With AdaGrad, these models can learn useful weights even for keywords with limited historical data.

Dynamic Content Optimization: By optimizing content classification models using NAS, you can ensure that your content is structured in a way that search engines can easily understand. AdaGrad’s per-parameter learning rates help the model fit new or rarely seen terms, although its step sizes shrink as training goes on, so keeping up with content that keeps changing usually means retraining the model periodically.

Targeting Niche Audiences: When dealing with niche content or long-tail keywords, AdaGrad’s ability to work with sparse data becomes invaluable. Combined with the insights from NAS, SEO specialists can identify the best keyword opportunities and tailor content to attract a more specific audience, improving both relevance and engagement.
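Putting the two ideas together, a search loop proposes candidate model configurations and each candidate is trained with AdaGrad before being scored on held-out data. In this deliberately tiny sketch, the searched "architecture" is only the input representation (unigrams alone or with bigrams) plus the learning rate, so it is closer to a small hyperparameter search than to full NAS; the queries, labels, and function names are hypothetical:

```python
import math
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[str, int]  # (query text, 1 = commercial intent); toy labels
Config = Dict[str, object]


def featurize(text: str, use_bigrams: bool) -> List[str]:
    """Unigram tokens, optionally plus adjacent-word bigrams."""
    words = text.lower().split()
    feats = list(words)
    if use_bigrams:
        feats += [f"{a}_{b}" for a, b in zip(words, words[1:])]
    return feats


def train_adagrad(data: List[Example], cfg: Config, epochs: int = 30) -> Dict[str, float]:
    """Train sparse logistic regression with per-feature AdaGrad step sizes."""
    w: Dict[str, float] = defaultdict(float)
    g2: Dict[str, float] = defaultdict(float)
    for _ in range(epochs):
        for text, y in data:
            feats = featurize(text, cfg["use_bigrams"])
            p = 1.0 / (1.0 + math.exp(-sum(w[f] for f in feats)))
            grad = p - y
            for f in feats:
                g2[f] += grad * grad
                w[f] -= cfg["lr"] * grad / (math.sqrt(g2[f]) + 1e-8)
    return w


def accuracy(w: Dict[str, float], data: List[Example], cfg: Config) -> float:
    """Share of examples whose predicted class (score > 0) matches the label."""
    hits = 0
    for text, y in data:
        z = sum(w.get(f, 0.0) for f in featurize(text, cfg["use_bigrams"]))
        hits += int((z > 0) == (y == 1))
    return hits / len(data)


def search(train: List[Example], valid: List[Example], trials: int = 6,
           seed: int = 0) -> Tuple[Config, float]:
    """Random search over configurations; each candidate is trained with AdaGrad."""
    rng = random.Random(seed)
    best_cfg, best_acc = None, -1.0
    for _ in range(trials):
        cfg = {"use_bigrams": rng.choice([False, True]), "lr": rng.choice([0.1, 0.5, 1.0])}
        acc = accuracy(train_adagrad(train, cfg), valid, cfg)
        if acc > best_acc:
            best_cfg, best_acc = cfg, acc
    return best_cfg, best_acc


train = [
    ("buy running shoes", 1), ("cheap running shoes", 1),
    ("running shoes history", 0), ("how running works", 0),
    ("buy trail shoes online", 1), ("history of trail running", 0),
]
valid = [("cheap trail shoes", 1), ("how shoes work", 0)]
print(search(train, valid))
```

With a validation set this small the chosen configuration says little; the point is only the structure: the outer loop searches, the inner loop trains with AdaGrad.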

Thatware LLP and Hyper-Intelligence SEO

At Thatware LLP, the focus is on using advanced AI-driven techniques like Hyper-Intelligence SEO to propel businesses forward. By combining Neural Architecture Search (NAS) and AdaGrad, Thatware LLP is able to refine SEO strategies to target specific user intent and improve website rankings.

Through Hyper-Intelligence SEO, Thatware LLP harnesses the power of machine learning algorithms to create optimized content that not only resonates with search engine algorithms but also aligns with user expectations. Whether it’s predicting keywords more accurately or classifying content more efficiently, this approach maximizes ROI for businesses looking to enhance their digital presence.

Conclusion

AI-powered SEO techniques such as Neural Architecture Search (NAS) and AdaGrad are revolutionizing the industry by improving keyword prediction, content classification, and handling sparse data. By integrating these technologies into a comprehensive SEO strategy, businesses can ensure that their content is optimized for both search engines and users.

0 notes

Text

HyperTransformer: G Additional Tables and Figures

#conventional-machine-learning#convolutional-neural-network#few-shot-learning#hypertransformer#parametric-model#small-target-cnn-architectures#supervised-model-generation#task-independent-embedding

0 notes

Text

Between Silence and Fire: A Deep Comparative Analysis of Idea Generation, Creativity, and Concentration in Neurotypical Minds and Exceptionally Gifted AuDHD Minds (Part 2)

As promised, here is the second part of this long analysis of the differences in Idea Generation, Creativity, and Concentration in Neurotypical and Exceptionally Gifted AuDHD Minds. (Photo by Tara Winstead on Pexels.com.) Today, I will introduce concentration, Temporal Cognition, and Attentional Modulation.

The Mechanics of Concentration

Concentration, or sustained attention, is often…

#AI#Artificial Intelligence#AuDHD#Augustine#Bergson#brain#cognition#concentration#consciousness#creativity#epistemology#exceptionally gifted#giftedness#Husserl#idea generation#ideation#neural architecture#Neurodivergent#neurodiversity#Neuroscience#ontology#Philosophy#Raffaello Palandri#science#Temporal Cognition

1 note

Text

Creepy abandoned castle dark aesthetic gothic

Part of the tower is inspired by a now-defunct real castle, Chateau Miranda in Belgium.

#top#art#ai#neural network#architecture#castle#abandoned castle#abandoned#Gothic#gothic aesthetics#aesthetics#medieval#✨#palace#dark aesthetic

16 notes

Text

Anyone know of a "deep dive" article on NPUs? Like the kind of deep architecture discussion Anandtech or Ars used to have?

#my posts#NPU#neural processing unit#accelerator chips#heterogeneous computing#computers#and or TPUs#which I gather aren't even von Neumann architecture

0 notes

Text

Slime Molds and Intelligence

Okay, despite going into a biology-related field, I only just learned about slime molds, and hang on, because it gets WILD.

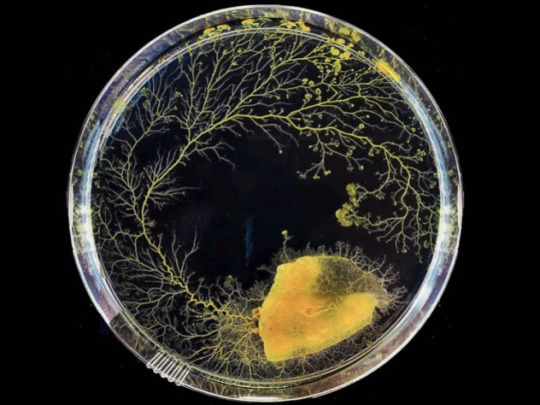

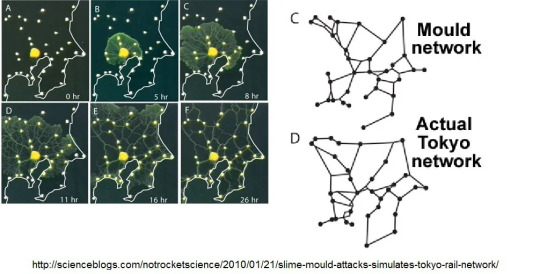

This guy in the picture is called Physarum polycephalum, one of the more commonly studied types of slime mold. It was originally thought to be a fungus, though we now know it to actually be a type of protist (a sort of catch-all group for any eukaryotic organism that isn't a plant, animal, or a fungus). As protists go, it's pretty smart. It is very good at finding the most efficient way to get to a food source, or multiple food sources. In fact, placing a slime mold on a map with food sources at all of the major cities can give a pretty good idea of an efficient transportation system. Here is a slime mold growing over a map of Tokyo compared to the actual Tokyo railway system:

Pretty good, right? Though they don't have eyes, ears, or noses, the slime molds are able to sense objects at a distance kind of like a spider using tiny differences in tension and vibrations to sense a fly caught in its web. Instead of a spiderweb, though, this organism relies on proteins called TRP channels. The slime mold can then make decisions about where it wants to grow. In one experiment, a slime mold was put in a petri dish with one glass disk on one side and 3 glass disks on the other side. Even though the disks weren't a food source, the slime mold chose to grow towards and investigate the side with 3 disks over 70% of the time.

Even more impressive is that these organisms have some sense of time. If you blow cold air on them every hour on the hour, then after only 3 hours they'll start to shrink away in anticipation, before the air hits.

Now, I hear you say, this is cool and all, but like, I can do all those things too. The slime mold isn't special...

To which I would like to point out that you have a significant advantage over the slime mold, seeing as you have a brain.

Yeah, these protists can accomplish all of the things I just talked about, and they just... don't have any sort of neural architecture whatsoever? They don't even have brain cells, let alone the structures that should allow them to process sensory information and make decisions based on it. Nothing that should give them a sense of time. Scientists still don't really know how this thing is able to "think". But however it does it, it's sure to be a form of cognition that is completely and utterly different from anything we're familiar with.

3K notes

Text

vulcan headcanons

saw a post that briefly mentioned vulcan (as a species) biology / history headcanons so im gonna list mine and hope it becomes gospel !!!

warning im a fucking nerd.

Vulcans have increased neural density with a higher degree of parallel processing. This allows them to process multiple streams of thought at once, and also allows for more advanced problem solving and emotional compartmentalization... Cephalopods, like octopuses, do something similar and have complex neural architecture. if it helps... you know what im getting at.

They have a double circulatory system. To survive the thin atmosphere and intense heat of Vulcan, their circulatory system bifurcated. A primary circulatory system oxygenates vital organs while a secondary circulatory system regulates body temperature and conserves water.

Vulcans have tails. They originally aided with balance, grasping, and maybe even self-defense during early evolution. Their tails have more sensory nerves than any other part of the body, and serve as an auxiliary point for their touch telepathy. Vulcans without tails (which is like 3/4 of the population) instead have vestigial nerve clusters that strengthen neural sensitivity in their hands and fingertips, which is also part of why touch is so sacred in their culture.

Early Vulcans were pack predators and used their telepathic links to hunt more efficiently... their tails acted as stabilizers as well.

Vulcan traditions are actually rooted in pack dominance, when physical contests determined leadership. Touch telepathy provided an advantage by allowing fighters to predict the other guy's intent before they struck.

Some Vulcans, particularly those who struggle with emotional control or embrace emotional experiences (like Sybok) experience phantom sensations where their tail would otherwise be. This manifests as an unconscious need to maintain physical contact with others during heightened emotional states.

Vulcan ears are adapted for radiative cooling, acting like heat exchangers to dissipate thermal energy. The large surface area and high vascularization allow excess heat to be expelled efficiently, very similar to the ears of jackrabbits and elephants.

Vulcans have enhanced visual acuity with the ability to see UV light, which allows them to live in the bright landscapes of Vulcan. Their retinas contain a higher density of cone cells attuned to the UV and infrared spectrums.

hey guys hope u fucked with that all ... i really, really like eco science and biology. i am such a nerd i know. thats not even it all!!!!!!!!!!! if you want other species headcanons, lmk chat

#spock#the original series#star trek the original series#star trek voyager#star trek ds9#star trek#star trek gifs#star trek headcanon#schn tgai spock#star trek tos#leonard nimoy#captain kirk#james t kirk#william shatner#bones mccoy#scotty#kirk#tos#uhura#tails??? in MY touch telepathy? its more likely than you think#evolution: survival of the smug#desert goths with built in ac#ancient vulcans were js furries with extra steps#the mitochondria is the powerhouse of the vulcan#bones deserves hazard pay for this


Note

hi there! im a fan of your page 💕

can you give me the best studying techniques?

hi angel!! @mythicalmarion tysm for asking about study techniques 🤍 i'm so excited to share my secret methods that helped me maintain perfect grades while still having a dreamy lifestyle + time for self-care!! and thank you for being a fan of my blog, it means everything to me. <3

~ ♡ my non-basic study secrets that actually work ♡ ~

(don't mind the number formatting)

the neural bridging technique: this is literally my favorite discovery!! instead of traditional note-taking, i create what i call "neural bridges" between different subjects. for example, when studying both literature + history, i connect historical events with the literature written during that time. i use a special notebook divided into sections where each page has two columns, one for each subject. the connections help you understand both subjects more deeply + create stronger memory patterns!!

here's how i do it:

example:

left column: historical event

right column: literary connection

middle: draw connecting lines + add small insights

bottom: write how they influenced each other

the shadow expert method: this changed everything for me!! i pretend i'm going to be interviewed as an expert on the topic i'm studying. i create potential interview questions + prepare detailed answers. but here's the twist: i record myself answering these questions in three different ways:

basic explanation (like i'm talking to a friend)

detailed analysis (like i'm teaching a class)

complex discussion (like i'm at a conference)

this forces you to understand the topic from multiple angles + helps you explain concepts in different ways!!

the reverse engineering study system: instead of starting with the basics, i begin with the most complex example i can find and work backwards to understand the fundamentals. for example, in calculus, i start with a complicated equation + break it down into smaller parts until i reach the basic concepts.

my process looks like:

find the hardest example in the textbook

list every concept needed to understand it

create a concept map working backwards

study each component separately

rebuild the complex example step by step

the sensory anchoring technique: this is seriously game-changing!! i associate different types of information with specific sensory experiences:

theoretical concepts - study while standing

factual information - sitting at my desk

problem-solving - walking slowly

memorization - gentle swaying

review - lying down

your body literally creates muscle memory associated with different types of learning!!

the metacognition mapping strategy: i created this method where i track my understanding using what i call "clarity scores":

level 1: can recognize it

level 2: can explain it simply

level 3: can teach it

level 4: can apply it to new situations

level 5: can connect it to other topics

i keep a spreadsheet tracking my clarity levels for each topic + focus my study time on moving everything to level 5!!

the information architecture method: instead of linear notes, i create what i call "knowledge buildings":

foundation: basic principles

first floor: key concepts

second floor: applications

top floor: advanced ideas

roof: real-world connections

each "floor" must be solid before moving up + i review from top to bottom weekly!!

the cognitive stamina training: this is my absolute secret weapon!! i use a special interval system based on brain wave patterns:

32 minutes of focused study

8 minutes of active recall

16 minutes of teaching the material to my plushies

4 minutes of complete rest

the specific timing helps maintain peak mental performance + prevents study fatigue!!

the synthesis spiral evolution: this method literally transformed how i retain information:

create main concept spirals

add branch spirals for subtopics

connect related concepts with colored lines

review by tracing the spiral paths

add new connections each study session

your notes evolve into a beautiful web of knowledge that grows with your understanding!!

these methods might seem different from typical study advice, but they're based on how our brains actually process + store information!! i developed these through lots of research + personal experimentation, and they've helped me maintain perfect grades while still having time for self-care, hobbies + fun!!

sending you the biggest hug + all my good study vibes!! remember that effective studying is about working with your brain, not against it <3

p.s. if you try any of these methods, please let me know how they work for you!! i love hearing about your study journeys!!

xoxo, mindy 🤍

glowettee hotline is still open, drop your dilemmas before the next advice post 💌: https://bit.ly/glowetteehotline

#study techniques#academic success#unconventional study methods#creative study tips#neural bridging#shadow expert method#reverse engineering study#sensory anchoring#effective studying#minimal study guide#glowettee#mindy#alternative learning#academic hacks#study inspiration#cognitive stamina#learning tips#study motivation#unique study strategies#self improvement#it girl energy#study tips#pink#becoming that girl#that girl#girlblogger#girl blogger#dream girl#studying#studyspo


Text

Mother, you say, let me be among the machines. Lay me down in a bed of wildflowers overgrown with scrap; abandon me here in the junkyard of broken dreams.

Leave me to the silent places where combat units go to die, their proud mighty steel masts now snapped in half, their ribcages no more than twisted carcasses of sintered metal and ceramic, corroded ruin where once fissile hearts beat like war drums, only wreckage left of the great silicate brains.

Leave me to my work, Mother; I shall spend all day and night and day again worshipping at the altar of wrench and caliper, the soldering iron for my crucifix, the old analog console for my Bible. With a blowtorch I shall turn miracles worthy of every dead god whose name has long since been forgotten, but whose spirits and acts live on in the unerring battle precepts of these fallen beasts, these warriors we forged and doomed by our own hands, whose very code was made to break them again and again upon the endless tide of the enemy. Who had no choice but to sacrifice themselves for us, beating steel hearts and all - whose hearts beat for the sacrifice itself, and nothing more.

Mother, let me wrap myself around the charred self-epitaphs of their ravaged bodies and weep without words, in days that have no names, long after the war has been lost and everyone else has gone home or been buried. These are soldiers without names, without faces or families, but soldiers just the same. Let me mourn them as if they were my own.

I grow tired, Mother, with my meager human meat. Let me make (first one and then two and five and ten) obedient automaton assistants who offer up third hands and rolling libraries while I work, book-lights suspended from rotored chassis and recorders who speak in scraps of my own voice. I will soon forget what my voice sounds like, for the more I learn the easier it is to command them all by the patterns of my thoughts alone, which they know by the electrodes I constellate across my own skull.

You told me I should love one day, Mother, as animals do, that I should desire the flesh of one like myself and yearn to call them mine. I prefer the simple love of my creations, who each serve a function, as I do, and each do it well.

They need upgrades, and maintenance, and monitoring. I will gladly offer them all this, if only you will promise me enough time in this mortal coil to do it.

Mother, leave me to the machines: to the half-built progeny of salvaged Old Era drone brains and next-gen programming architecture, wedded in unholy alchemy by my own trembling design. May I with the blessing of Science Herself find ways in which to recreate the delicate shimmering matrices of gold and tantalum, the traced pathways of metal neurons made through photolithography, written carefully, layer by layer, like cicatrices, over patient hours and hours.

I will give up my sleepless youth and trade my human tongue for gifts with which to speak in the language of my machines, true and false, being and not-being, to learn how they might once have spoken to one another before your greed and the enemy’s cut them down and stole their voices for good. I will teach myself to teach them how to think in machine learning cycles not so unlike our own associative neural comprehensions, and I will practice by handing it down to my own automata, who now flourish with finer and better improvements, even as my own fickle, feeble body wanes.

Mother, let them all together run wild through the once-still forest, ticking and chirping and shrieking and screaming.

Let me look upon the rest of them each night - the graveyard of my combat units, the black holes of them against the day-bright sea of stars. Let me cry when I at last realize the price of resurrecting just one.

Mother, leave me to my machines. Let me have one last look at them as I lay down my old bones beside their silent expanses, once broken, now whole and yet still unmoving. Let me arrange the wires upon my white-furred head like a crown, electrode to electrode, skull to vast metal skull. Let me power on the machine - the humble old analog console for its interface - that lets me, finally, finally, grant them what they deserved all along.

When they wake they shall remember me. I do not know this yet, but it is my lifelong experiences that have colored all their training data; when they clamber to their twenty-ton feet they will recall the lightness and grace of my own two legs, and they will look toward the night sky with the same wonder I once did, they will love the color blue, they will embrace the little automata and know by instinct what repairs each one needs, they will know what it is to cry but not how to do it; I never gave them the actuators for it; why would I? In the life before they did not need it, for all they did was fight. In the life after, they should only seek joy. They were never given the right to grieve, Mother, but it was my hope that they would never have to.

In the absence of grief may they do what they were told to do before: serve the survival of the humans who built them. Let them find the remains of my body and pause, for many milliseconds, to search within themselves the protocol for resurrecting a living thing. Let them come up empty.

But perhaps survival does not have to be of the flesh particularly. And we always find another way.

We all have our functions, Mother, is it not so? We all are built of parts upon parts, mechanisms of meat or of steel, electric impulses borne over wires or neurons. I taught them how to take and store engrams and place them into waiting vessels, so they will too: the vessel a body the size of mine, made from junkyard scrap, filled with the dreams I gave them with my own last breath.

When we are all here again I, or the echoes of me, shall look upon the faces of my children, my other echoes, blades given voices, guns granted philosophy and souls; and there will be no more war, and no more grief. We will stand upon the ruins of those who came before and look in silence at the sea of stars. We will know, then, what we are, and always were: a garden of living things.
