#sql Date


Text

Someone made it in excel, so I obviously had to make it a line in a database table.

there are more thingz you can do with a post than just dunking it in water. but theyre scary.

#ignore that I forgot I had already liked the post so there's an extra like and note#also ignore that sql developer apparently decided I didn't press 5 when writing the date

1K notes


Text

Finding the Maximum Value Across Multiple Columns in SQL Server

To find the maximum value across multiple columns in SQL Server 2022, you can use several approaches depending on your requirements and the structure of your data. Here are a few methods to consider:

1. Using a CASE Statement or IIF

You can use a CASE statement or IIF function to compare columns within a row and return the highest value. This method is straightforward but can get cumbersome with…
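The excerpt stops before its code, so the sketch below is not the linked article's. It shows the CASE approach, plus a UNION ALL "unpivot" that mirrors the idea behind the CROSS APPLY technique in the tags. It runs against an in-memory SQLite database through Python's sqlite3 module instead of SQL Server, and the readings table with its a, b, c columns is hypothetical. On SQL Server 2022 itself, the built-in GREATEST(a, b, c) function is also worth a look.

```python
import sqlite3

# Hypothetical table: one row per reading, three numeric columns to compare.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (id INTEGER PRIMARY KEY, a INTEGER, b INTEGER, c INTEGER)")
conn.executemany(
    "INSERT INTO readings (id, a, b, c) VALUES (?, ?, ?, ?)",
    [(1, 3, 9, 4), (2, 7, 2, 7), (3, 1, 5, 8)],
)

# Approach 1: a CASE expression compares the columns within each row.
# Assumes no NULLs; with NULLs some comparisons become unknown and the
# result can be wrong or NULL.
case_sql = """
    SELECT id,
           CASE
               WHEN a >= b AND a >= c THEN a
               WHEN b >= c THEN b
               ELSE c
           END AS max_value
    FROM readings
    ORDER BY id
"""

# Approach 2: turn the columns into rows, then use the ordinary MAX aggregate.
# MAX skips NULLs, and another column is just one more UNION ALL branch.
unpivot_sql = """
    SELECT id, MAX(v) AS max_value
    FROM (
        SELECT id, a AS v FROM readings
        UNION ALL SELECT id, b FROM readings
        UNION ALL SELECT id, c FROM readings
    ) AS vals
    GROUP BY id
    ORDER BY id
"""

case_rows = conn.execute(case_sql).fetchall()
unpivot_rows = conn.execute(unpivot_sql).fetchall()
print(case_rows)     # [(1, 9), (2, 7), (3, 8)]
print(unpivot_rows)  # [(1, 9), (2, 7), (3, 8)]
```

The CASE version grows quickly as columns are added, which is roughly where the excerpt's "cumbersome" warning points. The unpivot version scales more gracefully.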


#comparing multiple columns SQL#SQL CROSS APPLY technique#SQL Server aggregate functions#SQL Server date comparison#SQL Server maximum value

0 notes

Text

i walked into seekL because of a mystery masked man and walked out with pseudo-SQL knowledge i love dating sims

#gameposting#seekL#will i cook fanart....mayhaps...#also i really love odxny he's too likeable i can't fucking get the bad ending#waiting on that play through by the creator#ive been wanting to code i think. idk if i will but ive always wanted to learn html and python but didn't get the push to#who knows haha#12am post-hyperfocus headache ahh post

299 notes


Text

Albert Gonzalez (born 1981) is an American computer hacker, computer criminal and police informer, who is accused of masterminding the combined credit card theft and subsequent reselling of more than 170 million card and ATM numbers from 2005 to 2007, the biggest such fraud in history. Gonzalez and his accomplices used SQL injection to deploy backdoors on several corporate systems in order to launch packet sniffing (specifically, ARP spoofing) attacks which allowed him to steal computer data from internal corporate networks.

Gonzalez bought his first computer when he was 12, and by the time he was 14 managed to hack into NASA. He attended South Miami High School in Miami, Florida, where he was described as the "troubled" pack leader of computer nerds. In 2000, he moved to New York City, where he lived for three months before moving to Kearny, New Jersey.

While in Kearny, he was accused of being the mastermind of a group of hackers called the ShadowCrew group, which trafficked in 1.5 million stolen credit and ATM card numbers. Although considered the mastermind of the scheme (operating on the site under the screen name of "CumbaJohnny"), he was not indicted. According to the indictment, there were 4,000 people who registered with the Shadowcrew.com website. Once registered, they could buy stolen account numbers or counterfeit documents at auction, or read "Tutorials and How-To's" describing the use of cryptography in magnetic strips on credit cards, debit cards and ATM cards so that the numbers could be used. Moderators of the website punished members who did not abide by the site's rules, including providing refunds to buyers if the stolen card numbers proved invalid.

In addition to the card numbers, numerous other objects of identity theft were sold at auction, including counterfeit passports, drivers' licenses, Social Security cards, credit cards, debit cards, birth certificates, college student identification cards, and health insurance cards. One member sold 18 million e-mail accounts with associated usernames, passwords, dates of birth, and other personally identifying information. Most of those indicted were members who actually sold illicit items. Members who maintained or moderated the website itself were also indicted, including one who attempted to register the .cc domain name Shadowcrew.cc.

The Secret Service dubbed their investigation "Operation Firewall" and believed that up to $4.3 million was stolen, as ShadowCrew shared its information with other groups called Carderplanet and Darkprofits. The investigation involved units from the United States, Bulgaria, Belarus, Canada, Poland, Sweden, the Netherlands and Ukraine. Gonzalez was initially charged with possession of 15 fake credit and debit cards in Newark, New Jersey, though he avoided jail time by providing evidence to the United States Secret Service against his cohorts. Nineteen ShadowCrew members were indicted. Gonzalez then returned to Miami.

While cooperating with authorities, he was said to have masterminded the hacking of TJX Companies, in which 45.6 million credit and debit card numbers were stolen over an 18-month period ending in 2007, topping the 2005 breach of 40 million records at CardSystems Solutions. Gonzalez and 10 others sought targets while wardriving and seeking vulnerabilities in wireless networks along U.S. Route 1 in Miami. They compromised cards at BJ's Wholesale Club, DSW, OfficeMax, Boston Market, Barnes & Noble, Sports Authority and T.J. Maxx. The indictment referred to Gonzalez by the screen names "cumbajohny", "201679996", "soupnazi", "segvec", "kingchilli" and "stanozlolz." The hacking was an embarrassment to T.J. Maxx, which discovered the breach in December 2006. The company initially believed the intrusion began in May 2006, but further investigation revealed breaches dating back to July 2005.

Gonzalez had multiple US co-defendants for the Dave & Buster's and TJX thefts. The main ones were charged and sentenced as follows:

Stephen Watt (Unix Terrorist, Jim Jones) was charged with providing a data theft tool in an identity theft case. He was sentenced to two years in prison and three years of supervised release. He was also ordered by the court to pay back $250,000 in restitution.

Damon Patrick Toey pleaded guilty to wire fraud, credit card fraud, and aggravated identity theft and received a five-year sentence.

Christopher Scott pleaded guilty to conspiracy, unauthorized access to computer systems, access device fraud and identity theft. He was sentenced to seven years.

Gonzalez was arrested on May 7, 2008, on charges stemming from hacking into the Dave & Buster's corporate network from a point of sale location at a restaurant in Islandia, New York. The incident occurred in September 2007. About 5,000 card numbers were stolen. Fraudulent transactions totaling $600,000 were reported on 675 of the cards.

Authorities became suspicious after the conspirators kept returning to the restaurant to reintroduce their hack, because it would not restart after the company computers shut down.

Gonzalez was arrested in room 1508 at the National Hotel in Miami Beach, Florida. In various related raids, authorities seized $1.6 million in cash (including $1.1 million buried in plastic bags in a three-foot drum in his parents' backyard), his laptops and a compact Glock pistol. Officials said that, at the time of his arrest, Gonzalez lived in a nondescript house in Miami. He was taken to the Metropolitan Detention Center in Brooklyn, where he was indicted in the Heartland attacks.

In August 2009, Gonzalez was indicted in Newark, New Jersey on charges dealing with hacking into the Heartland Payment Systems, Citibank-branded 7-Eleven ATMs and Hannaford Brothers computer systems. Heartland bore the brunt of the attack, in which 130 million card numbers were stolen. Hannaford had 4.6 million numbers stolen. Two other retailers were not disclosed in the indictment; however, Gonzalez's attorney told StorefrontBacktalk that two of the retailers were J.C. Penney and Target Corporation. Heartland reported that it had lost $12.6 million in the attack including legal fees. Gonzalez allegedly called the scheme "Operation Get Rich or Die Tryin."

According to the indictment, the attacks by Gonzalez and two unidentified hackers "in or near Russia" along with unindicted conspirator "P.T." from Miami, began on December 26, 2007, at Heartland Payment Systems, in August 2007 against 7-Eleven, and in November 2007 against Hannaford Brothers and two other unidentified companies.

Gonzalez and his cohorts targeted large companies and studied their checkout terminals and then attacked the companies from internet-connected computers in New Jersey, Illinois, Latvia, the Netherlands and Ukraine.

They covered their attacks over the Internet using more than one messaging screen name, storing data related to their attacks on multiple Hacking Platforms, disabling programs that logged inbound and outbound traffic over the Hacking Platforms, and disguising, through the use of proxies, the Internet Protocol addresses from which their attacks originated. The indictment said the hackers tested their program against 20 antivirus programs.

Rene Palomino Jr., attorney for Gonzalez, charged in a blog on The New York Times website that the indictment arose out of squabbling among U.S. Attorney offices in New York, Massachusetts and New Jersey. Palomino said that Gonzalez was in negotiations with New York and Massachusetts for a plea deal in connection with the T.J. Maxx case when New Jersey made its indictment. Palomino identified the unindicted conspirator "P.T." as Damon Patrick Toey, who had pleaded guilty in the T.J. Maxx case. Palomino said Toey, rather than Gonzalez, was the ringleader of the Heartland case. Palomino further said, "Mr. Toey has been cooperating since Day One. He was staying at (Gonzalez's) apartment. This whole creation was Mr. Toey's idea... It was his baby. This was not Albert Gonzalez. I know for a fact that he wasn't involved in all of the chains that were hacked from New Jersey."

Palomino said one of the unnamed Russian hackers in the Heartland case was Maksym Yastremskiy, who was also indicted in the T.J. Maxx incident but is now serving 30 years in a Turkish prison on a charge of hacking Turkish banks in a separate matter. Investigators said Yastremskiy and Gonzalez exchanged 600 messages and that Gonzalez paid him $400,000 through e-gold.

Yastremskiy was arrested in July 2007 in Turkey on charges of hacking into 12 banks in Turkey. The Secret Service investigation into him was used to build the case against Gonzalez including a sneak and peek covert review of Yastremskiy's laptop in Dubai in 2006 and a review of the disk image of the Latvia computer leased from Cronos IT and alleged to have been used in the attacks.

After the indictment, Heartland issued a statement saying that it does not know how many card numbers were stolen from the company nor how the U.S. government reached the 130 million number.

Gonzalez (inmate number: 25702-050) served his 20-year sentence at the FMC Lexington, a medical facility. He was released on September 19, 2023.

22 notes


Note

i took a class focused on databases and (despite hating some of the assignments) actually really loved working with sql; so im really enjoying seekL! :D its making me want to do more with sql now hehe

untapped genre for education dating sims

75 notes


Text

SQL Fundamentals #1: SQL Data Definition

Last year in college, I had the opportunity to dive deep into SQL. The course was made even more exciting by an amazing instructor. Fast forward to today, and I regularly use SQL in my backend development work with PHP. Today, I felt the need to refresh my SQL knowledge a bit, and that's why I've put together three posts aimed at helping beginners grasp the fundamentals of SQL.

Understanding Relational Databases

Let's Begin with the Basics: What Is a Database?

Simply put, a database is like a digital warehouse where you store large amounts of data. When you work on projects that involve data, you need a place to keep that data organized and accessible, and that's where databases come into play.

Exploring Different Types of Databases

When it comes to databases, there are two primary types to consider: relational and non-relational.

Relational Databases: Structured Like Tables

Think of a relational database as a collection of neatly organized tables, somewhat like rows and columns in an Excel spreadsheet. Each table represents a specific type of information, and these tables are interconnected through shared attributes. It's similar to a well-organized library catalog where you can find books by author, title, or genre.

Key Points:

Tables with rows and columns.

Data is neatly structured, much like a library catalog.

You use Structured Query Language (SQL) to interact with it.

Ideal for handling structured data with complex relationships.

Non-Relational Databases: Flexibility in Containers

Now, imagine a non-relational database as a collection of flexible containers, more like bins or boxes. Each container holds data, but they don't have to adhere to a fixed format. It's like managing a diverse collection of items in various boxes without strict rules. This flexibility is incredibly useful when dealing with unstructured or rapidly changing data, like social media posts or sensor readings.

Key Points:

Data can be stored in diverse formats.

There's no rigid structure; adaptability is the name of the game.

They are often called NoSQL databases.

Ideal for handling unstructured or dynamic data.

Now, Let's Dive into SQL:

SQL includes several sub-languages:

Data Definition Language (DDL), which is what today's post is all about

Data Manipulation Language (DML)

Data Query Language (DQL)

Task: Building and Interacting with a Bookstore Database

Setting Up the Database

Our first step in creating a bookstore database is to establish it. You can achieve this with a straightforward SQL command:

CREATE DATABASE bookstoreDB;

SQL Data Definition

As the name suggests, this step is all about defining your tables. By the end of this phase, your database and the tables within it are created and ready for action.

1 - Introducing the 'Books' Table

A bookstore is all about its collection of books, so our 'bookstoreDB' needs a place to store them. We'll call this place the 'books' table. Here's how you create it:

CREATE TABLE books (
    -- Don't worry, we'll fill this in soon!
);

Now, each book has its own set of unique details, including titles, authors, genres, publication years, and prices. These details will become the columns in our 'books' table, ensuring that every book can be fully described.

Now that we have the plan, let's create our 'books' table with all these attributes:

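CREATE TABLE books (
    title VARCHAR(40),
    author VARCHAR(40),
    genre VARCHAR(40),
    publishedYear INT,      -- just the year, e.g. 1999; a DATE column would need a full date
    price DECIMAL(10, 2)    -- exact decimal for money; an INT column would drop the cents
);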

With this structure in place, our bookstore database is ready to house a world of books.
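You can try the table definition without installing a server. Here is a minimal sketch using Python's built-in sqlite3 module with an in-memory database. That engine is an assumption, since the post doesn't say which one it uses. SQLite has no CREATE DATABASE statement (a database is just a file, or here a block of memory), so the sketch starts at CREATE TABLE. It stores the year as an INTEGER and the price as DECIMAL(10, 2), and the sample book row is only illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway database that lives only in memory

conn.execute("""
    CREATE TABLE books (
        title         VARCHAR(40),
        author        VARCHAR(40),
        genre         VARCHAR(40),
        publishedYear INTEGER,
        price         DECIMAL(10, 2)
    )
""")

# PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
columns = [row[1] for row in conn.execute("PRAGMA table_info(books)")]
print(columns)  # ['title', 'author', 'genre', 'publishedYear', 'price']

# Placeholders (?) keep the values separate from the SQL text.
conn.execute(
    "INSERT INTO books (title, author, genre, publishedYear, price) VALUES (?, ?, ?, ?, ?)",
    ("Dune", "Frank Herbert", "Science Fiction", 1965, 9.99),
)
count = conn.execute("SELECT COUNT(*) FROM books").fetchone()[0]
print(count)  # 1
```

SQLite does not enforce the VARCHAR(40) length limit, so this checks the table's shape rather than its constraints. A stricter engine such as PostgreSQL would reject a longer title.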

2 - Making Changes to the Table

Sometimes, you might need to modify a table you've created in your database. Whether it's correcting an error during table creation, renaming the table, or adding/removing columns, these changes are made using the 'ALTER TABLE' command.

For instance, if you want to rename your 'books' table:

ALTER TABLE books RENAME TO books_table;

If you want to add a new column:

ALTER TABLE books ADD COLUMN description VARCHAR(100);

Or, if you need to delete a column:

ALTER TABLE books DROP COLUMN title;
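There is one catch if you run these three statements one after another. After the rename, the table is called books_table, so the later statements must use that name or the engine will report that books no longer exists. The sketch below runs the sequence against an in-memory SQLite database through Python's sqlite3 module (an assumed engine, with a simplified two-column starting table). DROP COLUMN needs SQLite 3.35.0 or newer, so that step is guarded by a version check.

```python
import sqlite3

def column_names(conn, table):
    # table is a fixed, trusted name here; never interpolate user input into SQL.
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE books (title VARCHAR(40), author VARCHAR(40))")

conn.execute("ALTER TABLE books RENAME TO books_table")
# From here on the old name is gone, so every statement targets books_table.
conn.execute("ALTER TABLE books_table ADD COLUMN description VARCHAR(100)")

# ALTER TABLE ... DROP COLUMN arrived in SQLite 3.35.0.
if sqlite3.sqlite_version_info >= (3, 35, 0):
    conn.execute("ALTER TABLE books_table DROP COLUMN title")

print(column_names(conn, "books_table"))
```

On SQLite 3.35.0 or newer this prints ['author', 'description'].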

3 - Dropping the Table

Finally, if you ever want to remove a table you've created in your database, you can do so using the 'DROP TABLE' command:

DROP TABLE books;
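Running DROP TABLE on a table that no longer exists is an error. That matters in setup scripts you run more than once. Many engines, SQLite included, accept DROP TABLE IF EXISTS instead. Below is a minimal sketch with Python's sqlite3 module. The table_exists helper is hypothetical and reads SQLite's own sqlite_master catalog, so it won't carry over to other engines as-is.

```python
import sqlite3

def table_exists(conn, name):
    # sqlite_master is SQLite's catalog of tables, indexes, views and triggers.
    row = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (name,)
    ).fetchone()
    return row is not None

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE books (title VARCHAR(40))")
print(table_exists(conn, "books"))  # True

conn.execute("DROP TABLE books")  # removes the table and every row in it
print(table_exists(conn, "books"))  # False

second_drop_failed = False
try:
    conn.execute("DROP TABLE books")  # a second plain DROP fails
except sqlite3.OperationalError:
    second_drop_failed = True

conn.execute("DROP TABLE IF EXISTS books")  # safe to repeat
```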

To keep this post concise, our next post will delve into the second step, which involves data manipulation. Once our bookstore database is up and running with its tables, we'll explore how to modify and enrich it with new information and data. Stay tuned ...

Part2

#code#codeblr#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#learn to code#sql#sqlserver#sql course#data#datascience#backend

112 notes


Text

December 31st, 2024

Going to start 2025 programming, so if you need me, I'll be lost in a sea of coffee, bugs, and late-night breakthroughs.

Let’s see where this year takes us. Wishing all of you a beautiful 2025.

Today's been a busy day; life is starting to get back to normal, so I'm back to developing a schedule and hopefully sticking to it.

What I did today:

❄ finally finished planning out my portfolio so I can start coding right away in 2025

❄ lots of scrapbooking

❄ found some projects to do on python

❄ reviewed SQL

❄ started adding to my resume so I can keep it up to date

To-do Jan 1st:

🎆 start programming my portfolio again

🎆 five LeetCode questions

🎆 check out some free resources people recommended to me

🎆 some GMAT review

🎆 2 hours of Azure studying

Playlist for the day:

youtube

#academic assignments#academic disaster#academic burnout#academic validation#academic romance#academic victim#study aesthetic#study blog#academia aesthetic#study hard#new years resolution#new years 2025#it girl#pinterest girl#academia moodboard#chaotic academia#autumn academia#classic academia#dark academia#desi academia#light academia#studyblr#codeblr#python#Youtube

14 notes


Text

webdev log 2

implemented a gallery. I originally wanted it to be more grid-like but I decided I didn't want to mess too much with that, and I like the simple look anyways. forces you to really take in every shitty drawing.

it features a search function that only works for tags. its purpose is mostly just to search multiple tags, because I couldn't be fucked to add a feature where you could click on multiple tags there at the tags list at the top. it lists out all used tags in the table that stores art so you have an idea of what there all is.

at the bottom there's pagination. it's INSANELY easy to do with this framework I'm using. I was gushing about it to my partner on call!! they made fun of me but that's okay!!!!

anyways, clicking on the date underneath the drawing takes you to a view with the image itself (a kind of "post", if I can call it that) here you can view comments and leave one yourself if you so desire. guests are NOT allowed to reply to existing comments because I'd rather things not get too clogged up. I can't stop anyone if they did an "@{name} {message}" type comment, but I don't think anyone is gonna be chatting it up on my site, so idc. I just want it very minimal, and no nesting beyond one single reply.

of course, you can comment on story chapters too so here's what it looks like for a user (me). of course, if a user (me) posts then it gets automatically approved.

the table that stores comments differentiates story comments and art comments with foreign keys to the primary keys of the chapter and art tables. it's a little convoluted and I kind of wish I didn't do it this way but it's too damn late, isn't it. but honestly it might've been the only way to do it. the problem is just repeating code for both chapter and art views.. making a change to one means I gotta manually make the same change to the other. huge pain..
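The log describes one common way to keep a single comments table for both kinds of post: two nullable foreign keys plus a CHECK constraint, so each comment belongs to exactly one parent. The sketch below uses SQLite through Python's sqlite3 module rather than the site's real database. Every table, column and helper name in it (chapters, art, comments, approved, approved_comments) is hypothetical. The shared helper takes the kind of parent as an argument, which is one way to avoid writing the same query twice for the chapter and art views.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default

conn.executescript("""
    CREATE TABLE chapters (id INTEGER PRIMARY KEY, title TEXT NOT NULL);
    CREATE TABLE art (id INTEGER PRIMARY KEY, caption TEXT);

    CREATE TABLE comments (
        id         INTEGER PRIMARY KEY,
        chapter_id INTEGER REFERENCES chapters(id) ON DELETE CASCADE,
        art_id     INTEGER REFERENCES art(id) ON DELETE CASCADE,
        body       TEXT NOT NULL,
        approved   INTEGER NOT NULL DEFAULT 0,
        -- exactly one parent: one key is NULL and the other is not
        CHECK ((chapter_id IS NULL) <> (art_id IS NULL))
    );

    INSERT INTO chapters (id, title) VALUES (1, 'Chapter One');
    INSERT INTO art (id, caption) VALUES (1, 'sketch');
    INSERT INTO comments (chapter_id, body, approved) VALUES (1, 'loved this chapter', 1);
    INSERT INTO comments (art_id, body, approved) VALUES (1, 'nice colors', 1);
    INSERT INTO comments (art_id, body) VALUES (1, 'waiting for approval');
""")

PARENT_COLUMNS = {"chapter": "chapter_id", "art": "art_id"}

def approved_comments(conn, kind, parent_id):
    """Shared query for both views; kind is 'chapter' or 'art'."""
    column = PARENT_COLUMNS[kind]  # whitelist lookup, so no user text reaches the SQL
    sql = f"SELECT body FROM comments WHERE {column} = ? AND approved = 1 ORDER BY id"
    return [row[0] for row in conn.execute(sql, (parent_id,))]

print(approved_comments(conn, "chapter", 1))  # ['loved this chapter']
print(approved_comments(conn, "art", 1))      # ['nice colors']

# A comment attached to both parents (or neither) violates the CHECK.
try:
    conn.execute("INSERT INTO comments (chapter_id, art_id, body) VALUES (1, 1, 'both')")
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)
```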

added user authentication and a really shitty bare bones dashboard for myself to approve/reject comments directly on the site in case someone comes along and wants to be mean to me :( rejecting a comment deletes it OFF my site forever. though I kind of want to be able to keep hate mail so I dunno.. oh, and also a big fat logout button because I have nowhere else to put it.

I'll spare everyone the more technical ramblings.

anyways, I'm hoping to add more things later. these are my plans:

allow users (me) to post stories/art through the site itself instead of doing it manually in the vscode terminal for every. single. story. and drawing. (probably took me 6+ hours total just doing this. I don't know why I did it.) (btw this consists of writing commands to store information via the terminal. also, typing text straight into raw sql commands like that doesn't carry over things like markup or even line breaks. I had to alter all my stories and put \n every time there was a line break... and you have to escape apostrophes (or quotes, depending on which you use) so every "it's" had to be made into "it\'s" HUGE. PAIN. I didn't do this manually obviously but sifting and plugging my stories into character replacers was so time consuming)

delete comments button.... For my eyes and fingers only

make an About page. I've been avoiding all the fun things and doing just the scary stuff

figure out SSH stuff...

clean up the shitty css. I refuse to use tailwind even tho it's trying to force me.. I don't want some sleek polished site I want it to look like it's in shambles, because it is
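This relates to the escaping pain in the first plan above. When text goes in through a parameterized query instead of being typed into a SQL string, the driver sends it as a separate value. Line breaks, apostrophes and quotes are then stored exactly as written. Laravel's query builder uses PDO parameter binding in the same way. Below is a minimal sketch with Python's sqlite3 module; the chapters table and the sample text are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE chapters (id INTEGER PRIMARY KEY, body TEXT NOT NULL)")

story = "It's late.\n\nShe said, \"don't go.\""

# The ? placeholder passes the text as a bound value: no manual \n or \' needed.
conn.execute("INSERT INTO chapters (body) VALUES (?)", (story,))
stored = conn.execute("SELECT body FROM chapters WHERE id = 1").fetchone()[0]

print(stored == story)     # True
print(stored.count("\n"))  # 2

# If you do type a literal by hand, standard SQL escapes an apostrophe by doubling it.
literal = conn.execute("SELECT 'it''s'").fetchone()[0]
print(literal)  # it's
```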

but yeah thanks for reading about my webdev and coding journey. even though using the laravel framework made things a thousand times easier it's still a crazy amount of work. let's say building a site completely from scratch means buying every material and designing the house yourself, and using a website builder like wix is just like buying a pre built home and you're just decorating it. using this framework is like putting together a build-your-own-house kit. you're still building a fucking house.

I feel crazy. it felt like the site was close to breaking several times. been sleep deprived for several days working on this nonstop I think I'm getting a little sick 😵💫

going to bed now. it's 9 am.

6 notes


Text


Hey there! 🚀 Becoming a data analyst is an awesome journey! Here’s a roadmap for you:

1. Start with the Basics 📚:

- Dive into the basics of data analysis and statistics. 📊

- Platforms like Learnbay (Data Analytics Certification Program For Non-Tech Professionals), Edx, and Intellipaat offer fantastic courses. Check them out! 🎓

2. Master Excel 📈:

- Excel is your best friend! Learn to crunch numbers and create killer spreadsheets. 📊🔢

3. Get Hands-on with Tools 🛠️:

- Familiarize yourself with data analysis tools like SQL, Python, and R. Pluralsight has some great courses to level up your skills! 🐍📊

4. Data Visualization 📊:

- Learn to tell a story with your data. Tools like Tableau and Power BI can be game-changers! 📈📉

5. Build a Solid Foundation 🏗️:

- Understand databases, data cleaning, and data wrangling. It’s the backbone of effective analysis! 💪🔍

6. Machine Learning Basics 🤖:

- Get a taste of machine learning concepts. It’s not mandatory but can be a huge plus! 🤓🤖

7. Projects, Projects, Projects! 🚀:

- Apply your skills to real-world projects. It’s the best way to learn and showcase your abilities! 🌐💻

8. Networking is Key 👥:

- Connect with fellow data enthusiasts on LinkedIn, attend meetups, and join relevant communities. Networking opens doors! 🌐👋

9. Certifications 📜:

- Consider getting certified. It adds credibility to your profile. 🎓💼

10. Stay Updated 🔄:

- The data world evolves fast. Keep learning and stay up-to-date with the latest trends and technologies. 📆🚀

. . .

#programming#programmers#developers#mobiledeveloper#softwaredeveloper#devlife#coding.#setup#icelatte#iceamericano#data analyst road map#data scientist#data#big data#data engineer#data management#machinelearning#technology#data analytics#Instagram

8 notes


Text

some of the tables I deal with have a bunch of columns named "user_defined_field_1" or named with weird abbreviations, and our sql table index does not elaborate upon the purposes of most columns. so I have to filter mystery columns by their values and hope my pattern recognition is good enough to figure out what the hell something means.

I've gotten pretty good at this. the most fucked thing I ever figured out was in this table that logged histories for serials. it has a "source" column where "R" labels receipts, "O" labels order-related records and then there's a third letter "I" which signifies "anything else that could possibly happen".

by linking various values to other tables, source "I" lines can be better identified, but once when I was doing this I tried to cast a bunch of bullshit int dates/times to datetime format and my query errored. it turned out a small number of times were not valid time values. which made no sense because that column was explicitly labeled as the creation time. I tried a couple of weird time format converters hoping to turn those values into ordinary times but nothing generated a result that made sense.

anyway literally next day I was working on something totally unrelated and noticed that the ID numbers in one table were the same length as INT dates. then I was like, how fucked would it be if those bad time values were not times at all, but actually ID numbers that joined to another table. maybe they could even join to the table I was looking at right then!

well guess what.
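The post doesn't show its queries, but the trick can be sketched in Python: instead of letting one bad row error out the whole cast, find the values that refuse to convert. The YYYYMMDD and HHMMSS integer layouts below are assumptions, since the post doesn't say how its int dates and times are encoded. The sample values are invented. Values that come back None are the ones worth trying as join keys against other tables.

```python
from datetime import date, time

def int_to_date(value):
    """Read an int such as 20240315 as YYYYMMDD; return None if it isn't a real date."""
    year, rest = divmod(value, 10000)
    month, day = divmod(rest, 100)
    try:
        return date(year, month, day)
    except ValueError:  # e.g. month 13, day 32, or year 0
        return None

def int_to_time(value):
    """Read an int such as 93015 as HHMMSS (09:30:15); return None if it isn't a real time."""
    if not 0 <= value <= 235959:  # out of range for HHMMSS
        return None
    hours, rest = divmod(value, 10000)
    minutes, seconds = divmod(rest, 100)
    try:
        return time(hours, minutes, seconds)
    except ValueError:  # e.g. minute 60
        return None

created_times = [93015, 141500, 1234567, 235959, 126000]
suspects = [v for v in created_times if int_to_time(v) is None]
print(suspects)  # [1234567, 126000]
print(int_to_date(20240315))  # 2024-03-15
```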

49 notes


Text

Symfony Clickjacking Prevention Guide

Clickjacking is a deceptive technique where attackers trick users into clicking on hidden elements, potentially leading to unauthorized actions. As a Symfony developer, it's crucial to implement measures to prevent such vulnerabilities.

🔍 Understanding Clickjacking

Clickjacking typically works by loading the legitimate page in a transparent iframe layered over an attacker's decoy page. Users who think they are clicking the visible content are actually interacting with the hidden page. This can lead to unauthorized actions, such as changing account settings or initiating transactions.

🛠️ Implementing X-Frame-Options in Symfony

The X-Frame-Options HTTP header is a primary defense against clickjacking. It controls whether a browser should be allowed to render a page in a <frame>, <iframe>, <embed>, or <object> tag.

Method 1: Using an Event Subscriber

Create an event subscriber to add the X-Frame-Options header to all responses:

<?php
// src/EventSubscriber/ClickjackingProtectionSubscriber.php
namespace App\EventSubscriber;

use Symfony\Component\EventDispatcher\EventSubscriberInterface;
use Symfony\Component\HttpKernel\Event\ResponseEvent;
use Symfony\Component\HttpKernel\KernelEvents;

class ClickjackingProtectionSubscriber implements EventSubscriberInterface
{
    public static function getSubscribedEvents(): array
    {
        // Run onKernelResponse() for every response the kernel sends
        return [
            KernelEvents::RESPONSE => 'onKernelResponse',
        ];
    }

    public function onKernelResponse(ResponseEvent $event): void
    {
        // DENY: no site, including this one, may frame the response
        $event->getResponse()->headers->set('X-Frame-Options', 'DENY');
    }
}

This approach ensures that all responses include the X-Frame-Options header, preventing the page from being embedded in frames or iframes.

Method 2: Using NelmioSecurityBundle

The NelmioSecurityBundle provides additional security features for Symfony applications, including clickjacking protection.

Install the bundle:

composer require nelmio/security-bundle

Configure the bundle in config/packages/nelmio_security.yaml:

nelmio_security:
    clickjacking:
        paths:
            '^/.*': DENY

This configuration adds the X-Frame-Options: DENY header to all responses, preventing the site from being embedded in frames or iframes.

🧪 Testing Your Application

To ensure your application is protected against clickjacking, use our Website Vulnerability Scanner. This tool scans your website for common vulnerabilities, including missing or misconfigured X-Frame-Options headers.


After scanning for a Website Security check, you'll receive a detailed report highlighting any security issues.

🔒 Enhancing Security with Content Security Policy (CSP)

While X-Frame-Options is effective, modern browsers support the more flexible Content-Security-Policy (CSP) header, which provides granular control over framing.

Add the following header to your responses:

$response->headers->set('Content-Security-Policy', "frame-ancestors 'none';");

This directive prevents any domain from embedding your content, offering robust protection against clickjacking.
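A rough Python helper like the one below can classify headers you've already captured, for example from a functional test or curl -I. It's a sketch under simplifying assumptions. The name framing_policy is hypothetical, and headers arrive as a plain dict with one value per name. It models the rule that browsers supporting CSP ignore X-Frame-Options when an enforced frame-ancestors directive is present. It does not replace a real scanner.

```python
def framing_policy(headers):
    """Classify how a response restricts framing: 'deny', 'same-origin',
    'allow-listed', or 'unprotected'. Rough sketch, not a full CSP parser."""
    lower = {name.lower(): value for name, value in headers.items()}

    # An enforced CSP frame-ancestors directive takes precedence over X-Frame-Options.
    for directive in lower.get("content-security-policy", "").split(";"):
        parts = directive.split()
        if parts and parts[0].lower() == "frame-ancestors":
            sources = [source.lower() for source in parts[1:]]
            if not sources or sources == ["'none'"]:  # an empty list is treated like 'none'
                return "deny"
            if sources == ["'self'"]:
                return "same-origin"
            return "allow-listed"  # specific origins may frame the page

    xfo = lower.get("x-frame-options", "").strip().upper()
    if xfo == "DENY":
        return "deny"
    if xfo == "SAMEORIGIN":
        return "same-origin"
    return "unprotected"

print(framing_policy({"X-Frame-Options": "DENY"}))                            # deny
print(framing_policy({"Content-Security-Policy": "frame-ancestors 'none';"}))  # deny
print(framing_policy({"Content-Type": "text/html"}))                          # unprotected
```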

🧰 Additional Security Measures

CSRF Protection: Ensure that all forms include CSRF tokens to prevent cross-site request forgery attacks.

Regular Updates: Keep Symfony and all dependencies up to date to patch known vulnerabilities.

Security Audits: Conduct regular security audits to identify and address potential vulnerabilities.

📢 Explore More on Our Blog

For more insights into securing your Symfony applications, visit our Pentest Testing Blog. We cover a range of topics, including:

Preventing clickjacking in Laravel

Securing API endpoints

Mitigating SQL injection attacks

🛡️ Our Web Application Penetration Testing Services

Looking for a comprehensive security assessment? Our Web Application Penetration Testing Services offer:

Manual Testing: In-depth analysis by security experts.

Affordable Pricing: Services starting at $25/hr.

Detailed Reports: Actionable insights with remediation steps.

Contact us today for a free consultation and enhance your application's security posture.

3 notes


Text

I'm very good at "professionalism" I was trained from a young age. If I get an interview, I'm getting the job. I sit upright in my chair and wear a collared shirt and my employer thinks, "wow! She has a lot of passion for this role!" Buddy, you don't know the start of it. You don't even know my gender.

I'm OSHA certified. I got my 24-hour GD&T training. They can see this. What they don't see is me waxing poetical about surface finish or some shit on this website. When I was in 6th grade, I was exposed to Autodesk Inventor and it changed me fundamentally as a person. Whenever I look at any consumer good (of which there are a lot) I have to consider how it was made. And where the materials came from and how it got here and really the whole ass process. It's fascinating to me in a way that can be described as "intense". I love looking at large machines and thinking about them and taking pictures of them. There are so many steps and machines and people involved to create anything around you. I think if any person truly understood everything that happened in a single factory they would go insane with the knowledge. But by god am I trying. My uncle works specifically on the printers that print dates onto food. There are hundreds or even thousands of hyperspecific jobs like that everywhere. My employer looks away and I'm creating an unholy abomination of R and HTML, and I'm downloading more libraries so I can change the default CSS colors. I don't know anything about programming but with the power of stack overflow and sheer determination I'm making it happen. Is it very useful? No. But I'm learning a lot and more importantly I don't give a fuck. I'm learning about PLCs. I'm learning about CNC machines. I'm fucking with my laptop. I'm deleting SQL databases. I'm finding electromechanical pinball machines on facebook marketplace. I'm writing G-code by hand. I'm a freight train with no brakes. I'm moving and I'm moving fast. And buddy, you better hope I'm moving in the right direction. I must be, because all of my former employers give me stellar reviews when used as a reference. I'm winning at "career" and also in life.

14 notes


Text

The Great Data Cleanup: A Database Design Adventure

As a budding database engineer, I found myself in a situation that was both daunting and hilarious. Our company's application was running slower than a turtle in peanut butter, and no one could figure out why. That is, until I decided to take a closer look at the database design.

It all began when my boss, a stern woman with a penchant for dramatic entrances, stormed into my cubicle. "Listen up, rookie," she barked (despite the fact that I was quite experienced by this point). "The marketing team is in an uproar over the app's performance. Think you can sort this mess out?"

Challenge accepted! I cracked my knuckles, took a deep breath, and dove headfirst into the database, ready to untangle the digital spaghetti.

The schema was a sight to behold—if you were a fan of chaos, that is. Tables were crammed with redundant data, and the relationships between them made as much sense as a platypus in a tuxedo.

"Okay," I told myself, "time to unleash the power of database normalization."

First, I identified the main entities—clients, transactions, products, and so forth. Then, I dissected each entity into its basic components, ruthlessly eliminating any unnecessary duplication.

For example, the original "clients" table was a hot mess. It had fields for the client's name, address, phone number, and email, but it also inexplicably included fields for the account manager's name and contact information. Data redundancy alert!

So, I created a new "account_managers" table to store all that information, and linked the clients back to their account managers using a foreign key. Boom! Normalized.

Next, I tackled the transactions table. It was a jumble of product details, shipping info, and payment data. I split it into three distinct tables: one for the transaction header (which kept the shipping and payment details), one for the line items, and one for the products.

"This is starting to look promising," I thought, giving myself an imaginary high-five.

After several more rounds of table splitting and relationship building, the database was looking sleek, streamlined, and ready for action. I couldn't wait to see the results.

Sure enough, the next day, when the marketing team tested the app, it was like night and day. The pages loaded in a flash, and the users were practically singing my praises (okay, maybe not singing, but definitely less cranky).

My boss, who was not one for effusive praise, gave me a rare smile and said, "Good job, rookie. I knew you had it in you."

From that day forward, I became the go-to person for all things database-related. And you know what? I actually enjoyed the challenge. It's like solving a complex puzzle, but with a lot more coffee and SQL.

So, if you ever find yourself dealing with a sluggish app and a tangled database, don't panic. Grab a strong cup of coffee, roll up your sleeves, and dive into the normalization process. Trust me, your users (and your boss) will be eternally grateful.

Step-by-Step Guide to Database Normalization

Here's the step-by-step process I used to normalize the database and resolve the performance issues. I sketched the schema in an online database design tool along the way. Here's what I did:

Original Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerName varchar

AccountManagerPhone varchar
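To see the redundancy concretely, here is a minimal sketch using Python's built-in sqlite3 module as a stand-in database (the post doesn't say which engine the company used). The VARCHAR lengths and sample rows are hypothetical. Two clients share one account manager, so her phone number is stored twice, and updating only one copy leaves the table contradicting itself, a classic update anomaly.

```python
import sqlite3

# In-memory SQLite database; columns mirror the original Clients table.
# VARCHAR lengths are assumptions (the post only says "varchar").
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Clients (
        ClientID            INTEGER PRIMARY KEY,
        ClientName          VARCHAR(100),
        ClientAddress       VARCHAR(200),
        ClientPhone         VARCHAR(20),
        ClientEmail         VARCHAR(100),
        AccountManagerName  VARCHAR(100),
        AccountManagerPhone VARCHAR(20)
    )
""")

# Hypothetical rows: both clients share the same account manager,
# so her name and phone number are duplicated.
conn.executemany(
    "INSERT INTO Clients VALUES (?, ?, ?, ?, ?, ?, ?)",
    [
        (1, "Acme Corp", "1 Main St", "555-0100", "ops@acme.example", "Dana Lee", "555-0199"),
        (2, "Globex", "9 Elm St", "555-0200", "it@globex.example", "Dana Lee", "555-0199"),
    ],
)

# Update anomaly: her number changes, but only one row gets updated.
conn.execute("UPDATE Clients SET AccountManagerPhone = '555-0123' WHERE ClientID = 1")

# The same manager now has two different phone numbers on record.
phones = conn.execute("""
    SELECT DISTINCT AccountManagerPhone
    FROM Clients
    WHERE AccountManagerName = 'Dana Lee'
    ORDER BY AccountManagerPhone
""").fetchall()
print(phones)
```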

Step 1: Move the account manager information into a new table:

AccountManagers Table:

AccountManagerID int

AccountManagerName varchar

AccountManagerPhone varchar

Updated Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerID int
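Step 1 can be sketched as a migration, again in SQLite through Python's sqlite3 module, with hypothetical sample data and column lengths. The OldClients table name is an assumption for illustration. The sketch copies each distinct manager into AccountManagers once, rebuilds Clients with an AccountManagerID foreign key, and then shows that a phone-number change now touches a single row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is on

# Hypothetical starting point: the original, denormalized table.
conn.execute("""
    CREATE TABLE OldClients (
        ClientID INTEGER PRIMARY KEY, ClientName VARCHAR(100), ClientAddress VARCHAR(200),
        ClientPhone VARCHAR(20), ClientEmail VARCHAR(100),
        AccountManagerName VARCHAR(100), AccountManagerPhone VARCHAR(20)
    )
""")
conn.executemany(
    "INSERT INTO OldClients VALUES (?, ?, ?, ?, ?, ?, ?)",
    [
        (1, "Acme Corp", "1 Main St", "555-0100", "ops@acme.example", "Dana Lee", "555-0199"),
        (2, "Globex", "9 Elm St", "555-0200", "it@globex.example", "Dana Lee", "555-0199"),
        (3, "Initech", "4 Oak Ave", "555-0300", "pm@initech.example", "Sam Ortiz", "555-0188"),
    ],
)

# Step 1: account managers get their own table...
conn.execute("""
    CREATE TABLE AccountManagers (
        AccountManagerID    INTEGER PRIMARY KEY,
        AccountManagerName  VARCHAR(100),
        AccountManagerPhone VARCHAR(20)
    )
""")
# ...and each client points at its manager through a foreign key.
conn.execute("""
    CREATE TABLE Clients (
        ClientID         INTEGER PRIMARY KEY,
        ClientName       VARCHAR(100),
        ClientAddress    VARCHAR(200),
        ClientPhone      VARCHAR(20),
        ClientEmail      VARCHAR(100),
        AccountManagerID INTEGER REFERENCES AccountManagers(AccountManagerID)
    )
""")

# Copy each distinct manager once; SQLite assigns AccountManagerID automatically.
conn.execute("""
    INSERT INTO AccountManagers (AccountManagerName, AccountManagerPhone)
    SELECT DISTINCT AccountManagerName, AccountManagerPhone FROM OldClients
""")
# Copy the clients, looking up each one's new manager ID.
conn.execute("""
    INSERT INTO Clients
    SELECT c.ClientID, c.ClientName, c.ClientAddress, c.ClientPhone, c.ClientEmail,
           m.AccountManagerID
    FROM OldClients AS c
    JOIN AccountManagers AS m
      ON m.AccountManagerName = c.AccountManagerName
     AND m.AccountManagerPhone = c.AccountManagerPhone
""")

# The manager's phone number now lives in exactly one row.
conn.execute(
    "UPDATE AccountManagers SET AccountManagerPhone = '555-0123' WHERE AccountManagerName = 'Dana Lee'"
)
manager_count = conn.execute("SELECT COUNT(*) FROM AccountManagers").fetchone()[0]
dana_clients = conn.execute("""
    SELECT c.ClientName, m.AccountManagerPhone
    FROM Clients AS c JOIN AccountManagers AS m USING (AccountManagerID)
    WHERE m.AccountManagerName = 'Dana Lee'
    ORDER BY c.ClientName
""").fetchall()
print(manager_count, dana_clients)
```

Matching on name plus phone is a simplification; if the same manager had been typed two different ways in the old table, that data would need cleanup before the migration.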

Step 2: Separate the Transactions information into a new table:

Transactions Table:

TransactionID int

ClientID int

TransactionDate date

ShippingAddress varchar

ShippingPhone varchar

PaymentMethod varchar

PaymentDetails varchar
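With a foreign key from Transactions to Clients declared, the database itself can refuse orphaned rows. Here is a minimal SQLite sketch with the Clients table trimmed to what the example needs; sample values and column lengths are hypothetical. SQLite only enforces foreign keys once PRAGMA foreign_keys = ON is set on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")

# Minimal Clients table so the foreign key has something to reference.
conn.execute("CREATE TABLE Clients (ClientID INTEGER PRIMARY KEY, ClientName VARCHAR(100))")
conn.execute("""
    CREATE TABLE Transactions (
        TransactionID   INTEGER PRIMARY KEY,
        ClientID        INTEGER NOT NULL REFERENCES Clients(ClientID),
        TransactionDate DATE,
        ShippingAddress VARCHAR(200),
        ShippingPhone   VARCHAR(20),
        PaymentMethod   VARCHAR(30),
        PaymentDetails  VARCHAR(200)
    )
""")
conn.execute("INSERT INTO Clients VALUES (1, 'Acme Corp')")
conn.execute(
    "INSERT INTO Transactions VALUES "
    "(100, 1, '2024-05-01', '1 Main St', '555-0100', 'invoice', 'net 30')"
)

# A transaction for client 99, who doesn't exist, is rejected by the database.
try:
    conn.execute(
        "INSERT INTO Transactions VALUES "
        "(101, 99, '2024-05-02', '9 Elm St', '555-0200', 'invoice', 'net 30')"
    )
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
print(orphan_rejected)
```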

Step 3: Separate the Transaction Line Items into a new table:

TransactionLineItems Table:

LineItemID int

TransactionID int

ProductID int

Quantity int

UnitPrice decimal
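Splitting line items out of the transaction header lets one transaction hold any number of lines, with totals computed by an aggregate rather than stored. A sketch in SQLite with hypothetical rows; DECIMAL(10, 2) is an assumed precision, and ProductID is left without a foreign key here because the Products table only arrives in Step 4.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Transactions (TransactionID INTEGER PRIMARY KEY, ClientID INTEGER)")
conn.execute("""
    CREATE TABLE TransactionLineItems (
        LineItemID    INTEGER PRIMARY KEY,
        TransactionID INTEGER NOT NULL REFERENCES Transactions(TransactionID),
        ProductID     INTEGER NOT NULL,
        Quantity      INTEGER NOT NULL,
        UnitPrice     DECIMAL(10, 2) NOT NULL
    )
""")
conn.executemany("INSERT INTO Transactions VALUES (?, ?)", [(100, 1), (101, 2)])
conn.executemany(
    "INSERT INTO TransactionLineItems VALUES (?, ?, ?, ?, ?)",
    [
        (1, 100, 10, 2, 2.50),  # two units at 2.50
        (2, 100, 11, 3, 4.25),  # three units at 4.25
        (3, 101, 10, 1, 2.50),  # one unit at 2.50
    ],
)

# One header row, many line rows: each transaction's total is derived on demand.
totals = conn.execute("""
    SELECT TransactionID, SUM(Quantity * UnitPrice) AS Total
    FROM TransactionLineItems
    GROUP BY TransactionID
    ORDER BY TransactionID
""").fetchall()
print(totals)  # [(100, 17.75), (101, 2.5)]
```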

Step 4: Create a separate table for Products:

Products Table:

ProductID int

ProductName varchar

ProductDescription varchar

UnitPrice decimal
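Both TransactionLineItems and Products carry a UnitPrice. The post doesn't say why; one common reason, assumed here, is that the line item records the price actually charged, so a later change to the list price in Products doesn't rewrite sales history. A minimal SQLite sketch with hypothetical data:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Products (
        ProductID          INTEGER PRIMARY KEY,
        ProductName        VARCHAR(100),
        ProductDescription VARCHAR(500),
        UnitPrice          DECIMAL(10, 2)
    )
""")
conn.execute("""
    CREATE TABLE TransactionLineItems (
        LineItemID    INTEGER PRIMARY KEY,
        TransactionID INTEGER NOT NULL,
        ProductID     INTEGER NOT NULL REFERENCES Products(ProductID),
        Quantity      INTEGER NOT NULL,
        UnitPrice     DECIMAL(10, 2) NOT NULL  -- assumed: price charged at the time of sale
    )
""")
conn.execute("INSERT INTO Products VALUES (10, 'Widget', 'A small widget', 2.50)")

# Record a sale, copying the current list price onto the line item.
conn.execute("""
    INSERT INTO TransactionLineItems (TransactionID, ProductID, Quantity, UnitPrice)
    SELECT 100, ProductID, 2, UnitPrice FROM Products WHERE ProductID = 10
""")

# Later the list price goes up; the historical line item keeps the old price.
conn.execute("UPDATE Products SET UnitPrice = 3.00 WHERE ProductID = 10")
row = conn.execute("""
    SELECT li.UnitPrice AS charged, p.UnitPrice AS current
    FROM TransactionLineItems AS li JOIN Products AS p USING (ProductID)
""").fetchone()
print(row)
```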

After these normalization steps, the database structure was much cleaner and more efficient. Here's how the relationships between the tables look (read A --< B as "one A has many B"):

AccountManagers --< Clients

Clients --< Transactions --< TransactionLineItems

Products --< TransactionLineItems
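Putting the pieces together, here is a sketch of the whole schema in SQLite, trimmed to a few columns per table, with one query that walks every relationship from account manager down to product. Sample data and column sizes are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE AccountManagers (
        AccountManagerID INTEGER PRIMARY KEY, AccountManagerName VARCHAR(100),
        AccountManagerPhone VARCHAR(20));
    CREATE TABLE Clients (
        ClientID INTEGER PRIMARY KEY, ClientName VARCHAR(100),
        AccountManagerID INTEGER REFERENCES AccountManagers(AccountManagerID));
    CREATE TABLE Products (
        ProductID INTEGER PRIMARY KEY, ProductName VARCHAR(100), UnitPrice DECIMAL(10, 2));
    CREATE TABLE Transactions (
        TransactionID INTEGER PRIMARY KEY,
        ClientID INTEGER NOT NULL REFERENCES Clients(ClientID),
        TransactionDate DATE);
    CREATE TABLE TransactionLineItems (
        LineItemID INTEGER PRIMARY KEY,
        TransactionID INTEGER NOT NULL REFERENCES Transactions(TransactionID),
        ProductID INTEGER NOT NULL REFERENCES Products(ProductID),
        Quantity INTEGER NOT NULL, UnitPrice DECIMAL(10, 2) NOT NULL);

    -- Hypothetical sample data, inserted parents first so every foreign key resolves.
    INSERT INTO AccountManagers VALUES (1, 'Dana Lee', '555-0199');
    INSERT INTO Clients VALUES (1, 'Acme Corp', 1);
    INSERT INTO Products VALUES (10, 'Widget', 2.50), (11, 'Gadget', 4.25);
    INSERT INTO Transactions VALUES (100, 1, '2024-05-01');
    INSERT INTO TransactionLineItems VALUES (1, 100, 10, 2, 2.50), (2, 100, 11, 3, 4.25);
""")

# Each JOIN follows one "one --< many" relationship in the schema.
rows = conn.execute("""
    SELECT c.ClientName, m.AccountManagerName, t.TransactionDate,
           p.ProductName, li.Quantity, li.Quantity * li.UnitPrice AS LineTotal
    FROM Clients AS c
    JOIN AccountManagers AS m       ON m.AccountManagerID = c.AccountManagerID
    JOIN Transactions AS t          ON t.ClientID = c.ClientID
    JOIN TransactionLineItems AS li ON li.TransactionID = t.TransactionID
    JOIN Products AS p              ON p.ProductID = li.ProductID
    ORDER BY li.LineItemID
""").fetchall()
for row in rows:
    print(row)
```

The cost of normalization shows up here: answering one question now takes several joins, which is why indexes on the foreign-key columns usually matter in a real deployment.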

By separating the data into these normalized tables, I eliminated redundant copies of the same facts (an account manager's phone number, for example, now lives in exactly one row), which improves data integrity and makes the database easier to scale. With narrower tables and less duplicated data, the database can retrieve and process what each query needs more efficiently, so the application should be noticeably faster.

Conclusion

After a whirlwind week of wrestling with spreadsheets and SQL queries, the database normalization project was complete. I leaned back, took a deep breath, and admired my work.

The previously chaotic mess of data had been transformed into a sleek, efficient database structure. Redundant information was a thing of the past, and the performance was snappy.

I couldn't wait to show my boss the results. As I walked into her office, she looked up with a hopeful glint in her eye.

"Well, rookie," she began, "any progress on that database issue?"

I grinned. "Absolutely. Let me show you."

I pulled up the new database schema on her screen, walking her through each step of the normalization process. Her eyes widened with every explanation.

"Incredible! I never realized database design could be so... detailed," she exclaimed.

When I finished, she leaned back, a satisfied smile spreading across her face.

"Fantastic job, rookie. I knew you were the right person for this." She paused, then added, "I think this calls for a celebratory lunch. My treat. What do you say?"

I didn't need to be asked twice. As we headed out, a wave of pride and accomplishment washed over me. It had been hard work, but the payoff was worth it. Not only had I solved a critical issue for the business, but I'd also cemented my reputation as the go-to database guru.

From that day on, whenever performance issues or data management challenges cropped up, my boss would come knocking. And you know what? I didn't mind one bit. It was the perfect opportunity to flex my normalization muscles and keep that database running smoothly.

So, if you ever find yourself in a similar situation—a sluggish app, a tangled database, and a boss breathing down your neck—remember: normalization is your ally. Embrace the challenge, dive into the data, and watch your application transform into a lean, mean, performance-boosting machine.

And don't forget to ask your boss out for lunch. You've earned it!


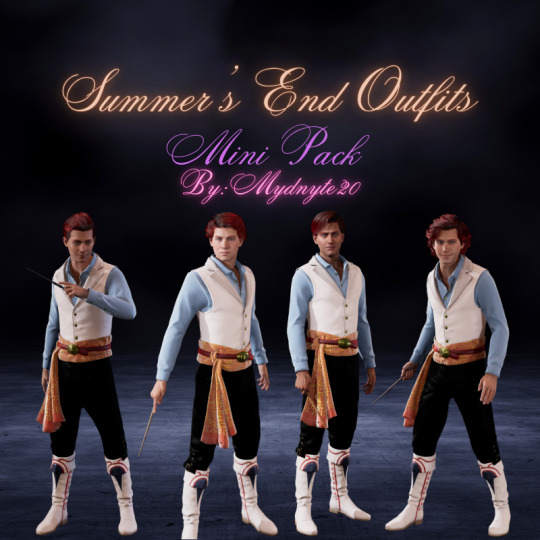

HL Outfit Mod Update: 3-13-25 @ 9:05 PM

♥ The female MC outfit has loaded successfully twice today, & one of those times was on my own MC as of tonight. The other? The lovely @ahriadnetv confirmed that it loaded for her this morning before I went to work.

♦ The gear icon isn't showing in Gladrags yet, but as of right now, I've changed the outfit's SQL code so that the icon should show. All that remains is to let the mod cook & test it again. If it loads, I will release both the MC outfit & another one that many have been waiting for. Which is it? Find out under the cut!

♣ That's right! The NPC Mini Pack of Summer's End will come alongside the MC version if the MC outfit icon loads correctly! The boys have loaded without fail for me in their outfits & all of them look like a classy date that you'd want to show off.

♠ I've worked a whole month on these & the boys were my first attempt at modding, so finally seeing progress & seeing them load perfectly has made me proud. Make no mistake: finally getting an MC outfit done has me excited, because it will be my first one to go live in-game.

It's spring break for many, so consider this my spring break project. :P

#hogwarts legacy#sebastian sallow#ominis gaunt#slytherin#king of snakes#king of curses#amit thakkar#garreth weasley#gryffindor#ravenclaw#heir of slytherin#hogwarts legacy mc#hogwarts legacy sebastian#hogwarts legacy ominis#hl mods#hogwarts legacy mods#outfit mods#mod update


Why Tableau is Essential in Data Science: Transforming Raw Data into Insights

Data science is all about turning raw data into valuable insights. But numbers and statistics alone don’t tell the full story—they need to be visualized to make sense. That’s where Tableau comes in.

Tableau is a powerful tool that helps data scientists, analysts, and businesses see and understand data better. It simplifies complex datasets, making them interactive and easy to interpret. But with so many tools available, why is Tableau a must-have for data science? Let’s explore.

1. The Importance of Data Visualization in Data Science

Imagine you’re working with millions of data points from customer purchases, social media interactions, or financial transactions. Analyzing raw numbers manually would be overwhelming.

That’s why visualization is crucial in data science:

Identifies trends and patterns – Instead of sifting through spreadsheets, you can quickly spot trends in a visual format.

Makes complex data understandable – Graphs, heatmaps, and dashboards simplify the interpretation of large datasets.

Enhances decision-making – Stakeholders can easily grasp insights and make data-driven decisions faster.

Saves time and effort – Instead of a lengthy written report, an interactive dashboard can tell the story in seconds.

Without tools like Tableau, insights would stay with the experts who can code and run statistical models. With Tableau, they become accessible to everyone, from data scientists to business executives.

2. Why Tableau Stands Out in Data Science

A. User-Friendly and Requires No Coding

One of the biggest advantages of Tableau is its drag-and-drop interface. Unlike Python or R, which require programming skills, Tableau allows users to create visualizations without writing a single line of code.

Even if you’re a beginner, you can:

✅ Upload data from multiple sources

✅ Create interactive dashboards in minutes

✅ Share insights with teams easily

This no-code approach makes Tableau ideal for both technical and non-technical professionals in data science.

B. Handles Large Datasets Efficiently

Data scientists often work with massive datasets, whether financial transactions, customer behavior, or healthcare records. Traditional tools like Excel struggle at this scale; an Excel worksheet tops out at 1,048,576 rows.

Tableau, on the other hand:

Can process millions of rows, especially when the data is loaded into an extract

Speeds up queries with its Hyper in-memory data engine

Supports live connections to the source database for up-to-date analysis

This makes it a go-to tool for businesses that need fast, data-driven insights.

C. Connects with Multiple Data Sources

A major challenge in data science is bringing together data from different platforms. Tableau seamlessly integrates with a variety of sources, including:

Databases: MySQL, PostgreSQL, Microsoft SQL Server

Cloud platforms: AWS, Google BigQuery, Snowflake

Spreadsheets and APIs: Excel, Google Sheets, web-based data sources

This flexibility allows data scientists to combine datasets from multiple sources without needing complex SQL queries or scripts.

D. Real-Time Data Analysis

Industries like finance, healthcare, and e-commerce rely on real-time data to make quick decisions. Tableau’s live data connection allows users to:

Track stock market trends as they happen

Monitor website traffic and customer interactions in real time

Flag suspicious transactions as they occur

Instead of waiting for someone to generate reports manually, users see insights as events unfold.

E. Advanced Analytics Without Complexity

While Tableau is known for its visualizations, it also supports advanced analytics. You can:

Forecast trends based on historical data

Perform clustering and segmentation to identify patterns (Tableau's built-in clustering uses k-means)

Integrate with Python and R (through TabPy and Rserve) for machine learning and predictive modeling
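Tableau's documentation describes its built-in forecasts as based on exponential smoothing. As a rough illustration of the idea (not Tableau's actual implementation, which also models trend and seasonality), here is simple exponential smoothing in Python on hypothetical monthly sales figures.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the one-step-ahead forecast after smoothing `series`.

    alpha must be in (0, 1]; higher values weight recent observations more heavily.
    """
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # start the smoothed level at the first observation
    for value in series[1:]:
        # New level = blend of the latest observation and the previous level.
        level = alpha * value + (1 - alpha) * level
    return level


monthly_sales = [100, 120, 110, 130]  # hypothetical data
forecast = simple_exponential_smoothing(monthly_sales, alpha=0.5)
print(forecast)  # 120.0
```

A larger alpha tracks recent changes faster; a smaller one smooths out more noise at the cost of reacting slowly.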

This means data scientists can combine deep analytics with intuitive visualization, making Tableau a versatile tool.

3. How Tableau Helps Data Scientists in Real Life

Tableau is used across a wide range of industries to make data science more impactful and accessible. Here are a few real-life scenarios:

A. Healthcare Analytics

Hospitals and research institutions use Tableau to:

✅ Monitor patient recovery rates and predict disease outbreaks

✅ Analyze hospital occupancy and resource allocation

✅ Identify trends in patient demographics and treatment results

B. Finance and Banking

Banks and investment firms rely on Tableau to:

✅ Detect fraud by analyzing transaction patterns

✅ Track stock market fluctuations and make informed investment decisions

✅ Assess credit risk and loan performance

C. Marketing and Customer Insights

Companies use Tableau to:

✅ Track customer buying behavior and personalize recommendations

✅ Analyze social media engagement and campaign effectiveness

✅ Optimize ad spend by identifying high-performing channels

D. Retail and Supply Chain Management

Retailers leverage Tableau to:

✅ Forecast product demand and adjust inventory levels

✅ Identify regional sales trends and adjust marketing strategies

✅ Optimize supply chain logistics and reduce delivery delays

These applications show why Tableau is a must-have for data-driven decision-making.

4. Tableau vs. Other Data Visualization Tools

There are many visualization tools available, but Tableau consistently ranks as one of the best. Here’s why:

Tableau vs. Excel – Excel struggles with big data and lacks interactivity; Tableau handles large datasets effortlessly.

Tableau vs. Power BI – Power BI is great for Microsoft users, but Tableau offers more flexibility across different data sources.

Tableau vs. Python (Matplotlib, Seaborn) – Python libraries require coding skills, while Tableau simplifies visualization for all users.

This makes Tableau the go-to tool for both beginners and experienced professionals in data science.

5. Conclusion

Tableau has become an essential tool in data science because it simplifies data visualization, handles large datasets, and integrates seamlessly with various data sources. It enables professionals to analyze, interpret, and present data interactively, making insights accessible to everyone—from data scientists to business leaders.

If you’re looking to build a strong foundation in data science, learning Tableau is a smart career move. Many data science courses now include Tableau as a key skill, as companies increasingly demand professionals who can transform raw data into meaningful insights.

In a world where data is the driving force behind decision-making, Tableau ensures that the insights you uncover are not just accurate but also clear, impactful, and easy to act upon.

#data science course#top data science course online#top data science institute online#artificial intelligence course#deepseek#tableau
