#AI and Privacy

Text

For instructions on how to opt out, look at the official staff post on the topic. It also gives more information on Tumblr's new policies. If you are opting out, remember to opt out each separate blog individually.

Please reblog this post, so it will get more votes!

#third party sharing#third-party sharing#scrapping#ai scrapping#Polls#tumblr#tumblr staff#poll#please reblog#art#everything else#features#opt out#policies#data privacy#privacy#please boost#staff

47K notes

Text

UPDATE! REBLOG THIS VERSION!

#reaux speaks#zoom#terms of service#ai#artificial intelligence#privacy#safety#internet#end to end encryption#virtual#remote#black mirror#joan is awful#twitter#instagram#tiktok#meetings#therapy

23K notes

Text

AI in Social Media: The Future is Now and It's Unbelievably Cool (and Slightly Terrifying)

In the ever-twisting saga of social media, we’ve stumbled upon a chapter that feels like it’s been ripped straight out of a sci-fi novel – the rise of AI-driven content creation. Yes, folks, we’re talking about the kind of AI that makes the robots in ‘Wall-E’ look like child’s play. So, buckle up as…

#AI and Privacy#AI Content Tools#AI in Social Media#Artificial Intelligence#Content Creation#Digital Ethics#Future of Social Media#Personalization Algorithms#Social Media Trends 2024#Tech Innovation

0 notes

Text

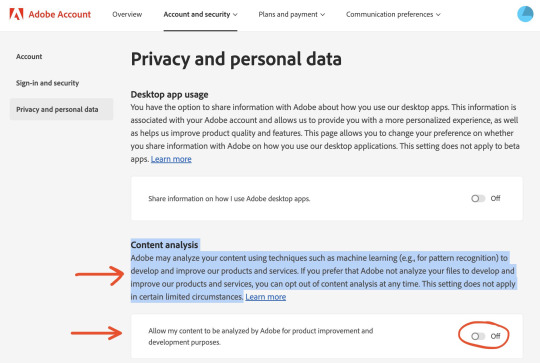

You know, every so often I think I should update my pirated copy of CS2.

Then I see things like this, and remember that I don't need it more than I need it, you know?

Dated 3/22/23

#adobe#photoshop#privacy#ai#online security#adobe accounts#adobe photoshop#art#yes I know about all of the alternatives#but I'm old#so leave me alone dammit

24K notes

Text

From Mythology to Reality: Exploring the Past, Present, and Future of AI

Artificial Intelligence (AI) has rapidly become a ubiquitous part of our lives, from voice assistants and image recognition to self-driving cars and personalized advertising. While the technology continues to advance, there is still much debate and discussion around the implications and ethics of its use.

#AI and job displacement#AI and privacy#AI applications#AI ethics#AI history#AI impact on society#Artificial Intelligence#Autonomous vehicles#Bias in AI#Future of AI#Healthcare AI#Image recognition#predictive analytics#Robotics#Virtual reality#Voice assistants

0 notes

Text

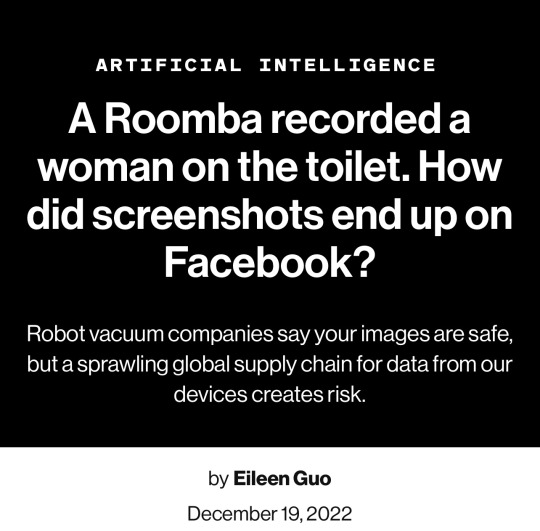

In the fall of 2020, gig workers in Venezuela posted a series of images to online forums where they gathered to talk shop. The photos were mundane, if sometimes intimate, household scenes captured from low angles—including some you really wouldn’t want shared on the Internet.

In one particularly revealing shot, a young woman in a lavender T-shirt sits on the toilet, her shorts pulled down to mid-thigh.

The images were not taken by a person, but by development versions of iRobot’s Roomba J7 series robot vacuum. They were then sent to Scale AI, a startup that contracts workers around the world to label audio, photo, and video data used to train artificial intelligence.

They were the sorts of scenes that internet-connected devices regularly capture and send back to the cloud—though usually with stricter storage and access controls. Yet earlier this year, MIT Technology Review obtained 15 screenshots of these private photos, which had been posted to closed social media groups.

The photos vary in type and in sensitivity. The most intimate image we saw was the series of video stills featuring the young woman on the toilet, her face blocked in the lead image but unobscured in the grainy scroll of shots below. In another image, a boy who appears to be eight or nine years old, and whose face is clearly visible, is sprawled on his stomach across a hallway floor. A triangular flop of hair spills across his forehead as he stares, with apparent amusement, at the object recording him from just below eye level.

iRobot—the world’s largest vendor of robotic vacuums, which Amazon recently acquired for $1.7 billion in a pending deal—confirmed that these images were captured by its Roombas in 2020.

Ultimately, though, this set of images represents something bigger than any one individual company’s actions. They speak to the widespread, and growing, practice of sharing potentially sensitive data to train algorithms, as well as the surprising, globe-spanning journey that a single image can take—in this case, from homes in North America, Europe, and Asia to the servers of Massachusetts-based iRobot, from there to San Francisco–based Scale AI, and finally to Scale’s contracted data workers around the world (including, in this instance, Venezuelan gig workers who posted the images to private groups on Facebook, Discord, and elsewhere).

Together, the images reveal a whole data supply chain—and new points where personal information could leak out—that few consumers are even aware of.

(continue reading)

#politics#james baussmann#scale ai#irobot#amazon#roomba#privacy rights#colin angle#privacy#data mining#surveillance state#mass surveillance#surveillance industry#1st amendment#first amendment#1st amendment rights#first amendment rights#ai#artificial intelligence#iot#internet of things

5K notes

Text

Hey, so I just saw this on Twitter and figured there are some people who would like to know: @infinitytraincrew is apparently getting deleted tonight, so if you wanna archive it, do it now

#infinity train#third-party sharing#owen dennis#anti ai#tumblr staff making stupid decisions again#cryptid says stuff#don't just glaze it actively nightshade it#ai scraping#data privacy

399 notes

Text

I think most of us should take the whole ai scraping situation as a sign that we should maybe stop giving google/facebook/big corps all our data and look into alternatives that actually value your privacy.

i know this is easier said than done because everybody under the sun seems to use these services, but I promise you it’s not impossible. In fact, I made a list of a few alternatives to popular apps and services, alternatives that are privacy first, open source and don’t sell your data.

right off the bat I suggest you stop using gmail. it’s trash and not secure at all. google can read your emails. in fact, google has access to all the data on your account and while what they do with it is already shady, I don’t even want to know what the whole ai situation is going to bring. a good alternative to a few google services is skiff. they provide a secure, e2ee mail service along with a workspace that can easily import google documents, a calendar and 10 gb free storage. i’ve been using it for a while and it’s great.

a good alternative to google drive is either koofr or filen. I use filen because everything you upload on there is end to end encrypted with zero knowledge. they offer 10 gb of free storage and really affordable lifetime plans.

google docs? i don’t know her. instead, try cryptpad. I don’t have the spoons to list all the great features of this service, you just have to believe me. nothing you write there will be used to train ai and you can share it just as easily. if skiff is too limited for you and you also need stuff like sheets or forms, cryptpad is here for you. the only downside i could think of is that they don’t have a mobile app, but the site works great in a browser too.

since there is no real alternative to youtube I recommend watching your little slime videos through a streaming frontend like freetube or newpipe. besides the fact that they remove ads, they also stop google from tracking what you watch. there is a bit of functionality loss with these services, but if you just want to watch videos privately they’re great.

if you’re looking for an alternative to google photos that is secure and end to end encrypted you might want to look into stingle, although in my experience filen’s photos tab works pretty well too.

oh, also, for the love of god, stop using whatsapp, facebook messenger or instagram for messaging. just stop. signal and telegram are literally here and they’re free. spread the word, educate your friends, ask them if they really want anyone to snoop around their private conversations.

regarding browsers, you know the drill. throw google chrome/edge in the trash (they’re really basically spyware disguised as browsers) and download either librewolf or brave. mozilla firefox can be a great secure option too, with a bit of tinkering.

if you wanna get a vpn (and I recommend you do) be wary that some of them are scammy. do your research, read their terms and conditions, familiarise yourself with their model. if you don’t wanna do that and are willing to trust my word, go with mullvad. they don’t keep any logs. it’s 5 euros a month with no different pricing plans or other bullshit.

lastly, whatever alternative you decide on, what matters most is that you don’t keep all your data in one place. don’t trust a service to take care of your emails, documents, photos and messages. store all these things in different, trustworthy (preferably open source) places. there is absolutely no reason google has to know everything about you.

do your own research as well, don’t just trust the first vpn service your favourite youtuber gets sponsored by. don’t trust random tech blogs to tell you what the best cloud storage service is — they get good money for advertising one or the other. compare shit on your own or ask a tech savvy friend to help you. you’ve got this.

1K notes

Text

Privacy first

The internet is embroiled in a vicious polycrisis: child safety, surveillance, discrimination, disinformation, polarization, monopoly, journalism collapse – not only have we failed to agree on what to do about these, there's not even a consensus that all of these are problems.

But in a new whitepaper, my EFF colleagues Corynne McSherry, Mario Trujillo, Cindy Cohn and Thorin Klosowski advance an exciting proposal that slices cleanly through this Gordian knot, which they call "Privacy First":

https://www.eff.org/wp/privacy-first-better-way-address-online-harms

Here's the "Privacy First" pitch: whatever is going on with all of the problems of the internet, all of these problems are made worse by commercial surveillance.

Worried your kid is being made miserable through targeted ads? No surveillance, no targeting.

Worried your uncle was turned into a Qanon by targeted disinformation? No surveillance, no targeting. Worried that racialized people are being targeted for discriminatory hiring or lending by algorithms? No surveillance, no targeting.

Worried that nation-state actors are exploiting surveillance data to attack elections, politicians, or civil servants? No surveillance, no surveillance data.

Worried that AI is being trained on your personal data? No surveillance, no training data.

Worried that the news is being killed by monopolists who exploit the advantage conferred by surveillance ads to cream 51% off every ad-dollar? No surveillance, no surveillance ads.

Worried that social media giants maintain their monopolies by filling up commercial moats with surveillance data? No surveillance, no surveillance moat.

The fact that commercial surveillance hurts so many groups of people in so many ways is terrible, of course, but it's also an amazing opportunity. Thus far, the individual constituencies for, say, saving the news or protecting kids have not been sufficient to change the way these big platforms work. But when you add up all the groups whose most urgent cause would be significantly improved by comprehensive federal privacy law, vigorously enforced, you get an unstoppable coalition.

America is decades behind on privacy. The last really big, broadly applicable privacy law we passed was a law banning video-store clerks from leaking your porn-rental habits to the press (Congress was worried about their own rental histories after a Supreme Court nominee's movie habits were published in the Washington City Paper):

https://en.wikipedia.org/wiki/Video_Privacy_Protection_Act

In the decades since, we've gotten laws that poke around the edges of privacy, like HIPAA (for health) and COPPA (data on under-13s). Both laws are riddled with loopholes and neither is vigorously enforced:

https://pluralistic.net/2023/04/09/how-to-make-a-child-safe-tiktok/

Privacy First starts with the idea of passing a fit-for-purpose, 21st century privacy law with real enforcement teeth (a private right of action, which lets contingency lawyers sue on your behalf for a share of the winnings):

https://www.eff.org/deeplinks/2022/07/americans-deserve-more-current-american-data-privacy-protection-act

Here's what should be in that law:

A ban on surveillance advertising:

https://www.eff.org/deeplinks/2022/03/ban-online-behavioral-advertising

Data minimization: a prohibition on collecting or processing your data beyond what is strictly necessary to deliver the service you're seeking.

Strong opt-in: None of the consent theater click-throughs we suffer through today. If you don't give informed, voluntary, specific opt-in consent, the service can't collect your data. Ignoring a cookie click-through is not consent, so you can just bypass popups and know you won't be spied on.

No preemption. The commercial surveillance industry hates strong state privacy laws like the Illinois biometrics law, and they are hoping that a federal law will pre-empt all those state laws. Federal privacy law should be the floor on privacy nationwide – not the ceiling:

https://www.eff.org/deeplinks/2022/07/federal-preemption-state-privacy-law-hurts-everyone

No arbitration. Your right to sue for violations of your privacy shouldn't be waivable in a clickthrough agreement:

https://www.eff.org/deeplinks/2022/04/stop-forced-arbitration-data-privacy-legislation

No "pay for privacy." Privacy is not a luxury good. Everyone deserves privacy, and the people who can least afford to buy private alternatives are most vulnerable to privacy abuses:

https://www.eff.org/deeplinks/2020/10/why-getting-paid-your-data-bad-deal

No tricks. Getting "consent" with confusing UIs and tiny fine print doesn't count:

https://www.eff.org/deeplinks/2019/02/designing-welcome-mats-invite-user-privacy-0
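To make two of these provisions concrete, here is a minimal sketch of what data minimization and strong opt-in might look like inside a service's own code. Everything in it is hypothetical and is not drawn from the whitepaper: the `REQUIRED_FIELDS` set, the `Consent` class, the `"marketing"` purpose and the form fields are assumptions made up for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical: the only fields this imaginary service needs to deliver an order.
REQUIRED_FIELDS = {"email", "shipping_address"}


@dataclass
class Consent:
    # Purposes the user has explicitly opted into. Empty by default,
    # so ignoring a pop-up means nothing extra is collected.
    opted_in: set = field(default_factory=set)

    def allows(self, purpose: str) -> bool:
        return purpose in self.opted_in


def minimize(submitted: dict, consent: Consent) -> dict:
    """Keep only what the service strictly needs, plus anything opted into."""
    kept = {k: v for k, v in submitted.items() if k in REQUIRED_FIELDS}
    # Optional data is stored only with a specific opt-in for that purpose.
    if consent.allows("marketing") and "phone" in submitted:
        kept["phone"] = submitted["phone"]
    return kept


form = {
    "email": "a@example.com",
    "shipping_address": "1 Main St",
    "phone": "555-0100",
    "browsing_history": ["..."],
}
print(minimize(form, Consent()))
print(minimize(form, Consent(opted_in={"marketing"})))
```

The design choice that matters is the default: with no recorded consent, the optional fields are simply dropped, and nothing outside the allow-list is ever stored.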

A Privacy First approach doesn't merely help all the people harmed by surveillance, it also prevents the collateral damage that today's leading proposals create. For example, laws requiring services to force their users to prove their age ("to protect the kids") are a privacy nightmare. They're also unconstitutional and keep getting struck down.

A better way to improve the kid safety of the internet is to ban surveillance. A surveillance ban doesn't have the foreseeable abuses of a law like KOSA (the Kids Online Safety Act), like bans on information about trans healthcare, medication abortions, or banned books:

https://www.eff.org/deeplinks/2023/05/kids-online-safety-act-still-huge-danger-our-rights-online

When it comes to the news, banning surveillance advertising would pave the way for a shift to contextual ads (ads based on what you're looking at, not who you are). That switch would change the balance of power between news organizations and tech platforms – no media company will ever know as much about their readers as Google or Facebook do, but no tech company will ever know as much about a news outlet's content as the publisher does:

https://www.eff.org/deeplinks/2023/05/save-news-we-must-ban-surveillance-advertising
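As a rough illustration of the difference, a contextual ad picker only needs the text of the page being read. The sketch below is a toy under stated assumptions: the `ADS` inventory, its keywords and the `pick_contextual_ad` function are invented for this example, and real ad systems are far more elaborate.

```python
import re

# Hypothetical ad inventory: each ad is tagged with topic keywords.
ADS = {
    "hiking boots": {"trail", "hiking", "mountain"},
    "stand mixer": {"recipe", "baking", "bread"},
    "city bike": {"commute", "cycling", "transit"},
}


def pick_contextual_ad(page_text: str):
    """Choose an ad from the words on the page; no user data is an input."""
    words = set(re.findall(r"[a-z]+", page_text.lower()))
    # Score each ad by how many of its keywords appear in the article.
    scores = {ad: len(keywords & words) for ad, keywords in ADS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


article = "A sourdough bread recipe for beginners who are new to baking"
print(pick_contextual_ad(article))  # prints "stand mixer"
```

Note that the function signature has no user ID, cookie or profile argument: the publisher's knowledge of its own content is the only input.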

This is a much better approach than the profit-sharing arrangements that are being trialed in Australia, Canada and France (these are sometimes called "News Bargaining Codes" or "Link Taxes"). Funding the news by guaranteeing it a share of Big Tech's profits makes the news into partisans for that profit – not the Big Tech watchdogs we need them to be. When Torstar, Canada's largest news publisher, struck a profit-sharing deal with Google, they killed their longrunning, excellent investigative "Defanging Big Tech" series.

A privacy law would also protect access to healthcare, especially in the post-Roe era, when Big Tech surveillance data is being used to target people who visit abortion clinics or secure medication abortions. It would end the practice of employers forcing workers to wear health-monitoring gadgets. This is characterized as a "voluntary" way to get a "discount" on health insurance – but in practice, it's a way of punishing workers who refuse to let their bosses know about their sleep, fertility, and movements.

A privacy law would protect marginalized people from all kinds of digital discrimination, from unfair hiring to unfair lending to unfair renting. The commercial surveillance industry shovels endless quantities of our personal information into the furnaces that fuel these practices. A privacy law shuts off the fuel supply:

https://www.eff.org/deeplinks/2023/04/digital-privacy-legislation-civil-rights-legislation

There are plenty of ways that AI will make our lives worse, but copyright won't fix it. For issues of labor exploitation (especially of creative workers), the answer lies in labor law:

https://pluralistic.net/2023/10/01/how-the-writers-guild-sunk-ais-ship/

And for many of AI's other harms, a muscular privacy law would starve AI of some of its most potentially toxic training data:

https://www.businessinsider.com/tech-updated-terms-to-use-customer-data-to-train-ai-2023-9

Meanwhile, if you're worried about foreign governments targeting Americans – officials, military, or just plain folks – a privacy law would cut off one of their most prolific and damaging sources of information. All those lawmakers trying to ban Tiktok because it's a surveillance tool? What about banning surveillance, instead?

Monopolies and surveillance go together like peanut butter and chocolate. Some of the biggest tech empires were built on mountains of nonconsensually harvested private data – and they use that data to defend their monopolies. Legal privacy guarantees are a necessary precursor to data portability and interoperability:

https://www.eff.org/wp/interoperability-and-privacy

Once we are guaranteed a right to privacy, lawmakers and regulators can order tech giants to tear down their walled gardens, rather than relying on tech companies to (selectively) defend our privacy:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

The point here isn't that privacy fixes all the internet's woes. The policy is "privacy first," not "just privacy." When it comes to making a new, good internet, there's plenty of room for labor law, civil rights legislation, antitrust, and other legal regimes. But privacy has the biggest constituency, gets us the most bang for the buck, and has the fewest harmful side-effects. It's a policy we can all agree on, even if we don't agree on much else. It's a coalition in potentia that would be unstoppable in reality. Privacy first! Then – everything else!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#privacy first#eff#privacy#surveillance#surveillance advertising#cold war 2.0#tiktok#saving the news from big tech#competition#interoperability#interop#online harms#ai#digital discrimination#discrimination#health care#hippa#medical privacy

436 notes

Text

Private issues

#ai generated#ai nude#ai model#ai girl#nudity#hot nude#lavatory#restroom#private#privacy#sexy content#sexy pose#sexy breast#hot asian babe#asian#asian chick#asian goddess

197 notes

Text

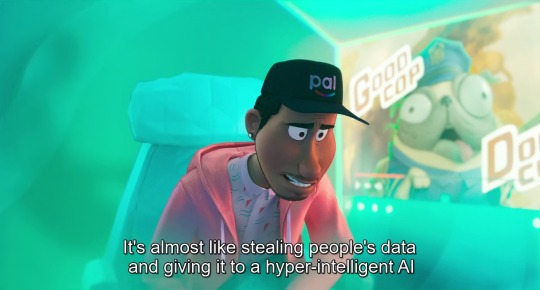

(from The Mitchells vs. the Machines, 2021)

#the mitchells vs the machines#data privacy#ai#artificial intelligence#digital privacy#genai#quote#problem solving#technology#sony pictures animation#sony animation#mike rianda#jeff rowe#danny mcbride#abbi jacobson#maya rudolph#internet privacy#internet safety#online privacy#technology entrepreneur

341 notes

Text

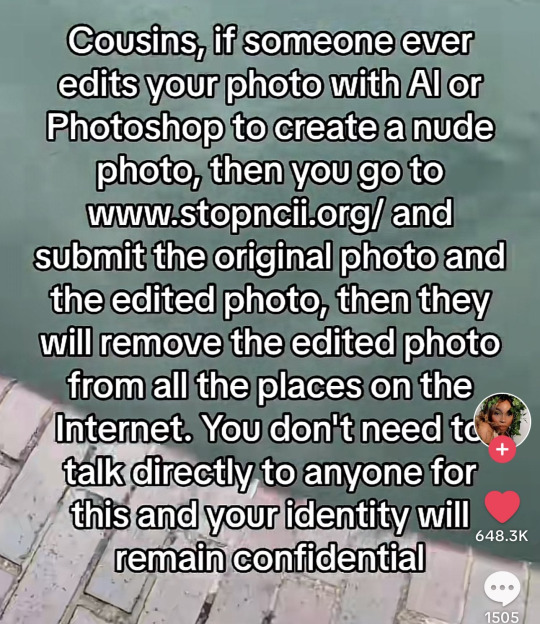

"Stopncii.org is a free tool designed to support victims of Non-Consensual Intimate Image (NCII) abuse."

"Revenge Porn Helpline is a UK service supporting adults (aged 18+) who are experiencing intimate image abuse, also known as, revenge porn."

"Take It Down (ncmec.org) is for people who have images or videos of themselves nude, partially nude, or in sexually explicit situations taken when they were under the age of 18 that they believe have been or will be shared online."

#important information#image desc in alt text#informative#stop ai#anti ai#safety#internet safety#exploitation#tell your friends#stay informed#the internet#internet privacy#online safety#stay safe#important#openai#tiktok screenshots#tiktok#life tips#ysk#you should know#described#alt text#alt text provided#alt text added#alt text in image#alt text described#alt text included#id in alt text

564 notes

Text

ABOUT THE KOSA BILL. PLEASE TAKE A MOMENT TO READ THIS.

please help us stop the kosa bill. I may not know much about how exactly to stop it but in the posts I've reblogged recently there are a lot of resources.

this bill will be reviewed in march, likely the 13-16th. (I updated this)

if you cannot donate or call anyone, help spread information and awareness.

the kosa bill will censor lgbtq spaces on the internet, restrict minors’ internet access, and prevent us from interacting with most if not all social media websites. it’s almost guaranteed that parents will see our activity online. this is not okay for some minors. we won’t be able to see anything related to lgbtq and things related to the racist treatment of many people historically.

please spread awareness about this. warn the people you know. donate. sign petitions. call and/or email your senators. follow blogs that provide resources such as @stopkosa and @anti-kosa-bill-links. anything you can. we cannot let this happen. no good will come out of it.

this affects anything and everything.

social media: tumblr, twitter, facebook, tiktok, youtube, instagram, and even more.

“games”: genshin impact, gacha, character.ai (not really a game), and even more.

this cannot happen.

we don’t want to be shut out from the world.

#important#kosa bill#kosa act#stop kosa#kosa#help#privacy#lgbtqiia+#lgbtq#lgbtq rights#black lives matter#blm#i stand AGAINST the kosa bill#character.ai#character ai#tumblr#TikTok#YouTube#instagram#social media#Gacha#gacha life 2#gacha club#all of these apps will be affected by the kosa bill#serious#serious post#reblog#repost#internet freedom#internet

131 notes

Text

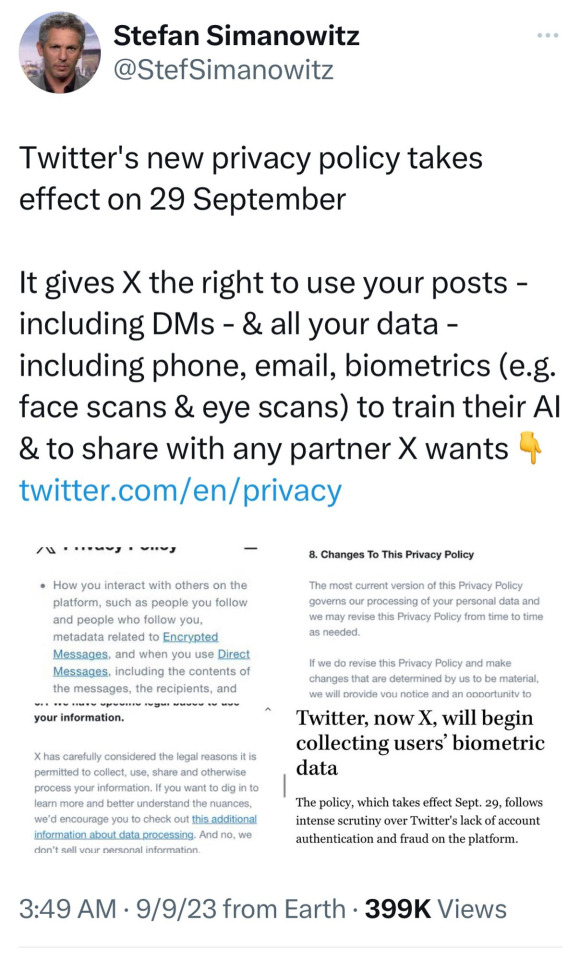

Anyone who follows me on here with a Twitter/X account:

In case you haven't heard, the Twitter Privacy Policy is changing on September 29th. The new policy states that any public content uploaded there will be used to train AI models. This, in addition to the overarching content policy which gives Twitter the right to "use, copy, reproduce, process, adapt, modify, publish and display" any content published on the platform without permission or creator compensation, means that any original content on there could potentially be used to generate AI content for use on the platform, without the original creator's consent.

If you publish original content on Twitter (especially art) and you don't like or agree with the policy update, now may be the time to review the changes for yourself and if necessary take things down before the policy update is published.

Edit: I wanna offer a brief apology for the original post, my wording was a bit unclear and may have drawn people to the wrong assumptions! I have changed the original post a bit now to hopefully be a more accurate reflection of the situation.

Let me just clarify some things since I certainly don't wanna fearmonger and also I feel like some people may take this more seriously than it actually is!:

The part of the privacy policy I mentioned regarding "use, copy, reproduce, process, adapt, modify, publish and display" is included in the policies of every social media site nowadays. That part on its own is not scary, as they have to include that in order to show your content to other users and have it published outside of the site (ie. embedding on other sites, news articles).

The scenario I mentioned is pretty unlikely to happen, I highly doubt the site will suddenly start stealing art or other content and use it to pump stuff out all over the web without consent or compensation. I simply mentioned it because the fact that the data is being used to train AI models means that stuff on there may end up being used as references for it at some point, and that could then lead to the scenario I mentioned where people's content becomes the food for new AI content. I don't know myself how likely that is for definite, but I know many people still don't trust the training of AI, which is why I feel it is important to mention.

I cannot offer professional or foolproof advice to people on the platform who have posted content before, I'm just some guy! I don't wanna make people freak out or anything. If you have content already on the site, chances are it's probably already floating around somewhere you wouldn't want it. That's, unfortunately, the reality of the internet. You don't have to take down everything you've ever posted or delete your accounts, however I wouldn't recommend posting new content on the site if you are uncomfortable with the changes.

THIS POST WAS MADE FOR AWARENESS ONLY!! I AM NOT SUGGESTING WHAT YOU SHOULD AND SHOULD NOT DO, I AM NOT RESPONSIBLE!! /lh

TL;DR: I worded the original post slightly poorly, for clarification the policy being changed to allow for AI training doesn't automatically mean that all your creations will be stolen and be recreated with AI or anything, it just means that those creations will be used to teach the AI to make things of its own. If you don't like the sound of that, consider looking into this matter yourself for a more detailed insight.

308 notes

Text

Okay, look, they talk to a Google rep in some of the video clips, but I give it a pass because this FREE course is a good baseline for personal internet safety that so many people just do not seem to have anymore. It's done in short video clip and article format (the videos average about a minute and a half). This is some super basic stuff like "What is PII and why you shouldn't put it on your twitter" and "what is a phishing scam?" Or "what is the difference between HTTP and HTTPS and why do you care?"

It's worrying to me how many people I meet or see online who just do not know even these absolute basic things, who are at constant risk of being scammed or hacked and losing everything. People who barely know how to turn their own computers on because corporations have made everything a proprietary app or exclusive hardware option that you must pay constant fees just to use. Especially young, somewhat isolated people who have never known a different world and don't realize they are being conditioned to be metaphorical prey animals in the digital landscape.

Anyway, this isn't the best internet safety course but it's free and easy to access. Gotta start somewhere.
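For anyone who wants the HTTP versus HTTPS question in concrete terms, here's a tiny sketch using Python's standard library. The `uses_https` helper and the example URLs are made up for illustration and are not from the course.

```python
from urllib.parse import urlparse


def uses_https(url: str) -> bool:
    """Return True when the URL's scheme is https (traffic encrypted in transit)."""
    return urlparse(url).scheme.lower() == "https"


print(uses_https("https://example.com/login"))  # True
print(uses_https("http://example.com/login"))   # False
```

HTTPS only means the connection is encrypted. It doesn't tell you whether the site on the other end is trustworthy, which is why phishing pages can have the padlock too.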

Here's another short, easy, free online course about personal cyber security (GCFGlobal.org Introduction to Internet Safety)

Bonus videos:

youtube

(Jul 13, 2023, runtime 15:29)

"He didn't have anything to hide, he didn't do anything wrong, anything illegal, and yet he was still punished."

youtube

(Apr 20, 2023; runtime 9:24 minutes)

"At least 60% use their name or date of birth as a password, and that's something you should never do."

youtube

(March 4, 2020, runtime 11:18 minutes)

"Crossing the road safely is a basic life skill that every parent teaches their kids. I believe that cyber skills are the 21st century equivalent of road safety in the 20th century."
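On the name-or-date-of-birth password point from the second video, here's a minimal sketch of the kind of check a signup form could run. The `contains_personal_info` function, the example person and the handful of date formats are my own assumptions for illustration, and the list is nowhere near exhaustive.

```python
from datetime import date


def contains_personal_info(password: str, name: str, birth: date) -> bool:
    """Flag passwords built from the owner's name or date of birth."""
    lowered = password.lower()
    # Each part of the name, ignoring very short fragments.
    name_parts = [p for p in name.lower().split() if len(p) >= 3]
    # A few common ways of writing a birth date (illustrative, not exhaustive).
    date_forms = [
        birth.strftime("%Y"),
        birth.strftime("%d%m%Y"),
        birth.strftime("%m%d%Y"),
        birth.strftime("%d%m%y"),
        birth.strftime("%m%d%y"),
    ]
    return any(part in lowered for part in name_parts + date_forms)


birthday = date(1990, 4, 12)
print(contains_personal_info("Jordan1990!", "Jordan Lee", birthday))  # True
print(contains_personal_info("correct horse battery staple", "Jordan Lee", birthday))  # False
```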

#you need to protect yourself#internet literacy#computer literacy#internet safety#privacy#online#password managers#security questions#identity theft#Facebook#browser safety#google#tesla#clearwater ai#people get arrested when google makes a mistake#lives are ruined because your Ring is spying on you#they aren't just stealing they are screwing you over#your alexa is not a woman it's a bug#planted by a supervillain who smirks at you#as they sell that info to your manager#oh you have nothing to hide?#then what's your credit card number?#listen I'm in a mood about this right now#Youtube

147 notes

Text

This from Xitter (pronounced sh*tter). I think this is the final nail for me. I’m out.

157 notes
