# Automated Image Recognition


Text

Vision in Focus: The Art and Science of Computer Vision & Image Processing.

Sanjay Kumar Mohindroo. skm.stayingalive.in

An insightful blog post on computer vision and image processing, highlighting its impact on medical diagnostics, autonomous driving, and security systems.

Computer vision and image processing have reshaped the way we see and interact with the world. These fields power systems that read images, detect objects and analyze video…

#AI#Automated Image Recognition#Autonomous Driving#Collaboration#Community#Computer Vision#data#Discussion#Future Tech#Health Tech#Image Processing#Innovation#Medical Diagnostics#News#Object Detection#Privacy#Sanjay Kumar Mohindroo#Security Systems#Tech Ethics#tech innovation#Video Analysis

0 notes

Text

Simplifying OCR Data Collection: A Comprehensive Guide

At Globose Technology Solutions, we are committed to providing state-of-the-art OCR solutions to meet the specific needs of our customers. Contact us today to learn more about how OCR can transform your data collection workflow.

#OCR data collection#Optical Character Recognition (OCR)#Data Extraction#Document Digitization#Text Recognition#Automated Data Entry#Data Capture#OCR Technology#Document Processing#Image to Text Conversion#Data Accuracy#Text Analytics#Invoice Processing#Form Recognition#Natural Language Processing (NLP)#Data Management#Document Scanning#Data Automation#Data Quality#Compliance Reporting#Business Efficiency#data collection#data collection company

0 notes

Text

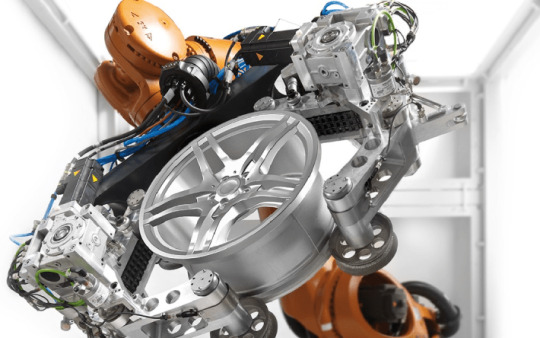

🦾 A006R: Robotic X-ray Inspection of Cast Aluminium Automotive Wheels. Robot arm, 6-axis, with special gripper; BarCode inline recognition; ISAR image evaluation software; XEye detector. X-ray inspection... via HeiDetect, HEITEC PTS and MetrologyNews ▸ TAEVision Engineering on Pinterest

Data A006R - Nov 10, 2023

#automation#robot#robotics#robot arm 6-axis#special gripper#BarCode inline recognition#ISAR image evaluation software#XEye detector#X-ray Inspection#via HeiDetect#HEITEC PTS#MetrologyNews

1 note


Text

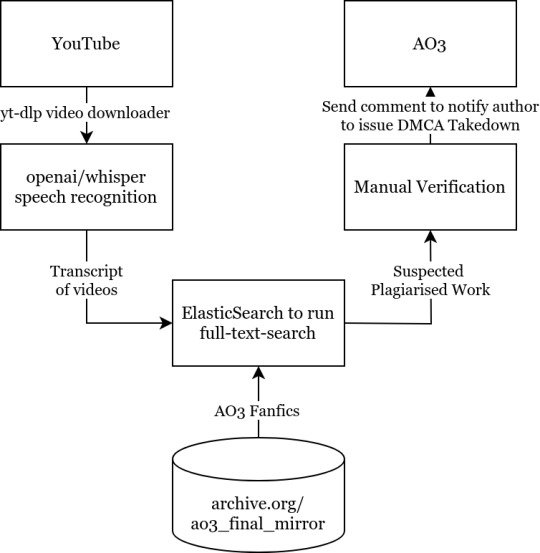

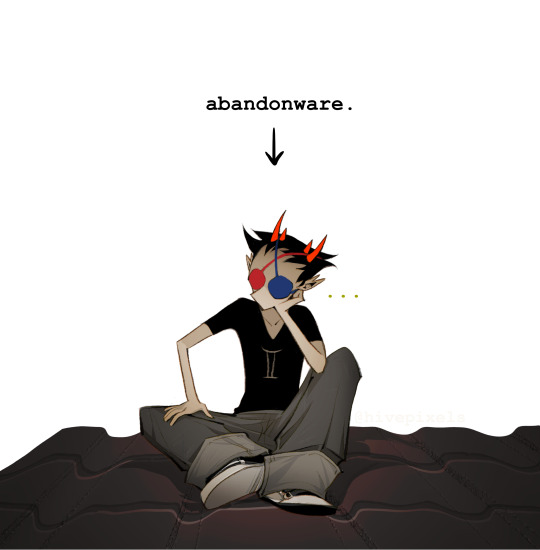

I’m Declaring War Against “What If” Videos: Project Copy-Knight

What Are “What If” Videos?

These videos follow a common recipe: a narrator, given a fandom (usually an anime one like My Hero Academia or Naruto), explores an alternative timeline where something is different. Maybe the main character has extra powers, maybe a key plot point goes differently. They then go on to make up a whole new story, detailing the conflicts and romance between characters, much like an ordinary fanfic.

Except they are fanfics. Actual fanfics, pulled off AO3, FFN and Wattpad, given a different title, with random thumbnail and background images added, and narrated by computer text-to-speech synthesizers.

They are very easy to make: pick a fanfic, copy all the text into a text-to-speech generator, mix the resulting audio file with some generic art from the fandom as the background, give it a snappy title like “What if Deku had the Power of Ten Rings”, photoshop an attention-grabbing thumbnail, dump it onto YouTube and get thousands of views.

In fact, the process is so straightforward and requires so little effort that it’s pretty clear some of these channels have automated pipelines to pump these out en masse. They don’t bother asking the fic authors for permission. Sometimes they don’t even bother putting the fic’s link in the description or crediting the author. These content farms then monetise the videos, so they get a cut of YouTube’s ad revenue.

In short, an industry has emerged from the systematic copyright theft of fanfiction, for profit.

Project Copy-Knight

Since the adversaries almost certainly have automated systems set up for this, the only realistic countermeasure is another automated system. Identifying fanfics manually, by listening to the videos and searching for them by tags, is just too slow and impractical.

And so, I came up with a simple automated pipeline to identify the original authors of “What If” videos.

It would download these videos, run speech recognition on them, search the text through a database full of AO3 fics, and identify which work each one came from. After manual confirmation, the original authors would be notified that their works have been subject to copyright theft, and given instructions on how to DMCA-strike the channel out of existence.
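The post does not publish the toolchain's code, and it names a full-text-search query as the lookup step. As a rough stand-in, here is a minimal pure-Python sketch of the "search the text through a database" step, assuming the AO3 works are already available locally as a dict of work ID to text. It ranks works by how many five-word "shingles" (overlapping word windows) they share with the transcript. `shingles`, `build_index`, `find_candidates` and the window size of 5 are hypothetical choices, not the author's implementation.

```python
import re
from collections import Counter, defaultdict


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def build_index(works: dict[str, str], k: int = 5) -> dict[tuple[str, ...], set[str]]:
    """Map each shingle to the IDs of the works that contain it (an inverted index)."""
    index: dict[tuple[str, ...], set[str]] = defaultdict(set)
    for work_id, text in works.items():
        for sh in shingles(text, k):
            index[sh].add(work_id)
    return index


def find_candidates(transcript: str, index: dict[tuple[str, ...], set[str]],
                    k: int = 5, top: int = 3) -> list[tuple[str, int]]:
    """Rank works by how many of the transcript's shingles they share."""
    votes: Counter = Counter()
    for sh in shingles(transcript, k):
        for work_id in index.get(sh, ()):
            votes[work_id] += 1
    return votes.most_common(top)
```

Shingles keep the lookup tolerant of the odd misheard word: one transcription error only spoils the few windows that overlap it, and the rest still vote for the right work.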

I built a prototype over the weekend, and it works surprisingly well:

On a randomly selected YouTube channel (in this case Infinite Paradox Fanfic), the toolchain was able to identify the origin of half of the content. The raw output, after manual verification, turned out to be extremely accurate. Identifying the source of a video took about 5 minutes, most of which was spent running Whisper; the full-text-search query and Levenshtein analysis themselves took less than 5 seconds.
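The post does not say how the Levenshtein analysis is scored. One plausible approach, sketched here under that assumption, compares the transcript and a candidate passage word by word and normalises the edit distance into a similarity between 0 and 1. Word-level tokens are also an assumption, on the reasoning that a speech-to-text transcript tends to differ from the source by whole misheard words rather than single characters. `levenshtein` and `similarity` are illustrative names.

```python
def levenshtein(a: list[str], b: list[str]) -> int:
    """Edit distance (insertions, deletions, substitutions) between two token lists."""
    # Two-row dynamic programming: before processing a[i-1], prev[j] holds the
    # distance between a[:i-1] and b[:j].
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,              # delete x
                            curr[j - 1] + 1,          # insert y
                            prev[j - 1] + (x != y)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def similarity(transcript: str, passage: str) -> float:
    """1.0 for identical word sequences, falling towards 0.0 as they diverge."""
    a, b = transcript.lower().split(), passage.lower().split()
    if not a and not b:
        return 1.0
    return 1.0 - levenshtein(a, b) / max(len(a), len(b))
```

A score near 1.0 suggests the narration follows the fic almost verbatim. Punctuation is not stripped here, and any cut-off for flagging a match would need tuning, which is one reason the manual verification step still matters.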

The other videos probably came from fanfiction websites other than AO3, like fanfiction.net or Wattpad. As I do not have access to archives of those websites, I cannot identify the other ones, but they are almost certainly not original.

Armed with this fantastic proof-of-concept, I’m officially declaring war against “What If” videos. The mission statement of Project Copy-Knight will be the elimination of “What If” videos based on the theft of AO3 content on YouTube.

I Need Your Help

I am acutely aware that I cannot accomplish this on my own. There are many moving parts in this system that simply cannot be fully automated, like the selection of YouTube channels to feed into the toolchain, the manual verification step to prevent false positives being sent to authors, reaching out to authors who have comments disabled, etc.

So, if you are interested in helping to defend fanworks, or just want to have a chat or ask about the technical details of the toolchain, please consider joining my Discord server. I could really use your help.

------

See full blog article and acknowledgements here: https://echoekhi.com/2023/11/25/project-copy-knight/

7K notes


Note

i am in love with your sollux i think

sollux love party :]

if you’re interested heres some of my personal fondness thoughts on him.. big warning for the mega long read ahead aye

as we alr know sollux's rejection of participation somewhat mirrors dave's rejection of heroism, but even without getting cooked to completion i still find sollux's character v compelling beyond the fourth wall

as someone who doesnt get a pinch of that Protagonist Sparkle to begin with, he can openly say he wants to leave anytime…. and unlike dave, he actually Can leave the scene anytime. but he can never be truly Free from the story via permanent character death like the other trolls.

his irrelevancy is indeed relevant - he’s there so u can point him out.

while his image is intended to be a relic of past internet subculture, his role is not only about hehehaha being a Chad or a 2000s cyberforum 2²chan haxxor ragequit gamebro.

his continued existence also happens to add a Bit to the overarching themes of homestuck! a Bit that gives him longer-lasting thematic relevance compared to the trolls who could’ve had more character potential but didnt get to survive beyond the main story.

the Bit in question:

his defiance contributes to the illusion of agency (treating characters = people with autonomy). he’s “aware” of it, and that recognition is worth noting enough to forcibly keep him alive as both reward and punishment.

considering how his personality & classpect is designed its definitely a very haha thing for hussie to do LOL. he’s made to be op asf so he's resigned to doing dirty work, gradually deteriorating along the way but never truly dying. as fans have mentioned before, him openly rejecting involvement after a while of grim tolerance is like if the sim u were controlling suddenly stopped, looked up and gave u the finger while u were step six into the walkthrough for Every Possible Sim Death Animation.

but since he’s just a sim… the more he hates it, the more you keep him around. if ur sim started complaining abt your whimsical household storyline you’d definitely keep that little fuck.

but yeah i like that sollux is just idling. the significance of his presence being that one dude who's always reliably Somewhere, root core Unchanged, no individual ambitions (possibly due to fear of consequence?), and design-wise: a staple representative product of his time.

compared to dirk's character, who has aged phenomenally well into the present (themes of control + AR + artificial intelligence, clearer exploration around navigating relationships/sexuality, infinite possibilities of self-splinterhood and trait inheritance), sollux's potential is really... contained. bitter. defeatist. limiting and frustrating in the way old tech is.

the world continues moving on to shinier, brighter, more advanced automated things - minimalist and metaverse or whatever but sollux is still here 🧍♂️ going woohoo redblue 3d. (tho personally i imagine his vibe similar to what the kids call cassette futurism on pinterest mixed w more grimy grunge insectoid influences eheh)

conceptually-speaking,

at the foundation of it all, the rapid pace of modern development was built off the understanding of ppl like sollux in the past, who were There actively at work while the dough was still beginning to rise

thats one of the cool things abt the idea of trolls preceding humans! the idea that trolls like sollux excelled back when lots of basic shit still needed to be discovered, building structures like networks and codes from scratch, and humans will eventually inherit and reinvent that knowledge in ways that become so optimized it makes the old manual effort seem archaic, slow, and labour-intensive.

but despite information/resources/shortcuts being more accessible now, much of the new highly-anticipated stuff released on trend still ends up unfinished, inefficient, or expiring quickly due to cutting corners under severe capitalistic pressures

meanwhile, some of the old stuff frm past generations of thorough, exploratory and perfectionistic development still remains working, complete, and ever so sturdy.

those things continue to exist, just outside our periphery with either:

zero purpose left for modern needs (outdated/obsolete)

or

far too important to replace or destroy, bcs of its surprisingly essential and circumstantial usefulness in one niche specific area.

which are honestly? both points that sum up sollux pree well.

dramatic ending sorry. anw are u still on the fence or are u Sick abt him like me </3

#ask#anon#sollux captor#homestuck#hs2 spoilers#2023#vioart#hs2 sollux explaining girls and bitches to john: 🗣️🗣️🗣️#mr foods‚ setting up the visuals: LMAO ok pause. cool story bro theyre all gone its just u n ur sandwich bro.#now that i think abt it sol's kind of a toaster? awkwardly takes up countertop space#lacks the versatility and sociability of an air fryer/pressure cooker. unwashed and littered w crumbs!#but sometimes the clear‚ frank simplicity of the toaster is a temporary lifesaver for ppl who struggle w low appetite / decision fatigue#or ppl who just have a habit of eating toast for breakfast LOL#and eh ¯\ _(ツ)_/¯ even if u dont feel like toasting today thats ok he's still gonna be sitting there 👍👍#a funnyman..... i curse him in my pan but root for him in my biscuit 🫶

1K notes


Note

What objections would you actually accept to AI?

Roughly in order of urgency, at least in my opinion:

Problem 1: Curation

The large tech monopolies have essentially abandoned curation and are raking in the dough by monetizing the process of showing you crap you don't want.

The YouTube content farm; the Steam asset flip; SEO spam; drop-shipped crap on Etsy and Amazon.

AI makes these pernicious, user-hostile practices even easier.

Problem 2: Economic disruption

This has a bunch of aspects, but key to me is that *all* automation threatens people who have built a living on doing work. If previously difficult, high skill work suddenly becomes low skill, this is economically threatening to the high skill workers. Key to me is that this is true of *all* work, independent of whether the work is drudgery or deeply fulfilling. Go automate an Amazon fulfillment center and the employees will not be thanking you.

There's also just the general threat of existing relationships not accounting for AI, in terms of, like, residuals or whatever.

Problem 3: Opacity

Basically all these AI products are extremely opaque. The companies building them are not at all transparent about the source of their data, how it is used, or how their tools work. Because they view the tools as things they own whose outputs reflect on their company, they tamper with the outputs to try to ensure that those outputs don't reflect badly on the company.

These processes are opaque and not communicated clearly or accurately to end users; in fact, because AI text tools hallucinate, they will happily give you *fake* error messages if you ask why they returned an error.

There have been allegations that Midjourney and OpenAI don't comply with European data protection laws, as well.

There is something that does bother me, too, about the use of big data as a profit center. I don't think it's a copyright or theft issue, but it is a fact that these companies are using public data to make a lot of money while being extremely closed off about how exactly they do that. I'm not a huge fan of the closed source model for this stuff when it is so heavily dependent on public data.

Problem 4: Environmental, maybe?

Related to problem 3, it's just not too clear what kind of impact all this AI stuff is having in terms of power costs. Honestly it all kind of does something, so I'm not hugely concerned, but I do kind of privately think that in the not too distant future a lot of these companies will stop spending money on enormous server farms just so that internet randos can try to get ChatGPT to write porn.

Problem 5: They kind of don't work

Text programs frequently make stuff up. Actually, a friend pointed out to me that, in pulp sci-fi, robots will often say something like, "There is an 80% chance the guards will spot you!"

If you point one of those AI assistants at something and ask it what it is, a lot of the time it just confidently says the wrong thing. This same friend pointed out that, under the hood, image recognition software works with probabilities. But I saw lots of videos of the Rabbit AI assistant thing confidently being completely wrong about what it was looking at.
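To make the friend's point concrete: an image classifier typically ends with a softmax that turns raw scores into probabilities, so an assistant could report its confidence, or decline to answer below some threshold, instead of stating its top guess as fact. A minimal sketch; the labels, scores and the 0.8 threshold are made up for illustration, and this is not a description of how the Rabbit device or any other product works.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert raw classifier scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def describe(labels: list[str], scores: list[float], threshold: float = 0.8) -> str:
    """Report the top label with its probability, or admit uncertainty."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return f"Not sure; best guess is {labels[best]} ({probs[best]:.0%})"
    return f"{labels[best]} ({probs[best]:.0%})"


print(describe(["cat", "dog"], [5.0, 0.0]))  # cat (99%)
print(describe(["cat", "dog"], [1.0, 0.9]))  # Not sure; best guess is cat (52%)
```

Softmax probabilities can themselves be overconfident, so a threshold like this is only a partial fix.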

ChatGPT hallucinates. Image generators are unable to consistently produce the same character, and it's actually pretty difficult and unintuitive to produce a specific image rather than a generic one.

This may be fixed in the near future or it might not, I have no idea.

Problem 6: Kinetic sameness.

One of the subtle changes of the last century is that more and more of what we do in life is looking at a screen, while either sitting or standing, and making a series of small hand gestures. The processes of writing, of producing an image, of getting from place to place are converging on a single physical act. As Marshall McLuhan pointed out, driving a car is very similar to watching TV, and making a movie is now very similar, as a set of physical movements, to watching one.

There is something vaguely unsatisfying about this.

Related, perhaps only in the sense of being extremely vague, is a sense that we may soon be mediating all, or at least many, of our conversations through AI tools. Have it punch up that email when you're too tired to write clearly. There is something I find disturbing about the idea of communication being constantly edited and punched up by a series of unrelated middlemen, *especially* in the current climate, where said middlemen are large impersonal monopolies who are dedicated to opaque, user-hostile practices.

Given all of the above, it is baffling and sometimes infuriating to me that the two most popular arguments against AI boil down to "Transformative works are theft and we need to restrict fair use even more!" and "It's bad to use technology to make art, technology is only for boring things!"

90 notes


Note

real actual nonhostile question with a preamble: i think a lot of artists consider NN-generated images as an existential threat to their ability to use art as a tool to survive under capitalism, and it's frequently kind of disheartening to think about what this is going to do to artists who rely on commissions / freelance storyboarding / etc. i don't really care whether or not nn-generated images are "true art" because like, that's not really important or worth pursuing as a philosophical question, but i also don't understand how (under capitalism) the rise of it is anything except a bleak portent for the future of artists

thanks for asking! i feel like it's good addressing the idea of the existential threat, the fears and feelings that artists have as to being replaced are real, but personally i am cynical as to the extent that people make it out to be a threat. and also i wanna say my piece in defense of discussions about art and meaning.

the threat of automation, and implementation of technologies that make certain jobs obsolete is not something new at all in labor history and in art labor history. industrial printing, stock photography, art assets, cgi, digital art programs, etc, are all technologies that have cut down on the number of art jobs that weren't something you could cut corners and labor off at one point. so why do neural networks feel like more of a threat? one thing is that they do what the metaphorical "make an image" button that has been used countless times in arguments on digital art programs does, so if the fake button that was made up to win an argument on the validity of digital art exists, then what will become of digital art? so people panic.

but i think that we need to be realistic as to what neural net image generation does. no matter how insanely huge the data pool they pull from is, the medium is, in the simplest terms, limited as to the arrangement of pixels that are statistically likely to be together given certain keywords, and we only recognize the output as symbols because of pattern recognition. a neural net doesn't know about gestalt, visual appeal, continuity, form, composition, etc. there are whole areas of the art industry that ai art serves especially badly, like sequential arts, scientific illustration, drafting, graphic design, etc. and regardless, neural nets are tools. they need human oversight to work, and to deal with the products generated. and because of the medium's limitations and inherent jankiness, it's less work to hire a human professional to just do a full job than to try and wrangle a neural net.

as for the areas of the art industry at risk of losing job opportunities to ai, like freelance illustration and concept art, they are seen as replaceable by an industry that already overworks, underpays, and treats them as disposable. with or without ai, artists work in precarized conditions without the protections of organized labor, even more so in the case of freelancers. the fault is not in ai itself, but in how it's wielded as a tool by capital to threaten workers. the current entertainment industry strikes are in part because of this, and if the new wga contract says anything, it's that a favorable outcome is possible. pressure capital to let go of the tools and question everyone who proposes increased copyright enforcement as the solution. intellectual property serves capital and not the working artist.

however, automation and ai implementation are not unique to the art industry. service workers, manufacturing workers and many others are also at risk of losing their jobs to further automation due to capital's interest in maximizing profits at the cost of human lives, but you don't see as much online outrage because they are seen as unskilled and uncreative. the artist is seen as having a prestige position in society: if creativity is what makes us human, the artist symbolizes this belief, so if automation comes for the artist then people feel like all is lost. but art is an industry like any other and artists are not of more intrinsic value than any manual laborer. the prestige position of the artist also makes artists act against their class interest by cooperating with corporations and promoting ip law (which is a bad thing. take the shitshow of the music industry for example), and artists feel owed upward social mobility for the perceived merits of creativity and artistic genius.

as an artist and a marxist i say we need to exercise thinking about art, meaning and the role of the artist. the average prompt writer churning out big titty thomas kinkade paintings posting on twitter on how human made art will become obsolete doesnt know how to think about art. art isn't about making pretty pictures, but is about communication. the average fanartist underselling their work doesn't know that either. discussions on art and meaning may look circular and frustrating if you come in bad faith, but it's what exercises critical thinking and nuance.

208 notes


Text

As someone who dual-uses Chrome & Firefox, and definitely found that Chrome's recent updates hit performance, I have been using Firefox a bit more heavily and man, Chrome is the better browser. It just has so many more ease-of-use features, from tab & audio management to settings customization, and its extension library is on average more robust than Firefox's (though both are fine in this regard).

I have the bespoke need of using automated translation & OCR tools for reading multilingual sources - hilariously, Google Image OCR (the best one out there) actually has stripped functionality on Firefox? Awful act of digital terrorism there on Google's part, but I live in the world I am given, I can't fix it. Chrome also has more accurate text recognition for page translation and smoother toggle options. For most other things Firefox vs Chrome is preference, but when I do anime research I literally have to use Chrome, the efficiency impact of Firefox is way too high.

So yeah I respect all the Firefox stans out there (I use both, after all), but if they want wider adoption they need to compete a bit better.

82 notes


Text

AI Tool Reproduces Ancient Cuneiform Characters with High Accuracy

ProtoSnap, developed by Cornell and Tel Aviv universities, aligns prototype signs to photographed clay tablets to decode thousands of years of Mesopotamian writing.

Cornell University researchers report that scholars can now use artificial intelligence to “identify and copy over cuneiform characters from photos of tablets,” greatly easing the reading of these intricate scripts.

The new method, called ProtoSnap, effectively “snaps” a skeletal template of a cuneiform sign onto the image of a tablet, aligning the prototype to the strokes actually impressed in the clay.

By fitting each character’s prototype to its real-world variation, the system can produce an accurate copy of any sign and even reproduce entire tablets.

“Cuneiform, like Egyptian hieroglyphs, is one of the oldest known writing systems and contains over 1,000 unique symbols. Its characters change shape dramatically across different eras, cultures and even individual scribes so that even the same character… looks different across time,” Cornell computer scientist Hadar Averbuch-Elor explains.

This extreme variability has long made automated reading of cuneiform a very challenging problem.

The ProtoSnap technique addresses this by using a generative AI model known as a diffusion model.

It compares each pixel of a photographed tablet character to a reference prototype sign, calculating deep-feature similarities.
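The matching step can be illustrated with a generic technique: given a feature vector for each location in the prototype and in the tablet photo, keep only the pairs that are each other's most cosine-similar match (mutual nearest neighbours). This is a hedged sketch, not ProtoSnap's code. In the real system the features come from a diffusion model; here they are tiny hand-made vectors, and `cosine` and `mutual_matches` are hypothetical helpers.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mutual_matches(proto_feats: list[list[float]],
                   tablet_feats: list[list[float]]) -> list[tuple[int, int]]:
    """Return (i, j) pairs where prototype location i and tablet location j are
    each other's most similar feature (mutual nearest neighbours)."""
    sim = [[cosine(p, t) for t in tablet_feats] for p in proto_feats]
    # Best tablet location for every prototype location, and vice versa.
    best_for_proto = [max(range(len(tablet_feats)), key=row.__getitem__) for row in sim]
    best_for_tablet = [max(range(len(proto_feats)), key=lambda i: sim[i][j])
                       for j in range(len(tablet_feats))]
    return [(i, j) for i, j in enumerate(best_for_proto) if best_for_tablet[j] == i]
```

Requiring the match to hold in both directions discards ambiguous pairings, which matters when worn clay makes many locations look alike.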

Once the correspondences are found, the AI aligns the prototype skeleton to the tablet’s marking and “snaps” it into place so that the template matches the actual strokes.

In effect, the system corrects for differences in writing style or tablet wear by deforming the ideal prototype to fit the real inscription.
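As a simplified illustration of "snapping" a template onto observed strokes, the sketch below fits a single global affine transform (x' = a·x + b·y + c, y' = d·x + e·y + f) to matched point pairs by least squares. This is a generic stand-in, not ProtoSnap's procedure: one affine map captures scaling, rotation, shear and shift, but not the local, per-stroke deformation the article describes. `fit_affine` and the sample points are hypothetical.

```python
def _det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m: list[list[float]], rhs: list[float]) -> list[float]:
    """Solve the 3x3 linear system m @ v = rhs with Cramer's rule."""
    det = _det3(m)
    if abs(det) < 1e-12:
        raise ValueError("points are degenerate (e.g. fewer than 3 or all collinear)")
    out = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        out.append(_det3(mc) / det)
    return out


def fit_affine(src: list[tuple[float, float]],
               dst: list[tuple[float, float]]) -> tuple[float, ...]:
    """Least-squares affine map sending src points (prototype) onto dst points (tablet).
    Returns (a, b, c, d, e, f) with x' = a*x + b*y + c and y' = d*x + e*y + f."""
    # Normal equations (A^T A) p = A^T t, where each row of A is [x, y, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atx = [0.0] * 3
    aty = [0.0] * 3
    for (x, y), (xp, yp) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atx[i] += row[i] * xp
            aty[i] += row[i] * yp
    a, b, c = _solve3(ata, atx)
    d, e, f = _solve3(ata, aty)
    return a, b, c, d, e, f
```

With correspondences like the mutual matches from the previous step as input, the fitted map moves the whole prototype onto the tablet; a finer, local adjustment would then be needed to follow individual strokes.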

Crucially, the corrected (or “snapped”) character images can then train other AI tools.

The researchers used these aligned signs to train optical-character-recognition models that turn tablet photos into machine-readable text.

They found the models trained on ProtoSnap data performed much better than previous approaches at recognizing cuneiform signs, especially the rare ones or those with highly varied forms.

In practical terms, this means the AI can read and copy symbols that earlier methods often missed.

This advance could save scholars enormous amounts of time.

Traditionally, experts painstakingly hand-copy each cuneiform sign on a tablet.

The AI method can automate that process, freeing specialists to focus on interpretation.

It also enables large-scale comparisons of handwriting across time and place, something too laborious to do by hand.

As Tel Aviv University archaeologist Yoram Cohen says, the goal is to “increase the ancient sources available to us by tenfold,” allowing big-data analysis of how ancient societies lived – from their religion and economy to their laws and social life.

The research was led by Hadar Averbuch-Elor of Cornell Tech and carried out jointly with colleagues at Tel Aviv University.

Graduate student Rachel Mikulinsky, a co-first author, will present the work – titled “ProtoSnap: Prototype Alignment for Cuneiform Signs” – at the International Conference on Learning Representations (ICLR) in April.

In all, roughly 500,000 cuneiform tablets are stored in museums worldwide, but only a small fraction have ever been translated and published.

By giving AI a way to automatically interpret the vast trove of tablet images, the ProtoSnap method could unlock centuries of untapped knowledge about the ancient world.

#protosnap#artificial intelligence#a.i#cuneiform#Egyptian hieroglyphs#prototype#symbols#writing systems#diffusion model#optical-character-recognition#machine-readable text#Cornell Tech#Tel Aviv University#International Conference on Learning Representations (ICLR)#cuneiform tablets#ancient world#ancient civilizations#technology#science#clay tablet#Mesopotamian writing

5 notes

·

View notes

Text

“Northrop Grumman’s HORNET system leverages the latest advances in real-time coupling of human brain activity with automated cognitive neural processing to provide superior target detection,” says Michael House, Northrop Grumman’s CT2WS program manager. “The system will maintain persistent surveillance in order to defeat an enemy’s attempts to surprise through evasive move-stop-move tactics, giving the U.S. warfighter as much as a 20-minute advantage over his adversaries.”

The subconscious mind is hypersensitive to visual images. The system will take advantage of that by using non-invasive electro-encephalogram (EEG) measurement sensors inside the warfighter’s helmet and attached to his scalp, House says.

A soldier’s subconscious may detect a threat his conscious mind is not registering, House says. The EEG sensors will read the soldier’s neural responses to potential threats, and then the readings will educate the system’s algorithms. For example, the algorithms will identify when the warfighter’s subconscious is detecting a moving target at 5 kilometers or at 10 kilometers, House continues. As missions and threats evolve, the algorithms will be refined.

One of the main goals of the Hornet is to reduce the false alarm rate for target acquisition, House says. The human brain’s visual process has very few false alarms, he adds. This should also significantly improve the range of standard binoculars. Lowering false-alarm rates is crucial for HORNET’s intended applications, which include improvised explosive device (IED) detection and destruction, aided target recognition, and border surveillance for homeland security scenarios."

5 notes

·

View notes

Text

i am not against ai as a concept, it is extremely useful in some areas -

i personally know of a team of researchers who train ai to identify types of blood cells - it is amazing progress for laboratory diagnostics - cell count in blood can be a great first indicator of a person's general condition, but blood is such a tricky material;

To keep it in a state close to the human body environment even for a couple of hours, one needs to take a lot of special measures (vacuum containers with anticoagulants for some analytes etc.), and even then a blood sample will mostly deteriorate beyond recognition within 24 hours.

There already are automated systems that will count blood cells using spectrophotometry and extrapolation, but in harder cases human eyes and a microscope are your best bet. The eye strain is unbelievable though, and at my previous workplace it was ~500 samples a day per person; i really didn't envy my colleagues in that department.

So, to have an instrument trained specifically by experts to identify cells faster and count them better than with spectrometry and math, sparing actual real people from eye problems - GOOD.
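For a sense of what automated counting involves at its very simplest, here is a sketch that counts blobs in a thresholded (binary) microscope image by labelling 4-connected components with a flood fill. This is a classic image-processing baseline, not the AI approach the research team uses, which would also have to classify cell types and separate touching cells. The mask and `count_cells` are illustrative.

```python
from collections import deque


def count_cells(mask: list[list[int]], min_size: int = 1) -> int:
    """Count 4-connected blobs of 1s in a binary mask, ignoring blobs smaller than min_size."""
    rows, cols = len(mask), len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                # Breadth-first flood fill to visit and measure this blob.
                size = 0
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    size += 1
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if size >= min_size:  # drop specks of noise
                    count += 1
    return count
```

The `min_size` filter hints at why hard cases end up with a human: debris, clumped cells and unusual shapes all break the assumption that one blob equals one cell.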

And it will not endanger those real people's jobs - there are many kinds of lab tests in that field that require human expertise and pathological cases will require human evaluation still.

But why the fuck would someone use ai for creativity?! That baffles me to no end.

Also it feels like such a violation to make ai images/texts in "someone's style":/ what if said artist absolutely hated the topic that was prompted? ugh

Anyways, the idea of ai generated books/images just makes my skin crawl.

Also I've just installed a windows xp program on win 11, so my decades old scanner won't go obsolete, and I am very proud of myself¯\_(ツ)_/¯

31 notes

·

View notes

Text

A Sims 4 Horse Ranch* review, by a Simmer who's not all that into horses

* Software not final. Sponsored by EA.

First, many thanks to EA/Maxis for the early access! This was a treat for me, to get access to an early build to try out for a while, as I'm not exactly a high-profile streamer. Or a streamer at all. Or even a creator (though I'll upload a household I came to love to the Gallery when I get a chance!). But I do love this game! And I love that I can help more of you play it the way you want to. Anyway, the review…

What I liked!

The great range of build/buy! I'll get LOTS of use out of this. It complements some other packs well too.

There are lots of helpful rooms in build mode for fast stables and nectar-related spaces! As a non-builder who sometimes tries to build, I was really happy to have a premade horse stall for my lot.

[image: a pre-built stable room in Build mode]

There's lots of Teen–Elder clothing and hair, and I love the dirty clothes swatches. I'll get LOTS of use out of this pack's CAS! And it will go well with styles from some other packs, too.

The new Afro-textured hairs are a welcome recognition that the cowboy culture of the Old West was not a White culture — there were lots of Black, Hispanic, and Indigenous cowboys and entrepreneurial women in the Old West!

I deeply appreciate the Indigenous content in build/buy, recipes, and CAS — I could see lots there in CAS, for example, that the Navajo people I saw and met in Utah and northern New Mexico (which is a part of the world I really want to go back to) wore IRL.

Lots range from fairly small (15 x 20) to quite large. I appreciate the range as someone who isn't a fan of building on large lots when I do build. There are horse practice areas in the land around some small lots, so you can still keep a horse there.

For Strangerville owners who love that landscape (which I do!), there's now somewhere for that valley, with its smaller population, to be "near". I can imagine that you'd drive up into a range from the new world and drop down into hidden Strangerville. Driving the other direction might take you to Oasis Springs.

The horse-riding and other horse animations are really detailed and fluid. The horses interact a lot with each other, too. Parent and child horses even seemed to recognize their relationship.

[image: a mare lovingly nuzzling her foal]

The sheep and goats are endlessly adorable. Plus profitable! :D And they can sleep in your house!

The rabbithole adventure location out in the countryside plays very differently from previous ones! And it has great sound effects, so play it with the volume up! It's hard to find, though. But it was nice not to need other tricks to get access. (I still have not done the secret places in Oasis Springs or the Outdoor Retreat pack!)

What I didn't like:

No new fridge, stove, bathtub, or toilet (I do like getting more of those!)

Very few boys' clothes for Children. And no chaps for Children, even though they can ride and even though Toddlers got some.

I would have liked a higher-tech/automated version of a nectar maker. However, this isn't a feature I care about much anyway.

You can't breed the mini goats and sheep, and there aren't even smaller baby ones. I'd have liked to have a full-on sheep farm. I like sheep. (I can practically see my husband glaring about how much I like sheep, even though he's waaaaay far away at the office right now.)

[image: a smiling, leaping mini-sheep, with a spotted goat behind it]

I definitely would never have found the countryside rabbithole without help, but maybe you will? If I could figure out how to do spoilers here, I would...

The horse-toy balls are a little… glowy? for my taste. They also weren't where I looked for them in the catalog, so you'll want to use search for them.

Cross-pack things?

I'd have liked to check out these before now, but with the pre-patch builds, that's not an option, and I'm always kind of busy when patches come out! For example ...

How do cats and dogs interact with horses, sheep, and goats?

Can horses be familiars for spellcasters?

Are there new Milestones?

Are there new Lifestyles?

Are there new Club rules, and are there enough of those?

This Simmer needs to know! (And eventually will.)

Neat things to know!

There's a rabbithole building in town where you can change or plan outfits like a dresser! And just off the main road in town there's a rabbithole building where you can buy goats, sheep, and groceries and other useful things, like horse age-up treats. I liked having an alternative way to buy these things and a whole new way to plan outfits. I'd be happy to see more of that. If I can't go into a building, I might as well at least be able to pretend I can. As long as future worlds don't end up stuffed with rabbitholes in place of gameplay (actually watching horse competitions would have been nice!).

You can use a Community Board in town (or from B/B if you want one on your lot) to take local one-off jobs for money. Most need you to own a Horse or some sheep or goats. There are lots of ways to make money as a rancher without needing someone in the household to have a job. My relatives who are farmers will be seriously jealous.

Get to know that Crinkletop guy! He's very useful.

Bugs? Bugs!

Things to watch out for that were issues for me in the early-access build, which is NOT the release build, so hopefully it's a bit better:

Ranch dancing is EXTREMELY popular. You might want to not keep a radio at home until the new dancing has a mod to … moderate it. Or is tuned down by the devs. But I do like it when I'm in control!

[image: five Sims ranch dancing (line dancing) as a group, including Don Lothario and Eliza Pancakes]

The beautiful stone fireplace was also very, very popular, and of course potentially deadly. Watch out for that.

Ranch hands are NOT reliable. They might stay really, really late. They might stop showing up after a couple days. They might forget the things you instructed them NOT to do the day before. They might be super into kicking the garbage bin over. Keep an eye on your ranch hand. At least until the day, someday, when they get fixed. I'm hoping this is also moddable for those of us on PC.

At one point I had a weird bug where my Sim decided she would NOT eat. The rest of the household could eat. Guests could eat. They could eat HER food. It was fixed by going to the world map and back into the household, so I didn't find out if she was going to just starve to death.

I couldn't find some of the new CAS at first because some men's outfits were under "jumpsuit" for no apparent reason. So, if you're looking for some cool outerwear, try "jumpsuits." Hopefully it was recategorized for the release build!

And that's it! I'm happy to answer questions!

#sims 4 horse ranch#the sims 4 horse ranch#ts4 horse ranch#sponsored by EA#the sims 4#ts4#sims 4#simblr

143 notes

Text

🦾 A006R - Robotic X-ray Inspection of Cast Aluminium Automotive Wheels Robot Arm 6-axis with special gripper - BarCode inline recognition ISAR image evaluation software - XEye detector X-ray inspection... via HeiDetect HEITEC PTS and MetrologyNews ▸ TAEVision Engineering on Pinterest

Data A006R - Jul 24, 2023

#automation#robot#robotics#robot arm 6-axis#special gripper#BarCode inline recognition#ISAR image evaluation software#XEye detector#X-ray Inspection#via HeiDetect#HEITEC PTS#MetrologyNews

1 note

Text

You’re cheering a win — while gangrene spreads

Some of you are celebrating lawsuits against Midjourney, thinking they’ll restore the good old days of commissions and human-made fanart.

But let’s walk through how this really unfolds — for you.

🔍 1. Character Recognition Models Are Coming

Big IP holders already have extensive visual and text datasets of their characters.

It’s only a matter of time before they deploy detection models trained specifically to recognize deviations and unauthorized reinterpretations — from sexualization to gender-swaps.

📡 2. Platforms Will Be Forced to Integrate IP-Scanning APIs

No need to chase individual fanartists. Platforms will be required to connect to centralized scanning tools — designed and controlled by rights holders.

Upload an image → the API flags it → blocked or quietly deleted. No public drama, just silence. Or shadowban.

🧾 3. One Lawsuit Is All It Takes

Companies don’t need to send cease-and-desists to everyone. They target infrastructure.

Once a platform is legally pressured or threatened with deplatforming (remember Tumblr in 2018?), it will comply — automatically.

They won’t manually check if your blog is cute, transformative, or queer.

They’ll just press delete.

🖼️ 4. It Won’t Matter If It’s AI or Human

Once automated detection is in place, the question of who made the image becomes irrelevant.

If it looks like protected IP — it’s treated as infringement.

📉 5. The 2018 Tumblr Purge Was Just a Dress Rehearsal

When Apple removed Tumblr from the App Store over child exploitation concerns, the platform overreacted — purging thousands of blogs in a broad NSFW sweep.

No due process. No clear appeals. Just mass deletion.

Tumblr has done this before. And that was before AI.

🧠 And here’s the part you’re missing:

This isn’t just about AI art.

The same infrastructure that blocks machine-made fanart can — and will — block yours.

You’re not cheering for justice.

You’re cheering like a patient who’s glad their infected finger was cut off — not realizing the blood is already carrying sepsis to the lungs.

#anti ai#ai#pro ai#disney#midjourney#ai discussion#ai discourse#digital culture#copyright#fanart#fandom culture#artists on tumblr#creative freedom#ao3 writer#ao3 author#2018 tumblr#tumblr purge#important read

2 notes

Text

The Ultimate Guide to Online Media Tools: Convert, Compress, and Create with Ease

In the fast-paced digital era, online tools have revolutionized the way we handle multimedia content. From converting videos to compressing large files, and even designing elements for your website, there's a tool available for every task. Whether you're a content creator, a developer, or a business owner, having the right tools at your fingertips is essential for efficiency and creativity. In this blog, we’ll explore the most powerful online tools like Video to Audio Converter Online, Video Compressor Online Free, Postman Online Tool, Eazystudio, and Favicon Generator Online—each playing a unique role in optimizing your digital workflow.

1. Video to Audio Converter Online – Extract Sound in Seconds

Ever wanted just the audio from a video? Maybe you’re looking to pull music, dialogue, or sound effects for a project. That’s where a Video to Audio Converter Online comes in handy. These tools let you convert video files (MP4, AVI, MOV, etc.) into MP3 or WAV audio files in just a few clicks. No software installation required.

Using a Video to Audio Converter Online is ideal for:

Podcast creators pulling sound from interviews.

Music producers isolating tracks for remixing.

Students or professionals transcribing lectures or meetings.

The beauty lies in its simplicity: upload the video, choose your audio format, and download. It's as straightforward as that.
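It is an assumption that any particular online converter works this way, but many are commonly built around a media toolkit such as FFmpeg, and the same "drop the video, keep the audio" job can be done locally. The sketch below assumes FFmpeg is installed and on your PATH; `build_extract_audio_cmd` and `extract_audio` are hypothetical helper names. It only uses standard FFmpeg options (`-i` for input, `-vn` to discard video, `-c:a` to pick the audio encoder).

```python
from __future__ import annotations

import shutil
import subprocess
from pathlib import Path

# Audio encoder options per output format (standard FFmpeg encoder names).
# libmp3lame is only available if your FFmpeg build includes it.
AUDIO_CODECS = {
    "mp3": ["-c:a", "libmp3lame", "-q:a", "2"],  # LAME VBR quality 2 (~170-210 kbps)
    "wav": ["-c:a", "pcm_s16le"],                # uncompressed 16-bit PCM
}


def build_extract_audio_cmd(video_path: str, audio_format: str = "mp3") -> list[str]:
    """Return an FFmpeg argument list that drops the video stream and keeps the audio."""
    fmt = audio_format.lower()
    if fmt not in AUDIO_CODECS:
        raise ValueError(f"unsupported audio format: {audio_format!r}")
    out_path = str(Path(video_path).with_suffix("." + fmt))
    # -y overwrites an existing output file; -vn disables the video stream.
    return ["ffmpeg", "-y", "-i", video_path, "-vn", *AUDIO_CODECS[fmt], out_path]


def extract_audio(video_path: str, audio_format: str = "mp3") -> str:
    """Run FFmpeg locally and return the output path; raises if FFmpeg fails."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    cmd = build_extract_audio_cmd(video_path, audio_format)
    subprocess.run(cmd, check=True, capture_output=True)
    return cmd[-1]


if __name__ == "__main__":
    print(build_extract_audio_cmd("interview.mp4"))
```

Separating "build the command" from "run it" keeps the logic easy to check without FFmpeg present; `extract_audio("lecture.mov", "wav")` would then write `lecture.wav` next to the source file.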

2. Video Compressor Online Free – Reduce File Size with Minimal Quality Loss

Large video files are a hassle to share or upload. Whether you're sending via email, uploading to a website, or storing in the cloud, a bulky file can be a roadblock. This is where a Video Compressor Online Free service shines.

Key benefits of using a Video Compressor Online Free:

Shrink video size with little visible loss in quality.

Fast, browser-based compression with no downloads.

Compatible with all major formats (MP4, AVI, MKV, etc.).

If you're managing social media content, YouTube uploads, or email campaigns, compressing videos ensures faster load times and better performance—essential for keeping your audience engaged.
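Most video compressors trade a little quality for a lot of size by re-encoding with a modern codec. As a rough local equivalent (assuming FFmpeg with libx264 is installed; the helper names are hypothetical), the sketch below re-encodes to H.264 using a constant rate factor (CRF): a higher CRF gives a smaller file and lower quality. It also includes a small helper that reports the percentage saved.

```python
from __future__ import annotations

from pathlib import Path


def build_compress_cmd(src: str, crf: int = 28, preset: str = "medium",
                       audio_kbps: int = 128) -> list[str]:
    """Return an FFmpeg command that re-encodes `src` as H.264 + AAC in an MP4.

    libx264 accepts CRF 0-51 (default 23); roughly 17-28 is the commonly
    used range. Slower presets compress better at the same CRF.
    """
    if not 0 <= crf <= 51:
        raise ValueError("crf must be between 0 and 51 for libx264")
    p = Path(src)
    dst = str(p.with_name(f"{p.stem}_compressed.mp4"))
    return [
        "ffmpeg", "-y", "-i", src,
        "-c:v", "libx264", "-crf", str(crf), "-preset", preset,
        "-c:a", "aac", "-b:a", f"{audio_kbps}k",
        "-movflags", "+faststart",  # put metadata first so web playback can start early
        dst,
    ]


def percent_saved(original_bytes: int, compressed_bytes: int) -> float:
    """Percentage of the original size removed by compression, to one decimal."""
    return round(100 * (1 - compressed_bytes / original_bytes), 1)


if __name__ == "__main__":
    print(build_compress_cmd("clip.mov"))
    print(percent_saved(200_000_000, 50_000_000))  # a 200 MB file cut to 50 MB
```

Passing the command list to `subprocess.run(..., check=True)` would perform the actual encode; tuning `crf` is the main knob for the size/quality trade-off.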

3. Postman Online Tool – Streamline Your API Development

Developers around the world swear by Postman, and the Postman Online Tool brings that power to the cloud. This tool is essential for testing APIs, monitoring responses, and managing endpoints efficiently—all without leaving your browser.

Features of Postman Online Tool include:

Send GET, POST, PUT, DELETE requests with real-time response visualization.

Organize your API collections for collaborative development.

Automate testing and environment management.

Whether you're debugging or building a new application, the Postman Online Tool provides a robust platform that simplifies complex API workflows, making it a must-have in every developer's toolkit.
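Postman is a GUI application whose test scripts are written in JavaScript, so the code below is not Postman's API. It is a plain-Python sketch, using only the standard library, of what one Postman request plus a test script does: send a GET/POST/PUT/DELETE request, parse the JSON response, and assert on it. The local echo server, `start_echo_server`, and `send_request` are hypothetical stand-ins so the example runs without any external service.

```python
from __future__ import annotations

import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    """Demo-only API that echoes back the method, path, and JSON body."""

    def _reply(self):
        length = int(self.headers.get("Content-Length", 0))
        raw = self.rfile.read(length) if length else b""
        payload = json.dumps({
            "method": self.command,
            "path": self.path,
            "body": json.loads(raw) if raw else None,
        }).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    # Route every verb we demo to the same echo logic.
    do_GET = do_POST = do_PUT = do_DELETE = _reply

    def log_message(self, *args):  # silence per-request logging
        pass


def start_echo_server() -> tuple[HTTPServer, str]:
    """Start the echo server on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), EchoHandler)  # port 0: OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    return server, f"http://{host}:{port}"


def send_request(method: str, url: str, body: dict | None = None) -> tuple[int, dict]:
    """Send one request and return (status code, parsed JSON response)."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(url, data=data, method=method)
    if data is not None:
        req.add_header("Content-Type", "application/json")
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status, json.loads(resp.read())


if __name__ == "__main__":
    server, base = start_echo_server()
    status, payload = send_request("POST", base + "/users", {"name": "Ada"})
    # The kind of checks a Postman test script would run:
    assert status == 200
    assert payload["body"] == {"name": "Ada"}
    server.shutdown()
    server.server_close()
```

Pointing `send_request` at a real endpoint instead of the echo server gives a scriptable, version-controllable version of a saved request, which is roughly what a Postman collection run automates.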

4. Eazystudio – Your Creative Powerhouse

When it comes to content creation and design, Eazystudio is a versatile solution for both beginners and professionals. From editing videos and photos to crafting promotional content, Eazystudio makes it incredibly easy to create high-quality digital assets.

Highlights of Eazystudio:

User-friendly interface for designing graphics, videos, and presentations.

Pre-built templates for social media, websites, and advertising.

Cloud-based platform with drag-and-drop functionality.

Eazystudio is perfect for marketers, influencers, and businesses looking to stand out online. You don't need a background in graphic design—just an idea and a few clicks.

5. Favicon Generator Online – Make Your Website Look Professional

A small icon can make a big difference. The Favicon Generator Online helps you create favicons—the tiny icons that appear next to your site title in a browser tab. They enhance your website’s branding and improve user recognition.

With a Favicon Generator Online, you can:

Convert images (JPG, PNG, SVG) into favicon.ico files.

Generate multiple favicon sizes for different platforms and devices.

Instantly preview how your favicon will look in a browser tab or bookmark list.

For web developers and designers, using a Favicon Generator Online is an easy yet impactful way to polish a website and improve brand presence.
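Under the hood, a favicon.ico file is a small container that holds the same icon at several sizes. The stdlib-only sketch below shows that structure: a 6-byte header, one 16-byte directory entry per image, then the image data. It assumes PNG-encoded frames, which Windows Vista and later and current browsers accept inside .ico files (very old software may expect BMP frames instead). `solid_png` and `pack_ico` are hypothetical names, and the single-colour PNG is demo input standing in for your real logo.

```python
from __future__ import annotations

import struct
import zlib


def solid_png(size: int, rgba: tuple[int, int, int, int]) -> bytes:
    """Encode a size x size single-colour RGBA PNG (demo input only)."""
    def chunk(tag: bytes, data: bytes) -> bytes:
        return (struct.pack(">I", len(data)) + tag + data
                + struct.pack(">I", zlib.crc32(tag + data) & 0xFFFFFFFF))

    row = b"\x00" + bytes(rgba) * size  # filter type 0, then RGBA pixels
    ihdr = struct.pack(">IIBBBBB", size, size, 8, 6, 0, 0, 0)  # 8-bit, colour type 6 (RGBA)
    return (b"\x89PNG\r\n\x1a\n" + chunk(b"IHDR", ihdr)
            + chunk(b"IDAT", zlib.compress(row * size)) + chunk(b"IEND", b""))


def pack_ico(pngs: dict[int, bytes]) -> bytes:
    """Bundle PNG images, keyed by pixel size, into one .ico file.

    A width/height of 256 is stored as 0, as the ICO format requires.
    """
    sizes = sorted(pngs)
    header = struct.pack("<HHH", 0, 1, len(sizes))  # reserved, type 1 = icon, image count
    offset = 6 + 16 * len(sizes)                    # image data starts after the directory
    entries, blobs = b"", b""
    for s in sizes:
        if not 1 <= s <= 256:
            raise ValueError("ICO images must be 1-256 px")
        data = pngs[s]
        dim = 0 if s == 256 else s
        # width, height, palette size, reserved, colour planes, bits per pixel,
        # size of image data, offset of image data
        entries += struct.pack("<BBBBHHII", dim, dim, 0, 0, 1, 32, len(data), offset)
        blobs += data
        offset += len(data)
    return header + entries + blobs
```

For example, `pack_ico({s: solid_png(s, (30, 120, 200, 255)) for s in (16, 32, 48)})` returns the bytes of a three-size icon that can be written to `favicon.ico`. Online generators add the image resizing step in front of this packing.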

Why These Tools Matter in 2025

The future is online. As remote work, digital content creation, and cloud computing continue to rise, browser-based tools will become even more essential. Whether it's a Video to Audio Converter Online that simplifies sound editing, a Video Compressor Online Free for seamless sharing, or a robust Postman Online Tool for development, these platforms boost productivity while cutting down on time and costs.

Meanwhile, platforms like Eazystudio empower anyone to become a designer, and tools like Favicon Generator Online ensure your brand always makes a professional first impression.

Conclusion

The right tools can elevate your workflow, save you time, and improve the quality of your digital output. Whether you're managing videos, developing APIs, or enhancing your website’s design, tools like Video to Audio Converter Online, Video Compressor Online Free, Postman Online Tool, Eazystudio, and Favicon Generator Online are indispensable allies in your digital toolbox.

So why wait? Start exploring these tools today and take your digital productivity to the next level.

2 notes

Text

Best AI Training in Electronic City, Bangalore – Become an AI Expert & Launch a Future-Proof Career!

Artificial Intelligence (AI) is reshaping industries and driving the future of technology. Whether it's automating tasks, building intelligent systems, or analyzing big data, AI has become a key career path for tech professionals. At eMexo Technologies, we offer a job-oriented AI Certification Course in Electronic City, Bangalore tailored for both beginners and professionals aiming to break into or advance within the AI field.

Our training program provides everything you need to succeed—core knowledge, hands-on experience, and career-focused guidance—making us a top choice for AI Training in Electronic City, Bangalore.

🌟 Who Should Join This AI Course in Electronic City, Bangalore?

This AI Course in Electronic City, Bangalore is ideal for:

Students and Freshers seeking to launch a career in Artificial Intelligence

Software Developers and IT Professionals aiming to upskill in AI and Machine Learning

Data Analysts, System Engineers, and tech enthusiasts moving into the AI domain

Professionals preparing for certifications or transitioning to AI-driven job roles

With a well-rounded curriculum and expert mentorship, our course serves learners across various backgrounds and experience levels.

📘 What You Will Learn in the AI Certification Course

Our AI Certification Course in Electronic City, Bangalore covers the most in-demand tools and techniques. Key topics include:

Foundations of AI: Core AI principles, machine learning, deep learning, and neural networks

Python for AI: Practical Python programming tailored to AI applications

Machine Learning Models: Learn supervised, unsupervised, and reinforcement learning techniques

Deep Learning Tools: Master TensorFlow, Keras, OpenCV, and other industry-used libraries

Natural Language Processing (NLP): Build projects like chatbots, sentiment analysis tools, and text processors

Live Projects: Apply knowledge to real-world problems such as image recognition and recommendation engines

All sessions are conducted by certified professionals with real-world experience in AI and Machine Learning.

🚀 Why Choose eMexo Technologies – The Best AI Training Institute in Electronic City, Bangalore

eMexo Technologies is not just another AI Training Center in Electronic City, Bangalore—we are your AI career partner. Here's what sets us apart as the Best AI Training Institute in Electronic City, Bangalore:

✅ Certified Trainers with extensive industry experience

✅ Fully Equipped Labs and hands-on real-time training

✅ Custom Learning Paths to suit your individual career goals

✅ Career Services like resume preparation and mock interviews

✅ AI Training Placement in Electronic City, Bangalore with 100% placement support

✅ Flexible Learning Modes including both classroom and online options

We focus on real skills that employers look for, ensuring you're not just trained—but job-ready.

🎯 Secure Your Future with the Leading AI Training Institute in Electronic City, Bangalore

The demand for skilled AI professionals is growing rapidly. By enrolling in our AI Certification Course in Electronic City, Bangalore, you gain the tools, confidence, and guidance needed to thrive in this cutting-edge field. From foundational concepts to advanced applications, our program prepares you for high-demand roles in AI, Machine Learning, and Data Science.

At eMexo Technologies, our mission is to help you succeed—not just in training but in your career.

📞 Call or WhatsApp: +91-9513216462

📧 Email: [email protected]

🌐 Website: https://www.emexotechnologies.com/courses/artificial-intelligence-certification-training-course/

Seats are limited – Enroll now in the most trusted AI Training Institute in Electronic City, Bangalore and take the first step toward a successful AI career.

🔖 Popular Hashtags

#AITrainingInElectronicCityBangalore#AICertificationCourseInElectronicCityBangalore#AICourseInElectronicCityBangalore#AITrainingCenterInElectronicCityBangalore#AITrainingInstituteInElectronicCityBangalore#BestAITrainingInstituteInElectronicCityBangalore#AITrainingPlacementInElectronicCityBangalore#MachineLearning#DeepLearning#AIWithPython#AIProjects#ArtificialIntelligenceTraining#eMexoTechnologies#FutureTechSkills#ITTrainingBangalore#Youtube

3 notes