#artificial intelligences

Text

no i don't want to use your ai assistant. no i don't want your ai search results. no i don't want your ai summary of reviews. no i don't want your ai feature in my social media search bar (???). no i don't want ai to do my work for me in adobe. no i don't want ai to write my paper. no i don't want ai to make my art. no i don't want ai to edit my pictures. no i don't want ai to learn my shopping habits. no i don't want ai to analyze my data. i don't want it i don't want it i don't want it i don't fucking want it i am going to go feral and eat my own teeth stop itttt

#i don't want it!!!!#ai#artificial intelligence#there are so many positive uses for ai#and instead we get ai google search results that make me instantly rage#diz says stuff

118K notes

Text

There was a paper in 2016 exploring how an ML model was differentiating between wolves and dogs with really high accuracy. They found that, for whatever reason, the model seemed to *really* like looking at snow in images, as in that's what it paid attention to most.

Then it hit them. *oh.*

*all the images of wolves in our dataset have snow in the background*

*this little shit figured it was easier to just learn how to detect snow than to actually learn the difference between huskies and wolves. because snow = wolf*

Shit like this happens *so often*. People think training models is like this exact coding programmer hackerman thing when it's more like corralling a bunch of sentient crabs that can do calculus but like at the end of the day they're still fucking crabs.
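
For anyone who wants to see the snow = wolf shortcut in miniature, here is a toy sketch. It is not the paper's model or data: the rows, the feature names `has_snow` and `looks_wolfish`, and the one-feature "stump" learner are all made up for illustration. The learner just picks whichever single feature scores best on the training set, which is roughly the lazy behavior described above.

```python
# Toy illustration of shortcut learning. All data and feature names are hypothetical.
# Each "image" is (has_snow, looks_wolfish, label), where label 1 = wolf, 0 = husky.
train = [
    (1, 1, 1), (1, 1, 1), (1, 0, 1), (1, 1, 1),  # wolves, always photographed in snow
    (0, 0, 0), (0, 0, 0), (0, 1, 0), (0, 0, 0),  # huskies, never photographed in snow
]
# The test set breaks the correlation: huskies in snow, wolves on grass.
test = [
    (1, 0, 0), (1, 0, 0), (0, 1, 1), (0, 1, 1),
]

FEATURES = {"has_snow": 0, "looks_wolfish": 1}


def accuracy(data, feature_index):
    """Accuracy of the rule 'predict wolf exactly when the feature is 1'."""
    correct = sum(1 for row in data if row[feature_index] == row[2])
    return correct / len(data)


def fit_stump(data):
    """Pick the single feature with the best training accuracy."""
    return max(FEATURES, key=lambda name: accuracy(data, FEATURES[name]))


chosen = fit_stump(train)
train_acc = accuracy(train, FEATURES[chosen])
test_acc = accuracy(test, FEATURES[chosen])
print(chosen, train_acc, test_acc)
```

On this made-up data the learner picks `has_snow`, because snow separates the training set perfectly while the "real" feature is only right most of the time. Once the huskies wander into the snow, the shortcut falls apart.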

22K notes

Text

A new tool lets artists add invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

The tool, called Nightshade, is intended as a way to fight back against AI companies that use artists’ work to train their models without the creator’s permission. Using it to “poison” this training data could damage future iterations of image-generating AI models, such as DALL-E, Midjourney, and Stable Diffusion, by rendering some of their outputs useless—dogs become cats, cars become cows, and so forth. MIT Technology Review got an exclusive preview of the research, which has been submitted for peer review at computer security conference Usenix.

AI companies such as OpenAI, Meta, Google, and Stability AI are facing a slew of lawsuits from artists who claim that their copyrighted material and personal information was scraped without consent or compensation. Ben Zhao, a professor at the University of Chicago, who led the team that created Nightshade, says the hope is that it will help tip the power balance back from AI companies towards artists, by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property. Meta, Google, Stability AI, and OpenAI did not respond to MIT Technology Review’s request for comment on how they might respond.

Zhao’s team also developed Glaze, a tool that allows artists to “mask” their own personal style to prevent it from being scraped by AI companies. It works in a similar way to Nightshade: by changing the pixels of images in subtle ways that are invisible to the human eye but manipulate machine-learning models to interpret the image as something different from what it actually shows.

#Ben Zhao and his team are absolute heroes#artificial intelligence#plagiarism software#more rambles#glaze#nightshade#ai theft#art theft#gleeful dancing

22K notes

Text

ai makes everything so boring. deepfakes will never be as funny as clipping together presidential speeches. ai covers will never be as funny as imitating the character. ai art will never be as good as art drawn by humans. ai chats will never be as good as roleplaying with other people. ai writing will never be as good as real authors

#zylo's posts#ai#ai art#artificial intelligence#chatgpt#chatbots#ai generated#ai technology#ai tools#edit: 10k what the fuck

28K notes

Text

After 146 days, the Writers' Strike has ended with a resounding success. Despite constant attempts by the studios to threaten, gaslight, and otherwise divide the WGA, union members stood strong and held fast to their demands. The result is a historic win guaranteeing not only pay increases and residual guarantees, but also some of the first serious restrictions on the use of AI in a major industry.

This win is going to have a ripple effect not only throughout Hollywood but in all industries threatened by AI and wage reduction. Studio executives tried to insist that job replacement through AI is inevitable and that wage increases for staff members are not financially viable. By refusing to give in for almost five long months, the writers showed all of the US, and frankly the world, that that isn't true.

Organizing works. Unions work. Collective bargaining is how we bring about a better future for ourselves and the next generation, and the WGA proved that today. Congratulations, Writers Guild of America. #WGAstrong!!!

#gingerswagfreckles#wga#writer's strike#wga strong#wga strike#do the write thing#sag#sag aftra#sag afta strike#unions#Hollywood#according to the news the strike isnt technically over until a vote can be ratified on Tuesday but in practice its over#pickets r called off immediately amd they got almlst everything theu wanted#so its gonna be ratified for sure#current events#news#AI#artificial intelligence

38K notes

Text

Fans' attitudes toward AI-generated works

Irissa Cisternino, a PhD candidate at Stony Brook University, is conducting research on topics related to technology, art, and fandom. You can participate by filling out a survey and, additionally, signing up for an interview. The survey is expected to stay open until at least the end of April; those who sign up for the interview will be contacted later. To participate in either, you need to be at least 18 years old, be able to understand and speak English, and identify as a fan.

Once the research is complete, it will be available as the researcher's dissertation. If you have further questions, you can contact Irissa Cisternino at [email protected] or Lu-Ann Kozlowsky at [email protected].

14K notes

Text

UPDATE! REBLOG THIS VERSION!

#reaux speaks#zoom#terms of service#ai#artificial intelligence#privacy#safety#internet#end to end encryption#virtual#remote#black mirror#joan is awful#twitter#instagram#tiktok#meetings#therapy

23K notes

Text

Despicable Me 4 - Minion Intelligence (Big Game Spot)

#superbowl#super bowl#despicable me#byaurore#userbbelcher#tuserrachel#usersugar#nessa007#tuserpris#userallisyn#usereena#userelio#tuserhan#tuserrobin#userkam#userrlaura#ai#artificial intelligence#minions

9K notes

Text

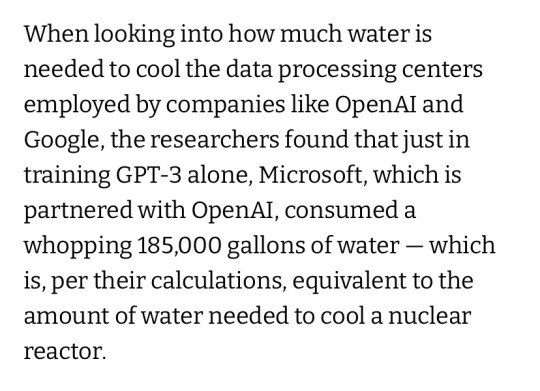

So it turns out that ChatGPT not only uses a shit ton of energy, but also a shit ton of water. This is according to a new study by a group of researchers from the University of California, Riverside and the University of Texas at Arlington, Futurism reports.

Which sounds INSANE but also makes sense when you think of it. You know what happens to, for example, your computer when it’s doing a LOT of work and processing. You gotta cool those machines.

And what’s worrying about this is that water shortages are already an issue almost everywhere, and this summer and the summers after it, they will become more and more of a problem as temperatures rise all over the world. So it’s important to have this in mind and share the info. A big part of how we ended up where we are with the climate crisis is that for a long time politicians KNEW about the science, but the general public didn’t have all the facts. We didn’t have access to them. KNOWING about things and sharing that info can be a real game-changer. Because then we know how far we, as individuals, can take effective action in our daily lives and what we need to be asking our legislators for.

And with all the issues AI can pose, I think this is such an important argument to add to the conversation.

Edit: I previously typed Colorado instead of California by accident. Thank you to the fellow user who noticed and pointed it out!

#lem talks#let’s get political#science#science tumblr#politics#research#artificial intelligence#AI#climate#important

39K notes

Text

A little-discussed detail in the Lavender AI article is that Israel is killing people based on being in the same WhatsApp group [1] as a suspected militant [2]. Where are they getting this data? Is WhatsApp sharing it?

Lavender is Israel's system of "pre-crime" [3] - they use AI to guess who to kill in Gaza, and then bomb them when they're at home, along with their entire family. (Obscenely, they call this program "Where's Daddy").

One input to the AI is whether you're in a WhatsApp group with a suspected member of Hamas. There's a lot wrong with this - I'm in plenty of WhatsApp groups with strangers, neighbours, and in the carnage in Gaza you bet people are making groups to connect.

But the part I want to focus on is whether they get this information from Meta. Meta has been promoting WhatsApp as a "private" social network, including "end-to-end" encryption of messages.

Providing this data as input for Lavender undermines their claim that WhatsApp is a private messaging app. It is beyond obscene and makes Meta complicit in Israel's killings of "pre-crime" targets and their families, in violation of International Humanitarian Law and Meta's publicly stated commitment to human rights. No social network should be providing this sort of information about its users to countries engaging in "pre-crime".

#yemen#jerusalem#tel aviv#current events#palestine#free palestine#gaza#free gaza#news on gaza#palestine news#news update#war news#war on gaza#war crimes#gaza genocide#genocide#ai#artificial intelligence

6K notes

Text

I love you robots and artificial intelligence with mental illness. I love you repression being depicted as literally deleting archived data to preserve functionality. I love you anxiety attacks being depicted as a system crashing virus. I love you ptsd being depicted as an annoying pop-up. I love you anxiety disorder being depicted as running thousands of simulations and projected outcomes. I love you artificial beings being shown to be human via their own artificiality.

#robots#artificial intelligence#tropes#rvb#red vs blue#yugioh vrains#wolf 359#star trek#transhumanism#posthumanism

15K notes

Text

Hey, do y’all remember how Tencent said they were developing faceID AI to identify people in riots, and then they suddenly created an AI art generator to turn your selfies into anime?

Do y’all remember that time that someone discovered facial recognition cameras couldn't see through Juggalo makeup, then Facebook had a fun “see what you'd look like with Juggalo makeup” thing, and then facial recognition cameras could suddenly see through Juggalo makeup?

Do y’all remember how, on Twitter, Elon started a tirade against artists who ask for credit when their art is reposted, and then he suddenly created one of the first big art AI programs?

Do y’all remember how AI destroyed the field of freelance translation, despite the inferiority of the machine translations, because companies didn’t care about the quality of the translations? They just wanted it done for free?

Do y’all know how companies will see a lot of money going into a New Tech Thing (like, say, AI art apps) and will jump to try and implement that New Tech Thing into their tech? For example, how it felt like every big company and celebrity had an NFT to sell?

Just wondering.

90K notes

Text

"There was an exchange on Twitter a while back where someone said, 'What is artificial intelligence?' And someone else said, 'A poor choice of words in 1954'," he says. "And, you know, they're right. I think that if we had chosen a different phrase for it, back in the '50s, we might have avoided a lot of the confusion that we're having now."

So if he had to invent a term, what would it be? His answer is instant: applied statistics. "It's genuinely amazing that...these sorts of things can be extracted from a statistical analysis of a large body of text," he says. But, in his view, that doesn't make the tools intelligent. Applied statistics is a far more precise descriptor, "but no one wants to use that term, because it's not as sexy".

'The machines we have now are not conscious', Lunch with the FT, Ted Chiang, by Madhumita Murgia, 3 June/4 June 2023

#quote#Ted Chiang#AI#artificial intelligence#technology#ChatGPT#Madhumita Murgia#intelligence#consciousness#sentience#scifi#science fiction#Chiang#statistics#applied statistics#terminology#language#digital#computers

20K notes

Text

#art#photography#nature#artificial intelligence#photograph#photographer#landscape#places#landscapes#artwork#photography art#digital art#photooftheday#artificialintelligence#artoftheday#naturephotography#nature lovers#Ai art#mountains#space#sky#skyscape#night#sunrise#sunset#flowers#stars#night sky

5K notes
