#computer hardware engineer


Text

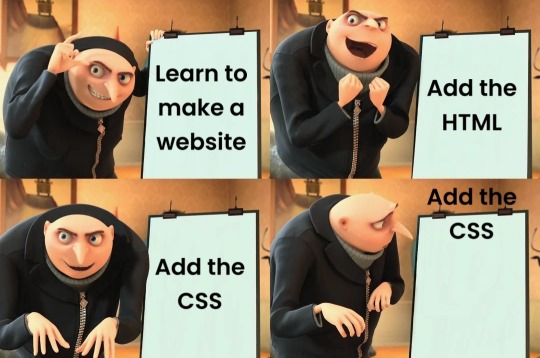

#programmer humor#programming#geek#nerd#programmer#technology#computer#phone#mac#windows#os#operating system#website#web development#dev#developer#development#full stack developer#frontend#backend#software#hardware#html#css#meme#despicable me#gru#joke#software engineer#apple

476 notes


Text

Interactive floor concept!

#technology#science#tech#engineering#interesting#awesome#innovation#cool#electronics#computer#hardware#techinnovation#smart tech#gaming#games#augmented reality

4 notes


Text

youtube

Desk of Ladyada - I2S DACs, Claude API, and Compute Module Backpack 🤖🎒🥧 https://youtu.be/XihMNhTyUlg

Ladyada explores I2S DACs, testing the PCM51xx as a UDA1334A alternative. Work continues on the TLV320DAC3100, and we test an AI API interface for setters/getters with Claude, billed per token. A new Pi Compute Module backpack is in progress, and we search for tall connectors for CM4/CM5.

#i2s#dac#raspberrypi#pcm5102#tlv320dac3100#compute#embedded#hardwaredesign#electronics#maker#opensource#adafruit#audiophile#tech#engineering#aiapi#claude#prototype#cm4#cm5#computemodule#raspberrypicm#pcbdesign#connectors#digikey#electronicsengineering#soldering#pcb#hardware#Youtube

4 notes


Text

here’s my pro tip

everyone keeps pushing computer science without recognizing that the field is quickly becoming oversaturated. If you love CS, then sure, do that. But if you’re looking for a really marketable degree that will let you do CS but also leave the door open for other STEM careers, then I highly recommend computer engineering or electrical engineering with a CS minor (optional - I have an EE degree without a minor and I still work in software). You can still get a software job if you want, with the added bonus that a lot of CS people will think you’re a wizard for having a working knowledge of hardware. And as software jobs get harder to find and get, you can diversify and apply for hardware jobs. And the hardware jobs will be easier to get if you know how to code. Also, circuits are really fucking cool guys.

#Engineering#computer science#software development#electrical and computer engineering#stem education#jobs#employment#tech#for those who don’t know#computer engineering is a combination of electrical engineering and computer science degrees#So much so that at my school you aren’t even allowed to get a cs minor if you have ce major because they already overlap so much#That the cs minor is redundant#If you take a circuits class and hate it try ce instead of ee#ce usually requires less circuits#if you love circuits and the physics side do ee#But ce and ee will both let you work in both software and hardware even though ee is focused on hardware#And having both software and hardware skills is highly sought after#I got my software job because I was an electrical engineer#they specifically wanted someone to do software-hardware integration and that is a less common skill set

6 notes


Text

photos taken five minutes before disaster

#you gotta get a hardware guy baby girl if silva really didn't want you touching those files he would've had meltdown protocols for it#<<< this is whimsical movie computer stuff and the real world need not apply ever. im applying the real world for a giggle#it's more like hey man this should've been a huge warning sign actually#WHICH! again! movie whimsy! and also a very interesting character thing for Q!#i also have to take the whimsy out bc im trying to reverse engineer what the fuck those safe guards are

2 notes


Text

i actually think hatori is more of an electrical/hardware engineer than an informatics/information technology/software engineering person

#from the fanbook - he says he has the ability to ''flip switches he isn't supposed to''#in other words - 1s and 0s and currents#off and on#i think at the very granular level that's the mechanics of hatori's power#and i mean this is applicable to computer science and IT - but not that much#the electrical and hardware manipulation is VERY abstracted away into programming languages#and im of the opinion that hatori... doesn't know how to program computers#also when we see him demonstrate his abilities they are either hacking drones and helicopters that are likely programmed in lower-#-level languages and place a larger emphasis on electrical engineering#or hacking radio waves which i mean that's still some sort of off-on thing#the software engineering route of changing ports n permissions n stuff is.. i think not hatori's thing#but who knows... i really like hatori infosec interpretations... its just that i also think in canon he's an electrical engineer type guy#(not shitting on electrical engineers - infact i think they do better stuff than me - the loser infosec guy who can't do physics#to save his life)#my post canon hc for him is that he cleans up and goes to post-secondary school and finally learns the theory behind all of the stuff#-he CAN do#i think he'd unlock a lot of potential that way#but what do i know i am just speculating on the mechanics of psychic powers#milk (normal)#hatori tag#ah this is just me rambling i wanted to get the thought out

13 notes


Text

i keep seeing posts that are like "kids these days dont know how to use computers" do you realize that Most People dont know how to use computers. this is not a generational issue. computer literacy is a complex and evolving skillset that has to be taught and maintained. most people are doing other stuff in their lives besides going on computer. insisting that kids only know about apps and its making them stupid is wildly reductive. and honestly pathetic bc they are children they dont have control of their learning environment how are you mad at them

#cro zone#ok to rb#the reason i know about computers is not bc i just happened to grow up in the sweet spot of pc usage#its bc MY DAD IS A HARDWARE ENGINEER WHO TAUGHT ME HOW COMPUTERS WORK

4 notes


Text

Making your own Home Assistant (theorising)

Disclaimer: This is all theory and speculation. I have not tested anything or made my own home assistant yet, I just looked around for libraries and hardware that are likely compatible. I have not fully tested the compatibility or quality of these, this is simply the first iteration of an idea I have.

I just got news that Amazon Alexa has lost 10 billion dollars because their business model failed. This makes me happy, and has also made me realise that you can make your own home assistant.

Here are some of the links to things (I am aware that some are amazon, but it's the most global I could find. I encourage you to find other sellers, this is just what you should need. If you find anything cheaper or more local to where you are, go for it):

Hardware:

Raspberry Pi 4: https://www.canakit.com/raspberry-pi-4-2gb.html (RAM requirements may differ, I may do testing to see what comfortably runs)

8GB MicroSD: https://www.amazon.ca/Verbatim-Premium-microSDHC-Adapter-10-44081/dp/B00CBAUI40/ref=sr_1_3?crid=3CTN6X9TJXRR2&keywords=microsd%2Bcard%2B8gb&qid=1699209597&s=electronics&sprefix=microsd%2Bcard%2B8gb%2Celectronics%2C91&sr=1-3&th=1

Microphone: https://www.amazon.ca/SunFounder-Microphone-Raspberry-Recognition-Software/dp/B01KLRBHGM?th=1

Software:

Coqui STT: https://github.com/coqui-ai/STT

Coqui TTS: https://github.com/coqui-ai/TTS

If you have it set up correctly, you should be able to run both the STT and TTS in realtime (see https://github.com/coqui-ai/TTS/discussions/904).

After all of them are set up, the only thing to do is bridge it all together with software. There are bindings to Rust for both Coqui libraries (https://github.com/tazz4843/coqui-stt and https://github.com/rowan-sl/coqui-rs), and all that's left to do is implement parsing.
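A minimal sketch of what that parsing step could look like, assuming the STT stage hands over a plain text transcript. It is written in Python for brevity rather than Rust, and everything in it (the wake word, the intent names, `parse_command`) is hypothetical and not part of either Coqui library.

```python
import re
from dataclasses import dataclass
from typing import Optional

WAKE_WORD = "computer"  # hypothetical wake word, pick your own

# Hypothetical intents: intent name -> regex matched against the transcript.
INTENT_PATTERNS = {
    "set_timer": re.compile(r"set (?:a )?timer for (?P<minutes>\d+) minutes?"),
    "lights": re.compile(r"turn (?P<state>on|off) the lights?"),
    "time": re.compile(r"what(?:'s| is) the time|what time is it"),
}


@dataclass
class Command:
    intent: str
    slots: dict


def parse_command(transcript: str) -> Optional[Command]:
    """Turn an STT transcript into a Command, or None if the wake word is missing."""
    # Normalize: lowercase and drop punctuation (apostrophes are kept).
    text = re.sub(r"[^\w\s']", "", transcript.lower())
    words = text.split()
    if not words or words[0] != WAKE_WORD:
        return None
    rest = " ".join(words[1:])
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.search(rest)
        if match:
            return Command(intent, match.groupdict())
    # Wake word heard, but nothing recognized: let the caller decide what to say.
    return Command("unknown", {"text": rest})
```

In a full pipeline, the returned `Command` would be handed to whatever performs the action, and the reply text would go to the TTS stage.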

The libraries can also be swapped out for different ones if you like. If you can find and implement a DECtalk library that works for the Raspberry Pi, you can use that.

If I ever figure out how to manifest this idea, I will likely make the project modular so that you can use whatever library you want. You can even fork the project and include your own library of choice (if you can bind it to Rust).

Go FOSS!

#foss#open source#raspberry pi#technology#amazon#alexa#home assistant#coqui#rust#dectalk#programming#engineering#coding#software#software engineering#github#mozilla#hardware#computer engineering

5 notes


Text

Hey audio engineering side of Tumblr. Theoretically how would a young dumb lass record audio directly from an instrument (such as an electric guitar 🎸) to a computer. Secondly how would said young dumb lass layer different recordings on top of each other without forking over her organs.

#ramblings of a lunatic#audio engineering#music#is that. are those tags ppl use for these purposes??? idk man#halp 👍#i also don't have uh. literally any hardware for this besides geetar and computer so there's that#but I'm willing to make a dumb purchase so long as it doesn't cost me an arm and a leg

5 notes


Text

I’m a privacy engineer and this is a poster I printed out to hang on my wall because it was so perfect:

Source

I don't know who needs to hear this but please please please please please explore the settings. Of your phone, computer, of every app you use. Investigate the UI, toggle some things around and see what happens. You won't break anything irreparably without a confirmation box asking you if you really mean to do that thing. And you can just look up what a setting will do before touching it if you're really worried ok?

Worst case scenario you just have to change the settings back if you don't like what happened but it is so so so important to explore the tools available to you and gain a better understanding for how the stuff you use works.

Even if you already know. Even if you're comfortable with how you use it now. You don't just have to accept whatever experience has been handed to you by default and it's good for you to at least know what's available to you.

#truly and genuinely this is how you learn computer stuff#you mess around with it and see what happens#at least 50 percent of my job as a security or privacy engineer over the years#has consisted of pushing buttons and interacting with apps in strange ways to see what happens#if I push the button in an odd way#or if I do things out of order#or if I shouldn’t have permission#or if I swap out a bit of text in the url#Because you can understand how stuff works in theory#but we don’t use the platonic ideal of a computer#we use machines built by people#running instructions devised by people#When the rubber hits the road things often don’t work exactly the way they ‘should’ on paper#so tinker with stuff#be careful if it’s hardware you could break#or if you don’t have some idea of what it is you’re changing#(I’m not suggesting you go changing registry values if you don’t know what those are)#but if it’s easily discoverable to a layperson in the UI#mess around#see what happens

35K notes


Text

Even native speakers of a language had to learn it from those who came before them.

So this was originally a response to this post:


Which is about people wanting an AO3 app, but then it became large and way off topic, so here you go.

Nobody under the age of 20 knows how to use a computer or the internet. At all. They only know how to use apps. Their whole lives are in their phones or *maybe* a tablet/iPad if they're an artist. This is becoming a huge concern.

I'm a private tutor for middle- and high-school students, and since 2020 my business has been 100% virtual. Either the student's on a tablet, which comes with its own series of problems for screen-sharing and file access, or they're on mom's or dad's computer, and they have zero understanding of it.

They also don't know what the internet is, or even the absolute basics of how it works. You might not think that's an important thing to know, but stick with me.

Last week I accepted a new student. The first session is always about the tech -- I tell them this in advance, that they'll have to set up a few things, but once we're set up, we'll be good to go. They all say the same thing -- it won't be a problem because they're so "online" that they get technology easily.

I never laugh in their faces, but it's always a close thing. Because they are expecting an app. They are not expecting to be shown how little they actually know about tech.

I must say up front: this story is not an outlier. This is *every* student during their first session with me. Every single one. I go through this with each of them because most of them learn more, and more solidly, via discussion and discovery rather than direct instruction.

Once she logged in, I asked her to click on the icon for screen-sharing. I described the icon, then started with "Okay, move your mouse to the bottom right corner of the screen." She did the thing that those of us who are old enough to remember the beginnings of widespread home computers remember - picked up the mouse and moved it and then put it down. I explained she had to pull the mouse along the surface, and then click on the icon. She found this cumbersome. I asked if she was on a laptop or desktop computer. She didn't know what I meant. I asked if the computer screen was connected to the keyboard as one piece of machinery that you can open and close, or if there was a monitor - like a TV - and the keyboard was connected to another machine either by cord or by Bluetooth. Once we figured out it was a laptop, I asked her if she could use the touchpad, because it's similar (though not equivalent) to a phone screen in terms of touching, clicking, and dragging.

Once we got her using the touchpad, we tried screen-sharing again. We got it working, to an extent, but she was having trouble with... lots of things. I asked if she could email me a download or a photo of her homework instead, and we could both have a copy, and talk through it rather than put it on the screen, and we'd worry about learning more tech another day. She said she tried, but her email blocked her from sending anything to me.

This is because the only email address she has is for school, and she never uses email for any other purpose. I asked if her mom or dad could email it to me. They weren't home.

(Re: school email that blocks any emails not whitelisted by the school: that's great for kids as are all parental controls for young ones, but 16-year-olds really should be getting used to using an email that belongs to them, not an institution.)

I asked if the homework was on a paper handout, or in a book, or on the computer. She said it was on the computer. Great! I asked her where it was saved. She didn't know. I asked her to search for the name of the file. She said she already did that and now it was on her screen. Then, she said to me: "You can just search for it yourself - it's Chapter 5, page 11."

This is because homework is on the school's website, in her math class's homework section, which is where she searched. For her, that was "searching the internet."

Her concepts of "on my computer," "on the internet," and "on my school's website" are all the same thing. If something is displayed on the monitor, it's "on the internet" and "on my phone/tablet/computer" and "on the school's website."

She doesn't understand "upload" or "download," because she does her homework on the school's website and hits a "submit" button when she's done. I asked her how she shares photos and stuff with friends; she said she posts to Snapchat or TikTok, or she AirDrops. (She said she sometimes uses Insta, though she said Insta is more "for old people"). So in her world, there's a button for "post" or "share," and that's how you put things on "the internet".

She doesn't know how it works. None of it. And she doesn't know how to use it, either.

Also, none of them can type. Not a one. They don't want to learn how, because "everything is on my phone."

And you know, maybe that's where we're headed. Maybe one day, everything will be on "my phone" and computers as we know them will be a thing of the past. But for the time being, they're not. Students need to learn how to use computers. They need to learn how to type. No one is telling them this, because people think teenagers are "digital natives." And to an extent, they are, but the definition of that has changed radically in the last 20-30 years. Today it means "everything is on my phone."

#i will always be glad that i was raised by people who taught me how to use computers from a young age#even my young teenage siblings can navigate a computer with ease#the only thing i never got the hang of was typing#but it wasnt for lack of trying on anyone's part but mine#i just wasn't bothered when i was young and nowadays i have an average speed so eh#but we're so far out of the norm#i mean#we are out of the norm anyways#who lets a teenager and their adult sibling dismantle the teen's pc in order to find out why it was so slow#(it was the hard disk btw)#(we replaced it with an old m2 drive we had lying around the house and all problems were solved)#gods im glad i was raised by a software engineer and a hardware engineer

50K notes


Text

Photonic processor could enable ultrafast AI computations with extreme energy efficiency

New Post has been published on https://thedigitalinsider.com/photonic-processor-could-enable-ultrafast-ai-computations-with-extreme-energy-efficiency/


The deep neural network models that power today’s most demanding machine-learning applications have grown so large and complex that they are pushing the limits of traditional electronic computing hardware.

Photonic hardware, which can perform machine-learning computations with light, offers a faster and more energy-efficient alternative. However, there are some types of neural network computations that a photonic device can’t perform, requiring the use of off-chip electronics or other techniques that hamper speed and efficiency.

Building on a decade of research, scientists from MIT and elsewhere have developed a new photonic chip that overcomes these roadblocks. They demonstrated a fully integrated photonic processor that can perform all the key computations of a deep neural network optically on the chip.

The optical device was able to complete the key computations for a machine-learning classification task in less than half a nanosecond while achieving more than 92 percent accuracy — performance that is on par with traditional hardware.

The chip, composed of interconnected modules that form an optical neural network, is fabricated using commercial foundry processes, which could enable the scaling of the technology and its integration into electronics.

In the long run, the photonic processor could lead to faster and more energy-efficient deep learning for computationally demanding applications like lidar, scientific research in astronomy and particle physics, or high-speed telecommunications.

“There are a lot of cases where how well the model performs isn’t the only thing that matters, but also how fast you can get an answer. Now that we have an end-to-end system that can run a neural network in optics, at a nanosecond time scale, we can start thinking at a higher level about applications and algorithms,” says Saumil Bandyopadhyay ’17, MEng ’18, PhD ’23, a visiting scientist in the Quantum Photonics and AI Group within the Research Laboratory of Electronics (RLE) and a postdoc at NTT Research, Inc., who is the lead author of a paper on the new chip.

Bandyopadhyay is joined on the paper by Alexander Sludds ’18, MEng ’19, PhD ’23; Nicholas Harris PhD ’17; Darius Bunandar PhD ’19; Stefan Krastanov, a former RLE research scientist who is now an assistant professor at the University of Massachusetts at Amherst; Ryan Hamerly, a visiting scientist at RLE and senior scientist at NTT Research; Matthew Streshinsky, a former silicon photonics lead at Nokia who is now co-founder and CEO of Enosemi; Michael Hochberg, president of Periplous, LLC; and Dirk Englund, a professor in the Department of Electrical Engineering and Computer Science, principal investigator of the Quantum Photonics and Artificial Intelligence Group and of RLE, and senior author of the paper. The research appears today in Nature Photonics.

Machine learning with light

Deep neural networks are composed of many interconnected layers of nodes, or neurons, that operate on input data to produce an output. One key operation in a deep neural network involves the use of linear algebra to perform matrix multiplication, which transforms data as it is passed from layer to layer.

But in addition to these linear operations, deep neural networks perform nonlinear operations that help the model learn more intricate patterns. Nonlinear operations, like activation functions, give deep neural networks the power to solve complex problems.
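In conventional software, the two kinds of operation described above look roughly like this. This is a minimal sketch in plain Python, not the photonic implementation: ReLU is chosen here only as a familiar activation function (the article does not name one), and the weights are made up for illustration.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(weights: Matrix, x: Vector) -> Vector:
    """Linear step: multiply a weight matrix by an input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v: Vector) -> Vector:
    """Nonlinear step: an assumed activation that zeroes out negative values."""
    return [max(0.0, a) for a in v]


def forward(layers: List[Matrix], x: Vector,
            activation: Callable[[Vector], Vector] = relu) -> Vector:
    """Pass data layer to layer: a linear transform, then a nonlinearity."""
    for weights in layers:
        x = activation(matvec(weights, x))
    return x


# One layer with made-up weights: the linear step gives [-1.0, 2.5],
# and the nonlinearity clips the negative entry to 0.0.
print(forward([[[1.0, -1.0], [0.5, 0.5]]], [2.0, 3.0]))  # [0.0, 2.5]
```

Without the nonlinear step, stacking layers would collapse into a single matrix multiplication, which is why a chip that handles only the linear part has to hand the rest to other hardware.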

In 2017, Englund’s group, along with researchers in the lab of Marin Soljačić, the Cecil and Ida Green Professor of Physics, demonstrated an optical neural network on a single photonic chip that could perform matrix multiplication with light.

But at the time, the device couldn’t perform nonlinear operations on the chip. Optical data had to be converted into electrical signals and sent to a digital processor to perform nonlinear operations.

“Nonlinearity in optics is quite challenging because photons don’t interact with each other very easily. That makes it very power consuming to trigger optical nonlinearities, so it becomes challenging to build a system that can do it in a scalable way,” Bandyopadhyay explains.

They overcame that challenge by designing devices called nonlinear optical function units (NOFUs), which combine electronics and optics to implement nonlinear operations on the chip.

The researchers built an optical deep neural network on a photonic chip using three layers of devices that perform linear and nonlinear operations.

A fully-integrated network

At the outset, their system encodes the parameters of a deep neural network into light. Then, an array of programmable beamsplitters, which was demonstrated in the 2017 paper, performs matrix multiplication on those inputs.

The data then pass to programmable NOFUs, which implement nonlinear functions by siphoning off a small amount of light to photodiodes that convert optical signals to electric current. This process, which eliminates the need for an external amplifier, consumes very little energy.

“We stay in the optical domain the whole time, until the end when we want to read out the answer. This enables us to achieve ultra-low latency,” Bandyopadhyay says.

Achieving such low latency enabled them to efficiently train a deep neural network on the chip, a process known as in situ training that typically consumes a huge amount of energy in digital hardware.

“This is especially useful for systems where you are doing in-domain processing of optical signals, like navigation or telecommunications, but also in systems that you want to learn in real time,” he says.

The photonic system achieved more than 96 percent accuracy during training tests and more than 92 percent accuracy during inference, which is comparable to traditional hardware. In addition, the chip performs key computations in less than half a nanosecond.

“This work demonstrates that computing — at its essence, the mapping of inputs to outputs — can be compiled onto new architectures of linear and nonlinear physics that enable a fundamentally different scaling law of computation versus effort needed,” says Englund.

The entire circuit was fabricated using the same infrastructure and foundry processes that produce CMOS computer chips. This could enable the chip to be manufactured at scale, using tried-and-true techniques that introduce very little error into the fabrication process.

Scaling up their device and integrating it with real-world electronics like cameras or telecommunications systems will be a major focus of future work, Bandyopadhyay says. In addition, the researchers want to explore algorithms that can leverage the advantages of optics to train systems faster and with better energy efficiency.

This research was funded, in part, by the U.S. National Science Foundation, the U.S. Air Force Office of Scientific Research, and NTT Research.

#ai#air#air force#Algorithms#applications#artificial#Artificial Intelligence#Astronomy#author#Building#Cameras#CEO#challenge#chip#chips#computation#computer#computer chips#Computer Science#Computer science and technology#computing#computing hardware#data#Deep Learning#devices#efficiency#Electrical engineering and computer science (EECS)#electronic#Electronics#energy

0 notes

Text

Our professional web development services in Coimbatore are designed to meet a variety of company needs across industries. To match customer expectations while upholding industry standards, your web app will be developed by a team of skilled professionals who will come up with novel strategies.

We help your company streamline operations with our websites, from designing them with great aesthetic value to building them with high-end features and cutting-edge functionality. As a top web development company in Coimbatore, we create scalable, reliable websites that are geared toward expanding your company.

#website#html#programming#technology#website development company#software engineering#computer#old web#hardware

0 notes

Text

youtube

#youtube video#computer technology#computer generation#computer science#computer basics#learn computer in Hindi#computer graphics#computer education#Hindi tutorial#technology explained#computer fundamentals#computing history#computer hardware#software tutorial#computer engineering#Hindi tech education#Youtube

0 notes

Text

RN42 Bluetooth Module: A Comprehensive Guide

The RN42 Bluetooth module was developed by Microchip Technology. It’s designed to provide Bluetooth connectivity to devices and is commonly used in various applications, including wireless communication between devices.

Features Of RN42 Bluetooth Module

The RN42 Bluetooth module comes with several key features that make it suitable for various wireless communication applications. Here are the key features of the RN42 module:

Bluetooth Version:

The RN42 module is based on Bluetooth version 2.1 + EDR (Enhanced Data Rate).

Profiles:

Supports a range of Bluetooth profiles including Serial Port Profile (SPP), Human Interface Device (HID), Audio Gateway (AG), and others. The availability of profiles makes it versatile for different types of applications.

Frequency Range:

Operates in the 2.4 GHz ISM (Industrial, Scientific, and Medical) band, the standard frequency range for Bluetooth communication.

Data Rates:

Offers data rates of up to 3 Mbps, providing a balance between speed and power consumption.

Power Supply Voltage:

Operates from a nominal 3.3V supply (roughly 3.0V to 3.6V on the bare module); breakout boards with an onboard regulator may accept a higher input voltage.

Low Power Consumption:

Designed for low power consumption, making it suitable for battery-powered applications and energy-efficient designs.

Antenna Options:

Provides options for both internal and external antennas, offering flexibility in design based on the specific requirements of the application.

Interface:

Utilizes a UART (Universal Asynchronous Receiver-Transmitter) interface for serial communication, facilitating easy integration with microcontrollers and other embedded systems.

Security Features:

Implements authentication and encryption mechanisms to ensure secure wireless communication.
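Because the module talks to the host over UART, the serial link can limit throughput before the radio does. The sketch below works through that arithmetic in Python. The 115200 baud figure and the 8N1 framing are assumptions chosen for illustration, and the helper names are hypothetical; check the datasheet for the rates the module actually supports.

```python
def uart_payload_bytes_per_second(baud_rate: int, data_bits: int = 8,
                                  parity_bits: int = 0, stop_bits: int = 1) -> float:
    """Payload bytes per second on a UART link.

    Each frame carries one start bit, the data bits, an optional parity bit,
    and the stop bit(s), so 8N1 framing costs 10 line bits per payload byte.
    """
    bits_per_frame = 1 + data_bits + parity_bits + stop_bits
    return baud_rate / bits_per_frame


def transfer_seconds(n_bytes: int, baud_rate: int) -> float:
    """Time to move n_bytes over an 8N1 link at the given baud rate."""
    return n_bytes / uart_payload_bytes_per_second(baud_rate)


# Assumed example: 115200 baud, 8N1.
print(uart_payload_bytes_per_second(115200))  # 11520.0 bytes per second
print(transfer_seconds(1_152_000, 115200))    # 100.0 seconds
```

At that assumed setting, the wired side carries about 92 kbit/s of payload, well under the headline over-the-air rate.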

Read More: RN42 Bluetooth Module

#rn42-bluetooth-module#bluetooth-module#rn42#bluetooth-low-energy#ble#microcontroller#arduino#raspberry-pi#embedded-systems#IoT#internet-of-things#wireless-communication#data-transmission#sensor-networking#wearable-technology#mobile-devices#smart-homes#industrial-automation#healthcare#automotive#aerospace#telecommunications#networking#security#software-development#hardware-engineering#electronics#electrical-engineering#computer-science#engineering

0 notes

Text

Hands-on training on Computer Hardware and Networking Concepts

Department of Computer Science and Engineering, SIRT successfully conducted hands-on training on "Computer Hardware and Networking Concepts".

#hands-on training#computer hardware#networking concept#sirt cse#computer science engineering#admission

0 notes