#privacy policies

Text

For instructions on how to opt out, look at the official staff post on the topic. It also gives more information on Tumblr's new policies. If you are opting out, remember to opt out each of your blogs individually.

Please reblog this post, so it will get more votes!

#third party sharing#third-party sharing#scrapping#ai scrapping#Polls#tumblr#tumblr staff#poll#please reblog#art#everything else#features#opt out#policies#data privacy#privacy#please boost#staff

47K notes

Text

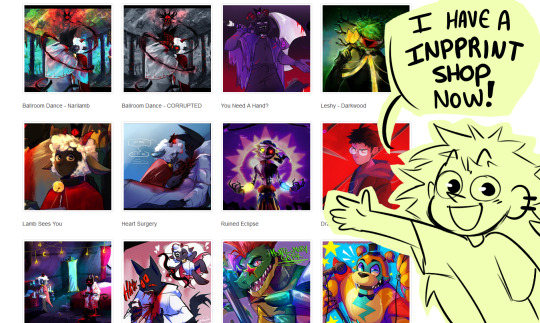

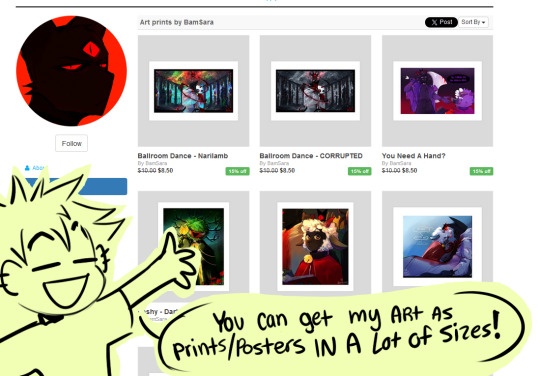

I have an INPRINT shop now! You can get my art as prints and posters here in many different sizes and materials.

Not all my art is on there, but I'm slowly getting my other pieces ready. For one week, you can use the code '0WXYC1' on orders over $30 to get 15% off, on top of the sitewide discounts currently going on. The discount will end 7/09/24.

If there are any art pieces I've done that you'd like to see as a print, please let me know! (Also, heads up! I have no way to remove the white border that inprint adds to the fine art prints, but 'canvas' and the other options don't include that border! Feel free to cut off the borders if you prefer!)

#if you notice some of the arts a little bit altered its because i went back and had to fix some things dkghsld#I've heard good things about inprint and their copyright and privacy policy look alright#so i'm giving it a shot#doodles#print shop#inprint#tried doing prints from home and that was just Not Working#so print shop attempt here we go

2K notes

Text

Koo vs Twitter: Why Koo has an Edge Over Twitter in India

Social media platforms have revolutionized the way we communicate, share our ideas and connect with people. Among them, Twitter has been the go-to platform for millions of users for years. But with the rise of Koo, a new microblogging platform, there is a lot of buzz in the social media world. Koo has gained a lot of attention recently, and many users are comparing it with Twitter. In this…

#Government Support#Indian languages#Koo#microblogging platforms#privacy policies#Twitter#user base#user interface

1 note

Text

Accelerating Smart Home Trends in 2022: The smart home industry keeps growing. The market has expanded enormously, and you will find smart gadgets for every need and every space in your home! These trends are no longer confined to expensive houses or to some distant future.

0 notes

Text

In defense of bureaucratic competence

Sure, sometimes it really does make sense to do your own research. There are times when you really do need to take personal responsibility for the way things are going. But there are limits. We live in a highly technical world, in which hundreds of esoteric, potentially lethal factors impinge on your life every day.

You can't "do your own research" to figure out whether all that stuff is safe and sound. Sure, you might be able to figure out whether a contractor's assurances about a new steel joist for your ceiling are credible, but after you do that, are you also going to independently audit the software in your car's antilock brakes?

How about the nutritional claims on your food and the sanitary conditions in the industrial kitchen it came out of? If those turn out to be inadequate, are you going to be able to validate the medical advice you get in the ER when you show up at 3AM with cholera? While you're trying to figure out the HIPAA waiver they stuck in your hand on the way in?

40 years ago, Ronald Reagan declared war on "the administrative state," and "government bureaucrats" have been the favored bogeyman of the American right ever since. Even if Steve Bannon hasn't managed to get you to froth about the "Deep State," there's a good chance that you've griped about red tape from time to time.

Not without reason, mind you. The fact that the government can make good rules doesn't mean it will. When we redid our kitchen this year, the city inspector added a bunch of arbitrary electrical outlets to the contractor's plans in places where neither we, nor any future owner, will ever need them.

But the answer to bad regulation isn't no regulation. During the same kitchen reno, our contractor discovered that at some earlier time, someone had installed our kitchen windows without the accompanying vapor barriers. In the decades since, the entire structure of our kitchen walls had rotted out. Not only was the entire front of our house one good earthquake away from collapsing – there were two half-rotted verticals supporting the whole thing – but replacing the rotted walls added more than $10k to the project.

In other words, the problem isn't too much regulation, it's the wrong regulation. I want our city inspectors to make sure that contractors install vapor barriers, but to not demand superfluous electrical outlets.

Which raises the question: where do regulations come from? How do we get them right?

Regulation is, first and foremost, a truth-seeking exercise. There will never be one obvious answer to any sufficiently technical question. "Should this window have a vapor barrier?" is actually a complex question, needing to account for different window designs, different kinds of barriers, etc.

To make a regulation, regulators ask experts to weigh in. At the federal level, expert agencies like the DoT or the FCC or HHS will hold a "Notice of Inquiry," which is a way to say, "Hey, should we do something about this? If so, what should we do?"

Anyone can weigh in on these: independent technical experts, academics, large companies, lobbyists, industry associations, members of the public, hobbyist groups, and swivel-eyed loons. This produces a record from which the regulator crafts a draft regulation, which is published in something called a "Notice of Proposed Rulemaking."

The NPRM process looks a lot like the NOI process: the regulator publishes the rule, the public weighs in for a couple of rounds of comments, and the regulator then makes the rule (this is the federal process; state regulation and local ordinances vary, but they follow a similar template of collecting info, making a proposal, collecting feedback and finalizing the proposal).

These truth-seeking exercises need good input. Even very competent regulators won't know everything, and even the strongest theoretical foundation needs some evidence from the field. It's one thing to say, "Here's how your antilock braking software should work," but you also need to hear from mechanics who service cars, manufacturers, infosec specialists and drivers.

These people will disagree with each other, for good reasons and for bad ones. Some will be sincere but wrong. Some will want to make sure that their products or services are required – or that their competitors' products and services are prohibited.

It's the regulator's job to sort through these claims. But they don't have to go it alone: in an ideal world, the wrong people will be corrected by other parties in the docket, who will back up their claims with evidence.

So when the FCC proposes a Net Neutrality rule, the monopoly telcos and cable operators will pile in and insist that this is technically impossible, that there is no way to operate a functional ISP if the network management can't discriminate against traffic that is less profitable to the carrier. Now, this unity of perspective might reflect a bedrock truth ("Net Neutrality can't work") or the monopolists' convenient lie ("Net Neutrality is less profitable for us").

In a competitive market, there'd be lots of counterclaims with evidence from rivals: "Of course Net Neutrality is feasible, and here are our server logs to prove it!" But in a monopolized market, those counterclaims come from micro-scale ISPs, or academics, or activists, or subscribers. These counterclaims are easy to dismiss ("what do you know about supporting 100 million users?"). That's doubly true when the regulator is motivated to give the monopolists what they want – either because they are hoping for a job in the industry after they quit government service, or because they came out of industry and plan to go back to it.

To make things worse, when an industry is heavily concentrated, it's easy for members of the ruling cartel – and their backers in government – to claim that the only people who truly understand the industry are its top insiders. Seen in that light, putting an industry veteran in charge of the industry's regulator isn't corrupt – it's sensible.

All of this leads to regulatory capture – when a regulator starts defending an industry from the public interest, instead of defending the public from the industry. The term "regulatory capture" has a checkered history. It comes out of a bizarre, far-right Chicago School ideology called "Public Choice Theory," whose goal is to eliminate regulation, not fix it.

In Public Choice Theory, the biggest companies in an industry have the strongest interest in capturing the regulator, and they will work harder – and have more resources – than anyone else, be they members of the public, workers, or smaller rivals. This inevitably leads to capture, where the state becomes an arm of the dominant companies, wielded by them to prevent competition:

https://pluralistic.net/2022/06/05/regulatory-capture/

This is regulatory nihilism. It supposes that the only reason you weren't killed by your dinner, or your antilock brakes, or your collapsing roof, is that you just got lucky – and not because we have actual, good, sound regulations that use evidence to protect us from the endless lethal risks we face. These nihilists suppose that making good regulation is either a myth – like ancient Egyptian sorcery – or a lost art – like the secret to embalming Pharaohs.

But it's clearly possible to make good regulations – especially if you don't allow companies to form monopolies or cartels. What's more, failing to make public regulations isn't the same as getting rid of regulation. In the absence of public regulation, we get private regulation, run by companies themselves.

Think of Amazon. For decades, the DoJ and FTC sat idly by while Amazon assembled and fortified its monopoly. Today, Amazon is the de facto e-commerce regulator. The company charges its independent sellers 45-51% in junk fees to sell on the platform, including $31b/year in "advertising" to determine who gets top billing in your searches. Vendors raise their Amazon prices in order to stay profitable in the face of these massive fees, and if they don't raise their prices at every other store and site, Amazon downranks them to oblivion, putting them out of business.

This is the crux of the FTC's case against Amazon: that they are picking winners and setting prices across the entire economy, including at every other retailer:

https://pluralistic.net/2023/04/25/greedflation/#commissar-bezos

The same is true for Google/Facebook, who decide which news and views you encounter; for Apple/Google, who decide which apps you can use, and so on. The choice is never "government regulation" or "no regulation" – it's always "government regulation" or "corporate regulation." You either live by rules made in public by democratically accountable bureaucrats, or rules made in private by shareholder-accountable executives.

You just can't solve this by "voting with your wallet." Think about the problem of robocalls. Nobody likes these spam calls, and worse, they're a vector for all kinds of fraud. Robocalls are mostly a problem with federation. The phone system is a network-of-networks, and your carrier is interconnected with carriers all over the world, sometimes through intermediaries that make it hard to know which network a call originates on.

Some of these carriers are spam-friendly. They make money by selling access to spammers and scammers. Others don't like spam, but they have lax or inadequate security measures to prevent robocalls. Others will simply be targets of opportunity: so large and well-resourced that they are irresistible to bad actors, who continuously probe their defenses and exploit overlooked flaws, which are quickly patched.

To stem the robocall tide, your phone company will have to block calls from bad actors, put sloppy or lazy carriers on notice to shape up or face blocks, and also tell the difference between good companies and bad ones.

There's no way you can figure this out on your own. How can you know whether your carrier is doing a good job at this? And even if your carrier wants to do this, only the largest, most powerful companies can manage it. Rogue carriers won't give a damn if some tiny micro-phone-company threatens them with a block if they don't shape up.

This is something that a large, powerful government agency is best suited to addressing. And thankfully, we have such an agency. Two years ago, the FCC demanded that phone companies submit plans for "robocall mitigation." Now, it's taking action:

https://arstechnica.com/tech-policy/2023/10/telcos-filed-blank-robocall-plans-with-fcc-and-got-away-with-it-for-2-years/

Specifically, the FCC has identified carriers – in the US and abroad – with deficient plans. Some of these plans are very deficient. National Cloud Communications of Texas sent the FCC a Windows Printer Test Page. Evernex (Pakistan) sent the FCC its "taxpayer profile inquiry" from a Pakistani state website. Viettel (Vietnam) sent in a slide presentation entitled "Making Smart Cities Vision a Reality." Canada's Humbolt VoIP sent an "indiscernible object." DomainerSuite submitted a blank sheet of paper scrawled with the word "NOTHING."

The FCC has now notified these carriers – and others with less egregious but still deficient submissions – that they have 14 days to fix this or they'll be cut off from the US telephone network.

This is a problem you don't fix with your wallet, but with your ballot. Effective, public-interest-motivated FCC regulators are a political choice. Trump appointed the cartoonishly evil Ajit Pai to run the FCC, and he oversaw a program of neglect and malice. Pai – a former Verizon lawyer – dismantled Net Neutrality after receiving millions of obviously fraudulent comments from stolen identities, lying about it, and then obstructing the NY Attorney General's investigation into the matter:

https://pluralistic.net/2021/08/31/and-drown-it/#starve-the-beast

The Biden administration has a much better FCC – though not as good as it could be, thanks to Biden hanging Gigi Sohn out to dry in the face of a homophobic smear campaign that ultimately led one of the best qualified nominees for FCC commissioner to walk away from the process:

https://pluralistic.net/2022/12/15/useful-idiotsuseful-idiots/#unrequited-love

Notwithstanding the tragic loss of Sohn's leadership in this vital agency, Biden's FCC – and its action on robocalls – illustrates the value of elections won with ballots, not wallets.

Self-regulation without state regulation inevitably devolves into farce. We're a quarter of a century into the commercial internet and the US still doesn't have a modern federal privacy law. The closest we've come is a disclosure rule, where companies can make up any policy they want, provided they describe it to you.

It doesn't take a genius to figure out how to cheat on this regulation. It's so simple, even a Meta lawyer can figure it out – which is why the Meta Quest VR headset has a privacy policy that isn't merely awful, but long.

It will take you five hours to read the whole document and discover how badly you're being screwed. Go ahead, "do your own research":

https://foundation.mozilla.org/en/privacynotincluded/articles/annual-creep-o-meter/

The answer to bad regulation is good regulation, and the answer to incompetent regulators is competent ones. As Michael Lewis's Fifth Risk (published after Trump filled the administrative agencies with bootlickers, sociopaths and crooks) documented, these jobs demand competence:

https://memex.craphound.com/2018/11/27/the-fifth-risk-michael-lewis-explains-how-the-deep-state-is-just-nerds-versus-grifters/

For example, Lewis describes how a Washington State nuclear waste facility created as part of the Manhattan Project endangers the Columbia River, the source of 8 million Americans' drinking water. The nuclear waste cleanup is projected to take 100 years and cost 100 billion dollars. With stakes that high, we need competent bureaucrats overseeing the job.

The hacky conservative jokes comparing every government agency to the DMV are not descriptive so much as prescriptive. By slashing funding, imposing miserable working conditions, and demonizing the people who show up for work anyway, neoliberals have chased away many good people, and hamstrung those who stayed.

One of the most inspiring parts of the Biden administration is the large number of extremely competent, extremely principled agency personnel he appointed, and the speed and competence they've brought to their roles, to the great benefit of the American public:

https://pluralistic.net/2022/10/18/administrative-competence/#i-know-stuff

But leaders can only do so much – they also need staff. 40 years of attacks on US state capacity has left the administrative state in tatters, stretched paper-thin. In an excellent article, Noah Smith describes how a starveling American bureaucracy costs the American public a fortune:

https://www.noahpinion.blog/p/america-needs-a-bigger-better-bureaucracy

Even stripped of people and expertise, the US government still needs to get stuff done, so it outsources to nonprofits and consultancies. These are the source of much of the expense and delay in public projects. Take NYC's Second Avenue subway, a notoriously overbudget and late subway extension – "the most expensive mile of subway ever built." Consultants amounted to 20% of its costs, double what France or Italy would have spent. The MTA used to employ 1,600 project managers. Now it has 124 of them, overseeing $20b worth of projects. They hand that money to consultants, and even if they have the expertise to oversee the consultants' spending, they are stretched too thin to do a good job of it:

https://slate.com/business/2023/02/subway-costs-us-europe-public-transit-funds.html

When a public agency lacks competence, it ends up costing the public more. States with highly expert Departments of Transportation order better projects, which need fewer changes, which adds up to massive cost savings and superior roads:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4522676

Other gaps in US regulation are plugged by nonprofits and citizen groups. Environmental rules like NEPA rely on the public to identify and object to environmental risks in public projects, from solar plants to new apartment complexes. NEPA and its state equivalents empower private actors to sue developers to block projects, even if they satisfy all environmental regulations, leading to years of expensive delay.

The answer to this isn't to dismantle environmental regulations – it's to create a robust expert bureaucracy that can enforce them instead of relying on NIMBYs. This is called "ministerial approval" – when skilled government workers oversee environmental compliance. Predictably, NIMBYs hate ministerial approval.

Which is not to say that there aren't problems with trusting public enforcers to ensure that big companies are following the law. Regulatory capture is real, and the more concentrated an industry is, the greater the risk of capture. We are living in a moment of shocking market concentration, thanks to 40 years of under-regulation:

https://www.openmarketsinstitute.org/learn/monopoly-by-the-numbers

Remember that five-hour privacy policy for a Meta VR headset? One answer to these eye-glazing garbage novellas presented as "privacy policies" is to simply ban certain privacy-invading activities. That way, you can skip the policy, knowing that clicking "I agree" won't expose you to undue risk.

This is the approach that Bennett Cyphers and I argue for in our EFF white-paper, "Privacy Without Monopoly":

https://www.eff.org/wp/interoperability-and-privacy

After all, even the companies that claim to be good for privacy aren't actually very good for privacy. Apple blocked Facebook from spying on iPhone owners, then sneakily turned on their own mass surveillance system, and lied about it:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

But as the European experiment with the GDPR has shown, public administrators can't be trusted to have the final word on privacy, because of regulatory capture. Big Tech companies like Google, Apple and Facebook pretend to be headquartered in corporate crime havens like Ireland and Luxembourg, where the regulators decline to enforce the law:

https://pluralistic.net/2023/05/15/finnegans-snooze/#dirty-old-town

It's only because the GDPR has a private right of action – the right of individuals to sue to enforce their rights – that we're finally seeing the beginning of the end of commercial surveillance in Europe:

https://www.eff.org/deeplinks/2022/07/americans-deserve-more-current-american-data-privacy-protection-act

It's true that NIMBYs can abuse private rights of action, bringing bad faith cases to slow or halt good projects. But just as the answer to bad regulations is good ones, so too is the answer to bad private rights of action good ones. Anti-SLAPP laws have shown us how to balance vexatious litigation with the public interest:

https://www.rcfp.org/resources/anti-slapp-laws/

We must get over our reflexive cynicism towards public administration. In my book The Internet Con, I lay out a set of public policy proposals for dismantling Big Tech and putting users back in charge of their digital lives:

https://www.versobooks.com/products/3035-the-internet-con

The most common objection I've heard since publishing the book is, "Sure, Big Tech has enshittified everything great about the internet, but how can we trust the government to fix it?"

We've been conditioned to think that lawmakers are too old, too calcified and too corrupt to grasp the technical nuances required to regulate the internet. But just because Congress isn't made up of computer scientists, it doesn't mean that they can't pass good laws relating to computers. Congress isn't full of microbiologists, but we still manage to have safe drinking water (most of the time).

You can't just "do the research" or "vote with your wallet" to fix the internet. Bad laws – like the DMCA, which bans most kinds of reverse engineering – can land you in prison just for reconfiguring your own devices to serve you, rather than the shareholders of the companies that made them. You can't fix that yourself – you need a responsive, good, expert, capable government to fix it.

We can have that kind of government. It'll take some doing, because these questions are intrinsically hard to get right even without monopolies trying to capture their regulators. Even a president as flawed as Biden can be pushed into nominating good administrative personnel and taking decisive, progressive action:

https://doctorow.medium.com/joe-biden-is-headed-to-a-uaw-picket-line-in-detroit-f80bd0b372ab?sk=f3abdfd3f26d2f615ad9d2f1839bcc07

Biden may not be doing enough to suit your taste. I'm certainly furious with aspects of his presidency. The point isn't to lionize Biden – it's to point out that even very flawed leaders can be pushed into producing benefit for the American people. Think of how much more we can get if we don't give up on politics but instead demand even better leaders.

My next novel is The Lost Cause, coming out on November 14. It's about a generation of people who've grown up under good government – a historically unprecedented presidency that has passed the laws and made the policies we'll need to save our species and planet from the climate emergency:

https://us.macmillan.com/books/9781250865939/the-lost-cause

The action opens after the pendulum has swung back, with a new far-right presidency and an insurgency led by white nationalist militias and their offshore backers – seagoing anarcho-capitalist billionaires.

In the book, these forces figure out how to turn good regulations against the people they were meant to help. They file hundreds of simultaneous environmental challenges to refugee housing projects across the country, blocking the infill building that is providing homes for the people whose homes have been burned up in wildfires, washed away in floods, or rendered uninhabitable by drought.

I don't want to spoil the book here, but it shows how the protagonists pursue a multipronged defense, mixing direct action, civil disobedience, mass protest, court challenges and political pressure to fight back. What they don't do is give up on state capacity. When the state is corrupted by wreckers, they claw back control, rather than giving up on the idea of a competent and benevolent public system.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/10/23/getting-stuff-done/#praxis

#pluralistic#nerd harder#private right of action#privacy#robocalls#fcc#administrative competence#noah smith#spam#regulatory capture#public choice theory#nimbyism#the lost cause#the internet con#evidence based policy#small government#transit#praxis#antitrust#trustbusting#monopoly

381 notes

Text

Anyone who follows me on here with a Twitter/X account:

In case you haven't heard, the Twitter Privacy Policy is changing on September 29th. The new policy states that any public content uploaded there will be used to train AI models. This, in addition to the overarching content policy which gives Twitter the right to "use, copy, reproduce, process, adapt, modify, publish and display" any content published on the platform without permission or creator compensation, means that any original content on there could potentially be used to generate AI content for use on the platform, without the original creator's consent.

If you publish original content on Twitter (especially art) and you don't like or agree with the policy update, now may be the time to review the changes for yourself and if necessary take things down before the policy update is published.

Edit: I wanna offer a brief apology for the original post, my wording was a bit unclear and may have led people to the wrong assumptions! I have changed the original post a bit now to hopefully be a more accurate reflection of the situation.

Let me just clarify some things since I certainly don't wanna fearmonger and also I feel like some people may take this more seriously than it actually is!:

The part of the privacy policy I mentioned regarding "use, copy, reproduce, process, adapt, modify, publish and display" is included in the policies of every social media site nowadays. That part on its own is not scary, as they have to include it in order to show your content to other users and have it published outside of the site (i.e. embedded on other sites or in news articles).

The scenario I mentioned is pretty unlikely to happen. I highly doubt the site will suddenly start stealing art or other content and using it to pump stuff out all over the web without consent or compensation. I simply mentioned it because the fact that the data is being used to train AI models means that stuff on there may end up being used as references for it at some point, which could then lead to the scenario I mentioned, where people's content becomes the food for new AI content. I don't know for sure how likely that is, but I know many people still don't trust the training of AI, which is why I feel it is important to mention.

I cannot offer professional or foolproof advice to people on the platform who have posted content before, I'm just some guy! I don't wanna make people freak out or anything. If you have content already on the site, chances are it's already floating around somewhere you wouldn't want it. That's, unfortunately, the reality of the internet. You don't have to take down everything you've ever posted or delete your accounts; however, I wouldn't recommend posting new content on the site if you are uncomfortable with the changes.

THIS POST WAS MADE FOR AWARENESS ONLY!! I AM NOT SUGGESTING WHAT YOU SHOULD AND SHOULD NOT DO, I AM NOT RESPONSIBLE!! /lh

TL;DR: I worded the original post slightly poorly. To clarify, the policy being changed to allow for AI training doesn't automatically mean that all your creations will be stolen and recreated with AI or anything, it just means that those creations will be used to teach the AI to make things of its own. If you don't like the sound of that, consider looking into this matter yourself for a more detailed insight.

308 notes

Text

Summary: The Magnus Institute hires a Data Protection Officer during season 3. He sets about diligently booking in meetings, writing policy documents, and training all the staff in the importance of confidentiality. Now if only he could get hold of the Head Archivist, who seems to have vanished again...

(Jon is only trying to save the world, but apparently some people think he should still be doing his day job.)

Author: @shinyopals

Note from submitter: This fic is best read with custom work skins enabled.

#official fic poll#haveyoureadthisfic#pollblr#tumblr polls#fanfiction#fandom poll#fanfic#fandom culture#internet culture#Beholding the GDPR#How the Magnus Institute Updated Its Privacy Policy for the Twenty-First Century And Only Caused One Nervous Breakdown In The Process#the magnus archives#magnus archives#jonmartin#jmart#teaholding#ao3

80 notes

Text

So, I am on my way to a convention dressed as Dr Watson, and I'm hyper aware that the geography of the situation means there's kind of no way for me to complete my journey without encountering Baker Street.

#We're really testing the boundaries of my own self-consciousness this morning#I simultaneously don't really care about what strangers think of me#But also value my privacy#And my general policy on public transport is to try to fade into the distance

29 notes

Text

My New Article at WIRED

So, you may have heard about the whole Zoom “AI” Terms of Service clause public relations debacle going on this past week, in which Zoom decided that it wasn’t going to let users opt out of them feeding our faces and conversations into their LLMs. In 10.1, Zoom defines “Customer Content” as whatever data users provide or generate (“Customer Input”) and whatever else Zoom generates from our uses of Zoom. Then 10.4 says what they’ll use “Customer Content” for, including “…machine learning, artificial intelligence.”

And then on cue they dropped an “oh god oh fuck oh shit we fucked up” blog where they pinky promised not to do the thing they left actually-legally-binding ToS language saying they could do.

Like, Section 10.4 of the ToS now contains the line “Notwithstanding the above, Zoom will not use audio, video or chat Customer Content to train our artificial intelligence models without your consent,” but it still seems that a) the “customer” in question is the Enterprise, not the User, and b) “consent” means “clicking yes and using Zoom.” So it’s Still Not Good.

Well anyway, I wrote about all of this for WIRED, including what Zoom might need to do to regain customer and user trust, and what other tech creators and corporations need to understand about where people are, right now.

And frankly the fact that I have a byline in WIRED is kind of blowing my mind, in and of itself, but anyway…

Also, today, Zoom backtracked Hard. And while I appreciate that, it really feels like Zoom decided to take their ball and go home rather than offer meaningful consent and user control options. That’s… not exactly better, and doesn’t tell me what, if anything, they’ve learned from the experience. If you want to see what I think they should’ve done, then, well… Check the article.

Until Next Time.

Read the rest of My New Article at WIRED at A Future Worth Thinking About

#ai#artificial intelligence#ethics#generative pre-trained transformer#gpt#large language models#philosophy of technology#public policy#science technology and society#technological ethics#technology#zoom#privacy

124 notes

Text

If you're following KOSA, you should also be following state legislation, which is much more rapidly adopting tech regulation related to child safety than Congress. For reference, in 2023, 13 states adopted 23 laws related to child safety online.

Even if your locality hasn't adopted similar tech regulation, online platforms, apps, and websites are rarely operating in only some states. When regulations become patchwork, it's often easier for companies to adopt policies reflective of the most stringent regulations relevant to their service for all users, rather than try to implement different policies for users based on each user's location.

I know this because that's what happened when patchwork data privacy regulations began swelling, which is why many websites have privacy policies reflective of the GDPR that apply even to users outside of Europe. I also know this because I'm a tech lawyer: I'm the wet cat drafting policies for and advising tech and video game companies on how to navigate messy, convoluted, and patchwork US regulatory obligations.

So, when I say this is how companies are thinking about this, I mean this is how my coworkers and I have to think about this. And because the US is such a large market, this could impact users outside the US, too.

#kosa#us law#tech policy#data privacy#regulatorycompliance#sorry if i tricked you into thinking this is a fandom account#this is just my blog of twelve years#and ive seen a lot about kosa#but local us legislation is where most of the movement here is#and there is a trend towards adopting laws and regulations related to minors online#that is rapidly gaining momentum#i love being an internet lawyer but i also love the internet#and so im feeling more and more compelled to talk about how the tectonic plates are shifting fast

27 notes

Text

Sometimes I learn an additional piece of information about the extent to which the United States has traded any semblance of privacy for the illusion of security and I get mad all over again

#i have really the funniest priorities when it comes to my major federal policy issues honestly it's like#privacy. labor rights. nuclear disarmament.#there are others but most of the others are stuff that like. is already being dealt with#also some things fall into these categories that you may not expect#i.e. our immigration policies are dictated by our obsession with security at the expense of privacy#same with... basically everything associated with the military.#frankly we do need to talk about privacy vs security more cuz of my god it touches SO FUCKING MUCH#anyway lmao

24 notes

Text

Usually, I consider Morning Brew an entertaining element of my morning, but not necessarily the most on-topic (for my interests) of the newscasts I listen to.

However, they had a LOT of good topics today, like:

an overview of the tax and welfare elements of the budget that Biden is proposing (understandably, most of the others are focused on the immigration policy), and how it relates to the election

the increasing number of incidents with Boeing, touching on the suspicious death of a whistle-blower

automakers are sharing your personal information with insurance companies without your knowledge (consent was gained in the tiny fine print), and the expected involvement of the FTC

#current events#politics#united states#domestic politics#boeing#taxes#tax policy#privacy violations#ftc#Phoenix Politics

51 notes

Text

Accelerating Smart Home Trends in 2022- Advanced Security

Accelerating Smart Home Trends in 2022: The smart home technology industry keeps growing. The market has expanded enormously, and you will find smart gadgets for every need and space in your home! These trends are no longer confined to expensive houses or to upgrades that might happen somewhere in the future.

The fact is that people are keen on using this…

0 notes

Text

Apparently, if you opt out right now, chances are high that Tumblr won't sell your info, but if you decide to do so later, you have to trust big AI companies to honour your request; there isn't any guarantee or regulation in place for it.

#frown pouts#ai#open ai#tumblr#automattic#third-party sharing#third party sharing#anti ai#staff#privacy#data privacy#policies#opt out#features

42 notes

Text

grindr isn't the only company selling your HIV status

#scruff#jack'd#grindr#read the lack of privacy policy#queer#lgbqti#lgbt#gay app#gay#trans#bi#2018#2024

16 notes

Text

are there literally any ways to take commissions that don't involve the client seeing your legal name? genuine question.

#t#id like to open them again but im a lot stricter about my privacy now.#i know its the policy to look away whistling over legal names but i dont want it to be visible at all. i dont wanna know my clients names.

10 notes
