#structured query language

Structured Query Language (SQL): A Comprehensive Guide

Structured Query Language, commonly called SQL (pronounced "ess-que-ell" or sometimes "sequel"), is the standard language used for managing and manipulating relational databases. Developed in the early 1970s by IBM researchers Donald D. Chamberlin and Raymond F. Boyce, SQL has since become the dominant language for database systems around the world.

Structured query language commands with examples

Today, virtually every major relational database management system (RDBMS), such as MySQL, PostgreSQL, Oracle, SQL Server, and SQLite, uses SQL as its core query language.

What is SQL?

SQL is a domain-specific language used to:

Retrieve data from a database.

Insert, update, and delete data.

Create and modify database structures (tables, indexes, views).

Manage access permissions and security.

Perform data analytics and reporting.

In simple terms, SQL allows users to communicate with databases to store and retrieve structured data.

Key Characteristics of SQL

Declarative Language: SQL focuses on what to do, not how to do it. For example, when you write SELECT * FROM users, you don't need to tell the database how to fetch the data; the engine figures that out.

Standardized: SQL has been standardized by organizations like ANSI and ISO, with most database systems implementing the core language and adding their own extensions.

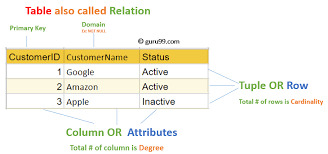

Relational Model-Based: SQL is designed to work with tables (also called relations) in which data is organized in rows and columns.

Core Components of SQL

SQL can be broken down into several main categories of commands, each with a specific function.

1. Data Definition Language (DDL)

DDL commands are used to define or modify the structure of database objects like tables, schemas, indexes, and so on.

Common DDL commands:

CREATE: To create a new table or database.

ALTER: To modify an existing table (add or remove columns).

DROP: To delete a table or database.

TRUNCATE: To delete all rows from a table but keep its structure.

Example:

CREATE TABLE employees (
    id INT PRIMARY KEY,
    name VARCHAR(100),
    salary DECIMAL(10,2)
);

2. Data Manipulation Language (DML)

DML commands are used for data operations such as inserting, updating, or deleting records.

Common DML commands:

SELECT: Retrieve data from one or more tables.

INSERT: Add new records.

UPDATE: Modify existing records.

DELETE: Remove records.

Example:

INSERT INTO employees (id, name, salary)
VALUES (1, 'Alice Johnson', 75000.00);

3. Data Query Language (DQL)

Some experts separate SELECT from DML and treat it as its own category: DQL.

Example:

SELECT name, salary FROM employees WHERE salary > 60000;

This command retrieves names and salaries of employees earning more than 60,000.

4. Data Control Language (DCL)

DCL commands deal with permissions and access control.

Common DCL commands:

GRANT: Give access to users.

REVOKE: Remove access.

Example:

GRANT SELECT, INSERT ON employees TO john_doe;

5. Transaction Control Language (TCL)

TCL commands manage transactions to ensure data integrity.

Common TCL commands:

BEGIN: Start a transaction.

COMMIT: Save changes.

ROLLBACK: Undo changes.

SAVEPOINT: Set a savepoint inside a transaction.

Example:

BEGIN;
UPDATE employees SET salary = salary * 1.10;
COMMIT;
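The example above does not use SAVEPOINT or ROLLBACK. As a rough sketch of how they fit together, the following runs the same kind of transaction against an in-memory SQLite database through Python's standard sqlite3 module. The savepoint name before_bonus and the "mistaken" second update are made up for illustration, and other database systems may spell some of these statements slightly differently.

```python
import sqlite3

# In-memory SQLite database. isolation_level=None puts the connection in
# autocommit mode so that BEGIN / COMMIT / ROLLBACK are issued explicitly.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.execute("INSERT INTO employees VALUES (1, 'Alice Johnson', 75000.00)")

conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary * 1.10")     # intended 10% raise
conn.execute("SAVEPOINT before_bonus")                          # hypothetical savepoint name
conn.execute("UPDATE employees SET salary = salary + 1000000")  # a deliberate mistake
conn.execute("ROLLBACK TO before_bonus")                        # undo only the mistake
conn.execute("COMMIT")                                          # keep the raise

final_salary = conn.execute(
    "SELECT salary FROM employees WHERE id = 1"
).fetchone()[0]
print(round(final_salary, 2))
```

Rolling back to the savepoint discards only the work done after it, so the raise survives the COMMIT while the mistaken update does not.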

SQL Clauses and Syntax Elements

WHERE: Filters rows.

ORDER BY: Sorts results.

GROUP BY: Groups rows sharing a property.

HAVING: Filters groups.

JOIN: Combines rows from two or more tables.

Example with JOIN:

SELECT employees.name, departments.name
FROM employees
JOIN departments ON employees.dept_id = departments.id;
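The other clauses listed above (WHERE, GROUP BY, HAVING, ORDER BY) are easiest to understand together in one query. This is a minimal sketch using Python's built-in sqlite3 module; the dept column and the sample rows are invented for the example and are not part of the article's schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept TEXT, salary REAL);
INSERT INTO employees VALUES
  (1, 'Alice', 'Engineering', 90000),
  (2, 'Bob',   'Engineering', 70000),
  (3, 'Cara',  'Sales',       50000),
  (4, 'Dan',   'Sales',       40000),
  (5, 'Eve',   'Support',     30000);
""")

rows = conn.execute("""
    SELECT dept, AVG(salary) AS avg_salary
    FROM employees
    WHERE salary > 35000          -- WHERE filters individual rows first (drops Eve)
    GROUP BY dept                 -- one output row per remaining department
    HAVING AVG(salary) > 60000    -- HAVING filters whole groups (drops Sales)
    ORDER BY avg_salary DESC      -- highest average first
""").fetchall()
print(rows)
```

The key difference is timing: WHERE is applied before the rows are grouped, while HAVING is applied to the groups afterwards.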

Types of Joins in SQL

INNER JOIN: Returns records with matching values in both tables.

LEFT JOIN: Returns all records from the left table, and matched records from the right.

RIGHT JOIN: Opposite of LEFT JOIN.

FULL JOIN: Returns all records when there is a match in either table.

SELF JOIN: Joins a table to itself.
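To make the difference between INNER JOIN and LEFT JOIN concrete, here is a small sketch using Python's sqlite3 module. The sample data is invented: Bob has no department, so he only appears in the LEFT JOIN result.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept_id INTEGER);
INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
INSERT INTO employees VALUES (1, 'Alice', 1), (2, 'Bob', NULL);
""")

# INNER JOIN keeps only employees that have a matching department.
inner_rows = conn.execute("""
    SELECT employees.name, departments.name
    FROM employees
    INNER JOIN departments ON employees.dept_id = departments.id
    ORDER BY employees.id
""").fetchall()

# LEFT JOIN keeps every employee; missing departments come back as NULL (None).
left_rows = conn.execute("""
    SELECT employees.name, departments.name
    FROM employees
    LEFT JOIN departments ON employees.dept_id = departments.id
    ORDER BY employees.id
""").fetchall()

print(inner_rows)
print(left_rows)
```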

Subqueries and Nested Queries

A subquery is a query inside another query.

Example:

SELECT name FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);

This finds employees who earn more than the average salary.

Functions in SQL

SQL includes built-in functions for performing calculations and formatting:

Aggregate Functions: SUM(), AVG(), COUNT(), MAX(), MIN()

String Functions: UPPER(), LOWER(), CONCAT()

Date Functions: NOW(), CURDATE(), DATEADD()

Conversion Functions: CAST(), CONVERT()
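A short sketch of a few of these functions, run on SQLite through Python's sqlite3 module. It sticks to functions that SQLite is known to support (the aggregates, UPPER, LOWER, and CAST); the date functions and CONVERT() listed above are vendor-specific and are left out here on purpose. The sample rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL);
INSERT INTO employees VALUES (1, 'Alice', 75000), (2, 'Bob', 65000);
""")

# Aggregate functions collapse many rows into a single summary row.
totals = conn.execute(
    "SELECT COUNT(*), SUM(salary), AVG(salary), MAX(salary), MIN(salary) FROM employees"
).fetchone()

# String and conversion functions are applied to each row individually.
formatted = conn.execute(
    "SELECT UPPER(name), LOWER(name), CAST(salary AS INTEGER) FROM employees ORDER BY id"
).fetchall()

print(totals)
print(formatted)
```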

Indexes in SQL

An index is used to speed up searches.

Example:

CREATE INDEX idx_name ON employees(name);

Indexes help improve the performance of queries involving large datasets.
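One way to see an index being picked up is to ask the database for its query plan before and after creating it. The sketch below does this in SQLite via Python's sqlite3 module, using SQLite's EXPLAIN QUERY PLAN statement; the generated employee names and the plan helper are invented for illustration, and the exact wording of the plan text varies between SQLite versions and between database systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [(f"employee_{i}", 40000 + i) for i in range(1000)],
)

query = "SELECT * FROM employees WHERE name = 'employee_500'"

def plan(sql):
    # Hypothetical helper: the last column of each EXPLAIN QUERY PLAN row
    # is a human-readable description of one step of the plan.
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

plan_before = plan(query)   # no index yet, so the table has to be scanned
conn.execute("CREATE INDEX idx_name ON employees(name)")
plan_after = plan(query)    # the plan should now mention idx_name

print(plan_before)
print(plan_after)
```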

Views in SQL

A view is a virtual table created by a query.

Example:

CREATE VIEW high_earners AS
SELECT name, salary FROM employees WHERE salary > 80000;

Views are useful for:

Security (hide certain columns)

Simplifying complex queries

Reusability

Normalization in SQL

Normalization is the process of organizing data to reduce redundancy. It involves breaking a database into multiple related tables and defining foreign keys to link them.

1NF: No repeating groups.

2NF: No partial dependency.

3NF: No transitive dependency.
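As an informal illustration of the idea (not a formal demonstration of any particular normal form), the sketch below stores each department name once in its own table and links employees to it with a foreign key. It uses Python's sqlite3 module; the tables and rows are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when enabled
conn.executescript("""
CREATE TABLE departments (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE employees (
    id      INTEGER PRIMARY KEY,
    name    TEXT NOT NULL,
    dept_id INTEGER NOT NULL REFERENCES departments(id)
);
INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
INSERT INTO employees VALUES (1, 'Alice', 1), (2, 'Bob', 1), (3, 'Cara', 2);
""")

# The department name lives in one place, so renaming it is a single-row update.
conn.execute("UPDATE departments SET name = 'R&D' WHERE id = 1")
rows = conn.execute("""
    SELECT employees.name, departments.name
    FROM employees
    JOIN departments ON employees.dept_id = departments.id
    ORDER BY employees.id
""").fetchall()

# The foreign key rejects an employee that points at a department that does not exist.
try:
    conn.execute("INSERT INTO employees VALUES (4, 'Dan', 99)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print(rows)
print(rejected)
```

Had the department name been repeated on every employee row instead, the rename would have needed to touch every matching row, which is exactly the kind of redundancy normalization is meant to avoid.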

SQL in Real-World Applications

Web Development: Most web apps use SQL to manage users, sessions, orders, and content.

Data Analysis: SQL is widely used in data analytics platforms like Power BI, Tableau, and even Excel (through Power Query).

Finance and Banking: SQL handles transaction logs, audit trails, and reporting systems.

Healthcare: Managing patient records, treatment histories, and billing.

Retail: Inventory systems, sales analysis, and customer data.

Government and Research: For storing and querying large datasets.

Popular SQL Database Systems

MySQL: Open-source and widely used in web apps.

PostgreSQL: Advanced capabilities and standards compliance.

Oracle DB: Commercial, highly scalable, enterprise-grade.

SQL Server: Microsoft’s relational database.

SQLite: Lightweight, file-based database used in mobile and desktop apps.

Limitations of SQL

SQL can be verbose and complex for certain operations.

Not ideal for unstructured data (NoSQL databases like MongoDB are better suited).

Vendor-specific extensions can reduce portability.

2 notes

Text

0 notes

Note

Is it ethical to use Chat GPT or Grammarly for line editing purposes? I have a finished book, 100% written by me and line edited by me already--and I do hope to get it traditionally published. But I think it could benefit from a line edit from someone who isn't me, obviously, before querying. But line editing services run $3-4k for a 75k book, which is beyond my budget.

I was chatting with someone recently who self-publishes. They said they use Chat GPT Plus to actually train a model for their projects to line edit using instructions like (do not rewrite or rephrase for content /edit only for rhythm, clarity, tone, and pacing /preserve my voice, sentence structure, and story intent with precision). Those are a few inputs she used and she said it actually worked really well.

So in that case, is AI viewed in the same way you'd collaborate with a human editor? Or does that cross ethical boundaries in traditional publishing? Like say for instance AI rewords your sentence and maybe switches out for a stronger verb or adjective or a stronger metaphor--is using that crossing a line? And if I were to use it for that purpose, would I need to disclose that? I know AI is practically a swear word among authors and publishers right now, so I think even having to say "I used AI tools" might raise eyebrows and make an agent hesitant during the querying process. But obviously, I wouldn't lie if it needs to be disclosed... just not sure I even want to go there and risk having to worry about that. Thoughts? Am I fine? Overthinking it?

Thanks!

I gotta be honest, this question made me flinch so hard I'm surprised my face didn't turn inside out.

Feeding your original work into ChatGPT or a similar generative AI large language model -- which are WELL KNOWN FOR STEALING EVERYTHING THAT GETS PUT INTO THEM AND SPITTING OUT STOLEN MATERIAL-- feels like, idk, just a terrible idea. Letting that AI have ANY kind of control over your words and steal them feels like a terrible idea. Using any words that a literal plagiarism-bot might come up with for you feels like a terrible idea.

And ethical questions aside: AI is simply not good at writing fiction. It doesn't KNOW anything. You want to take its "advice" on your book? Come on. Get it together.

Better idea: Get a good critique group that can tell you if there are major plot holes, characters whose motivations are unclear, anything like that -- those are things that AI can't help you with, anyway. Then read Self-Editing for Fiction Writers -- that info combined with a bit of patience should stand you in good stead.

Finally, I do think that using spell-check/grammarly, either as you work or to check your work, is fine. It's not rewriting your work for you, it's just pointing out typos/mistakes/potential issues, and YOU, PERSONALLY, are going through each and every one to make the decision of how to fix any actual errors that might have snuck in there, and you, personally, are making the decision about when to use a "stronger" word or phrase or recast a sentence that it thinks might be unclear or when to stet for voice, etc. Yes, get rid of typos and real mistakes, by all means!

(And no, I don't think use of that kind of "spell-check/grammar-check" tool is a problem or anything that you need to "disclose" or feel weird about -- spell-check is like, integrated into most word processing software as a rule, it's ubiquitous and helpful, and it's different from feeding your work into some third-party AI thing!)

343 notes

Text

AI continues to be useful, annoying everyone

Okay, look - as much as I've been fairly on the side of "this is actually a pretty incredible technology that does have lots of actual practical uses if used correctly and with knowledge of its shortfalls" throughout the ongoing "AI era", I must admit - I don't use it as a tool too much myself.

I am all too aware of how small errors can slip in here and there, even in output that seems above the level, and, perhaps more importantly, I still have a bit of that personal pride in being able to do things myself! I like the feeling that I have learned a skill, done research on how to do a thing and then deployed that knowledge to get the result I want. It's the bread and butter of working in tech, after all.

But here's the thing: once you move beyond beginner-level Python courses and well-documented Windows applications, there will often be times when you will want to achieve a very particular thing, which involves working with a specialist application. This will usually be an application written for domain experts of this specialization, and so it will not be user-friendly, and it will certainly not be "outsider-friendly".

So you will download the application. Maybe it's on the command line, has some light scripting involved in a language you've never used, or just has a byzantine shorthand command structure. There is a reference document - thankfully the authors are not that insane - but there are very few examples, and none doing exactly what you want. In order to do the useful thing you want to do, they expect you to understand how the application/platform/scripting language works, to the extent that you can apply it in a novel context.

Which is all fine and well, and normally I would not recommend anybody use a tool at length unless they have taken the time to understand it to the degree at which they know what they are doing. Except I do not wish to use the tool at length, I wish to do one, singular operation, as part of a larger project, and then never touch it again. It is unfortunately not worth my time to sink a few hours into learning a technology that I will use once for twenty seconds and then never again.

So you spend time scouring the specialist forums, pulling up a few syntax examples you find randomly of their code and trying to string together the example commands in the docs. If you're lucky, and the syntax has enough in common with something you're familiar with, you should be able to bodge together something that works in 15-20 minutes.

But if you're not lucky, the next step would have been signing up to that forum, or making a post on that subreddit, creating a thread called "Hey, newbie here, needing help with..." and then waiting 24-48 hours to hear back from somebody, probably some years-deep veteran looking down on you with scorn for not having put in the effort to learn their Thing, setting aside the fact that you normally have no reason to. It's annoying, disruptive, and takes time.

Now I can ask ChatGPT, and it will have ingested all those docs, all those forums, and it will give you a correct answer in 20 seconds about what you were doing wrong. Because friends, this is where a powerful attention model excels, because you are not asking it to manage a complex system, but to collate complex sources into a simple synthesis. The LLM has already trained in this inference, and it can reproduce it in the blink of an eye, and then deliver information about this inference in the form of a user dialog.

When people say that AI is the future of tutoring, this is what it means. Instead of waiting days to get a reply from a bored human expert, the machine knowledge blender has already got it ready to retrieve via a natural language query, with all the followup Q&A to expand your own knowledge you could desire. And the great thing about applying this to code or scripting syntax is that you can immediately verify whether the output is correct by running it and seeing if it performs as expected, so a lot of the danger is reduced (not that any modern mainstream attention model is likely to make a mistake on something as simple as a single-line command unless it's something barely documented online, that is).

It's incredibly useful, and it outdoes the capacity of any individual human researcher, as well as the latency of existing human experts. That's something you can't argue we've ever had better before, in any context, and it's something you can actively make use of today. And I will, because it's too good not to - despite my pride.

130 notes

Text

Sitting On Your Lap Headcanons (Demon Slayer Edition)

Imagine you're taller and more muscular than the others and you pulled them into your lap for comfort.

Rengoku Kyojuro: As Hashira, your schedules are always unaligned with each other, making every moment precious to both of you. And as someone who uses physical affection as a love language, you get grumpy not being in the arms of your favorite flame hashira for a long period. Once you get some time together, you're practically shining with joy; Kyojuro announcing his arrival to your estate after a long mission outside the region and offering a bento as a returning gift. As your last mission exhausted your strength, there's nothing better to do but sit down and eat with your boyfriend whilst he's "UMAI"-ing. Soon after dinner, you pull him into your lap; with a taller, more structured stature, you're able to lift heavy objects with ease. Kyojuro was practically like coddling a lion. Hands slithering around his waist, your head nuzzling the juxtaposition of his neck and collarbone. The scent of paprika, grapefruit, and charcoal seduces your nose as his hair titillated your cheek. He's so warm, like a heated teddy bear. Kyojuro breathed a chuckle at his current position; the irony of seeing a person larger and more brawny than him acting so soft and gentle is quite comedic. He loves it though, and he understands your fatigue. The both of you worked hard, especially this week. A break was in order, and by the gods above he will cherish this moment of peace as he fiddled around to a comfortable position for you.

Uzui Tengen: Obviously, this man knows a thing or two about lap sitting. Each of his wives gets pulled at least three times a week. He'd hear about it if it's less. It's the same with the wives; during moments of downtime, you'll find Tengen's relaxed form lying comfortably on one of their thighs. Mainly Hina, but Makio and Suma get their time in the spotlight too. When you became the fourth wife of the Festival God, something inside you heightened. Your anxiety. Your romantic anxiety. Hina, Makio, and Suma have all been married to him longer than you, which means more experience, which means more attraction between them, which means more affection between them - get where I'm going? And it didn't help when the three of them gawked at your tall, broad form. Well, Makio and Suma did; Hina was more intrigued than scared, but it didn't help! However, after you got used to the customs of polygamy and flamboyance, you started to feel like part of the family. Cuddle piles didn't feel like a can of sardines, and routine lap sitting became a new tradition within the family. That all started with you, of course, being the physically affectionate one besides Tengen. It was an accident, you swear! Your subconscious just pulled the flamboyant man to your lap without question. Even Tengen didn't realize what happened the moment you did it. An outing among the Sakura trees with the family turned into a little mishap once Tengen sensed his seat was magically cushioned by your meaty thighs. A shit-eating grin plastered over his face as he teased you about your subtle act, querying your ulterior motives. Your blushing form only increases this man's smugness; you're a clingy one aren't ya? The girlies are ✨shooketh✨, well only Makio and Suma once again, Hina's chuckling at the sight, but the outing continued as usual. Now, Makio and Suma are fighting over a spot on your lap.

Tomioka Giyu: Doesn't know the slightest bit of affection. It's been years...he can't even hug properly anymore. His trauma crippled him so hard that the slightest touch of affection stops his heart longer than consecutive sneezes. However, despite his disability, Giyu is a good learner. A slow learner, but a good one. You already knew about his backstory as he trauma-dumped on you one night, so you became wary of your love language around him. Starting with gentle hand-holding to brushing shoulders to resting heads upon shoulders; smaller, subtle acts of physical affection. Giyu accepts each little act and tries TRIES to reciprocate them the best he can but he's so stiff! It's hard for him to rest his head on your shoulder since it's practically two stories above him. A normal hug stuffs his face into your stomach while you're petting his hair like a child. And it really doesn't help when Giyu looks up at you with his deadpan expression. One time, after a long mission away from home, you hobbled your way to his estate in fatigue and affection deprivation. Of course, the Water Hashira was hiding away at his home and had welcomed your arrival from the exhausting mission. In the blink of an eye, you swept this man off his feet with a beefy arm underneath his thighs and his head lodged into your neck, cradling him like a baby. Giyu's mind was fucking fried at that point. Just wide-eyed and motionless. He thought something had happened while you were away, right after he composed himself from the sudden embrace. Once the groundwork was laid, the exchange of affection flowed like a simple stream. Giyu's confidence grew a little as he started to initiate things, you're giving him more hugs and kisses now and then. The two of you built a solid foundation of love that you could stand on together. And yet that evening threw the man for a loop. 
Another exhausting mission led your heavy body to the Water Hashira's estate again, where the recluse was on the floor practicing his calligraphy in the dark with a single lantern. Giyu greeted your presence, asking if you were alright, and you responded with a simple "yes". Your eyebags were enough for Giyu to know you weren't completely "alright" and that food and rest were in order. However, something halted him. Picked him up. Plopped him on a cushion and ensnared him in a tight coil. His waist was wrapped and his back was heated, along with his face. Comprehending what happened, it took him a minute to realize that the culprit was his partner, and that he could no longer move from this position. Shit, something happened again. He can't even turn to face you; your face is smushed into his hair. Giyu wanted to pose a question, but even his tongue was tied. Oh well, maybe later tonight he'll ask.

Shinazugawa Sanemi: It's no surprise that Sanemi avoids any sense of weakness or dependence. A hug was a symbol of strength deprivation, a sign of frailty. He's a Hashira for fuck's sake, the last thing he needs is him laying in the arms of another person. This has really strained your relationship with the Wind Hashira. While you may not be the Love Hashira, physical affection is the only way you can express your attraction to Sanemi. No amount of compliments on his looks or physique could compensate for the chance to hold his rugged hands. His independence is as unyielding as his training style, which you interpret as "keep your hands to yourself." Your hands tremble as the two of you watch the sunset after finishing sparring.You long to hold him, to sit him on your lap and run your fingers through his hair. But you can't; to him, an embrace is like waving a white flag. Or so you thought...In private, Sanemi reveals a side you've never expected to see since your first meeting at the semi-annual Hashira gathering. When you're home, the albino spares some time for you to share a meal or just escape from the others. He may never say it outright, but Sanemi finds comfort in you and your presence. He doesn't just respect you; he admires you as both a comrade and a partner. Just keep that between us! After a blunt confession one time, Sanemi would throw an arm around you, kiss you, and hold you despite your larger frame. He needs this. He'll never express it directly, but he craves something to hold onto, and you provide the patience he needs. That gives you the opportunity to sit him on your lap. At first, Sanemi protests, asking what the heck you're doing and why you're treating him like a babydoll, then he'll squirm and demand you let him go, before eventually resigning to his fate, grumbling as he adjusts himself. You squeeze him tighter, prompting him to growl at you to stop before you break his spine. Then you call him pretty, which leaves him flustered.

Kocho Shinobu: We all love our petite Insect Hashira. Known for her quick wit and sharp mind, she could make pure nonsense sit up straight. You loved her disposition; you don't see why everyone's annoyed by her quips, they're funny! As much as she's a wind-up merchant, she's talented in her work and equally as strong as the other Hashira. God did you fall hard for her the moment she quipped about your figure in comparison to your mind. How your muscle mass seemed more prepotent than your own brain. All because you swapped her blade for yours on accident. And yet, getting berated by her made you shiver with delight. A kilig and a wanton. You were starving and she gave you bread crumbs until finally she reciprocated your feelings. From a glance, your relationship seemed like a comedic juxtaposition. The Big Guy Little Guy comedy duo. Of course, you're not a complete oaf, but as a human, you tend to make silly mistakes, and Shinobu picks up the pieces of your messes. In return, you give her full submission of yourself. Whipped and wrapped around her tiny finger. As the head of the infirmary and a Hashira, Shinobu gets very little time with you as a growing number of demon slayers hobble up to her doorstep missing limbs and covered in blood, both theirs and not theirs. The whole solar system must be aligned in order for both of you to have downtime together. When you do, a chat over dinner allows the two of you to catch up on each other's health and daily life. Shinobu tells you the horrors of her missions and the infirmary whilst you reply with your own scary stories on the job. Once you've finished your food, the rest of the evening is yours to keep until the break of dawn, or until another group of demon slayers piles up at the Butterfly Estate. Your eyes go from watching the stars to gazing upon the beautiful Papillon. The cheeky Thumbelina quips that you're staring again, smiling at your lovestruck sight. 
You blush, then later grab her waist and plop her on your lap, hiding your red face into her hair to see if it would cool off. It's humorous to look at; seeing Shinobu sitting on your lap like a throne of flesh and muscle. Just like the queen you treat her as. Giggles emit from her, quipping yet again about how clingy you are. You're making her miss you too much. And yet that is what she loves the most.

Iguro Obanai: While Giyu's reclusiveness was due to his introversion, Obanai's was due to just sheer disinterest and enmity towards the others, except for Sanemi and Mitsuri. He too eschewed weakness like his closest comrade, making your relationship strained. How he always presented himself made you believe you were on his hit list, even though you treated him with respect. Though he never said---or looked---like he despised you; he's seen your breathing style, your talent, and your hard work. You've trained and sparred together. He even complimented you once. Back then, it was hard to decipher him. The animosity he has grows within him like snake venom, ready to be spewed through his sharp tongue at the dilettante. But he never spat on you, which once again threw you for a loop. It wasn't until you saw the Serpent Hashira leaving at your doorstep after placing something down. You caught him before he left the lot and asked about his presence and his present. Obanai merely said he was just delivering a parcel, nothing more. Though his scaly companion, Kaburamaru, spoke different words as he nipped his friend on the cheek in displeasure. You knew the snake was venomous, so you started to worry about your fellow comrade. Obanai settles you down saying that he's immune to venom. You took a breath of relief. A beckoning hand and an open door symbolize your want for him to come inside and resume the conversation. Obanai---with the glare of Kaburamaru---reluctantly took your welcome, taking the parcel with him. Once inside, the package was given to you again, followed by an explanation of "It's nothing special". Revealing the package inside was a simple necklace. Nothing special in Obanai's gorgeous eyes. You, however, treasured it dearly, wearing it immediately. Believing that was settled, Obanai began to leave in haste. But he just got here, he should at least stay for a bit. Perhaps dinner? No, he ate already. Rest? The Serpent Hashira stopped in his tracks. 
Fine, he'll rest. For a bit. It was then you dragged him down to your lap. You absolutely did not realize how short the man was. For some, it's laughable, to find a male so strong to be so short in stature. For you, you didn't mind. More reasons to plop him into your lap. Speaking of which, the serpentine swordsman seems to be shaken by the situation. He's flustered, protesting to be off your lap this instant. But once again, Kaburamaru changes his mind as he slithers up your chest to your neck, locking the both of you in place. He's trapped, Obanai thought, and yet, he doesn't seem to mind.

Kanroji Mitsuri: Mitsuri loves everyone and everything. Of course, she has her dislikes, which are common among regular folks: demons, murderers, abusers, and people who don't respect others for who they are. The list goes on, but you get the idea. Otherwise, she loves everything, and she loves you! She adores your strength, your chiseled abs, the contours of your muscles, your unwavering kindness, and the way you carry yourself high despite the many glares and raised brows at your form. Not to mention, you're a real sweetheart. That list continues as well. Mitsuri absolutely cherishes you. So when you heard her swooning over how you soothe a crying baby during a mission together, your heart raced. She likes you; you blushed—she really likes you. Just hearing the sound of her voice makes you swoon too. You two have always been partnered up, which forced you to bond during missions. You cherished it, though; every moment you watched Mitsuri dispatch demons felt like the most cinematic scene of your life. The woman is a queen, and you're happy to kiss the ground she walks on. To show your admiration for her, you decided to cook a feast fit for a goddess. The finest ambrosia for her loveliness: Sakura Mochi, with a side of everything else. You've already invited her over, so you needed to set up quickly. She'll be back from her mission soon, and she'll definitely have an appetite. *Knock knock knock* Shit! She came back early. No matter; you just finished up and rushed to the door. Mitsuri, despite the small bags forming under her beautiful eyes, was ecstatic! You invited her over AND prepared a gift; she was giddy just thinking about it. After exchanging greetings and hugs, it was time to feast! The steam of warm, delicious food filled the room. Mouthwatering morsels gathered on the table like a communion. Mitsuri hugged you a third time, squeezing you as she squealed in delight. The two of you chatted whilst you ate together. 
Her smaller form sat in your lap with glee, blushing at the meaty, comfortable thighs beneath her and the warm muscular arms wrapped around her waist. And when you confessed your love for her, she's tangled in your arms yet again.

Tokito Muichiro:

Let's say you're both the same age.

The Mist Hashira was an estranged member of the corps. Distant, dazed, a little aloof. In the beginning, he didn't really acknowledge you much; just another comrade that was his age. Your time there allowed you to interact with Muichiro a lot since he's the only one close to your age. He never shoos you away when you join him for cloud gazing. You don't even know if he acknowledges your presence. You thought he just ignored you. Until, he started talking to you. You'd never heard his voice, but God, you fell in love with it. It sounded like nostalgia; mellow and soothing yet mysterious. He called you an ox; mighty and diligent yet patient. You weren't sure if it was a compliment or an insult. His deadpan expression made it hard to tell. You wanted to hear his voice more, that sonnet of serenity. And once you saw the sheer height difference between him and the other Hashira, you wanted to cradle the boy. Swaddle him in blankets like a baby. But of course, he'll never let you do that. Until the incident at the Swordsmiths' Village. Where he truly acted his age. Muichiro's eyes shimmered like Croatian Blue Grottos. A smile graced his face when you visited him in the infirmary, greeting you like an old friend. The two of you interacted more often; inviting you to his home for origami and tea or competitive paper plane throwing. But your favorite thing to do together is cloud gazing, especially at dusk. When the sun was right and the temperature was warm, you sat at the entrance of his estate watching the clouds go by. Muichiro was inside taking a bath after a mission. Eventually, he returned to you, freshly clean and dressed in his casual yukata. He joined you at the side for a bit until you decided to take the small boy into your lap. You held him ever so softly. Caressed his gorgeous hair and kissed his forehead whilst calling him pretty and cute. He's so tiny compared to you; seeing his hands engulfed by yours made you squeal internally. 
You couldn't see it, but Muichiro's flustered stammer indicated his embarrassed state. You loved your little Mui so much.

#demon slayer kimetsu no yaiba#demon slayer#kimetsu no yaiba#kny hashira#hashira headcanons#rengoku kyoujuro#giyuu tomioka#sanemi shinazugawa#iguro obanai#obanai iguro#shinobu kocho#mitsuri kanroji#muichiro tokito#muichiro tokito x reader#demon slayer mitsuri#kny mitsuri#mitsuri x reader#mitsuri x you#tengen uzui#kny tengen#tengen x wives x reader#demon slayer tengen#tengen x reader#demon slayer obanai#obanai x reader#kny obanai#kyojuro rengoku#rengoku kyojuro#rengoku x reader#kyojuro rengoku x reader


Text

masterlist of places to submit creative writing

it's intimidating thinking about submitting your precious work to judgement, but all the rejections are worth it when you finally get that one glowing acceptance email that puts your anxieties and impostor syndrome to bed. but where do you submit? it can be incredibly overwhelming trying to find the right sites/journals/zines to submit to so i thought i'd create a little collection of places i have found to submit to and i will update it whenever i find new discoveries.

PROSE ONLY

The Fiction Desk

They consider stories between 1k words and 10k words, paying 25 GBP per thousand words for stories they publish and contributors receive two complimentary paperback copies of the anthology. (A submission fee of 5 GBP for stories which sucks)

Extra Teeth

Works of fiction and creative nonfiction between 800 and 4,000 words receive a 140 GBP payment upon publication in the magazine as well as two copies that feature your work. If your work is selected to be published online, you get 100 GBP instead. A Scottish based publication that also offers mentorships to budding writers. (Free)

Clarkesworld

Fantasy and sci-fi magazine accepting submissions of fiction from 1k to 22k words, paying 14 cents per word. Make sure you read their submissions page carefully, it gives you a good idea of what they're looking for and what will get you one of those disheartening rejection emails. (Free)

Granta

Open to unsolicited submissions of fiction and non-fiction. Unfortunately they do charge a 3.50 GBP fee for prose submissions, but they do offer 200 free submissions during every opening period (1 March - 31 March, 1 June - 30 June, 1 September - 30 September, 1 December - 31 December) to low income authors. No set minimum or maximum length, but most accepted works fall within 3,000 and 6,000 words.

Indie Bites

A fantasy short fiction publisher looking for clever hooks, strong characters and interesting takes on their issues' themes. Submissions should be no longer than 7,500 words. You get an honorarium of 5 GBP for each piece of yours that they publish - it's not much, but yay money! (Free)

Big Fiction

Novella publishers (7,500-20,000 words) looking for self-contained works of fiction that play with things like the linearity of narratives, perspective, structure and language. (Free)

Strange Horizons

Employing a broad definition of speculative fiction, they offer 10 cents a word for spec fiction up to 10,000 words but preferably around 5,000. (Free)

Fantasy and Science Fiction

They publish fiction up to 25,000 words in length, offering 8-12 cents per word upon publishing. (Free)

Fictive Dream

Short stories from 500 words to 2,500. They want writing with a contemporary feel that explores the human condition. (Free)

POETRY AND PROSE

eunoia review

Up to 10 poems in a single attachment, up to 15,000 words of fiction and creative non-fiction (can be multiple submissions amounting to that or a single piece). It's free to submit to, and they respond in 24 hours (I can vouch for that).

Confingo Magazine

Stories up to 5,000 words of any genre and poems (a max of three) up to 50 lines. Free to submit to and offer a 30 GBP payment to authors whose work is accepted.

Grain Magazine

Another Canadian based publication also supportive of marginalised identities. They accept poems (max. of six pages), fiction (max. of 3,500 words) or three flash fiction works that total 3.5k, literary nonfiction (3,500 words) and queries for works of other forms. All contributors are paid 50 CAD per page to a max of 250. Authors outside of Canada will need to pay a 5 CAD reading fee but they do offer a limited number of fee waivers if this impacts your ability to submit.

BTWN

An up-and-coming lit mag looking for diverse works that play with genres, break the rules and are a little weird. They want what typical lit mags reject. Stories up to 7,000 words, non-fiction up to 7,000 words and up to 4 poems totalling no more than 10 pages, hybrid work, comics/graphics up to 5 pages, original periodicals up to 14,000 words of prose or 20 pages of poetry. (Free)

Gutter

Accepting submissions in spring and autumn, they want work that challenges, re-imagines or undermines the status quo and pushes at the boundaries of form and function. If your contribution is chosen, you get 30 GBP for your work as well as a complimentary copy of the issue. Up to three poems (no more than 100 lines), fiction and essays (up to 2,500 words).

Whisk(e)y Tit

This one's worth checking out just for their logo. They're looking for fiction whether it's short stories, flash fiction or novel excerpts up to 7,000 words, up to 5 poems, up to 7,000 word essays, screenplays and stage plays (can be full works or excerpts up to 20 pages). (Free)

FOR QUEER AND MARGINALISED WRITERS

Plenitude magazine

A queer-focused Canadian literary magazine accepting poetry, fiction and creative non-fiction. They define queer literature as literature created by queer people. (Free)

Lavender Review

Poetry written by and for lesbians. An annual Sappho's Prize in Poetry takes place every October. (Free)

AC|DC

"A journal for the bent", always open for submissions from queer writers of all experience levels. They lean towards dark and raw writing but are open to everything as long as it's not over 3,000 words. (Free)

Sinister Wisdom

A literary and art journal for lesbians of every background. They accept poetry (up to 5), two short stories or essays OR one longer piece (not exceeding 5,000 words), as well as book reviews (these must be pitched before they are submitted). (Free)

Queerlings

Open annually from Jan 1st to March 31st they publish short stories of any genre (up to 2,000 words), flash fiction/hybrid work (500 words), poetry (up to 3 poems per submission with a 20 line maximum on each) and creative non-fiction (2,000 words) written by queer writers. (Free)

underdog lit mag

Based in the UK, they focus on amplifying emerging and underrepresented writers. If you're female, POC, LGBTQ+, working-class or all of the above with a story of 100-3,500 words that fits their flavour of the month (the last flavour was Magical Realism) send it their way! (Free)

fourteen poems

London-based poetry publishers looking for the most exciting queer poets. You can send up to five emails to them within their deadlines and you get 25 GBP for every poem published.

Froglifter Journal

A press publishing the most dynamic and urgent queer writing. Poets send in 3 to 5 poems (max. 5 pages), writers send in up to 7,500 words of fiction or non-fiction or three flash fiction pieces, and cross-genre creators send in up to 20 pages within the submission windows March 1 to May 1 and September 1 to November 1. (Free)

OTHER SOURCES

Short Stories: X | X | X

Poetry: X

#sjlwrites#got overwhelmed just compiling all the bookmarks for places to submit i have so i know how overwhelming it can be looking for somewhere#i hope this makes it a little bit more manageable for those looking to get their work published#and maybe this will inspire someone with a couple of short stories/poems in their back pocket to seek publication#the world can always use new writers!!!!#especially ones who pour their humanity into their work now that some people are trying to outsource creation to AI#writing#writers#writers on tumblr#writeblr#short story#poetry#poem#publishing


Text

VIII. ~Survival~

Summary: You were determined to survive longer than anyone, even if you were set to marry him.

Genre: Historical AU, angst, mature, suggestive, arranged-marriage

Warnings: Dark themes, gore, graphic imagery, theme/depictions of horror, swearing/language, suggestive, pet names (Little Flower used 5-6x), implied harsh parenting (on Sukuna's end), mentions of adult murder, implications of impregnating, implied Stockholm Syndrome, images/depictions of dead bodies (both human and animal), child death/murder, character death(s), slight misogynistic themes (if you squint), NOT PROOFREAD YET (sorry ;-;)

Word Count: 6.5k

A/N: For starters, I want to clarify that I am choosing to purposely not mention the names of the twins. Although this makes it difficult on my end, I wanted you, the reader, to decide on the names of your choosing while reading.

P.S. This is the longest chapter I have written. Sorry it took so long but I hope it proves well and worth the wait. (╥﹏╥)

JJK Mlist • Taglist Rules • Pt. I • Pt. II • Pt. III • Pt. IV • Pt. V • Pt. VI • Pt. VII • Pt. VIII • Pt. IX

You could see the fire, smell the blood, and hear their screams as they begged for mercy. They cried out for their children and loved ones whose bodies were now burning in the roaring flames, reduced to cinders and ashes. Those who threatened to charge were killed before they could make contact, their body contorting in ways the human form was incapable of, causing cries of pure agony as they were left to bleed out in their mangled state– they were left to suffer in their pain as the life slowly drained out of them. If a suffering soul was fortunate, the fire would catch them aflame and kill them faster, or debris would land in a fatal spot or crush them whole to end their misery.

Viewing the demolished structures and flaming bodies, both dead and alive, was a petrifying view– yet you felt nothing. Your breath was methodical, your expression blank, your body unmoving. Pity and remorse were thrown out the window– fear and anguish had long vanished; however, anger and resentment lingered like a tiny flickering flame that continued to grow with each crumble and cry that could be heard.

Although your exterior appearance seemed calm and collected, your heartbeat said otherwise as it accelerated, pounding against your chest so hard you could eventually drown out the hollers of distress with its rapid thumping.

“Mama, look!” Two voices sounded.

Your breath hitched as the familiar calls rang through your head. The pounding in your chest quickened and strengthened when the footsteps got closer. Hearing their giggles and whispers caused your form to tense– not having the strength to say or do anything. How would you explain your current position? How would you tell them tha-

“Mama, are you alright?”

You snapped out of your daydream to see you were in front of the stream, taking care of your personal tasks, this chore being the cleansing of garments. The query of when you arrived there was unknown, but you would assume it had been for way longer than you should have resided in that area. The dreams you would endure during the solace of night, despite those nights being anything but comforting, had begun bleeding into the day and becoming more prevalent and gruesome. It was becoming quite the distraction.

"Mama?"

Before you could allow your thoughts to consume you, you focused your attention on your son and daughter, who were awaiting your reply with innocent eyes. Yeah, their virtue never ceased to amaze you. They were too good for this world– their empathy brought light to your soul that you believed had burnt out long ago– pride and joy.

You looked at your twins with an awaiting gaze as you watched their expressions turn into excitement at the realization they had caught your attention. You blinked once before being met with a piece of parchment littered with ink. It did not take long to realize that the twins had made you something in their short time away. Blinking up at the two, you gave them a fond grin before looking back down at the material. Upon viewing the parchment, you saw what you assumed to be an image of a bird, and next to the picture was a small note.

"To show gratitude to our dearest mother," you read aloud before holding the small gift to your chest, "Thank you, my loves, it is lovely."

The joy on their faces from the small compliment warmed your heart, referring to your previous statement of them being too good for this world. There were moments when you could not believe that the twins were a product of you and Sukuna– that was a reoccurring thought you had often. They were, without doubt, your most significant and last blessing as things around the temple had not been going as smoothly as they once had been the first few years you resided in it, and it was clearly starting to take a toll on everybody, including you.

"Mama, guess what we learned today?" Your son exclaimed excitedly, causing you to jump a little, not expecting the sudden outburst of enthusiasm.

"Was it penmanship? Because the both of you are getting better. Have you been practicing like I have told you to?" You joked, poking at their bellies, causing them to giggle.

"No, Mama, Father taught us about Jujutsu!" your daughter shouted enthusiastically.

"Hey, I wanted to tell her," the boy pouted.

"Sorry," your little girl apologized as she turned to look at her brother with an apologetic look.

The sibling tried to look upset, not wanting to give in quite yet, but when he turned around to look at his sister's guilty expression, he launched to hug her. If you had said it twice, you were to state it a third time– the world did not deserve this pair– you could not stress that enough.

"Did he now?" you breathed, your anxiety slowly creeping to the back of your neck like it did so often.

You were aware of the agreement you made with Sukuna all those years ago, and as of things so far, you both were holding up to your ends of the deal. The twins continued to be educated under your supervision and occasionally your attendant. Your little girl and boy were now at the ripe age of six, at which they would begin manifesting their cursed energy, so they were now taking lessons under their father's supervision– that notion made you apprehensive of your deal.

As you previously mentioned, things were not going as smoothly as they once were. Your village had become slightly non-compliant recently. The traditional wedding ceremonies had stopped a little over a year ago as families started refusing to hand over their kin to Sukuna. Despite the disrespect, Sukuna had no care as he had plenty of women to satisfy him; however, to say that he was taking the rebellion lightly would be a complete lie. Over the last few years, more guards were posted for precautionary reasons. Nothing major had happened yet, only the occasional distant and muffled voices chanting in protest.

With such circumstances, emotions were running high, and the crowd only seemed to get bigger as the days passed. You could admit that some days were worse than others, but it did not change the fact that these events could cause a catastrophic resolution at the hands of your husband. Viewing the situation, there was no question that Sukuna would be more occupied than usual; however, it was not amid meetings or trivial tasks but with his children instead.

Sukuna could hardly be viewed as a legitimate father but rather a mentor– a cruel one based on the round, tear-stained cheeks that would walk into the garden after they had spent their designated time with their dad. The only children who seemed the slightest bit content with their learnings were your son and daughter. Your twins had not been training for long, but they had outlasted most other kids regarding their spirits breaking. The first day your little boy and girl had left to meet with Sukuna, you could not help but feel nervous; however, when they came back, they were all giggles and smiles as they told you of their time with the man they called father. To say you were shocked was an understatement, but despite that astonishment, you were simply glad they left a good impression and walked out unscathed, their spirits still intact.

"So, have your studies with your father come to fruition yet?" You asked, not thinking of your wording as the question effortlessly slipped from your tongue.

"Come to fruition?" your son repeated, looking at his sister to see if she understood the meaning of your words.

Despite your children being clever, they were still young and naive, and that naivety could not help but make you laugh gently as you watched them whisper to each other as they tried to decipher the saying. They paused in their little hushed conversation at your breathy giggle, flustered as they looked at you, hoping you would grant them the knowledge they wanted.

"Mama, stop laughing. What does it mean?" the two whined in sync as they looked at you with awaiting eyes.

"Alright," you managed to say between your little fits of giggles, "It means to succeed in the progression of a goal. In this case, did you reach the intended goal of your lessons today?"

Your twins thought over your words for a minute before a look of realization washed over their faces. The two looked at one another to make sure the other understood, finding they were both on the same page before turning to your now-awaiting gaze. Smiles were once again plastered to their expressions of proudness.

"Not exactly," your daughter stated.

"What do you mean, 'not exactly'?" you questioned with a raised brow as you looked for an answer.

"Well...we do not have cursed energy yet, but Father said it was okay because we will..." Your son trailed off before looking at his sister for assistance, trying to remember the exact words Sukuna had used.

"Manifest!" your daughter shouted in revelation after a moment of thought.

"Oh yes, manifest! He said it was okay because 'we will manifest our cursed energy soon enough,'" your son finished, ignoring the distant whispers and tiny gasps that had suddenly emerged from the surrounding women and children.

"And you both will, I am sure of that– my intuition is never wrong," a deep voice resonated behind the twins.

You froze as you looked up to see Sukuna looking down at you, a proud grin on his face as he let the words settle. Your smile had long disappeared, your lips forming into a tight line as you met his gaze. His presence was not what had upset you as you had grown familiar with his company and unexpected visits, but rather the fact that you knew he was right.

"Father!" the twins shouted, bowing before going in to hug his legs, looking up at him with their innocent doe-like eyes that shone the color of your own hues, little flecks of what seemed to be crimson could also be seen if the light hit them just right.

Your heart stopped for a second as you watched your four-armed companion freeze on the spot at the sudden attention. Although you knew Sukuna could not lay a hand upon his children due to the contents of the pact you had made with him, it did not eliminate the uneasiness you had, worried at the thought he would grow to dislike them. The curse-user was not a man of tenderness nor liked to be presented with such fondness, especially from his offspring. There was no room for weaklings in his realm, in his brigade of suitable heirs.

You sat there, waiting for his reaction, chewing on your lip to the point it drew a small amount of blood. The man stood stiff, looking down at the two smaller beings that clung to his legs in a warm greeting before moving to bend down, causing your heart to spike in rhythm. The questions flooded your brain once more like they often did when it involved your significant other's actions. Sukuna took a set of his arms, placing one on each twin's back before meeting their eye level.

"Did I ever indulge either of you with the story of how I found out about your mother's conceiving of the both of you?" Sukuna asked, an arched brow with a devious smile as he switched eye contact from one twin to the other.

"No," your son replied honestly, curiosity gleaming in his eyes.

With that short answer, Sukuna looked at you, a mischievous glint in his eyes before redirecting his focus on his kids once more.

"I knew that your mother would one day bear the fruit of her fertility, but there was one particular evening where I could sense an odd presence. I immediately called upon your mother, and when I was met with her physique, I could tell she was with child. It would have been unnoticeable, but my perception is unlike the average man. Looking at your mother, I could see her stomach was softer and slightly rounder, her ankles somewhat swollen, and her breasts enlarged."

You held back the bile rising in your throat as your husband explained his side of the story you knew all too well, remembering the exact events that led up to that day. His vulgar description of the event sickened you to the core.

"Your mother was unaware of her condition, but I was. The moment I felt her stomach, I could feel the presence of not one but two essences in her womb. I remember the look on her face when I told her– pure shock."

Sukuna's words offended you because pure shock was an understatement. You were undeniably mortified that day, but you would never admit that to your children. For their happiness's sake, you were willing to push the bitter memories of your pregnancy aside. They did not need to know your previous disdain for them– you had not even met them yet. What they did not know could not hurt them.

"How could you sense both of our essences?" Your daughter questioned, tilting her head as Sukuna focused his attention on her.

"Always the curious one, aren't you?" Sukuna noted, a teasing grin forming on his face.

"Mama says it is always best to stay curious because you will never learn anything new if you are too stubborn or scared to keep asking questions."

"Did she now?" Sukuna's grin grew wider as he drew his attention back to you, "And what do you believe that is a lesson of?"

"Fearlessness?" your daughter answered hesitantly.

"Close, but not quite," Sukuna started, "She is teaching you confidence."

"Is that not the same thing, Father?" your daughter questioned again.

"Not exactly, my child," The curse-user paused, looking at you for a fleeting moment before continuing, "being fearless is alright in certain circumstances– something as frivolous as a mouse is something to lack fear of, but there are certain things you should fear. Fear, my child, is what keeps you alive; however, it can be crippling at times. It is the confidence to overcome those fears that lets you survive."

"Why have you come here, Sukuna?" you suddenly asked, becoming tired and uncomfortable with his lingering presence. You knew that the man had not come for idle conversation and to share invasive stories nor explain your teachings.

Had your twins been any older, they would have caught onto your passive aggression as you addressed their father, staring at him blankly as he drew his attention to you. You were aware of the line you were crossing, aware of the hostility you were presenting in the presence of your children, despite the obliviousness of it, but with high tension in the temple and his sudden visit, you felt you had every right to feel uneased. Sukuna's gaze turned from teasing mischief into a grave look.

"Well, Y/n, I wish not to sully our bonding with grave matters," the man spoke, returning your passive-aggressive tone, "we'll speak of it later."

"So why did you come, father?" Your boy asked, looking up at the tall man.

"Must I have a reason to visit my kin?" Sukuna teased.

"Well, we do not see you much outside of lessons," your daughter jumped in with her own comment.

"Observant as well, huh?" Sukuna huffed, pausing for a moment before speaking up once more, "I was wondering if you both would accompany me on a hunt?"

That question caused their little orbs to light up, their little heads turning to you, silently begging for your approval. Looking at their pleading eyes, you could not say no, giving a nod of approval. If they were cheerful before, they were exhilarated now. These kids were to be the death of you if a simple pair of puppy dog eyes could make you cave like this, and you were okay with that.

"Can Mama come too?"

Your blood ran cold at the mention of your name. There was no particular reason to be troubled, but at this point, it was a habit for these tense feelings to rise whenever your name was mentioned. So, as you looked at your supposed significant other, you could feel yourself about to explain how you had other activities to attend to.

"I do not see why not."

Now, that was unexpected.

The words you were going to speak paused in your throat, swallowing them down when your little boy and girl rushed up to you after hearing Sukuna's approval, hugging you as they tugged on your hands to stand. What was he playing at? Despite the inquiry of his intentions, you had to push it aside as you saw the thrilled look on your children's faces–they most likely wanted to show off what they had learned while spending time with their father. They always returned with smiles of pride after spending time with their dad. You would give up your life to see them smile at you like that for as long as you lived, so you followed them as they walked beside Sukuna despite your own apprehension.

Time slowly passed as you trekked quietly through the nearby woods, watching Sukuna's movement as he led the three of you through the brush, pausing when something caught his eye. It took only a moment for a bow to appear in his hand, but when you had expected him to use it, he motioned over to your son, giving the child the weapon. Every motherly instinct told you to confiscate the bow, but you quickly reminded yourself of your pact, both in regards that Sukuna was bound to protect your children from harm and that you had accepted he could use any training methods he deemed necessary– this being one of them.

Sukuna was crouched the lowest he could get, arms hovering over your boy's form, guiding his son while speaking in a low voice as the two focused on the prey ahead. Looking into the small clearing, you could see a few grazing rabbits, clueless and defenseless to the threat before them, nibbling on the dewy grass. The bow's snap and the sight of an impaled rabbit caused you to return from your light daze, turning over to see your son smiling in excitement.

"Did you see that, Mama? I did it!" the boy beamed, maintaining a hushed voice.

You gave your son a warm smile, nodding in reassurance before watching him switch places with your daughter. The rabbits that previously remained in the clearing had run off, but one straggler emerged from the bushes, unaware of what had occurred, clueless about its impaled companion. In a mere few moments, the creature suffered the same fate as the previous one, bringing joy to your little girl. She turned to you with the same smile as her brother's– it frightened you.

You had no doubt that you loved your children for who they were. You loved their innocence, passion, and joyful nature, but a realization had dawned upon you in these moments– one that made your heart drop to your stomach.

"Mama, you try!" your daughter called out, grabbing your hand as she led you toward a better spot to shoot from, that spot closer to Sukuna.

Their reason for upbringing would be to take their father's place, to be his heir, and Sukuna was not giving that role to a charitable and naive son or daughter. Things seemed pleasant for now, and your children might keep their nature through adulthood, but one thing was for sure. Whether they stayed that way or not, they would feel justified in their actions– believe what they were doing was good because that is what their father was teaching them, and you were enabling it.

"Darling, I'm not sure that it would be wise for me-"

"I think it is a marvelous idea," Sukuna interrupted, standing from his crouched position and grabbing your waist.

You felt the man's hands slither up your body, messing with the material of your clothing before touching your flesh. Your skin burned unpleasantly as his hands settled, a faux attempt to adjust your form when you were capable; however, with your twins present, you would not dare cause a stir. Looking at the clearing, there was nothing seemingly there as all the critters that previously inhabited it ran off.

"There's nothing for me to target, so maybe we should end this," you suggested, trying to excuse yourself from this activity, keeping a low tone.

"If nothing is there, why do you whisper, Little Flower?" Sukuna responded in a hushed voice, feeling his smirk form as his face rested against your cheek.

Before you could respond, the sound of fluttering was heard. Without thought, you lifted the bow's angle, shooting the arrow into the air– a thud sounded shortly after as whatever you had shot hit the ground. Looking down, you could see a bird skewered with an arrow, blood pooling from its limp body and staining the grass surrounding it.

"Mama, you did it!" the twins exclaimed, thrilled you had participated.

Their sounds of excitement were drowned out by the ringing of your ears as your gaze lingered on the deceased animal. What had you done? Yes, you had viewed death without so much as a flinch, but you were not the one with blood on your hands. You were unaware you could perform such an action– you had never held a weapon before, only a mere kitchen knife.

It disturbed you.

How did you kill the helpless creature so instinctively? So effortlessly? The worst part is...

It felt good.

The ringing eventually subsided as the bow settled to your side, turning your head toward the two-faced man you called 'husband' and handed it to him. Thankfully, Sukuna took the item with no smug remark or wicked grin, giving you one of his infamous blank looks before moving his gaze toward the kids, motioning for them in the direction of the temple, settling one of his hands at the small of your back as you all started the walk back.

Making the hike back, you settled on your earlier realization regarding your children. You would love them until the end of time, and you had no doubt about that; whether they were inherently good or bad– you would love them. But now, as you continued to think, all you could think about was the future. Where would you and your twins be standing in the years to come? What kind of life would you three indulge in if you were all to live? How many bodies would have to pile under your feet before you were guaranteed genuine safety for you and them?

For the years under the same roof as Sukuna, you had been focusing on your mother's words, the promise you had made to her.

"I promise I will survive– longer than anyone."

Your life had been summed up by that promise. So far, you have kept faithful to it because you have been surviving. From your wedding day to your pregnancy, to the many inspections you attended, all up until now, as you approached the temple, you have been surviving. You played all the right cards to get you here and made all the right sacrifices to keep your children alive– what more could you ask for? You were alive and breathing along with your children, and that is all that truly mattered, right?

No.

You may have been playing this game of survival and have been successful thus far, but there was one thing you had failed to do...

Live, you had failed to truly live.

You have played your part in your husband's sick game. You married him, gave him your purity, gave him children, and now you were done. You were more than aware of the pact you had made with your husband, but almost every contract had a loophole whether it could be seen or not.

"We are relocating."

Your heart rate accelerated as Sukuna bent down to whisper those words into your ear, the words taking a moment to register. Was it out of fear? Anger? Possibly both? No. It was excitement. You had given your word that you would never leave the temple unless it was under Sukuna's supervision and say-so. Unless he accompanied you outside those gates, you would remain here; however, you had never given your word to stay by his side.

You had given your word to stay at the temple.

The curse-user had just given you confirmation of your freedom without being aware he was doing so.

"May I ask why?" you dug, trying to keep your composure to not seem suspicious, as if he could tell what you were thinking if you had shown the slightest emotion.

"I have simply grown bored of this place, plus I have got what I needed from these people, and they all stand right here before me," Sukuna explained, the last part of his statement being clear that he was referring to you and the twins.

"Where would that leave my village?"

Now, that was a genuine question. You were not as concerned for your village but rather your family instead. The four-armed beast of a man was not known for leaving a town so quietly– you had heard plenty of notorious stories from survivors to prove that.

"What of it?"

"Will it remain in one piece, or will it be returned to the dirt?"

"That entirely depends on them, Little Flower."

The answer was vague– it was neither a confirmation nor a denial, but you could understand the meaning behind his words. For the sake of your family, you hoped that the village elders would not do anything stupid. You hoped they could shove their egos aside and let Sukuna leave the town with what minimal disturbance he was willing to make. Everything you had worked so hard to achieve would be ruined without their cooperation.

Approaching the temple, you could not help but feel the delight swell in your chest. After years of this torment, this unjustified punishment, you are finally going to be free. You have survived, and now you will live. The journey has been difficult, but now you will achieve the tranquility and normalcy you deserve. Your children will have the chance to live a standard and carefree life, unlike the competitive and tiring one they would achieve with their father.

It was finally over.

Arriving at the temple did not feel as bitter this time, watching your children running to your attendant as she greeted you all, giving a respectful bow before taking off with the children, most likely heading off to eat. It was quiet as you stood in the garden; everyone else had gone to fill their appetite– it was just you and Sukuna.

"What has you smiling so brightly, Little Flower?"

You had not noticed it, but you had plastered a broad, foolish grin onto your face. Usually, your partner catching this would have brought you anxiety as you thought of the right words, but you did not feel that way– quite the opposite. You were proud that he had noticed, allowing your smile to grow wider.

"I feel like a burden has been lifted off my shoulders, and I cannot wait to leave this place."

"I am glad I could bring such relieving news and bring a smile to your face," Sukuna responded, smiling down at you before taking your chin between his fingers and bending down, "Once you put the children to sleep, come seek me out as we have much more to discuss."

You could only smile stupidly, nodding and allowing Sukuna to kiss you before heading to your children. You did not care what the two-faced monster had to share with you, but you would indulge him because this would be the last time you would ever have to.

You were free.

"Oh, hello, Y/n-sama! We were just finishing our meals. Should I fix you something as well?" your attendant offered, keeping a light-hearted tone.

The young woman had grown more confident with you over the years. The two of you had grown quite close after the birth of your children– she was the only person you wholeheartedly trusted with your kids. Maybe you would take her with you in your escape; she was far too good to serve ungrateful and bitter women.

"No, thank you, I am not that hungry; however, I have grown rather tired, meaning it is time for bed."

"Awwwwww," your twins whined in unison, looking at your attendant with puppy dog eyes, hoping she could convince you, only to receive a shake of her head.

The twins stood begrudgingly, approaching your awaiting stance, giving you the same desperate eyes. You gave your own silent response as you offered a warm smile and a quick shake of your head before having them follow you down the halls. In any other scenario, you would have given in, but things were different now. Your children needed to be well-rested for the upcoming events. You were going to give them the life they deserved.

Arriving at their sleep quarters, you slid the door open, allowing the twins in first before following. Before closing the door, you took a peek out into the hallway to make sure no one was approaching. Once you deduced nobody was coming, you slowly and quietly slid the door shut, quick to approach your kids' bedside.

"Mama, do we have to go to bed?" your daughter whined.

"Yeah, do we really have to?" your son followed.

You could not help but lightly chuckle at their resistance to sleep. Your heart filled with warmth as you remembered sharing a similar moment with your mother. There were many occasions they reminded you of yourself, and you could not wait to see more of those similarities manifest when you left this temple. You could not wait to give them a regular and well-deserved life.

"Yes, you both have to rest. You two need to preserve your energy for the days to come."

That statement piqued their interest, their faces perking up with intrigue.

"What is to come, Mama?" the twins sounded in unison like they did so often in these moments. Sometimes, it was almost as if they shared the same mind.

"Well, soon enough, you will get to meet your grandparents," you whispered, "your cousins, aunts, and uncles, all from Mama's side of the family."

"Really?!" the two shouted, settling down when you gestured for them to lower their voices.

"Yes, but do not tell your father, it is..." you trailed, picking your words carefully, "a surprise visit just for the three of us, and I do not want him to feel left out."

There was no doubt that you despised Sukuna in every sense of the word, but you did not wish for your children to hate him. Believe it or not, you wanted your twins to paint a good picture of their father, and whether that picture remained clean was up to Sukuna himself– you would not tarnish his name for him.

"Okay, Mama, we promise we will not tell," your son spoke for the two of them, his sibling nodding in turn as she motioned to seal her lips.

You smiled, whispering a small thank you before kissing the top of their foreheads and letting them rest. You stood quietly, blowing out the candles illuminating the room before leaving. Once you stepped foot into the hallway, you were startled to see a guard, a familiar one at that, though he had clearly aged with time.

"Y/n-sama, I have been instructed to take you to your sleeping chambers," the male spoke before swiftly turning on his heel to lead you to your room.

The man's voice was cold and almost distant as he spoke to you, but his voice was familiar. You were acquainted with most of the staff within the temple, but you could not remember where you had met him in particular, though he seemed familiar and significant. Your face contorted as your mind pondered, trying to recognize his face in your personal timeline, but nothing came to mind.

"Your wedding night," the guard spoke suddenly, noticing your expression of thought, "I held and guarded the door during your wedding night."

You thought back to your wedding day, and it suddenly hit you. The guard was the same one Sukuna had forced to watch the consummation of your marriage. You quickly grew flustered at the memory, clearing your throat before speaking.

"I recall now," you responded, your voice barely above a whisper.

"Are you happy, Y/n-sama?" another unshakable tone as he questioned you.

Why was he asking this?

"Yes, I'm happy."

You did not know what this man was playing at, but you did not want to fall into any traps, so you gave the same safe answer you had given on the many occasions this question had been presented to you.

"Even though you have suffered all these years, bearing and raising his offspring?"

"Excuse me?" you grimaced at the guard's words.

"Nothing, I am sorry, I have overstepped my boundaries. I will leave you now," the man uttered, leaving you at the doorway to your sleeping quarters.

You narrowed your eyes, staring as the male's figure grew smaller in the distance. What did he gain from that interaction? No matter– it was no longer your problem to deal with. Collecting yourself, you entered the room and immediately faced Sukuna.

"Come and close the door. We must speak of these urgent matters in private," Sukuna muttered as he blankly stared at the wall in front of him.

You did not question the man and slid the door closed, approaching him as he turned to you. Before you could speak, Sukuna placed a pair of hands on your shoulders, looking into your eyes. His gaze held no emotion you could directly name, but you could sense an urgency in his tone as he spoke to you.

"We leave tonight. The others have been informed and are gathering their belongings– I advise you to do the same."

"What?! Now?! Sukuna, what is going on that you are not telling anyone?" you urged, staring at him with wide eyes.

"Now is no time to be questioning me, Y/n. Hurry, we are leaving shortly."

"No."

The word slipped out without thought. You did not care when you left because your plans would not change, but your partner was acting strangely, and you could not help but be curious as to why. The curiosity is what led you to stand there motionless as your husband stared you down.

"Stubborn as always, I see," the curse-user muttered, "Fine, you want to know, huh? We made a pact, and I'm upholding the bargain. You told me to protect those children, right? Well, for their interest, we are leaving, so be grateful."

You stood there silently, looking into Sukuna's unwavering gaze.

"What is going on?" you repeated the question.

"Your village plans to lay siege, and we are leaving to not get caught in the firing radius."

That explained the tension and whispers throughout the temple. That explained the extra protection. Everything now made sense, and you could not help the feeling of something rising up your throat.

Laughter.

You laughed uncontrollably, trying to cover your mouth to muffle the outburst, but to no avail. Nothing about the situation was logically funny, but you could not control yourself.

"After years of torment, they only now decide to lay siege?" you cackled, "And the best part is that Ryomen Sukuna is fleeing with his tail between his legs."

You should have seen what was to come next when you made that last statement. Your hair was tugged back, forcing you to look up at the man you had insulted. Your laugh quickly subsided, and you swallowed the lump in your throat as you stared into his orbs. You had crossed a line this time, but for once, you were not scared of the intimidation; however, what had shocked you was Sukuna smashing his lips against yours.

"I am the most feared man in Japan– I have no reason to be scared, at least for myself. I am doing this for us and our creation because I love you, Little Flower."

"You do not love me. You love what I can do for you, Sukuna."

"I see where our children have gotten their observance," Sukuna joked, "But you are not entirely wrong. However, that does not change the fact we are leaving right here and now so collec-"

"AHHHHHHHHHHH"

The deformed man paused mid-sentence at the high-pitched scream, storming out of the room to see the commotion. You wasted no time in following him, walking down the hall before being met with the stench of blood. Had one of the pregnant wives gone into labor? Was someone injured? Or was...

Before you could finish that last thought, you were met with the sight of a lifeless body surrounded by its own red fluid. It was disturbingly familiar, and that was because it was the body of the guard that had escorted you earlier. You were shocked at his mangled state, his face just barely beyond recognition, but before you could allow the shock to settle in, another sound of screams was heard in the opposite direction.

Without thought, you bolted in the direction the screams came from. You flew past those blank walls faster than you knew you were capable of before landing at the sight of another body surrounded by women. It was your attendant, her face frozen in fear, her body almost in the same state as the previous one. This death hit you harder than the earlier one as you covered your mouth, keeping the bile from rising up your throat.