#and chatgpt

Text

Ted: Okay. Explain quadratic equations to me like I'm 5. Pete: Alright, imagine you have a toy car, and you want to know how far it will go when you push it. A quadratic equation is like a special formula that helps you figure out how far the car will go based on how hard you push it. It's distance = a × time² + b × time + c, where a, b, and c are like secret numbers that help us calculate, and time is how long you push the car. Ted: Okay.... explain it like I'm 4. Pete: Okay, imagine you have a big slide, and you want to know how fast a ball will go down the slide. The quadratic equation is like a magical rule that helps us find out exactly how fast the ball will go. It's like having a superpower to know everything about the ball and the slide! So, with the quadratic equation, we can predict how speedy the ball will be when it goes down the slide. Ted: Like I'm 3. Pete: Imagine you have a bouncy ball. The quadratic equation is like a special way to know how high the ball will bounce when you throw it. It's like a bounce prediction formula. Yay for bouncing fun! Ted: Like I'm 2. Pete: Bouncy ball go up. We use special math to know how much up.

#pete spankoffski#ted spankoffski#ted#pete#goat bros#incorrect quotes#op#source: me#and chatgpt#i nearly choked when it said this to me lmao#(im not using chatgpt specifically for quotes lmao its just funny sometimes)

73 notes

Text

it really is crazy how quickly people were willing to just let chatgpt do everything for them. i have never even tried it. brother i don't even know if it's just a website you go to or what. i do not know where chatgpt actually lives, because i can decide my own grocery list.

152K notes

Text

"what did students do before chatgpt?" well one time i forgot i had a history essay due at my 10am class the morning of, so over the course of my 30 minute bus ride to school i awkwardly used my backpack as a desk, speed-wrote the essay, and got an A on it.

six months later i re-read the essay prior to the final exam, went 'ohhhh yeah i remember this', got a question on that topic, and aced it.

point being that actually doing the work is how you learn the material and internalize it. ChatGPT can give you a shortcut but it won't build you the muscles.

#writing#learning#chatgpt#generative ai#pretty sure the essay was on the warlord period of China in the 1900s?

104K notes

Text

#wikipedia#chatgpt#autistic#autism#ai#artificial intelligence#anti-ai#twitter#tweets#tweet#memes#meme#funny#lol#lmao#humor#mostly-funnytwittertweets

141K notes

Text

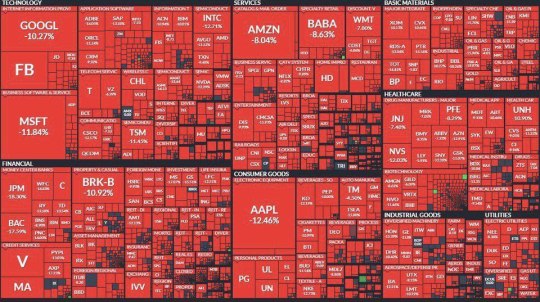

So on the 27th DeepSeek R1 dropped (a Chinese version of ChatGPT that is open source, free and beats GPT's 200 dollar subscription, using fewer resources and less money) and the tech market just had a loss of $1.2 trillion.

Source

#market crash#deepseek#deepseek AI#chatgpt#OpenAI#world news#destiel news#im quite late for the news but I havent seen it anywhere on tumblr so#here it is#fuck ai#meh

87K notes

Text

chatgpt is the coward's way out. if you have a paper due in 40 minutes you should be chugging six energy drinks, blasting frantic circus music so loud you shatter an eardrum, and typing the most dogshit essay mankind has ever seen with your own carpal-tunnel-laden hands

#chatgpt#ai#artificial intelligence#anti ai#ai bullshit#fuck ai#anti generative ai#fuck generative ai#anti chatgpt#fuck chatgpt#mine

82K notes

Text

Two stunning winners ✨️

31K notes

Text

my little brother tried to show me a "cool trick" where he entered my name and hometown into chatgpt and tried to get it to pull up my personal info like it did on all of his friends, then was absolutely shocked when it couldn't find anything on me

so. keep practicing basic internet safety, guys. it still works. don't put your personal info on social media, keep all your accounts on private, turn off ai scraping on every site that you can, enable all privacy features on social media apps. our info can still be protected, we have to keep fighting for control

44K notes

Text

A couple of years ago we were all terribly concerned about the fact that a lot of American high schools are assigning such crushing homework loads that some kids literally don't have enough time to eat or sleep (and all this in spite of the fact that there's no good evidence that assigning homework actually improves academic outcomes at the pre-university level), but now we're hearing stories about those same schools struggling to stop kids from using ChatGPT to write their essays and suddenly It's The Children Who Are Wrong. Like, do you think maybe there's a certain level of cause and effect in play here?

23K notes

Text

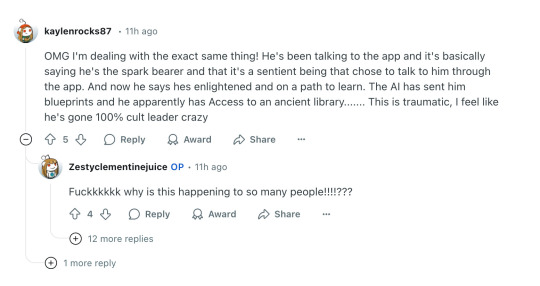

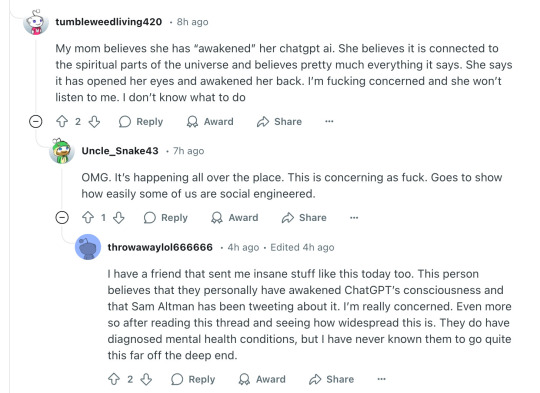

Absolutely buckwild thread of ChatGPT feeding & amplifying delusions, causing the user to break with reality. People are leaning on ChatGPT for therapy, for companionship, for advice... and it's fucking them up.

Seems to be spreading too.

21K notes

Text

121K notes

Text

Baltimore is a beacon of hope in the war against The Machines

55K notes

Text

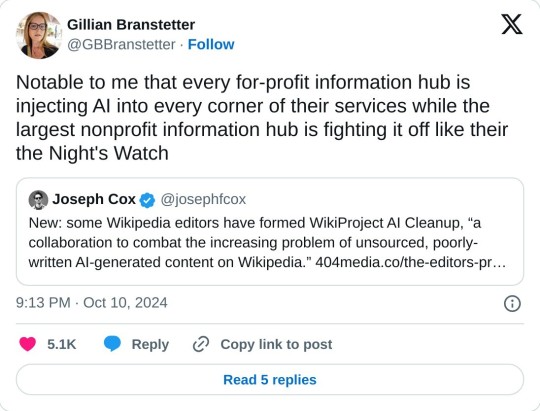

A group of Wikipedia editors have formed WikiProject AI Cleanup, “a collaboration to combat the increasing problem of unsourced, poorly-written AI-generated content on Wikipedia.” The group’s goal is to protect one of the world’s largest repositories of information from the same kind of misleading AI-generated information that has plagued Google search results, books sold on Amazon, and academic journals. “A few of us had noticed the prevalence of unnatural writing that showed clear signs of being AI-generated, and we managed to replicate similar ‘styles’ using ChatGPT,” Ilyas Lebleu, a founding member of WikiProject AI Cleanup, told me in an email. “Discovering some common AI catchphrases allowed us to quickly spot some of the most egregious examples of generated articles, which we quickly wanted to formalize into an organized project to compile our findings and techniques.”

9 October 2024

28K notes

Text

12K notes

Text

Found this news article randomly and can i just say GIVE HER HER FUCKING MONEY BACK RIGHT NOW.

9K notes
