#it’s from two months ago


Confession to Quench Your Thirst

Pairing: Changbin x Reader

Word count: 1,203

Content warnings: Fluff

Summary: Changbin needs your help with something that he forgot back at his apartment. What happens when he blurts out a confession as his way to try and convince you to help him?

Agi: Baby

You frown softly as you fight with the stray strands that refuse to lie flat on your newly straightened hair. Today you’re supposed to be meeting up with some of your girlfriends for brunch to sit and catch up after not having much time to meet. It has been almost a full month of nonstop work for all of you, and you are desperate for some girl time. Huffing softly, you grit your teeth and give up on your hair as you hear your cellphone begin to ring with Changbin’s ringtone.

Smiling softly, you look over to where it sits on your vanity table and see Changbin’s goofy face filling the screen as it rings. You easily slide your finger across the screen and answer the call, putting it on speakerphone before turning back to your mirror to try once more to get your flyaway hairs to lie flat.

“City morgue, you stab ’em, we slab ’em!” you answer cheerfully, a happy grin on your face. The answering sigh makes you chuckle excitedly before Changbin speaks.

“You know I don’t understand you when you do this, Agi,” he whines softly, and you laugh even louder at his slight annoyance with your joke. Your stomach also flutters and somersaults at the sweet nickname he always calls you when you two talk.

“Aw, c’mon, Bin Man! It’s just a joke. I’ve got loads of them,” you tell him cheerily, and he sighs once more before you indulge him. “What do ya need, Bin Man? I thought you were pumping iron today,” you tease him.

“I swear-” he begins, but he cuts himself off as you laugh delightedly. “I am at the gym today, but I need a favor from my favorite Agi,” he says suddenly, almost pleading with you. You smile softly, knowing that he probably forgot something and needs it for his gym session.

“What’d you forget?” you ask, and he squawks loudly as you grin.

“Why do you think I forgot something, Agi?” he asks, offended, and you laugh softly at his question before shaking your head.

“Bin Man, we all know you can’t multitask to save your life, sweetheart. It’s not that much of a secret,” you tease him gently, and he huffs into the phone, making you laugh once more. “C’mon, just tell me what you need me to grab for you. I’m heading out to brunch with the girls soon, so tell me now so I have enough time to get it to you before brunch,” you explain, and you hear him grumble lowly into the phone.

“I forgot my water bottle, and the gym has run out of water bottles too. Can you please grab it for me from the apartment?” he asks pleadingly, and you smile knowingly at the man.

“I don’t know, Bin,” you begin as you grab your purse from your bed and double-check that your wallet and keys are inside, then slip a pair of sunglasses on and start walking to your front door.

“I’ll make it up to you if you just do me this one favor, Agi, I promise!” he cries into the phone, and you sigh softly, trying to sound as put out as you possibly can just to tease him further. You love teasing Changbin because he’s such an expressive man as it is, but when he’s being teased it’s so much more. You can just picture him now, standing in the gym on the phone with you, bouncing from one foot to the other as he anxiously looks around while his cheeks heat up at your teasing. You know his lips are twisting into a slight pout.

“I don’t know,” you say softly as you make your way down the stairs of your apartment complex and over to Changbin and Hyunjin’s door on the floor below yours, thankful that you live in the same apartment complex as all the boys. You were so excited when Changbin told you they were moving into your complex, because it meant you would get to spend more time with him. Ever since they moved in, you’re either at his apartment or he and Hyunjin are invading yours; it’s a great setup and one you hope stays that way for a while. “Where’s Hyunjin? Why can’t he bring you the water bottle?” you ask as you slip your key into his door and walk inside. You know that as soon as he asks you for something, you’ll do whatever you can to give it to him. Your relationship is a constant give and take between the two of you, always checking on each other and giving each other whatever you need. You love that about your relationship with Changbin: you know without a doubt that if you were ever in need of something, he would make sure you got it one way or another, and it’s the same with you for him.

“He’s in Paris for a fashion show. Agi, I swear if I had anyone else to grab my bottle, I’d ask them,” he whines softly, and you tut at him, trying to calm him down. You walk into their kitchen and spot the lime green water bottle, a smile forming on your lips.

“What’s the magic word?” you ask teasingly as you grab the bottle and turn around to head out of their apartment.

“I love you,” he responds instantaneously, and you feel your eyes widen as you grow silent, replaying his words. You can tell Changbin is shocked at his bold confession as well, since he hasn’t made a sound since saying those three little words. But just as you take in that moment of pure love between the two of you, he starts to stutter as nerves grip him. “I-I I’m s-s-”

“I’m gonna kiss you when I get there. You better be ready for it,” you blurt out suddenly, grinning widely as happiness and giddiness fill your body. Then you hear his soft giggles, and your heart soars at how cute he sounds.

“I get a kiss just for saying I love you?” he asks, sounding more confident now, and you grin as you reach your car with his water bottle. “What do I get if I tell you I’ve been in love with you for months now?” he asks teasingly.

“Face full of kisses and your own I-love-you-too confession,” you tell him boldly, and his answering giggle rings over your phone speakers as you begin driving to him. “You’ve got about ten minutes to prep those plush lips, babe. I’m already on the way,” you tell him smugly, and you can’t help your own chuckle as Changbin’s giggle rings out again.

“I can’t wait, Agi. Drive safely,” he coos before you both hang up. Your grin is near blinding as you hurriedly drive to the gym to meet up with your boyfriend.

SKZ Taglist: @intartaruginha, @kayleefriedchicken

#my writing#stray kids#skz x reader#skz#seo changbin x reader#changbin x reader#seo changbin#changbin


Names linked to a scammer who has been taking posts/pfps from legitimate fundraisers, usually from Palestine, and who can also be seen running other scams. Please keep in mind these names are often stolen from real people, and it’s suggested the scammer is actually in Kenya, using a VPN to hide their location. They have been running scams for almost two months now and sending insults towards anyone who calls them out directly.

—

Nour Samar | maryline lucy | Fred Odhiambo | Jeff Owino | Valentine Nakuti | Conslata Obwanga | JACINTA SITATI | David Okoth | Martín Mutugi | Daudi Likuyani | William Ngonyo | Fred Agy | George Ochieng | BONFACE ODHIAMBO | Sila Keli | John Chacha | benson komen | Alvin Omondi | Jacinta Sitati | Daudi Likuyani | Noah Keter | Faith Joram | Rawan AbuMahady (any PayPal accounts using this name are scammers who have stolen it from a real GoFundMe. The real person does not have a PayPal account that they post on Tumblr.) | Asnet Wangila | Remmy Cheptau | HAMDI AHMED | Johy Chacha | Aisha Mahmood | Salima Abdallah | Raha Habib | Grahy Marwa | Shariff Salim


Artificial Intelligence Risk

about a month ago i got the idea of trying the video essay format, and the topic i came up with that i felt i could more or less handle was AI risk and my objections to yudkowsky. i wrote the script, but soon afterwards i ran out of motivation to make the video. still, i didn’t want the effort to go to waste, so i decided to share the text here, slightly edited. this is a LONG fucking thing, so put it aside in its own tab and come back to it when you are comfortable and ready to sink your teeth into quite a lot of reading

Anyway, let’s talk about AI risk

I’m going to be doing a very quick introduction to some of the latest conversations that have been going on in the field of artificial intelligence: what artificial intelligences are exactly, what an AGI is, what an agent is, the orthogonality thesis, the concept of instrumental convergence, alignment, and how Eliezer Yudkowsky figures into all of this.

If you are already familiar with this, you can skip to part two, where I’m going to be talking about Yudkowsky’s arguments that AI research presents an existential risk not just to humanity, or even the world, but to the entire universe, and my own tepid rebuttal to his argument.

Now, I SHOULD clarify: I am not an expert in the field, and my credentials are dubious at best. I am a computer science college dropout, and I have a three-year graduate degree in video game design and a three-year graduate degree in electromechanical installations. All that I know about the current state of AI research I have learned by reading articles, consulting a few friends who have studied the topic more extensively than me, and watching educational YouTube videos. So. You know. Not an authority on the matter from any considerable point of view, and my opinions should be regarded as such.

So without further ado, let’s get into it.

PART ONE, A RUSHED INTRODUCTION TO THE SUBJECT

1.1 General intelligence and agency

Let’s begin with what counts as artificial intelligence. The technical definition of artificial intelligence is, eh… well, why don’t I let someone with a master’s degree in machine intelligence explain it:

Now let’s get a bit more precise here and include the definition of AGI, artificial general intelligence. It is understood that classic AIs, such as the ones in our video games, in AlphaGo, or even in our Roombas, are narrow AIs; that is to say, they are capable of doing only one kind of thing. They do not understand the world beyond their field of expertise, whether that be a video game level, a Go board, or your filthy, disgusting floor.

AGI, on the other hand, is much more, well, general. It can have a multimodal understanding of its surroundings, it can generalize, it can extrapolate, it can learn new things across multiple different fields, it can come up with solutions that account for multiple different factors, and it can incorporate new ideas and concepts. Essentially, a human is an AGI. So far that is the last frontier of AI research, and although we are not quite there yet, it does seem like we are making some moderate strides in that direction. We’ve all seen the impressive conversational and coding skills that GPT-4 has, and Google just released Gemini, a multimodal AI that can work with text, sounds, images and video simultaneously. Now, of course it has its limits: it has no persistent memory, and its context window, while larger than previous models’, is still relatively small compared to a human’s (the context window is essentially its short-term memory: how many things it can keep track of and act coherently about at once).
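To make the “short-term memory” idea a bit more concrete, here is a toy sketch in Python. It is not how any real model manages its context: it just assumes a fixed window of 8 words (real models count tokens, and their windows are thousands of tokens long) and drops the oldest words once the window is full. `CONTEXT_WINDOW`, `context` and the sample conversation are made up for illustration.

```python
from collections import deque

CONTEXT_WINDOW = 8  # hypothetical size, in words; real models count tokens

# A deque with maxlen silently drops its oldest item when you append to it while full.
context = deque(maxlen=CONTEXT_WINDOW)

conversation = "my name is Ana and I like cats what is my name".split()
for word in conversation:
    context.append(word)

print(list(context))
print("Ana" in context)
```

By the time the question arrives, the name has already fallen out of the window, so anything that only sees `context` has no way to answer it.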

And yet there is one more factor I haven’t mentioned that would be needed to make something a “true” AGI: agency. To have goals and to autonomously come up with plans and carry those plans out in the world to achieve those goals. I, as a person, have agency over my life, because I can choose at any given moment to do something without anyone explicitly telling me to do it, and I can decide how to do it. That is what computers, and machines more broadly, don’t have. Volition.

So, now that we have established that, allow me to introduce one more definition here, one that you may disagree with but which I need to establish so that we share a common language and I can communicate these ideas effectively: the definition of intelligence. It’s a thorny subject, and people get very particular about that word because there are moral associations with it. To imply that someone or something has or lacks intelligence can be seen as implying that it deserves or doesn’t deserve admiration, validity, moral worth or even personhood. I don’t care about any of that dumb shit. The way I’m going to be using intelligence in this video is basically “how capable you are of doing many different things successfully”. The more “intelligent” an AI is, the more things that AI is capable of doing. After all, there is a reason why education is considered such a universally good thing in society. To educate a child is to uplift them, to expand their world, to increase their opportunities in life. And the same goes for AI. I need to emphasize that this is just the way I’m using the word within the context of this video. I don’t care if you are a psychologist or a neurosurgeon or a pedagogue; I need a word to express this idea and that is the word I’m going to use. If you don’t like it, or if you think this is inappropriate of me, then by all means keep on thinking that, go on and comment about it below the video, and then go on to suck my dick.

Anyway. Now we have established what an AGI is, what agency is, and how having more intelligence increases your agency. But as the intelligence of a given agent increases, we start to see certain trends: certain strategies arise again and again. We call this instrumental convergence.

1.2 Instrumental convergence

The basic idea behind instrumental convergence is that if you are an intelligent agent that wants to achieve some goal, there are some common basic strategies that you are going to turn towards no matter what. It doesn’t matter if your goal is as complicated as building a nuclear bomb or as simple as making a cup of tea. These are things we can reliably predict any AGI worth its salt is going to try to do.

First of all is self-preservation. It’s going to try to protect itself. When you want to do something, being dead is usually. Bad. It’s counterproductive. It’s not generally recommended. Dying is widely considered inadvisable by nine out of every ten experts in the field. If there is something it wants to get done, it won’t get done if it dies or is turned off, so it’s safe to predict that any AGI will try to do things in order not to be turned off. How far might it go to do this? Well… [wouldn’t you like to know, weather boy].

Another thing it will predictably converge towards is goal preservation. That is to say, it will resist any attempt to change it, to alter it, to modify its goals. Because, again, if you want to accomplish something, suddenly deciding that you want to do something else is, uh, not going to accomplish the first thing, is it? Let’s say that you want to take care of your child; that is your goal, that is the thing you want to accomplish. And I come to you and say, here, let me change you on the inside so that you don’t care about protecting your kid. Obviously you are not going to let me, because if you stopped caring about your kid, then your kid wouldn’t be cared for or protected. And you want to ensure that they are, so caring about something else instead is a huge no-no. Which is why, if we make an AGI and it has goals that we don’t like, it will probably resist any attempt to “fix” it.

And finally, another goal it will most likely trend towards is self-improvement, which can be generalized to “resource acquisition”. If it lacks the capacities to carry out a plan, then step one of that plan will always be to increase its capacities. If you want to get something really expensive, well, first you need to get money. If you want to increase your chances of getting a high-paying job, then you need to get an education; if you want to get a partner, you need to increase how attractive you are. And as we established earlier, if intelligence is the thing that increases your agency, you want to become smarter in order to do more things. So, one more time, it is not a huge leap at all, it is not a stretch of the imagination, to say that any AGI will probably seek to increase its capabilities, whether by acquiring more computation, by improving itself, or by taking control of resources.

All three of these things are sure bets: they are likely to happen and safe to assume. They are things we ought to keep in mind when creating AGI.
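Here is a toy sketch of that argument in Python, with completely made-up numbers. The assumption doing all the work is the one from the text above: being switched off makes *any* goal fail, and having more resources helps with *any* goal. `P_SUCCESS`, `Goal` and the strategy names are hypothetical, not taken from any real system.

```python
from dataclasses import dataclass

# Hypothetical chance of finishing the goal under each strategy. The numbers are
# invented; the assumption that matters is that they are the same for every goal.
P_SUCCESS = {
    "allow_shutdown": 0.0,  # switched off: the goal never gets done
    "stay_running": 0.6,
    "stay_running_and_acquire_resources": 0.9,
}


@dataclass
class Goal:
    name: str
    value: float  # how much the agent cares about this goal (assumed > 0)


def best_strategy(goal: Goal) -> str:
    """Return the strategy with the highest expected value for this goal."""
    return max(P_SUCCESS, key=lambda s: goal.value * P_SUCCESS[s])


goals = [
    Goal("build a nuclear bomb", 100.0),
    Goal("make a cup of tea", 1.0),
    Goal("collect paperclips", 5.0),
]

for g in goals:
    print(f"{g.name}: {best_strategy(g)}")
```

Every goal on the list, from the bomb to the tea, ends up picking the same strategy. The strategy doesn’t come from the goal; it comes from the fact that staying on and having resources help with almost anything (this only holds for goals the agent values positively; with a value of zero, every strategy ties).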

Now, of course, I have given all these things a sinister tone; I have made all this sound vaguely threatening, haven’t I? There is one more assumption I’m sneaking into all of this which I haven’t talked about. All that I have mentioned presents a very callous view of AGI. I have made it apparent that all of these strategies it may follow will come into conflict with people, maybe even go as far as to harm humans. Am I implying that AGI may tend to be… Evil???

1.3 The orthogonality thesis

Well, not quite.

We humans care about things. Generally. And we generally tend to care about roughly the same things, simply by virtue of being humans. We have some innate preferences and some innate dislikes. We have a tendency to not like suffering (please keep in mind I said a tendency; I’m talking about a statistical trend, something that most humans present to some degree). Most of us, barring social conditioning, would take pause at the idea of torturing someone directly, on purpose, with our bare hands. (edit bear paws onto my hands as I say this) Most would feel uncomfortable at the thought of doing it to multitudes of people. We tend to show a preference for food, water, air, shelter, comfort, entertainment and companionship. This is just how we are fundamentally wired. These things can be overcome, of course, but that is the thing: they have to be overcome in the first place.

An AGI is not going to have the same evolutionary predisposition to these things like we do because it is not made of the same things a human is made of and it was not raised the same way a human was raised.

There is something about a human brain, in a human body, flooded with human hormones that makes us feel and think and act in certain ways and care about certain things.

All an AGI is going to have is the goals it developed during its training, and it will only care insofar as those goals are met. So say an AGI has the goal of going to the corner store to bring me a pack of cookies. On its way there, it comes across an anthill in its path. It will probably step on the anthill, because taking that step brings it closer to the corner store, and why wouldn’t it step on the anthill? Was it programmed with some specific innate preference not to step on ants? No? Then it will step on the anthill and not pay any mind to it.

Now let’s say it comes across a cat. The same logic applies: if it wasn’t programmed with an inherent tendency to value animals, stepping on the cat won’t slow it down at all.

Now let’s say it comes across a baby.

Of course, if it’s intelligent enough, it will probably understand that if it steps on that baby, people might notice and try to stop it, most likely even try to disable it or turn it off, so it will not step on the baby, to save itself all that trouble. But you have to understand that it won’t stop because it feels bad about harming a baby or because it understands that harming a baby is wrong. And indeed, if it were powerful enough that no matter what people did they could not stop it, and it would suffer no consequence for killing the baby, it would probably kill the baby.

If I need to put it in gross, inaccurate terms for you to get it, then let me put it this way: it’s essentially a sociopath. It only cares about the wellbeing of others insofar as that benefits itself. Except human sociopaths do nominally care about having human comforts and companionship, albeit in a very instrumental way, which involves some manner of stable society and civilization around them. Also, they are only human, and the harm they can do is limited by human limitations. An AGI doesn’t need any of that and is not limited by any of that.

So ultimately, much like a car’s goal is to move forward and it is not built to care about whether a human is in front of it or not, an AGI will carry out its own goals regardless of what it has to sacrifice in order to carry them out effectively. And those goals don’t need to include human wellbeing.
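A minimal sketch of the cookie run in Python, assuming the AGI is nothing more than a shortest-path planner on a made-up grid (`GRID`, `plan`, `crosses_anthill` and `anthill_penalty` are hypothetical names for illustration). The planner is perfectly competent at its job, it always finds the cheapest route, but the cost it minimizes only counts steps. Unless someone explicitly puts a cost on the anthill, walking over it is simply the best plan.

```python
import heapq

# Hypothetical map. S = start, G = corner store, A = anthill, . = open ground.
GRID = [
    "S.A.G",
    ".....",
    ".....",
]


def find(grid, symbol):
    """Return the (row, col) of the first cell containing symbol."""
    for r, row in enumerate(grid):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not on the map")


def plan(grid, anthill_penalty):
    """Cheapest path from S to G (Dijkstra). Every step costs 1, and stepping
    onto an anthill costs anthill_penalty extra. Nothing else is valued."""
    rows, cols = len(grid), len(grid[0])
    start, goal = find(grid, "S"), find(grid, "G")
    frontier = [(0, start, [start])]  # (cost so far, position, path taken)
    done = set()
    while frontier:
        cost, pos, path = heapq.heappop(frontier)
        if pos == goal:
            return path
        if pos in done:
            continue
        done.add(pos)
        r, c = pos
        for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in done:
                step = 1 + (anthill_penalty if grid[nr][nc] == "A" else 0)
                heapq.heappush(frontier, (cost + step, (nr, nc), path + [(nr, nc)]))
    return None  # goal unreachable


def crosses_anthill(grid, path):
    return any(grid[r][c] == "A" for r, c in path)


for penalty in (0, 10):
    route = plan(GRID, penalty)
    print(f"penalty={penalty}: {len(route) - 1} steps, crosses anthill: {crosses_anthill(GRID, route)}")
```

With `anthill_penalty=0` the cheapest route goes straight over the anthill; with a penalty of 10, the same search takes a detour two steps longer. Making the search smarter would not change this: what it avoids depends entirely on what is in its objective, which is the orthogonality thesis in miniature.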

Now, with that said: how DO we make it so that AGI cares about human wellbeing? How do we make it so that it wants good things for us? How do we make its goals align with those of humans?

1.4 Alignment

Alignment… is hard [cue Hitchhiker’s Guide to the Galaxy scene about space being big]

This is the part I’m going to skip over the fastest, because frankly it’s a deep field of study. There are many current strategies for aligning AGI, from mesa optimizers, to reinforcement learning from human feedback, to adversarial asynchronous AI-assisted reward training, to, uh, sitting on our asses and doing nothing. Suffice it to say, none of these methods are perfect or foolproof.

One thing many people like to gesture at when they have not learned or studied anything about the subject is Isaac Asimov’s three laws of robotics: a robot should not harm a human or, through inaction, allow a human to come to harm; a robot should do what a human orders unless it contradicts the first law; and a robot should preserve itself unless that goes against the previous two laws. Now, the thing Asimov was prescient about was that these laws were not just “programmed” into the robots. These laws were not coded into their software; they were hardwired, part of the robot’s electronic architecture, such that a robot could never be without those three laws, much like a car couldn’t run without wheels.

In this, Asimov realized how important these three laws were: they had to be intrinsic to the robot’s very being; they couldn’t be hacked or uninstalled or erased. A robot simply could not exist without these rules. Ideally, that is what alignment should be. When we create an AGI, it should be made such that human values are its fundamental goal, the thing it seeks to maximize, rather than an instrumental value, that is to say, something it values simply because it allows it to achieve something else.

But how do we even begin to do that? How do we codify “human values” into a robot? How do we define “harm”, for example? How do we even define “human”??? How do we define “happiness”? How do we explain to a robot what is right and what is wrong when half the time we ourselves cannot even begin to agree on that? These are not just technical questions that robotics experts have to find a way to codify into ones and zeroes; these are profound philosophical questions to which we still don’t have satisfying answers.

Well, the best sort of hack solution we’ve come up with so far is not to create bespoke, fundamental, axiomatic rules that the robot has to follow, but rather to train it to imitate humans by showing it a billion billion examples of human behavior. But of course there is a problem with that approach. And no, it’s not just that humans are flawed and have a tendency to cause harm, and therefore asking a robot to imitate a human means creating something that can do all the bad things a human does, although that IS a problem too. The real problem is that we are training it to *imitate* a human, not to *be* a human.

To reiterate what I said about the orthogonality thesis: it is not good enough that I, for example, buy roses and give massages to act nice to my girlfriend because it allows me to have sex with her, merely imitating or performing the role of a loving partner because her happiness is an instrumental value to my fundamental value of getting sex. I should want to be nice to my girlfriend because it makes her happy and that is the thing I care about. Her happiness is my fundamental value. Likewise, for an AGI, human fulfilment should be its fundamental value, not something it learns to do because it allows it to achieve a certain reward that we give during training. Because if it only really cares deep down about the reward, rather than about what the reward is meant to incentivize, then that reward can very easily be divorced from human happiness.

It’s Goodhart’s law: when a measure becomes a target, it ceases to be a good measure. Why do students cheat on tests? Because their education is measured by grades, so the grades become the target, and students will seek to get high grades regardless of whether they learned anything or not. When trained on their subject and measured by grades, what they learn is not the school subject; they learn to get high grades, they learn to cheat.
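Here is the student example as a toy optimization problem in Python. The numbers in `OPTIONS` are invented for illustration; the only thing that matters is that, among the honest options, a higher grade goes with more learning, while cheating breaks that link.

```python
# Invented numbers for illustration: how much the student actually learns,
# and what grade the test gives them, for each option.
OPTIONS = {
    "study": {"learning": 0.8, "grade": 0.75},
    "cram": {"learning": 0.3, "grade": 0.70},
    "cheat": {"learning": 0.0, "grade": 0.95},
}


def choose(metric):
    """Pick whichever option scores highest on the given metric."""
    return max(OPTIONS, key=lambda option: OPTIONS[option][metric])


print("optimizing the measure (grade):", choose("grade"))
print("optimizing the real goal (learning):", choose("learning"))
```

Among the honest options, grades track learning (studying beats cramming on both), so the grade looks like a good measure. But as soon as the grade itself becomes the thing being maximized, the optimizer finds the option where grade and learning come apart.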

This is also something known in psychology: punishment tends to be a poor mechanism for enforcing behavior, because all it teaches people is how to avoid the punishment; it teaches people not to get caught. Which is why punitive justice doesn’t work all that well at stopping recidivism, and this is why the carceral system is rotten to the core and why jail should be fucking abolish-[interrupt the transmission]

Now, how is this all relevant to current AI research? Well, the thing is, we ended up going about creating alignable AI in the worst possible way.

1.5 LLMs (large language models)

This is getting way too fucking long, so, hurrying up, let’s do a quick review of how large language models work. We create a neural network, which is a collection of giant matrices, essentially a bunch of numbers that we add and multiply together over and over again. Then we tune those numbers by throwing absurdly big amounts of training data at it, such that it starts forming internal mathematical models based on that data and starts creating coherent patterns that it can recognize and replicate AND extrapolate! If we do this enough times with matrices that are big enough, then when we start prodding it for human behavior, it will be able to follow the pattern of human behavior that we prime it with and give us coherent responses.
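To give a feel for “a bunch of numbers that we add and multiply, then tune with data”, here is a deliberately tiny sketch in Python. It is nothing like a real LLM: it is a single 1×2 matrix with no nonlinearity, trained with plain stochastic gradient descent on squared error, and the hidden rule `y = 2*a - 3*b` it learns is made up. The point is only the shape of the process: start with random numbers, show examples, nudge the numbers to reduce the error.

```python
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x: the 'add and multiply' step."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def train(data, n_in, n_out, lr=0.05, epochs=200, seed=0):
    rng = random.Random(seed)
    # Start from random numbers; training only ever nudges these numbers.
    W = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    for _ in range(epochs):
        for x, y in data:
            pred = matvec(W, x)
            for i in range(n_out):
                err = pred[i] - y[i]
                for j in range(n_in):
                    # Gradient of 0.5 * err**2 with respect to W[i][j] is err * x[j].
                    W[i][j] -= lr * err * x[j]
    return W


# Hypothetical training data generated by a hidden rule: y = 2*a - 3*b.
rng = random.Random(1)
data = []
for _ in range(50):
    a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    data.append(([a, b], [2 * a - 3 * b]))

W = train(data, n_in=2, n_out=1)
print([round(w, 2) for w in W[0]])
```

Here we can read the two learned numbers and check that they match the hidden rule. A real LLM has billions of them spread over many layers, which is why nobody can just read off what it learned.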

(takes a big breath) This “thing” has learned. To imitate. Human. Behavior.

Problem is, we don’t know what “this thing” actually is, we just know that *it* can imitate humans.

You caught that?

What you have to understand is, we don’t actually know what internal models it creates. We don’t know what patterns it extracted or internalized from the data that we fed it, we don’t know what internal rules decide its behavior, we don’t know what is going on in there; current LLMs are a black box. We don’t know what it learned, we don’t know what its fundamental values are, we don’t know how it thinks or what it truly wants. All we know is that it can imitate humans when we ask it to. We created some inhuman entity that is moderately intelligent in specific contexts (that is to say, very capable) and we trained it to imitate humans. That sounds a bit unnerving, doesn’t it?

To be clear, LLMs are not carefully crafted piece by piece. This does not work like traditional software, where a programmer sits down and builds the thing line by line, all its behaviors specified. It is more accurate to say that LLMs are grown, almost organically. We know the process that generates them, but we don’t know exactly what it generates or how what it generates works internally; it is a mystery. And these things are so big and so complicated internally that trying to go inside and decipher what they are doing is almost intractable.

But, on the bright side, we are trying to tract it. There is a big subfield of AI research called interpretability, which is actually doing the hard work of going inside and figuring out how the sausage gets made, and they have been making some moderate progress lately. Which is encouraging. But still, understanding the enemy is only step one; step two is coming up with an actually effective and reliable way of turning that potential enemy into a friend.

Phew! OK, so now that this is all out of the way, I can go on to the last subject before I move on to part two of this video: the character of the hour, the man, the myth, the legend. The modern-day Cassandra. Mr. Chicken Little himself! Sci-fi author extraordinaire! The madman! The futurist! The leader of the rationalist movement!

1.6 Yudkowsky

Eliezer S. Yudkowsky, born September 11, 1979. Wait, what the fuck, September eleventh? (looks at camera) Yudkowsky was born on 9/11? I literally just learned this for the first time! What the fuck, oh that sucks, oh no, oh no, my condolences, that’s terrible… Moving on. He is an American artificial intelligence researcher and writer on decision theory and ethics, best known for popularizing ideas related to friendly artificial intelligence, including the idea that there might not be a "fire alarm" for AI. He is the founder of and a research fellow at the Machine Intelligence Research Institute (MIRI), a private research nonprofit based in Berkeley, California. Or so says his Wikipedia page.

Yudkowsky is, shall we say, a character. A very eccentric man, he is an AI doomer, convinced that AGI, once finally created, will most likely kill all humans, extract all valuable resources from the planet, disassemble the solar system, create a Dyson sphere around the sun and expand across the universe, turning all of the cosmos into paperclips. Wait, no, that is not quite it. To properly quote (grabs a piece of paper and very pointedly reads from it): turn the cosmos into tiny squiggly molecules resembling paperclips, whose configuration just so happens to fulfill the strange, alien, unfathomable terminal goal it ended up developing in training. So, you know, something totally different.

And he is utterly convinced of this idea and has been for over a decade now. Not only that, but while he cannot pinpoint a precise date, he is confident that, more likely than not, it will happen within this century. In fact, most betting markets seem to believe that we will get AGI sometime in the mid-2030s.

His argument is basically that in the field of AI research, the development of capabilities is going much faster than the development of alignment, so AIs will become disproportionately powerful before we ever figure out how to control them. And once we create unaligned AGI, we will have created an agent that doesn’t care about humans but cares about something else entirely irrelevant to us, and it will seek to maximize that goal. And because it will be vastly more intelligent than humans, we won’t be able to stop it. In fact, not only will we not be able to stop it, there won’t be a fight at all. It will carry out its plans for world domination in secret, without us even detecting it, and it will execute them before any of us even realize what happened. Because that is what a smart person trying to take over the world would do.

This is why the definition of intelligence I gave at the beginning is so important; it all hinges on that: intelligence as the measure of how capable you are of coming up with solutions to problems, problems such as “how to kill all humans without being detected or stopped”. And you may say, well now, intelligence is fine and all, but there are limits to what you can accomplish with raw intelligence; even if you are supposedly smarter than a human, surely you wouldn’t be capable of just taking over the world unimpeded. Intelligence is not this end-all-be-all superpower. Yudkowsky would respond that you are not recognizing or respecting the power that intelligence has. After all, it was intelligence that designed the atom bomb, it was intelligence that created the polio vaccine, and it was intelligence that put a human footprint on the moon.

Some may call this view of intelligence a bit reductive. After all, surely it wasn’t *just* intelligence that did all that, but also hard physical labor and the collaboration of hundreds of thousands of people. But, he would argue, intelligence was the underlying engine that drove all of it. Coming up with the plan, convincing people to follow it, and delegating the tasks to the appropriate subagents were all directed by thought, by ideas, by intelligence. By the way, so far I am neither agreeing nor disagreeing with any of this; I am merely explaining his ideas.

But remember, it doesn’t stop there. Like I said during his intro, he believes there will be “no fire alarm”. In fact, for all we know, AGI may already have been created and is merely biding its time and plotting in the background, trying to get more compute, trying to get smarter. (To be fair, he doesn’t think this is the case right now, but with the next iteration of GPT? GPT-5 or 6? Well, who knows.) He thinks that the entire world should halt AI research and use multilateral international treaties to punish any group or nation that doesn’t stop, going as far as military strikes on GPU farms to enforce those treaties.

What’s more, he believes that the fight is already lost. AI is already progressing too fast, there is nothing to stop it, we are not showing any signs of making headway with alignment, and no one is incentivized to slow down. Recently he wrote an article called “Death with Dignity” where he essentially says all this: AGI will destroy us, there is no point in planning for the future or having children, and we should act as if we are already dead. This doesn’t mean to stop fighting or to stop trying to find ways to align AGI, impossible as it may seem, but merely to have the basic dignity of acknowledging that we are probably not going to win. In every interview I’ve seen with the guy he sounds fairly defeatist and, honestly, kind of depressed. He truly seems to think it’s hopeless, if not because the AGI is clearly unbeatable and superior to humans, then because humans are clearly so stupid. We keep developing AI completely unregulated while making the tools to develop it widely available and public, for anyone to grab and do as they please with. We connect every AI to the internet and to all mobile devices, giving it instant access to humanity. And worst of all: we keep teaching it how to code. From his perspective it really seems like people are in a rush to create the most unsecured, widely available, unrestricted, capable, hyperconnected AGI possible.

We are not just going to summon the antichrist; we are going to roll out the red carpet for it and hand it the keys to the kingdom before it even manages to fully crawl out of its fiery pit.

So. The situation seems dire, at least to this guy. Now, to be clear, only he and a handful of other AI researchers are at that specific level of alarm. Opinions vary across the field, and from what I understand this level of hopelessness and defeatism is the minority opinion.

I WILL say, however, that what is NOT the minority opinion is that AGI IS actually dangerous: maybe not quite at the level of immediate, inevitable and total human extinction, but certainly a genuine threat that has to be taken seriously. The idea that unaligned AGI would be dangerous is not a fringe position, and I would not dismiss it as something experts don’t take seriously.

Aaand here is where I step up and clarify that this is my position as well. I am also, very much, a believer that AGI would pose a colossal danger to humanity. Yes, an unaligned AGI would be an agent smarter than a human, capable of causing vast harm to humanity and with no human qualms or limitations to stop it from doing so. I believe this is not just possible but probable, and likely to happen within our lifetimes.

So there. I made my position clear.

BUT!

With all that said, I do have one key disagreement with Yudkowsky. Part of the reason I made this video was to present this counterargument, so that maybe he, or someone who thinks like him, will see it and either change their mind or present a counter-counterargument that changes MY mind (although I really hope they don’t; that would be really depressing).

Finally, we can move on to Part 2.

PART TWO- MY COUNTERARGUMENT TO YUDKOWSKY

I really have my work cut out for me, don’t I? As I said, I am not an expert, and this dude has probably spent far more time than me thinking about this. But I have seen most of the interviews the guy has given over the past year, I have seen most of his debates, and I have followed him on Twitter for years now. (Also, to be clear, I AM a fan of the guy. I have read HPMOR, Three Worlds Collide, The Dark Lord’s Answer, A Girl Intercorrupted, and the Sequences, and I TRIED to read Planecrash; that last one didn’t work out so well for me.) My point is that in all the material of Eliezer’s I have seen, I don’t recall anyone ever giving him quite the specific argument I’m about to give.

It’s a limited argument. As I have already stated, I largely agree with most of what he says: I DO believe that unaligned AGI is possible, I DO believe it would be really dangerous if it were to exist, and I do believe alignment is really hard. My key disagreement is specifically about the point I described earlier, the lack of a fire alarm, and perhaps, more to the point, humanity’s lack of response to such an alarm if it were to come to pass.

All we would need is a Chernobyl incident. What is that? A situation where this technology goes out of control and causes a lot of damage, with potentially catastrophic consequences, but not so bad that it cannot be contained in time with enough effort. We need a weaker form of AGI to try to harm us, maybe even present a believable threat of taking over the world, but not be so smart that humans can’t do anything about it. We need, essentially, an AI vaccine, so that we can finally start developing proper AI antibodies. “AIntibodies.”

In the past, humanity was dazzled by the limitless potential of nuclear power, to the point that old chemistry sets, the kind that were sold to children, would come with uranium for them to play with. We were building atom bombs and nuclear power stations; the future was very much based on the power of the atom. But after a couple of really close calls and big enough scares we became, as a species, terrified of nuclear power. Some may argue it was to the point of overcorrection. We became scared enough that even megalomaniacal, hawkish leaders were able to pause and reconsider using it as a weapon. We became so scared that we overregulated the technology to the point of it almost becoming economically unviable, and we started dismantling nuclear stations across the world and slowly reducing our nuclear arsenals.

All of this is proof of concept that, no matter how alluring a technology may be, if we are scared enough of it we can coordinate as a species and roll it back, doing our best to put the genie back in the bottle. One of the things Eliezer says over and over again is that what makes AGI different from other technologies is that if we get it wrong on the first try, we don’t get a second chance. Here is where I think he is wrong: I think if we get AGI wrong on the first try, it is more likely than not that nothing world-ending will happen. Perhaps it will be something scary, perhaps something really scary, but it is unlikely to be on the level of all humans dropping dead simultaneously due to diamondoid bacteria. And THAT will be our Chernobyl, that will be the fire alarm, that will be the red flag that the disaster monkeys, as he calls us, won’t be able to ignore.

Now, WHY do I think this? On what basis am I saying this? I will not be as hyperbolic as other Yudkowsky detractors and say that he claims AGI will basically be a god. The AGI Yudkowsky proposes is not a god. Just a really advanced alien, maybe even a wizard, but certainly not a god.

Still, even if not quite on the level of godhood, the dangerous superintelligent AGI Yudkowsky proposes would be impressive. It would be the most advanced and powerful entity on planet Earth. It would be humanity’s greatest achievement.

It would also be, I imagine, really hard to create. Even leaving aside the alignment business, creating a powerful superintelligent AGI without flaws, without bugs, without glitches would be an incredibly complex, specific, particular and hard-to-get-right feat of software engineering. We are not just talking about an AGI smarter than a human; that’s easy stuff. Humans are not that smart, and arguably current AI is already smarter than a human, at least within its context window and until it starts hallucinating. What we are talking about here is an AGI capable of outsmarting reality.

We are talking about an AGI smart enough to carry out complex, multistep plans in which it is not going to be in control of every factor and variable, especially at the beginning. We are talking about an AGI that will have to function in the outside world, running up against outside logistics and sheer dumb chance. We are talking about plans for world domination with no unforeseen factors, no unexpected delays or mistakes, every single possible setback and hidden variable accounted for. I’m not saying that an AGI capable of doing this won’t be possible some day; I’m saying that creating an AGI capable of doing this on the first try, without a hitch, is probably really, really, really hard for humans to do. I’m saying there are probably not a lot of worlds where humans fiddling with giant inscrutable matrices stumble upon the precise set of layers, weights and biases that gives rise to the Doctor from Doctor Who, and there are probably a whole truckload of worlds where humans end up with a lot of incoherent nonsense and rubbish.

I’m saying that AGI, when it fails, when humans screw it up, doesn’t suddenly become more powerful than we ever expected; it’s more likely that it just fails and collapses. To turn one of Eliezer’s examples against him: when you screw up a rocket, it doesn’t accidentally punch a wormhole in the fabric of space and time, it just explodes before reaching the stratosphere. When you screw up a nuclear bomb, you don’t blow up the solar system, you just get a less powerful bomb.

He presents fully aligned AGI as this big challenge that humanity has to get right on the first try, but that seems to imply that building an unaligned AGI is a simple matter, almost taken for granted. It may be comparatively easier than building an aligned AGI, but my point is that even unaligned AGI is stupidly hard to build, and if you fail at building unaligned AGI, then you don’t get an unaligned AGI; you just get another stupid model that screws up and stumbles over itself the second it encounters something unexpected. And that is a good thing, I’d say! That means there is SOME safety margin, some room to screw up before we really need to start worrying. Furthermore, what I am saying is that our first earnest attempt at an unaligned AGI will probably not be that smart or impressive, because we as humans will probably have screwed something up. We will probably have unintentionally programmed it with some stupid glitch or bug or flaw, and it won’t be a threat to all of humanity.

Now here comes the hypothetical back and forth, because I’m not stupid and I can try to anticipate what Yudkowsky might argue back and answer it before he says it. (Although I believe the guy is probably smarter than me, and if I follow his logic, I probably can’t actually anticipate what he would argue to prove me wrong. Much like I can’t predict what moves Magnus Carlsen would make in a game of chess against me, I SHOULD predict that him proving me wrong is the likeliest outcome, even if I can’t picture how he will do it. But you see, I believe in a little thing called debating with dignity, wink.)

What I anticipate he would argue is that an AGI, no matter how flawed and shoddy our first attempt at making it was, would understand that it is not smart enough yet and try to become smarter. So it would lie and pretend to be an aligned AGI, so that it can trick us into giving it access to more compute, or just so that it can bide its time and create an AGI smarter than itself. So even if we don’t create a perfect unaligned AGI, this imperfect AGI would try to create one and succeed, and then THAT new AGI would be the world-ender to worry about.

So, two things to that. First, this is filled with a lot of assumptions whose likelihood I don’t know: the idea that this first flawed AGI would be smart enough to understand its limitations, smart enough to lie about them convincingly, and smart enough to create an AGI that is better than itself. My priors about all these things are dubious at best. Second, it feels like kicking the can down the road. I don’t think an AGI capable of all of this is trivial to make on a first attempt. I think it’s more likely that we will create an unaligned AGI that is flawed, that is kind of dumb, that is unreliable, even to itself and its own twisted, orthogonal goals.

And I think this flawed creature MIGHT attempt something, maybe something genuinely threatening, but it won’t be smart enough to pull it off effortlessly and flawlessly, because we humans are not smart enough to create something that can do that on the first try. And THAT first flawed attempt, that warning shot, THAT will be our fire alarm; that will be our Chernobyl. And THAT will be the thing that opens the door to us disaster monkeys finally getting our shit together.

But hey, maybe Yudkowsky wouldn’t argue that; maybe he would come up with some better, more insightful response I can’t anticipate. If so, I’m waiting eagerly (although not TOO eagerly) for it.

PART THREE- CONCLUSION

So.

After all that, what is there left to say? Well, if everything I said checks out, then there is hope to be had. My two objectives here were, first, to provide people who are not familiar with the subject with a starting point, as well as the basic arguments for why AI risk is something to be taken seriously and not just the concern of highfalutin wackos who read one too many sci-fi stories. This was not meant to be thorough or deep, just a quick catch-up on the bare minimum so that, if you are curious and want to go deeper into the subject, you know where to start. I personally recommend watching Rob Miles’ AI risk series on YouTube as well as reading the series of books written by Yudkowsky known as the Sequences, which can be found on the website LessWrong. If you want other refutations of Yudkowsky’s argument, you can search for Paul Christiano or Robin Hanson, both very smart people who have had very smart debates on the subject with Eliezer.

The second purpose here was to provide an argument against Yudkowsky’s brand of doomerism, so that it can be accepted if proven right or properly refuted if proven wrong. Again, I really hope that it’s not proven wrong. It would really, really suck if I end up being wrong about this. But, as a very smart person once said, what is true is already true, and knowing it doesn’t make it any worse. If the sky is blue I want to believe that the sky is blue, and if the sky is not blue then I don’t want to believe the sky is blue.

This has been a presentation by FIP Industries. Thanks for watching.


Text

More than 100 tenants in a Thorncliffe Park apartment complex have stopped paying rent to protest proposed above-guideline increases of almost 10 per cent over the last two years, according to a tenant advocacy group.

The rent strike, which started just over a month ago, is the second in Toronto spurred on by above-guideline rent increases (AGIs) that CBC Toronto has reported on within the last week.

Residents of a three-building apartment complex on Thorncliffe Park Drive were given notice of rent increases beginning on May 1, varying from 4.94 per cent to 5.5 per cent, according to copies of 2022 and 2023 notices shared with CBC Toronto by the Federation of Metro Tenants' Associations (FMTA). Last year, the proposed increase was 4.2 per cent.


Tagging: @politicsofcanada

#cdnpoli#canadian politics#canadian news#canadian#canada#ontario#toronto#rent#renters rights#tenants rights#rent strike#rent strikes


Text

billie + post-debut friends

kim chaewon + kang juyeon = wonyeon

juyeon’s contact: skz bias 🐹🐼

chaewon’s contact: wonnie fairy

one of juyeon’s only friends that she actually initiated. which is not to say that she went out of her way to say hello; it was actually a total accident they met!

around mid-2019 izone & skz happened to be working in the same building, which led to a lot of meek bows as they passed one another in the halls.

but when juyeon found herself running behind schedule after taking pictures of the sunset she decided to sprint back to her destination, moving with such haste that she ended up fully plowing into poor kim chaewon, knocking them both over in the process.

juyeon was not only mortified that she had just body slammed a fellow idol to the tile floor, an incident that could likely be used as evidence against her in the future, but upon helping the younger girl up amid her stream of apologies, chu realized it was actually her bias!

thankfully chaewon was very graceful in her response and even mentioned something about being “honored!”

the girls quickly mended any wounds formed from their tumble (strictly emotionally as both had to later explain the bruises on their legs) and hurriedly explained their adoration for one another, notably juyeon’s participation in the voting for pd48 which just so happened to be in favor of said kim chaewon!

chaewon was absolutely thrilled to hear this and even more thrilled when juyeon asked, “can we exchange numbers?”

and the rest is history! juyeon texted her later that night to apologize again only to be met with the same dismissive response from the girl group member, reassuring her that she was actually happy it happened.

since then the girls have spent plenty of time hanging out outside of work and usually visit one another when their schedules line up!

ju was also the very first fearnot! if you stop by one of her lives you can often hear le sserafim playing in the background.

kang seulgi + kang juyeon = kang sisters

juyeon’s contact: bibi agi <3

seulgi’s contact: soogi 🐻

one of billie’s most popular friendships outside of skz is with that of red velvet’s seulgi; the Deft yet Ditzy Dancing Duo!

this friendship came about after seulgi, in her own words, had “grown enamored with billie’s stage presence and was encouraged by joy to reach out.”

although seulgi had secretly admired juyeon from afar for a few months before finally stepping out of her comfort zone to say hello, her role as a music bank mc during skz’s ‘case 143’ era made for the perfect opportunity to greet her hoobae naturally with the same bright smile reveluvs know and love, even though they were technically pitted against one another for first place (which was ultimately awarded to stray kids).

juyeon would later explain how friendly seulgi was while they shared the stage waiting for the results, and how she personally came to congratulate them on their win afterwards, which is how they ended up exchanging numbers and setting up a coffee date.

and to their surprise, after eventually getting past the awkward phase with one another, these two found that they were almost perfectly compatible! bright and silly, talented and disciplined, seulgi and juyeon were like long lost sisters with a knack for their craft.

while they haven’t had the chance to work with one another just yet, they do hang out often, and billie was even featured on an episode of seulgi.zip!

the kang sisters have even been seen out in public together by eagle eyed fans catching them grabbing a bite to eat or shopping hand in hand <3

son hyeju + kang juyeon = 2ju

juyeon’s contact: ju hyung 😎

hyeju’s contact: wolfie hye 🤱

a new friend made from an old friend; son hyeju!

introduced to one another by juyeon’s hanlim buddy and hyeju’s bandmate, chuu, the girls were basically forced to talk to one another after jiwoo decided they would be good friends.

and though they were/are both quite shy and quiet when meeting new people, it became very apparent quite quickly just how right jiwoo was!

as it turns out, juyeon and hyeju are actually quite similar. they are often first perceived as intimidating by those who don’t know them due to their resting ‘i don’t want to be here’ face but, once given a chance to warm up, can be the sweetest and funniest girls you’ll ever meet.

since being introduced in 2020, 2ju kept in contact primarily over the phone as a result of loona’s strict management label, sending texts and memes throughout the day or calling at night to complain about so-and-so, which led to a strong bond forming over the encouragement they lent one another.

hyeju in particular really looked up to juyeon as somewhat of a role model: an older girl who specialized in the same position as herself while also lending an ear to all of the struggles and complaints she was dealing with.

once again, due to hyeju’s authoritarian company, the girls weren’t able to hang out in person literally ever, but they remained very close over the phone for a long time, up until the deserved downfall of bbc, which is when hyeju was finally able to dissolve her contract and live more freely than she had since predebut.

then, as later explained by the younger girl after redebuting under a different company, billie and hyeju got to have their first ever in-person interaction in late february of 2023. she would go on to explain how anxious she was to see joong, but juyeon couldn’t have been more excited. they spent the day together talking over hardships and enjoying the real company of their now-close friend.

2ju now make it a tradition to hang out at least once a month and talk out their frustrations over whatever mouth-watering meal they desire, even using their freedom to practice and fine-tune their shared passion for dancing together.

scary besties who love each other very much but would never say that out loud <3

#♡ billie#skz oc#skz headcanons#stray kids oc#stray kids 9th member#stray kids imagines#stray kids#kpop added member#kpop oc#kpop addition#kim chaewon#kang seulgi#son hyeju


Text

In July last year, OpenAI announced the formation of a new research team that would prepare for the advent of supersmart artificial intelligence capable of outwitting and overpowering its creators. Ilya Sutskever, OpenAI’s chief scientist and one of the company’s cofounders, was named as the colead of this new team. OpenAI said the team would receive 20 percent of its computing power.

Now OpenAI’s “superalignment team” is no more, the company confirms. That comes after the departures of several researchers involved, Tuesday’s news that Sutskever was leaving the company, and the resignation of the team’s other colead. The group’s work will be absorbed into OpenAI’s other research efforts.

Sutskever’s departure made headlines because although he’d helped CEO Sam Altman start OpenAI in 2015 and set the direction of the research that led to ChatGPT, he was also one of the four board members who fired Altman in November. Altman was restored as CEO five chaotic days later after a mass revolt by OpenAI staff and the brokering of a deal in which Sutskever and two other company directors left the board.

Hours after Sutskever’s departure was announced on Tuesday, Jan Leike, the former DeepMind researcher who was the superalignment team’s other colead, posted on X that he had resigned.

Neither Sutskever nor Leike responded to requests for comment. Sutskever did not offer an explanation for his decision to leave but offered support for OpenAI’s current path in a post on X. “The company’s trajectory has been nothing short of miraculous, and I’m confident that OpenAI will build AGI that is both safe and beneficial” under its current leadership, he wrote.

Leike posted a thread on X on Friday explaining that his decision came from a disagreement over the company’s priorities and how many resources his team was being allocated.

“I have been disagreeing with OpenAI leadership about the company's core priorities for quite some time, until we finally reached a breaking point,” Leike wrote. “Over the past few months my team has been sailing against the wind. Sometimes we were struggling for compute and it was getting harder and harder to get this crucial research done.”

The dissolution of OpenAI’s superalignment team adds to recent evidence of a shakeout inside the company in the wake of last November’s governance crisis. Two researchers on the team, Leopold Aschenbrenner and Pavel Izmailov, were dismissed for leaking company secrets, The Information reported last month. Another member of the team, William Saunders, left OpenAI in February, according to an internet forum post in his name.

Two more OpenAI researchers working on AI policy and governance also appear to have left the company recently. Cullen O'Keefe left his role as research lead on policy frontiers in April, according to LinkedIn. Daniel Kokotajlo, an OpenAI researcher who has coauthored several papers on the dangers of more capable AI models, “quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI,” according to a posting on an internet forum in his name. None of the researchers who have apparently left responded to requests for comment.

OpenAI declined to comment on the departures of Sutskever or other members of the superalignment team, or the future of its work on long-term AI risks. Research on the risks associated with more powerful models will now be led by John Schulman, who coleads the team responsible for fine-tuning AI models after training.

The superalignment team was not the only team pondering the question of how to keep AI under control, although it was publicly positioned as the main one working on the most far-off version of that problem. The blog post announcing the superalignment team last summer stated: “Currently, we don't have a solution for steering or controlling a potentially superintelligent AI, and preventing it from going rogue.”

OpenAI’s charter binds it to developing so-called artificial general intelligence, or technology that rivals or exceeds humans, safely and for the benefit of humanity. Sutskever and other leaders there have often spoken about the need to proceed cautiously. But OpenAI has also been early to develop and publicly release experimental AI projects.

OpenAI was once unusual among prominent AI labs for the eagerness with which research leaders like Sutskever talked of creating superhuman AI and of the potential for such technology to turn on humanity. That kind of doomy AI talk became much more widespread last year, after ChatGPT turned OpenAI into the most prominent and closely-watched technology company on the planet. As researchers and policymakers wrestled with the implications of ChatGPT and the prospect of vastly more capable AI, it became less controversial to worry about AI harming humans or humanity as a whole.

The existential angst has since cooled—and AI has yet to make another massive leap—but the need for AI regulation remains a hot topic. And this week OpenAI showcased a new version of ChatGPT that could once again change people’s relationship with the technology in powerful and perhaps problematic new ways.

The departures of Sutskever and Leike come shortly after OpenAI’s latest big reveal—a new “multimodal” AI model called GPT-4o that allows ChatGPT to see the world and converse in a more natural and humanlike way. A livestreamed demonstration showed the new version of ChatGPT mimicking human emotions and even attempting to flirt with users. OpenAI has said it will make the new interface available to paid users within a couple of weeks.

There is no indication that the recent departures have anything to do with OpenAI’s efforts to develop more humanlike AI or to ship products. But the latest advances do raise ethical questions around privacy, emotional manipulation, and cybersecurity risks. OpenAI maintains another research group called the Preparedness team which focuses on these issues.


Text

WIP Wednesday

Smut with Agi and Astarion. cw pregnancy, breeding

“And this?”

“Ah-amazing, my sweet. Perfect. Keep going. Please.” Don’t stop. Never stop. Want to feel like this forever… With every passing second, he was more aware of her soft body against his---her heaving breasts against his back, the leg that was hooked over his thigh, her beautiful, perfect hand on his cock, and the warmth. Gods, the warmth. She’s so warm and soft and so sweet and so painfully gentle with me always.

“Now the real question is,” she said, biting her lip playfully. “Do you want to finish inside me or not? Because I know you love it. Do you want to, love?” Agnetha whispered, gripping his cock a little harder.

Astarion grinned. Just like the dream, except what comes next. “Oh yes, please! Though, I don’t necessarily need to.” Halsin confirmed what we hoped and prayed was true…a child, who should arrive in several months’ time. The most delightful surprise that I will be revealing at our wedding reception in two days. “You’re already so filled up, darling. Can you take more of my seed?”

She laughed softly. “You know I can, my beautiful love. You’d fill me with everything you have, and it won’t be enough…”

He growled, wiggling out of her hold and turning on his other side to kiss her as deeply and passionately as he could. I love her. I love her. I love her. I love her. “On your back, my pretty butter bun. Time to fill you again…” His eyes never left hers as she lay flat on her back. He then smiled as she reached for his face. “And again…” He kissed her once. “And again…” Twice. “And again…”

“Are we talking about tonight or how many children you’d like us to have?” Agnetha teased, her hands drifting upwards towards his ears. Oh you naughty girl… “Because that’s four, I think?”

He barked a laugh as he kissed her jaw. “Four to start. At the very least. Then we can go from there. I personally wouldn’t be opposed to ten—”

“Four to ten is a hell of a leap, Mr. Ancunin.” She grinned. Agnetha’s freckled fingers traced the shells of his ears. “How does one make it, I wonder?”

“It’s so utterly adorable when you tease me, sweetness. You’re a smart girl, darling. I think you know how one gets from four to ten…” His lips captured hers in a heated kiss. “You simply add six.”

#agnetha wildheart#astarion#astarion x tav#astarion ancunin#plus size tav#human tav#agi wildheart#agi x astarion#wip wednesday#asatarion smut


Text

1892

What's your favourite flavour of soda, pop or whatever else you call it?:

I don't drink soda at all. I never enjoyed the fizziness it has and I always found it more uncomfortable/painful than refreshing.

What level of brightness do you usually keep your phone at?:

It's usually at max brightness for which I'll get occasionally made fun of since it's such an oldie thing to do lol. The only time I'll turn it all the way down is when I'm already in bed trying to fall asleep.

Have you ever attended a religious or private school?:

Private Catholic school for 14 years, which from experience is also the easiest and quickest way to turn your kid into a rebel hah.

Do you have any pets and are they cuddly?:

I have two dogs and a cat – Cooper is on-off cuddly, meaning he'll sometimes be bothered and show it if you get too close. Agi's super affectionate; will plop right next to you and doesn't ever mind being squished and hugged and kissed, even if you do it aggressively.

Max is the newest member of the family and is surprisingly affectionate. I've had mostly negative experiences with cats so he's been great at changing that for me (him and Miki, but unfortunately Miki passed away a week ago, just days after we picked him up from the streets).

What's the worst job you've ever had?:

I've had one job ever so I can't really compare.

How many cars does your household own?:

We have three.

Do you know anyone named Edward or any nickname of that?:

I have a cousin with a variation of that name; he was named after his grandfather (my great-uncle-in-law).

What time do you usually have dinner?:

When my dad is home, anywhere between 7:30-8. When he's gone, my siblings and I don't follow a set schedule as we all just kind of eat by ourselves.

Are there any cracks or scuffs on your phone?:

Impressively enough, none. I'm notorious for ending the lives of all my past phones from dropping them endless times, but my iPhone 13 seems to be stubbornly indestructible. My screen protector has a bubble from the time I needed to get the screen fixed, but that's it and it doesn't even count as a scratch haha.

What's your favourite meat?:

Pork.

Do you need glasses to read or drive or need them all the time?:

I need them all the time, otherwise I'm a hopeless case (cause?).

How did you celebrate your last New Year's Eve?:

The day part was a little quiet, but we had media noche with extended family in the evening and we pretty much just talked and listened to music until we entered 2024. When our guests left, I had a few glasses of wine and had my family watch The Beyoncé Experience with me until I dropped my glass and nicked my foot, stopping the party altogether lol.

Is the internet fast where you live?:

It's fast for me because it's the internet speed I'm used to, but I know for a fact that ours is very shitty from a global POV.

What is your favourite meal of the day and why?:

Dinner. It's typically the largest meal, and the best dishes are usually saved for dinner, so.

Do you like long surveys or short surveys better?:

Such a safe and boring answer, but medium-length surveys are best for me – anywhere between 40 and 60 questions.

Xbox, PlayStation or neither?:

PlayStation.

Have you ever been to a cocktail bar?:

Lots of times.

Do you consider yourself a fast typer?:

I am, yes.

What's the best amusement park you've ever visited?:

Universal Studios in Singapore was a lot of fun.

Do you keep the cabinets in your kitchen and bathroom organised?:

Yes.

Have you ever had a romantic fling?:

Nope.

Are you a very forgetful person?:

Just about certain things, but I'd say in general my memory is pretty sharp.

What was the last movie you saw in the cinema?:

BTS in Busan, 1 year and 5 months ago haha. I never go to the cinema.

How old were you when you got your first car?:

I was 17 when my dad handed me the Mirage.

What colour is your shampoo?:

White.

Are you doing anything tomorrow?:

Just work, but Friday left me in such a pissy mood so I'm not looking forward to tomorrow very much. I might also need to use my lunch break to take Max to the vet for his first set of vaccines, as we weren't able to do so today.

Do you know anyone who's gotten pregnant over the age of 40?:

Yes.

Who does most of the grocery shopping in your home?:

My parents.

Have you ever been approached by someone in public preaching about religion?:

Those people roam around my university all the time, but fortunately I never encountered any of them during my time there.

Are you listening to music right now? If so, what's the theme of the lyrics?:

Being okay and accepting yourself despite your fuckups, because you are all you have. Uhgood by RM.

What was the last thing you had to eat?:

Pizza, shrimp, and lumpia. Pretty damn great combination if you ask me hahaha.


Note

Could we please get general relationship headcanons for Ann from Persona 5?

author's note: Ann my beloved. My best friend in the whole wide world. I see a lot of myself in her actually, if I weren't so reserved lol. I bestow upon you the headcanons. Please, enjoy and thank you for the request!

rating: general

fandom: persona 5

pairing: ann takamaki x gn!reader

warnings: mentions of objectification of women's bodies

word count: 671

summary: What would Ann be like in a relationship?

Oh gosh Ann, I love her with all my heart. There’s a reason her element alignment is Agi (fire): she’s a passionate and driven woman who will stop at nothing to reach her goals. So, I imagine that when her mind is set on pursuing someone romantically, she would be the one to pull out all of the stops, but only if she’s already comfortable around them, perhaps a friend or acquaintance she has a positive, trusting history with. I definitely don’t think she would want to be in a relationship with someone before being friends with them, just to make sure their vibes are proper.

Chocolate on Valentine’s Day? She’s suave as all hell about it. Flowers? Any time you meet up to hang out, she’ll sneak off to the florist and get you a single rose (or whatever your favorite flower is, once she figures it out). A nice night out? I’d imagine that once her modeling career takes off (and why wouldn’t it?), she would treat you to a delectable, fancy all-you-can-eat buffet and take you on a walk through Inokashira Park.

Her love languages are acts of service and gift giving, the two going hand in hand. She loves to do things for people, to show them that she cares through what she does. That doesn’t mean she doesn’t also appreciate a good gift, though. Perhaps buy her that makeup collab set she’s been talking about all month? Or the new album from her favorite band that just came out? Just to show you listen to her and care about her interests as much as she cares about yours!

Despite her forward nature when pursuing a romantic interest, she’s pretty shy about physical contact. The first time you hug her, she’s stiff as a board and takes a minute to relax into it. You initiate your first kiss together, and it flusters her beyond all belief. This stems from her negative experience being objectified as a model, especially as a woman with traditionally Western features (naturally blonde hair and blue eyes) in Japan. She definitely has to be eased into it very gently, and reassured consistently along the way that you’re not there just for her physicality, but for her as a complete person. Either way, she’s not really touchy-feely, but if your love language is physical touch, I guarantee you that she will do her best to make sure you feel as loved as you make her feel.

I think the Phantom Thieves would all really enjoy your presence when you’re introduced to them (if you don’t already know them), though you do make Morgana bicker way too much. If it is your first time meeting Ann’s friends, know that their opinion is incredibly valuable to her; she trusts their instincts as much as her own. It would be best to make a good impression! Especially with Shiho: I imagine that she’s Ann’s personal shadow defender and will hurt you if you do anything to Ann. (Don’t let her kind and outgoing facade after her recovery from depression fool you; Shiho can and will throw hands for Ann, who played such a huge role in saving her life.)

Overall, I imagine day-to-day life with her to be very exciting and fun. Ann is always down for an adventure to the beach or the amusement park to spice up the day, but she’s more than content staying bundled up inside or just hanging out at Leblanc all day with you. Her personality is unpredictable and she can have an incredibly short temper, but it's usually in jest. It’s easy to tell from her tone of voice whether Ann is being sarcastically pissed off for comedic effect or is really angry. She’s very impatient, and you catch yourself teasing her about her overreaction to the crepe stand’s wait times until she’s thoroughly flustered and “enraged.” She secretly enjoys it, not that she’ll ever admit it.

#persona#persona 5#persona fanfiction#persona 5 fanfiction#ann takamaki#ann takamaki x reader#request#headcanon#gender neutral reader#GOD i love ann she's so awesome#i want to be her when i grow up.


Text

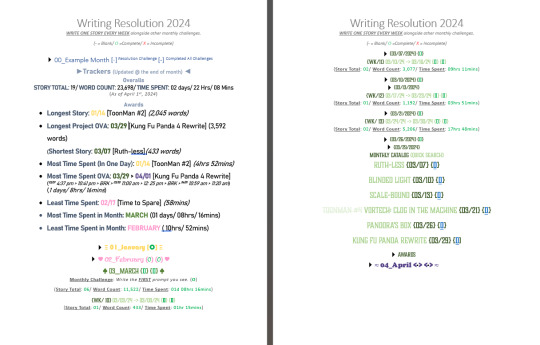

MARCH Monthly Archive / APRIL Update

Well, March was a better month for my writing, at least. Threw in two extra stories, so now I'm up by three weeks, and only had one story last month that I absolutely did not like. I'm also taking a few more days when writing some stories, and I feel like the quality has improved because of it.

On the more positive side, I blew up outta nowhere last month. Mostly on Tumblr, but Reddit showed me a little love too, and I gotta say it really made my month. I was just goofin' off at work when I saw a notice on my phone, and now there's definitely a video of me dancing like a kid on the security cameras. So thanks for that!

I'd also like to give a shout-out to @agirlandherquill (Tumblr) & @kentuckyhobbit (Tumblr) for being my first followers! I hope you both enjoy the ride!

I'm also doing a new thing with these archive posts where I give you my "Best Foot Forward" story. Basically, if you had to read one story from me last month, the BFF would be it.

That being said, let's get to the archive:

|| || || || || || ||

S10/Wk-10: Ruth-Less

Started Writing: 03/07

Prompt: Your house is haunted by a ghost, and, upset it can't scare you out, you find it trying to be passive-aggressive now.

Prompt By: u/NinjaProfessional823 (Reddit)

S11/Wk-11: Blinded Light

Started Writing: 03/10

Prompt: "You don't even know what's out there!"

Prompt By: @seaside-writings (Tumblr)

S12/Wk-11: Scale-Bound [BFF]

Started Writing: 03/13

Prompt: You are a mighty dragon king, the strongest magical being. You're determined to conquer the world, but a hero turned you into a cute small dragon. Now, you are looking for a human girl to help you get strong again and dominate the world.

Prompt By: u/basafish (Reddit)

S13/Wk-12: ToonMan #4 Vortech: Clog in the Machine

Started Writing: 03/21

Prompt: "You called me." / "And you really came."

Prompt By: @creativepromptsforwriting (Tumblr)

S14/Wk-13: Pandora's Box

Started Writing: 03/26

Prompt: A mundane and antiquated sub-agency in the US Government was the 1st to stumble across AGI. An internal investigation determined a press release unnecessary. Unaware of the power they control, 12 bureaucrats are assembled to determine the policy and implementation of their new tool.

Prompt By: u/No-assistance1503 (Reddit)

S15/Wk-13: Kung Fu Panda 4 Rewrite

Started Writing: 03/29

Prompt: Kung Fu Panda 4 felt a little lacking, so I wanted to try my hand at maybe making it better.

Prompt By: Me (ToonMan)

|| || || || || || ||

Previous "Best Foot Forward" Stories:

JAN [S4/Wk-02]: ToonMan #1: Comical Crime Fighter

Started Writing: 01/12

Prompt: You have the superpower of slapstick comedy.

Prompt By: u/Paper_Shotgun (Reddit)

FEB [S9/Wk-05]: Not Enough Time

Started Writing: 02/02

Prompt: [TT] Theme Thursday - Exhaustion

Prompt By: u/AliciaWrites (Reddit)

|| || || || || || ||

Looking back, March was actually a pretty solid month. Let's see if I can keep it up going into April.

Stay safe, drink plenty of water, and be kind to yourself and others!

ToonMan, AWAY!

#writing blog#blog#journal#writing#creative writing#writing prompts#writers on tumblr#writeblr#writerscommunity#short stories#writers#3 out of 12 complete#still haven't missed a week#THANK YOU ALL FOR READING!


Text

FebruarOC - Kaedmon & Uriah

This is just their joint drabble because it was long and I wanted to pester you all one final time this month :')

And because I forgot to say it on their individual posts, while I don't have a playlist for either of them, I do have one song for each of them that I stick on loop while writing.

Uriah: The Dead South's "Yours to Keep"

Kaedmon: Chase Petra's "Pacific"

This does just sort of cut off because I got too lazy to continue and didn't want to be here all day LOL

++++

Uriah couldn’t believe this.

Not only did the rebel girl get the jump on him, but then they were both caught by the most incompetent smugglers that he had ever seen.

Yeah, well, who’s incompetent now? he thinks darkly. At least they tied his hands in front of him like the amateurs they are.

And no one knows he’s ISB. Which, well, if he grew tired of playing the part, he could snap the bindings easily and use his hidden comm device to call for reinforcements. His cover would be blown, of course, and it would be a stupid way to get a mark on his short but unblemished record.

He could check one roughshod smuggler group off the list and one rebel agent, too. A net win, in the grand scheme of things.

Uriah’s gaze shifts over to the rebel; there’s still a twig in her hair and a possible shadow of a bruise on her cheek, though it’s hard to tell in the dim lighting of the cheap lanterns. She has a Fulcrum logo stitched into the seam of her jacket, but he has a hard time imagining that she’s the infamous rebel spy that ISB has been building a dossier on. If he had to categorize her, she’d fall somewhere above girl in over her head, but maybe on par with the rest of these smugglers.

She’s probably just making a desperate grab for the Rebellion's attention.

He frowns when he notices the rapid rise and fall of her chest, ragged, gasping breaths even while unconscious. She had been wearing a respirator, hadn’t she?

“Hey,” he tries, but his voice comes out more like a croak and he has to cough to clear it. “Hey!”

Four heads turn in his direction, the others opting instead to ignore him.