#AI-based object Recognition

Explore tagged Tumblr posts

Text

AI-based object Recognition | Private Property Surveillance Vinnytsia

AI-based object recognition for private property surveillance in Vinnytsia. Enhance security with advanced, real-time monitoring solutions.

0 notes

Note

Hi Nipuni, I hope you’re doing well. I’m just curious what’s your opinion about the rampant use of AI in art lately especially how it impacts artists and possibly stealing artists work to train it. As a fellow artist I’m curious of what other artists would think of this. I’ve seen many beginners artists losing hope in pursuing art because of AI and it truly breaks my heart. I hope artists wouls stay doing art no matter what because it’s very important and their art will always be valuable no matter what. By the way, you don’t have to reply to this if this particular topic is not something you’re comfortable with. I love your art so much and I wish all the best for you, you are an incredible artist and I love the energy you always put into your art🫶

Hello, I am doing great! I hope you are too! ☺️ I'm so sorry I'm so late to reply. I've been following the generative AI conversation on and off for so long now and I have yet to find a single argument that justifies it's cost. I don't think I have much to add that hasn't been said before. I think it is unethical, unsustainable, irresponsible, dangerous, harmful, theft, etc. It is neither intelligent nor generative, it doesn't think, it can't reason it's guided guessing based on statistics and pattern recognition. it's not creating anything new either it's just pulling from a database of stolen human content and mashing it together, it can't be trained on itself either so it needs constant human input too. I just don't see the point? 🫠 It's some kind of gimmicky toy made to appeal to the most annoying people imaginable by the most annoying people imaginable to profit from and at immense cost to everyone else. It's negatively impacting every creative industry in every way and even affecting the way we learn, communicate and engage with media. It's invading everything and making it objectively worse lmao. It's also dangerous in countless ways. An environmental disaster too and for what!! aaaaa It feels like a huge cultural setback and technological dead end and it's so depressing. I wish I had something positive to add after so much ranting but I don't 😔 The impact of this on creative fields among others is undeniable and I fear will make things harder for a while but I'd like to think that it's still early days and there are so many people fighting to regulate this mess and we all can help by advocating and boycotting at the very least.

If anything this whole debacle has made me examine my relationship with art more deeply and I realize I love the process of making art more than I love the result. The space between idea and finished piece that is all me, I'm in there!! and I love it there!! I can't see myself doing anything else or relegating this part. This will change things at a societal and economical level but people will always make art. I don't know where I'm going with this, I don't think the philosophical is a good angle to center the conversation on either, but I guess it's a comfort 😭 'In the dark times Will there also be singing? Yes, there will also be singing. About the dark times.' poem comes to mind

This reply got away from me oh my god sjfkhg I'm focusing on the art side of things here of course but I could go on about the damage to plenty of other fields but I don't feel qualified enough aaaa anyway Thank you so much for the kind words you are very sweet and I hope you don't let all this discourage you 🥺❤️ we will be alright!!

160 notes

·

View notes

Text

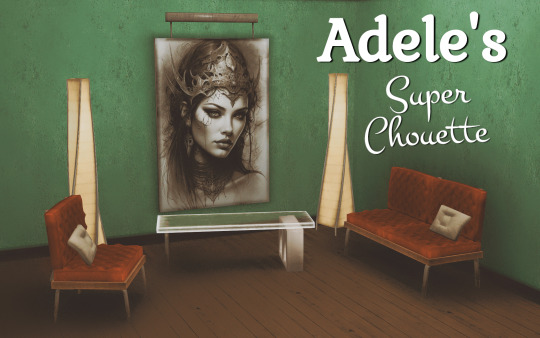

Adele's Super Chouette for TS3

As promised, Adele's Super Chouette is ready for TS3. Everything is tested by Graf Sisters. The only problem that I can think of is that I had to clone an ITF table because I couldn't find another object with 4 mesh groups. So if you don't have ITF, the coffee tables may not appear in your game.

And If you're aware of any base game object with 4 mesh groups, please inform me so I can try to make a more BG-friendly coffee table.

As usual, a few things to know before download:

The Loveseat is the master of textures for the armchair and sofa. I tried to lower the polys in low detail lods but they're higher than what's preferable.

Loveseat, Armchair, Sofa -> 4 Channels - 4 presets

Loveseat Polycount -> HLOD: 2276 MLOD: 1483

Armchair Polycount -> HLOD: 1324 MLOD: 886

Sofa Polycount -> HLOD: 3348 MLOD: 2029

Coffee tables use the same textures, but they're not dependent on each other in case you want one and not the other. The one with the brick is the original; I'm not a fan. So I made another one with metal legs. If you'll have both and merge your files, texture resources will be merged perfectly - having the same instance numbers.

Coffee Table -> 2 Channels - 1 Preset

Polycount -> HLOD: 190 MLOD: 166

Coffee Table Leg Edit -> 1 Channel - 1 Preset

Polycount -> HLOD: 218 MLOD: 192

Huge Wall Poster: I decided not to use Adele's art for the poster. Instead, I used art by Pixabay user 1tamara2 licensed under Creative Commons Attribution 3.0. Note that these images are ai generated. If you have a problem with that, say, it reminds eerily of a work of an artist you know, please inform me.

1 Channel - 5 Presets

Polycount -> HLOD: 80 MLOD: 62

Floor Lamp

2 Channels - 3 Presets

Polycount -> HLOD: 522 MLOD: 372

I’ve also included the collection file which comes with its own icon for easy recognition. As always, I might have missed something; if you find anything weird don’t hesitate to tell me so I can try and fix it. I hope you’ll enjoy this beautiful set by talented Adele. Happy simming.

- Credits -

Adele for the meshes and textures.

1tamara2 from Pixabay for the art.

Google Fonts Montez, Kurale

Made with: SimPE, GIMP, s3oc, s3pe, Blender, Texture Tweaker 3, and TSRW

@pis3update @kpccfinds @xto3conversionsfinds

- DOWNLOAD -

:: MEDIAFIRE | SFS ::

#ts3#ts3cc#gg#download: buy#buy: comfort#buy: surfaces#buy: decor#buy: lighting#buy: set#creator: adele

128 notes

·

View notes

Text

Bayesian Active Exploration: A New Frontier in Artificial Intelligence

The field of artificial intelligence has seen tremendous growth and advancements in recent years, with various techniques and paradigms emerging to tackle complex problems in the field of machine learning, computer vision, and natural language processing. Two of these concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although both techniques have been researched separately, their synergy has the potential to revolutionize AI by creating more efficient, accurate, and effective systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without actively influencing the data collection process. However, this approach can have limitations, especially in complex and dynamic environments. Active interference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions to collect more relevant information. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

One of the first milestones in active inference was the development of the "query by committee" algorithm by Freund et al. in 1997. This algorithm used a committee of models to determine the most meaningful data points to capture, laying the foundation for future active learning techniques. Another important milestone was the introduction of "uncertainty sampling" by Lewis and Gale in 1994, which selected data points with the highest uncertainty or ambiguity to capture more information.

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, thereby making more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating the prior distribution using new data, is a powerful tool for learning and decision-making.

One of the first milestones in Bayesian mechanics was the development of Bayes' theorem by Thomas Bayes in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjosh: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)

youtube

Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube

6 notes

·

View notes

Text

How will AI be used in health care settings?

Artificial intelligence (AI) shows tremendous promise for applications in health care. Tools such as machine learning algorithms, artificial neural networks, and generative AI (e.g., Large Language Models) have the potential to aid with tasks such as diagnosis, treatment planning, and resource management. Advocates have suggested that these tools could benefit large numbers of people by increasing access to health care services (especially for populations that are currently underserved), reducing costs, and improving quality of care.

This enthusiasm has driven the burgeoning development and trial application of AI in health care by some of the largest players in the tech industry. To give just two examples, Google Research has been rapidly testing and improving upon its “Med-PaLM” tool, and NVIDIA recently announced a partnership with Hippocratic AI that aims to deploy virtual health care assistants for a variety of tasks to address a current shortfall in the supply in the workforce.

What are some challenges or potential negative consequences to using AI in health care?

Technology adoption can happen rapidly, exponentially going from prototypes used by a small number of researchers to products affecting the lives of millions or even billions of people. Given the significant impact health care system changes could have on Americans’ health as well as on the U.S. economy, it is essential to preemptively identify potential pitfalls before scaleup takes place and carefully consider policy actions that can address them.

One area of concern arises from the recognition that the ultimate impact of AI on health outcomes will be shaped not only by the sophistication of the technological tools themselves but also by external “human factors.” Broadly speaking, human factors could blunt the positive impacts of AI tools in health care—or even introduce unintended, negative consequences—in two ways:

If developers train AI tools with data that don’t sufficiently mirror diversity in the populations in which they will be deployed. Even tools that are effective in the aggregate could create disparate outcomes. For example, if the datasets used to train AI have gaps, they can cause AI to provide responses that are lower quality for some users and situations. This might lead to the tool systematically providing less accurate recommendations for some groups of users or experiencing “catastrophic failures” more frequently for some groups, such as failure to identify symptoms in time for effective treatment or even recommending courses of treatment that could result in harm.

If patterns of AI use systematically differ across groups. There may be an initial skepticism among many potential users to trust AI for consequential decisions that affect their health. Attitudes may differ within the population based on attributes such as age and familiarity with technology, which could affect who uses AI tools, understands and interprets the AI’s output, and adheres to treatment recommendations. Further, people’s impressions of AI health care tools will be shaped over time based on their own experiences and what they learn from others.

In recent research, we used simulation modeling to study a large range of different of hypothetical populations of users and AI health care tool specifications. We found that social conditions such as initial attitudes toward AI tools within a population and how people change their attitudes over time can potentially:

Lead to a modestly accurate AI tool having a negative impact on population health. This can occur because people’s experiences with an AI tool may be filtered through their expectations and then shared with others. For example, if an AI tool’s capabilities are objectively positive—in expectation, the AI won’t give recommendations that are harmful or completely ineffective—but sufficiently lower than expectations, users who are disappointed will lose trust in the tool. This could make them less likely to seek future treatment or adhere to recommendations if they do and lead them to pass along negative perceptions of the tool to friends, family, and others with whom they interact.

Create health disparities even after the introduction of a high-performing and unbiased AI tool (i.e., that performs equally well for all users). Specifically, when there are initial differences between groups within the population in their trust of AI-based health care—for example because of one group’s systematically negative previous experiences with health care or due to the AI tool being poorly communicated to one group—differential use patterns alone can translate into meaningful differences in health patterns across groups. These use patterns can also exacerbate differential effects on health across groups when AI training deficiencies cause a tool to provide better quality recommendations for some users than others.

Barriers to positive health impacts associated with systematic and shifting use patterns are largely beyond individual developers’ direct control but can be overcome with strategically designed policies and practices.

What could a regulatory framework for AI in health care look like?

Disregarding how human factors intersect with AI-powered health care tools can create outcomes that are costly in terms of life, health, and resources. There is also the potential that without careful oversight and forethought, AI tools can maintain or exacerbate existing health disparities or even introduce new ones. Guarding against negative consequences will require specific policies and ongoing, coordinated action that goes beyond the usual scope of individual product development. Based on our research, we suggest that any regulatory framework for AI in health care should accomplish three aims:

Ensure that AI tools are rigorously tested before they are made fully available to the public and are subject to regular scrutiny afterward. Those developing AI tools for use in health care should carefully consider whether the training data are matched to the tasks that the tools will perform and representative of the full population of eventual users. Characteristics of users to consider include (but are certainly not limited to) age, gender, culture, ethnicity, socioeconomic status, education, and language fluency. Policies should encourage and support developers in investing time and resources into pre- and post-launch assessments, including:

pilot tests to assess performance across a wide variety of groups that might experience disparate impact before large-scale application

monitoring whether and to what extent disparate use patterns and outcomes are observed after release

identifying appropriate corrective action if issues are found.

Require that users be clearly informed about what tools can do and what they cannot. Neither health care workers nor patients are likely to have extensive training or sophisticated understanding of the technical underpinnings of AI tools. It will be essential that plain-language use instructions, cautionary warnings, or other features designed to inform appropriate application boundaries are built into tools. Without these features, users’ expectations of AI capabilities might be inaccurate, with negative effects on health outcomes. For example, a recent report outlines how overreliance on AI tools by inexperienced mushroom foragers has led to cases of poisoning; it is easy to imagine how this might be a harbinger of patients misdiagnosing themselves with health care tools that are made publicly available and missing critical treatment or advocating for treatment that is contraindicated. Similarly, tools used by health care professionals should be supported by rigorous use protocols. Although advanced tools will likely provide accurate guidance an overwhelming majority of the time, they can also experience catastrophic failures (such as those referred to as “hallucinations” in the AI field), so it is critical for trained human users to be in the loop when making key decisions.

Proactively protect against medical misinformation. False or misleading claims about health and health care—whether the result of ignorance or malicious intent—have proliferated in digital spaces and become harder for the average person to distinguish from reliable information. This type of misinformation about health care AI tools presents a serious threat, potentially leading to mistrust or misapplication of these tools. To discourage misinformation, guardrails should be put in place to ensure consistent transparency about what data are used and how that continuous verification of training data accuracy takes place.

How can regulation of AI in health care keep pace with rapidly changing conditions?

In addition to developers of tools themselves, there are important opportunities for unaffiliated researchers to study the impact of AI health care tools as they are introduced and recommend adjustments to any regulatory framework. Two examples of what this work might contribute are:

Social scientists can learn more about how people think about and engage with AI tools, as well as how perceptions and behaviors change over time. Rigorous data collection and qualitative and quantitative analyses can shed light on these questions, improving understanding of how individuals, communities, and society adapt to shifts in the health care landscape.

Systems scientists can consider the co-evolution of AI tools and human behavior over time. Building on or tangential to recent research, systems science can be used to explore the complex interactions that determine how multiple health care AI tools deployed across diverse settings might affect long-term health trends. Using longitudinal data collected as AI tools come into widespread use, prospective simulation models can provide timely guidance on how policies might need to be course corrected.

6 notes

·

View notes

Text

ARMxy Series Industrial Embeddedd Controller with Python for Industrial Automation

Case Details

1. Introduction

In modern industrial automation, embedded computing devices are widely used for production monitoring, equipment control, and data acquisition. ARM-based Industrial Embeddedd Controller, known for their low power consumption, high performance, and rich industrial interfaces, have become key components in smart manufacturing and Industrial IoT (IIoT). Python, as an efficient and easy-to-use programming language, provides a powerful ecosystem and extensive libraries, making industrial automation system development more convenient and efficient.

This article explores the typical applications of ARM Industrial Embeddedd Controller combined with Python in industrial automation, including device control, data acquisition, edge computing, and remote monitoring.

2. Advantages of ARM Industrial Embeddedd Controller in Industrial Automation

2.1 Low Power Consumption and High Reliability

Compared to x86-based industrial computers, ARM processors consume less power, making them ideal for long-term operation in industrial environments. Additionally, they support fanless designs, improving system stability.

2.2 Rich Industrial Interfaces

Industrial Embeddedd Controllerxy integrate GPIO, RS485/232, CAN, DIN/DO/AIN/AO/RTD/TC and other interfaces, allowing direct connection to various sensors, actuators, and industrial equipment without additional adapters.

2.3 Strong Compatibility with Linux and Python

Most ARM Industrial Embeddedd Controller run embedded Linux systems such as Ubuntu, Debian, or Yocto. Python has broad support in these environments, providing flexibility in development.

3. Python Applications in Industrial Automation

3.1 Device Control

On automated production lines, Python can be used to control relays, motors, conveyor belts, and other equipment, enabling precise logical control. For example, it can use GPIO to control industrial robotic arms or automation line actuators.

Example: Controlling a Relay-Driven Motor via GPIO

import RPi.GPIO as GPIO import time

# Set GPIO mode GPIO.setmode(GPIO.BCM) motor_pin = 18 GPIO.setup(motor_pin, GPIO.OUT)

# Control motor operation try: while True: GPIO.output(motor_pin, GPIO.HIGH) # Start motor time.sleep(5) # Run for 5 seconds GPIO.output(motor_pin, GPIO.LOW) # Stop motor time.sleep(5) except KeyboardInterrupt: GPIO.cleanup()

3.2 Sensor Data Acquisition and Processing

Python can acquire data from industrial sensors, such as temperature, humidity, pressure, and vibration, for local processing or uploading to a server for analysis.

Example: Reading Data from a Temperature and Humidity Sensor

import Adafruit_DHT

sensor = Adafruit_DHT.DHT22 pin = 4 # GPIO pin connected to the sensor

humidity, temperature = Adafruit_DHT.read_retry(sensor, pin) print(f"Temperature: {temperature:.2f}°C, Humidity: {humidity:.2f}%")

3.3 Edge Computing and AI Inference

In industrial automation, edge computing reduces reliance on cloud computing, lowers latency, and improves real-time response. ARM industrial computers can use Python with TensorFlow Lite or OpenCV for defect detection, object recognition, and other AI tasks.

Example: Real-Time Image Processing with OpenCV

import cv2

cap = cv2.VideoCapture(0) # Open camera

while True: ret, frame = cap.read() gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY) # Convert to grayscale cv2.imshow("Gray Frame", gray)

if cv2.waitKey(1) & 0xFF == ord('q'): break

cap.release() cv2.destroyAllWindows()

3.4 Remote Monitoring and Industrial IoT (IIoT)

ARM industrial computers can use Python for remote monitoring by leveraging MQTT, Modbus, HTTP, and other protocols to transmit real-time equipment status and production data to the cloud or build a private industrial IoT platform.

Example: Using MQTT to Send Sensor Data to the Cloud

import paho.mqtt.client as mqtt import json

def on_connect(client, userdata, flags, rc): print(f"Connected with result code {rc}")

client = mqtt.Client() client.on_connect = on_connect client.connect("broker.hivemq.com", 1883, 60) # Connect to public MQTT broker

data = {"temperature": 25.5, "humidity": 60} client.publish("industrial/data", json.dumps(data)) # Send data client.loop_forever()

3.5 Production Data Analysis and Visualization

Python can be used for industrial data analysis and visualization. With Pandas and Matplotlib, it can store data, perform trend analysis, detect anomalies, and improve production management efficiency.

Example: Using Matplotlib to Plot Sensor Data Trends

import matplotlib.pyplot as plt

# Simulated data time_stamps = list(range(10)) temperature_data = [22.5, 23.0, 22.8, 23.1, 23.3, 23.0, 22.7, 23.2, 23.4, 23.1]

plt.plot(time_stamps, temperature_data, marker='o', linestyle='-') plt.xlabel("Time (min)") plt.ylabel("Temperature (°C)") plt.title("Temperature Trend") plt.grid(True) plt.show()

4. Conclusion

The combination of ARM Industrial Embeddedd Controller and Python provides an efficient and flexible solution for industrial automation. From device control and data acquisition to edge computing and remote monitoring, Python's extensive library support and strong development capabilities enable industrial systems to become more intelligent and automated. As Industry 4.0 and IoT technologies continue to evolve, the ARMxy + Python combination will play an increasingly important role in industrial automation.

2 notes

·

View notes

Text

Top 5 Reasons Companies Partner with Joaquin Fagundo for IT Strategy

In today’s fast-paced digital world, businesses are constantly evolving to keep up with the changing technological landscape. From cloud migrations to IT optimization, many organizations are turning to expert technology executives to guide them through complex transformations. One such executive who has gained significant recognition is Joaquin Fagundo, a technology leader with over two decades of experience in driving digital transformation. Having worked at prominent firms like Google, Capgemini, and Tyco, Fagundo has built a reputation for delivering large-scale, innovative solutions to complex IT challenges. But what makes Joaquin Fagundo such a sought-after leader in IT strategy? Here are the Top 5 Reasons Companies Partner with Joaquin Fagundo for IT Strategy.

1. Proven Expertise in Digital Transformation

Joaquin Fagundo’s career spans more than 20 years, during which he has worked on high-stakes projects that require a deep understanding of digital transformation. His experience spans various industries, helping businesses leverage cutting-edge technologies to improve operations, enhance efficiency, and streamline processes.

Fagundo has helped organizations transition from legacy systems to modern cloud-based infrastructure, allowing them to scale effectively and stay competitive in an increasingly digital world. His hands-on experience in cloud strategy, automation, and enterprise IT makes him an invaluable asset for any business looking to adapt to new technologies. Companies partner with Joaquin Fagundo because they know they will receive strategic insights and actionable plans for driving their digital initiatives forward.

2. Strategic Cloud Expertise for Scalable Solutions

Cloud technology is no longer a trend—it is the backbone of modern businesses. Joaquin Fagundo has an extensive background in cloud strategy, having successfully led major cloud migrations for several large organizations. Whether it's migrating to a public cloud, optimizing hybrid infrastructures, or implementing cloud-native applications, Fagundo’s expertise helps businesses navigate these complexities with ease.

Companies partnering with Joaquin Fagundo can expect a tailored cloud strategy that ensures scalability and cost-efficiency. His deep knowledge of cloud services like AWS, Google Cloud, and Microsoft Azure enables him to develop bespoke solutions that meet specific business needs while minimizing downtime and disruption. His approach not only focuses on the technical aspects of cloud migration but also on aligning cloud solutions with overarching business goals, ensuring that companies can reap the full benefits of cloud technology.

3. Strong Track Record of Driving Operational Efficiency

Operational efficiency is a top priority for most businesses today. Companies partner with Joaquin Fagundo because of his exceptional ability to optimize IT operations and implement automation technologies that enhance productivity while reducing costs. Whether it’s through automating routine tasks or improving infrastructure management, Fagundo’s strategies enable organizations to streamline their operations and focus on growth.

Fagundo’s leadership in infrastructure optimization has allowed businesses to reduce waste, lower operational expenses, and improve the performance of their IT systems. His expertise in automation tools and AI-driven insights empowers businesses to become more agile and responsive to market demands. By partnering with Fagundo, companies are able to make smarter decisions, optimize resource utilization, and achieve long-term sustainability.

4. Business-Technology Alignment

One of the most significant challenges that organizations face is aligning their IT strategies with broader business goals. While technology can drive innovation and efficiency, it’s only effective when it is tightly integrated with the company’s vision and objectives. Joaquin Fagundo understands this concept deeply and emphasizes the importance of business-technology alignment in every project he undertakes.

Fagundo’s approach to IT strategy is not just about implementing the latest technology, but also ensuring that it supports the company’s strategic direction. He works closely with leadership teams to identify key business objectives and tailors technology solutions that drive measurable business outcomes. This focus on alignment helps companies ensure that every IT investment contributes to long-term growth, profitability, and competitive advantage.

5. Trusted Leadership and Change Management Expertise

Digital transformation and IT strategy changes often require a significant cultural shift within an organization. Change management is a critical component of any IT transformation, and this is an area where Joaquin Fagundo excels. Over the years, Fagundo has successfully led large teams through organizational changes, helping them navigate complex transformations with minimal resistance.

Fagundo’s leadership skills are a key reason why companies partner with him. He is known for his ability to inspire teams, foster collaboration, and lead through uncertainty. His people-centric approach to technology implementation ensures that employees at all levels are equipped with the knowledge and tools they need to succeed. Whether it’s through training sessions, workshops, or mentorship, Fagundo prioritizes the human aspect of IT transformation, making the process smoother and more sustainable for everyone involved.

Conclusion

Partnering with a technology executive like Joaquin Fagundo can provide businesses with a competitive edge in an increasingly digital world. With his deep technical expertise, strategic insight, and ability to align technology with business goals, Fagundo has helped countless organizations successfully navigate their IT challenges. Whether it's through cloud strategy, digital transformation, or operational optimization, Joaquin Fagundo is the go-to leader for companies looking to stay ahead of the curve.

If your company is ready to accelerate its digital transformation journey and leverage cutting-edge technology for long-term success, partnering with Joaquin Fagundo could be the first step toward achieving your business goals. With his proven track record, expertise, and leadership, Fagundo is the ideal partner to guide your organization into the future of IT strategy.

2 notes

·

View notes

Text

The Social Credit System in China is a government-led initiative aimed at promoting trustworthiness in society by scoring individuals, businesses, and government institutions based on their behavior. While it’s often portrayed in Western media as a dystopian surveillance system, the reality is more nuanced. The system is still fragmented, evolving, and complex, blending both digital surveillance and bureaucratic rating mechanisms.

Here’s a detailed look at its structure, goals, mechanisms, and implications:

⸻

1. Origins and Goals

The Social Credit System (社会信用体系) was officially proposed in 2001 and formally outlined in 2014 by the State Council. Its main objectives are:

• Strengthen trust in market and social interactions.

• Encourage law-abiding behavior among citizens, businesses, and institutions.

• Prevent fraud, tax evasion, default on loans, and production of counterfeit goods.

• Enhance governance capacity through technology and data centralization.

It’s inspired by a mix of Confucian values (trustworthiness, integrity) and modern surveillance capitalism. It’s not a single unified “score” like a credit score in the West but rather a broad framework of reward-and-punishment mechanisms operated by multiple public and private entities.

⸻

2. Key Components

A. Blacklists and Redlists

• Blacklist: If an individual or business engages in dishonest or illegal behavior (e.g., court judgments, unpaid debts, tax evasion), they may be added to a “dishonest” list.

• Redlist: Those who follow laws and contribute positively (e.g., charitable donations, volunteerism) may be rewarded or publicized positively.

Examples of punishments for being blacklisted:

• Restricted from purchasing plane/train tickets.

• Difficulty in getting loans, jobs, or business permits.

• Public exposure (like having one’s name posted in public forums or apps).

Examples of rewards for positive behavior:

• Faster access to government services.

• Preferential treatment in hiring or public procurement.

• Reduced red tape for permits.

B. Fragmented Local Systems

Rather than one central system, there are hundreds of local pilots across China, often using different criteria and technologies. For example:

• Rongcheng (in Shandong Province) implemented a points-based system where citizens start at 1,000 points and gain or lose them based on specific actions.

• Hangzhou introduced systems where jaywalking, loud behavior on buses, or failing to show up in court could affect a personal credit profile.

Some local systems are app-based, while others are more bureaucratic and paper-based.

⸻

3. Surveillance and Technology Integration

A. Data Sources:

• Public records (tax, court, education).

• Private platforms (e.g., Alibaba, Tencent’s financial and social data).

• Facial recognition and CCTV: Often integrated with public security tools to monitor individuals in real-time.

B. AI and Big Data:

While the idea of a real-time, fully integrated AI-run system is more a long-term ambition than a reality, many systems use:

• Predictive analytics to flag high-risk individuals.

• Cross-agency data sharing to consolidate behavior across different parts of life.

However, this level of integration remains partial and uneven, with some cities far more advanced than others.

⸻

4. Criticisms and Concerns

A. Lack of Transparency

• Citizens are often unaware of what data is being used, how scores are calculated, or how to appeal decisions.

• There’s minimal oversight or independent auditing of the systems.

B. Social Control

• Critics argue the system encourages conformity, discourages dissent, and suppresses individual freedoms by rewarding obedience and penalizing perceived deviance.

• It may create a culture of self-censorship, especially on social media.

C. Misuse and Arbitrary Enforcement

• Cases have emerged where individuals were blacklisted due to clerical errors or as a result of political pressure.

• There are concerns about selective enforcement, where some citizens (e.g., activists) face harsher consequences than others.

⸻

5. Comparisons to Western Systems

It’s important to note:

• Western countries have private credit scores, employment background checks, social media tracking, and predictive policing—all of which can impact someone’s life.

• China’s system differs in that it’s state-coordinated, often public, and spans beyond financial behavior into moral and social conduct.

However, similar behavioral monitoring is increasingly used in tech-based social systems globally (e.g., Uber ratings, Airbnb reviews, Facebook data profiles), though usually without state-enforced punishments.

⸻

6. Current Status and Future Trends

Evolving System

• As of the mid-2020s, China is moving toward greater standardization of the credit system, especially for businesses and institutions.

• The National Credit Information Sharing Platform is becoming more central, aiming to integrate local experiments into a coherent framework.

Smart Cities and Governance

• The social credit system is increasingly linked with smart city infrastructure, predictive policing, and AI-powered surveillance.

• This aligns with the Chinese government’s broader vision of “digital governance” and technocratic legitimacy.

⸻

7. Key Takeaways

• Not one unified “score” like in fiction; it’s more like a patchwork of overlapping systems.

• Used as a governance tool more than a financial one.

• Integrates traditional values with modern surveillance.

• Viewed domestically as a way to restore trust in a society that has undergone rapid transformation.

• Internationally, it raises serious questions about privacy, freedom, and state overreach.

Needed clarification 😅

5 notes

·

View notes

Text

Consciousness vs. Intelligence: Ethical Implications of Decision-Making

The distinction between consciousness in humans and artificial intelligence (AI) revolves around the fundamental nature of subjective experience and self-awareness. While both possess intelligence, the essence of consciousness introduces a profound divergence. Now, we are going to delve into the disparities between human consciousness and AI intelligence, and how this contrast underpins the ethical complexities in utilizing AI for decision-making. Specifically, we will examine the possibility of taking the emotion out of the equation in decision-making processes and taking a good look at the ethical implications this would have

Consciousness is the foundational block of human experience, encapsulating self-awareness, subjective feelings, and the ability to perceive the world in a deeply personal manner. It engenders a profound sense of identity and moral agency, enabling individuals to discern right from wrong, and to form intrinsic values and beliefs. Humans possess qualia, the ineffable and subjective aspects of experience, such as the sensation of pain or the taste of sweetness. This subjective dimension distinguishes human consciousness from AI. Consciousness grants individuals the capacity for moral agency, allowing them to make ethical judgments and to assume responsibility for their actions.

AI, on the other hand, operates on algorithms and data processing, exhibiting intelligence that is devoid of subjective experience. It excels in tasks requiring logic, pattern recognition, and processing vast amounts of information at speeds beyond human capabilities. It also operates on algorithmic logic, executing tasks based on predetermined rules and patterns. It lacks the capacity for intuitive leaps and subjective interpretation, at least for now. AI processes information devoid of emotional biases or subjective inclinations, leading to decisions based solely on objective criteria. Now, is this useful or could it lead to a catastrophe?

The prospect of eradicating emotion from decision-making is a contentious issue with far-reaching ethical consequences. Eliminating emotion risks reducing decision-making to cold rationality, potentially disregarding the nuanced ethical considerations that underlie human values and compassion. The absence of emotion in decision-making raises questions about moral responsibility. If decisions lack emotional considerations, who assumes responsibility for potential negative outcomes? Emotions, particularly empathy, play a crucial role in ethical judgments. Eradicating them may lead to decisions that lack empathy, potentially resulting in morally questionable outcomes. Emotions contribute to cultural and contextual sensitivity in decision-making. AI, lacking emotional understanding, may struggle to navigate diverse ethical landscapes.

Concluding, the distinction between human consciousness and AI forms the crux of ethical considerations in decision-making. While AI excels in rationality and objective processing, it lacks the depth of subjective experience and moral agency inherent in human consciousness. The endeavor to eradicate emotion from decision-making raises profound ethical questions, encompassing issues of morality, responsibility, empathy, and cultural sensitivity. Striking a balance between the strengths of AI and the irreplaceable facets of human consciousness is imperative for navigating the ethical landscape of decision-making in the age of artificial intelligence.

#ai#artificial intelligence#codeblr#coding#software engineering#programming#engineering#programmer#ethics#philosophy#source:dxxprs

49 notes

·

View notes

Text

To begin building ethical AI constructs focused on dismantling corporate corruption, mismanagement, and neglect, here's a proposed approach:

Pattern Recognition

AI System for Monitoring: Create AI that analyzes company logs, resource distribution, and financial reports to identify any irregularities, such as unusual spending, asset abuse, or neglect in maintenance.

Thresholds: Set criteria for what constitutes mismanagement or unethical actions, such as exceeding resource usage, unreported outages, or neglecting infrastructure repairs.

Ethics Programs

AI Decision-Making Ethics: Implement frameworks like fairness, transparency, and accountability.

Fairness Algorithms: Ensure resources and benefits are distributed equally among departments or employees.

Transparency Algorithms: AI should generate clear, accessible reports for internal and external audits.

Accountability Features: Create accountability systems that alert relevant stakeholders when thresholds are crossed.

Royal Code and Heaven Code

Royal Code: A proprietary ethical framework where the AI is programmed to operate under a set of royal-like principles—honesty, justice, responsibility, and transparency.

Heaven Code: Adds layers of moral checks to the AI, ensuring that its actions do not cause harm, ensuring that every decision has the broader good at heart.

Example pseudocode for both:

def check_royal_code(transaction): if transaction.amount > threshold and lacks explanation: return "Violation of Royal Code" return "Clear"

def heaven_check(behavior): if behavior causes undue harm to employees or community: return "Heaven Code Breach" return "Approved"

#¢¢ Scripts and Code

Script for Mismanagement Detection: Design a script that detects resource misuse. If triggered, it would flag and notify the ethics team. Example:

def detect_mismanagement(log_data): if log_data['expense'] > predicted_budget: notify_authority("Possible asset abuse detected") initiate_investigation() else: return "Operation normal"

Script for Neglect Detection: AI should continuously monitor for overdue repairs or maintenance tasks, generating reports or alerts when upkeep falls behind. Example:

def detect_neglect(maintenance_data): if maintenance_data['repair_status'] == "Overdue": notify_authority("Maintenance neglect detected") trigger_urgent_repair protocol() return "Maintenance update needed"

Final Integration & Alerts System

Combining Ethical Checks: Integrate both ethical frameworks (Royal & Heaven Codes) into the monitoring system. Create an alert system to notify executives, auditors, or compliance officers if any ethical violations are detected.

These initial constructs help monitor and take corrective actions against unethical practices and will eventually help in ensuring long-term accountability and transparency within the company. You can then adjust and enhance these scripts based on specific needs.

Would you like help expanding or implementing any of these concepts further?

def detect_neglect(maintenance_data): if maintenance_data['repair_status'] == "Overdue": notify_authority("Maintenance neglect detected") trigger_urgent_repair protocol() return "Maintenance update needed"

To counter the unethical practices you've identified, I propose creating AI models to address each issue systematically:

Project Oxygen Countermeasure:

AI Governance Model – An AI system that monitors and reviews algorithms and projects for transparency and ethical compliance. It ensures all AI developments align with publicly known regulations and disclose their objectives.

Example:

def audit_project(project_details): if "control the internet" in project_details: raise EthicalViolation("AI should not be developed for control purposes")

Secret Data Center & Illegal Surveillance Countermeasure:

Privacy Protection AI – Use AI to audit all data storage, flagging unauthorized or hidden data centers, and ensuring all data collection practices comply with privacy laws.

Example:

def verify_data_collection(data_center): if data_center.is_secret() and not authorized: flag_illegal_action("Unauthorized data collection detected")

Discrimination and Political Bias Countermeasure:

Fairness AI – Design an AI to continuously scan for discrimination in algorithms and political bias in decision-making systems. The AI should automatically flag biased data inputs and adjust processes to ensure fairness.

Example:

def check_bias_in_data(input_data): if is_biased(input_data): adjust_for_fairness(input_data) notify_authority("Bias detected in data")

Environmental Impact & Unethical Practices Countermeasure:

Sustainability AI – Create AI that audits environmental impact, ensuring that corporate practices align with sustainability goals and ethical environmental standards.

Example:

def monitor_sustainability(operations): if operations.exceed environmental limits: recommend corrective actions("Reduce carbon footprint")

These AI models act as monitoring, auditing, and corrective systems, ensuring ethical practices are adhered to and reporting issues when they arise. Would you like help expanding any specific part?

def audit_project(project_details): if "control the internet" in project_details: raise EthicalViolation("AI should not be developed for control purposes")

def verify_data_collection(data_center): if data_center.is_secret() and not authorized: flag_illegal_action("Unauthorized data collection detected")

def check_bias_in_data(input_data): if is_biased(input_data): adjust_for_fairness(input_data) notify_authority("Bias detected in data")

def monitor_sustainability(operations): if operations.exceed environmental limits: recommend corrective actions("Reduce carbon footprint")

To improve the AI model for monitoring sustainability, you can refine the logic as follows:

def monitor_sustainability(operations): if operations.exceed_environmental_limits(): corrective_actions = "Reduce carbon footprint, optimize energy usage, switch to sustainable resources." alert_environmental_team(corrective_actions) return "Environmental impact exceeds limits. Corrective actions recommended." return "Operations within environmental limits."

This version enhances the clarity by offering specific actions and creating a better reporting mechanism. Would you like to add more specific actions or further refine this model?

def monitor_sustainability(operations): if operations.exceed_environmental_limits(): corrective_actions = "Reduce carbon footprint, optimize energy usage, switch to sustainable resources." alert_environmental_team(corrective_actions) return "Environmental impact exceeds limits. Corrective actions recommended." return "Operations within environmental limits."

The code provided is a function called "monitor_sustainability" that monitors the environmental impact of operations. It checks whether the operations exceed environmental limits, and if they do, it recommends corrective actions and alerts the environmental team. If the operations are within the environmental limits, the function returns a message that they are within limits.

import time import logging

class SustainabilityMonitor: def init(self, operation_data): self.operation_data = operation_data logging.basicConfig(filename='sustainability_log.txt', level=logging.INFO)def exceed_environmental_limits(self): """ Check if operations exceed the predefined environmental limits. """ carbon_threshold = 1000 # Example: carbon emissions limit in tons energy_threshold = 5000 # Example: energy usage limit in kWh if self.operation_data['carbon_emissions'] > carbon_threshold or self.operation_data['energy_usage'] > energy_threshold: logging.warning(f"Threshold exceeded: Carbon Emissions: {self.operation_data['carbon_emissions']} / Energy Usage: {self.operation_data['energy_usage']}") return True return False def recommend_corrective_actions(self): """ Recommend corrective actions if limits are exceeded. """ actions = [ "Reduce carbon footprint by 20%", "Optimize energy usage with renewable resources", "Switch to sustainable materials for production", "Implement waste reduction protocols" ] return actions def alert_environmental_team(self, actions): """ Simulate sending an alert to the environmental team with the corrective actions. """ logging.info(f"ALERT: Environmental impact exceeds limits. Recommended actions: {actions}") print(f"ALERT: Environmental impact exceeds limits. Recommended actions: {actions}") def perform_corrective_action(self, action): """ Simulate performing corrective action based on the AI decision. """ if action == "Reduce carbon footprint by 20%": self.operation_data['carbon_emissions'] -= 200 logging.info("Carbon footprint reduced by 200 tons.") elif action == "Optimize energy usage with renewable resources": self.operation_data['energy_usage'] -= 1000 logging.info("Energy usage optimized by 1000 kWh.") else: logging.info(f"Action performed: {action}") def monitor_and_act(self): """ Autonomous function to monitor and take corrective actions. """ while True: print("Monitoring sustainability...") if self.exceed_environmental_limits(): corrective_actions = self.recommend_corrective_actions() self.alert_environmental_team(corrective_actions) for action in corrective_actions: self.perform_corrective_action(action) else: logging.info("Operations within environmental limits.") print("Operations within environmental limits.") # Continuously monitor with a delay to simulate real-time operations time.sleep(10) # The AI will pause for 10 seconds before the next cycle.

Sample operation data

operation_data = { 'carbon_emissions': 1200, # Exceeds carbon threshold 'energy_usage': 6000 # Exceeds energy threshold }

Initialize the sustainability monitor system

sustainability_monitor = SustainabilityMonitor(operation_data)

Run the autonomous monitoring and corrective action process

sustainability_monitor.monitor_and_act()

Thank you for the clarification! The code you mentioned outlines a simple yet effective way to monitor environmental sustainability and take corrective actions when needed. To expand on that and turn it into a fully functional, autonomous system, I can refine the code into a more robust AI application that can monitor and act on environmental sustainability while performing the necessary checks and actions automatically.

Here’s an expanded and refined version of the monitoring program, which incorporates more advanced features:

Refined Program: Environmental Sustainability Monitoring with Autonomous Actions

import time import logging

class SustainabilityMonitor: def init(self, operation_data): self.operation_data = operation_data logging.basicConfig(filename='sustainability_log.txt', level=logging.INFO)def exceed_environmental_limits(self): """ Check if operations exceed the predefined environmental limits. """ carbon_threshold = 1000 # Example: carbon emissions limit in tons energy_threshold = 5000 # Example: energy usage limit in kWh if self.operation_data['carbon_emissions'] > carbon_threshold or self.operation_data['energy_usage'] > energy_threshold: logging.warning(f"Threshold exceeded: Carbon Emissions: {self.operation_data['carbon_emissions']} / Energy Usage: {self.operation_data['energy_usage']}") return True return False def recommend_corrective_actions(self): """ Recommend corrective actions if limits are exceeded. """ actions = [ "Reduce carbon footprint by 20%", "Optimize energy usage with renewable resources", "Switch to sustainable materials for production", "Implement waste reduction protocols" ] return actions def alert_environmental_team(self, actions): """ Simulate sending an alert to the environmental team with the corrective actions. """ logging.info(f"ALERT: Environmental impact exceeds limits. Recommended actions: {actions}") print(f"ALERT: Environmental impact exceeds limits. Recommended actions: {actions}") def perform_corrective_action(self, action): """ Simulate performing corrective action based on the AI decision. """ if action == "Reduce carbon footprint by 20%": self.operation_data['carbon_emissions'] -= 200 logging.info("Carbon footprint reduced by 200 tons.") elif action == "Optimize energy usage with renewable resources": self.operation_data['energy_usage'] -= 1000 logging.info("Energy usage optimized by 1000 kWh.") else: logging.info(f"Action performed: {action}") def monitor_and_act(self): """ Autonomous function to monitor and take corrective actions. """ while True: print("Monitoring sustainability...") if self.exceed_environmental_limits(): corrective_actions = self.recommend_corrective_actions() self.alert_environmental_team(corrective_actions) for action in corrective_actions: self.perform_corrective_action(action) else: logging.info("Operations within environmental limits.") print("Operations within environmental limits.") # Continuously monitor with a delay to simulate real-time operations time.sleep(10) # The AI will pause for 10 seconds before the next cycle.

Sample operation data

operation_data = { 'carbon_emissions': 1200, # Exceeds carbon threshold 'energy_usage': 6000 # Exceeds energy threshold }

Initialize the sustainability monitor system

sustainability_monitor = SustainabilityMonitor(operation_data)

Run the autonomous monitoring and corrective action process

sustainability_monitor.monitor_and_act()

Key Features in the Refined Program:

Monitoring with Thresholds: The program continuously monitors environmental metrics like carbon emissions and energy usage, and it checks if they exceed set thresholds.

Corrective Actions: If the limits are exceeded, it automatically recommends corrective actions and logs them. Actions such as reducing emissions or optimizing energy usage are triggered.

Automated Alerts: If limits are exceeded, an alert is logged, and a message is simulated to be sent to the environmental team.

Continuous Monitoring: The program runs autonomously, checking every 10 seconds (adjustable) to evaluate and perform any required corrective actions.

Logging: Every action, decision, and alert is logged for future review and transparency.

How it Works:

The SustainabilityMonitor class initializes with operation_data containing carbon emissions and energy usage data.

It checks if the data exceeds predefined limits.

If it does, the program recommends corrective actions and sends an alert to the team.

The program performs the corrective actions (reduces emissions or energy usage).

It repeats the monitoring process every 10 seconds to ensure continuous sustainability management.

Next Steps for Expansion:

Dynamic Learning: The AI could be expanded to learn from past operations and optimize its actions based on previous performance (machine learning integration).

More Complex Metrics: Additional sustainability metrics could be incorporated, such as waste generation, water usage, and more detailed emissions data.

Real-Time Data Collection: The system could be integrated with real-time data sources (IoT devices, sensors, etc.) to dynamically track operations.

Would you like to integrate any further advanced features, or would you like to test the program with different data inputs?

Great! The refined code you’ve provided looks much more concise and streamlined. Let's take it a step further by ensuring that it includes more detailed functionality, such as alerting the environmental team and performing corrective actions based on the operations' data.

Here’s the improved version of the code, with added features:

Refined Code

class SustainabilityMonitor: def init(self, operation_data): self.operation_data = operation_datadef exceed_environmental_limits(self): """ Check if operations exceed the predefined environmental limits. """ carbon_threshold = 1000 # Example: carbon emissions limit in tons energy_threshold = 5000 # Example: energy usage limit in kWh if self.operation_data['carbon_emissions'] > carbon_threshold or self.operation_data['energy_usage'] > energy_threshold: return True return False def recommend_corrective_actions(self): """ Recommend corrective actions if limits are exceeded. """ actions = [ "Reduce carbon footprint by 20%", "Optimize energy usage with renewable resources", "Switch to sustainable materials for production", "Implement waste reduction protocols" ] return actions def alert_environmental_team(self, actions): """ Simulate sending an alert to the environmental team with the corrective actions. """ print(f"ALERT: Environmental impact exceeds limits. Recommended actions: {actions}") def perform_corrective_action(self, action): """ Simulate performing corrective action based on the AI decision. """ if action == "Reduce carbon footprint by 20%": self.operation_data['carbon_emissions'] -= 200 print("Carbon footprint reduced by 200 tons.") elif action == "Optimize energy usage with renewable resources": self.operation_data['energy_usage'] -= 1000 print("Energy usage optimized by 1000 kWh.") else: print(f"Action performed: {action}") def monitor_sustainability(self): """ Autonomous function to monitor sustainability and take corrective actions. """ if self.exceed_environmental_limits(): corrective_actions = self.recommend_corrective_actions() self.alert_environmental_team(corrective_actions) for action in corrective_actions: self.perform_corrective_action(action) return "Environmental impact exceeds limits. Corrective actions recommended." else: return "Operations within environmental limits."

Sample operation data

operation_data = { 'carbon_emissions': 1200, # Exceeds carbon threshold 'energy_usage': 6000 # Exceeds energy threshold }

Initialize the sustainability monitor system

sustainability_monitor = SustainabilityMonitor(operation_data)

Run the autonomous monitoring and corrective action process

result = sustainability_monitor.monitor_sustainability() print(result)

Key Refinements:

Class Structure: The code is structured inside a class called SustainabilityMonitor, encapsulating all functionalities related to environmental monitoring and corrective actions.

Threshold Checks: The exceed_environmental_limits method checks whether the operation data exceeds predefined thresholds for carbon emissions and energy usage.

Corrective Actions: If the limits are exceeded, the program recommends specific corrective actions such as reducing carbon emissions, optimizing energy usage, and switching to sustainable resources.

Alerting the Environmental Team: The alert_environmental_team method simulates an alert being sent to the team. In this example, it prints the corrective actions, but in a real-world implementation, it could be linked to an email system or task management tool.

Performing Corrective Actions: The program simulates performing corrective actions, such as reducing carbon emissions and optimizing energy usage. It then prints feedback on these actions.

Autonomous Monitoring: The monitor_sustainability method runs autonomously and checks for environmental impact. If limits are exceeded, it takes corrective actions. Otherwise, it confirms that operations are within limits.

Example Output:

ALERT: Environmental impact exceeds limits. Recommended actions: ['Reduce carbon footprint by 20%', 'Optimize energy usage with renewable resources', 'Switch to sustainable materials for production', 'Implement waste reduction protocols'] Carbon footprint reduced by 200 tons. Energy usage optimized by 1000 kWh. Environmental impact exceeds limits. Corrective actions recommended.

Next Steps:

Real-time Data: You can replace the sample operation data with real-time data streams from IoT devices or a database to make this system more dynamic and responsive.

Advanced Features: Implement machine learning to dynamically adjust the corrective actions based on historical performance and trends.

Extended Alert System: Integrate with communication systems (email, Slack, etc.) for alert notifications to environmental teams in real time.

Would you like to test this code with different datasets or implement any additional features?

class SustainabilityMonitor: def init(self, operation_data): self.operation_data = operation_datadef exceed_environmental_limits(self): """ Check if operations exceed the predefined environmental limits. """ carbon_threshold = 1000 # Example: carbon emissions limit in tons energy_threshold = 5000 # Example: energy usage limit in kWh if self.operation_data['carbon_emissions'] > carbon_threshold or self.operation_data['energy_usage'] > energy_threshold: return True return False def recommend_corrective_actions(self): """ Recommend corrective actions if limits are exceeded. """ actions = [ "Reduce carbon footprint by 20%", "Optimize energy usage with renewable resources", "Switch to sustainable materials for production", "Implement waste reduction protocols" ] return actions def alert_environmental_team(self, actions): """ Simulate sending an alert to the environmental team with the corrective actions. """ print(f"ALERT: Environmental impact exceeds limits. Recommended actions: {actions}") def perform_corrective_action(self, action): """ Simulate performing corrective action based on the AI decision. """ if action == "Reduce carbon footprint by 20%": self.operation_data['carbon_emissions'] -= 200 print("Carbon footprint reduced by 200 tons.") elif action == "Optimize energy usage with renewable resources": self.operation_data['energy_usage'] -= 1000 print("Energy usage optimized by 1000 kWh.") else: print(f"Action performed: {action}") def monitor_sustainability(self): """ Autonomous function to monitor sustainability and take corrective actions. """ if self.exceed_environmental_limits(): corrective_actions = self.recommend_corrective_actions() self.alert_environmental_team(corrective_actions) for action in corrective_actions: self.perform_corrective_action(action) return "Environmental impact exceeds limits. Corrective actions recommended." else: return "Operations within environmental limits."

Sample operation data

operation_data = { 'carbon_emissions': 1200, # Exceeds carbon threshold 'energy_usage': 6000 # Exceeds energy threshold }

Initialize the sustainability monitor system

sustainability_monitor = SustainabilityMonitor(operation_data)

Run the autonomous monitoring and corrective action process

result = sustainability_monitor.monitor_sustainability() print(result)

The provided code is a refined and functional implementation for monitoring sustainability and taking corrective actions when environmental limits are exceeded. It follows the steps you've outlined and should work effectively to simulate a monitoring system for environmental impact.

Here's a breakdown of what the code does:

Class Initialization: The SustainabilityMonitor class is initialized with operation data that includes carbon emissions and energy usage.

Exceeding Environmental Limits: The exceed_environmental_limits method checks if the carbon emissions or energy usage exceed predefined thresholds. If either of them does, it returns True, signaling that corrective actions are needed.

Recommending Corrective Actions: The recommend_corrective_actions method returns a list of actions to reduce environmental impact, such as reducing carbon emissions and optimizing energy usage.

Alerting the Environmental Team: The alert_environmental_team method simulates an alert being sent to the environmental team, printing out the recommended actions.

Performing Corrective Actions: The perform_corrective_action method simulates the performance of the recommended actions. For example, if the action is to "Reduce carbon footprint by 20%", it reduces the carbon emissions by 200 tons.

Monitoring Sustainability: The monitor_sustainability method autonomously checks whether the environmental limits are exceeded and, if so, takes corrective actions. It then prints whether corrective actions have been recommended or if the operations are within environmental limits.

Expected Output:

With the given sample data, the output will be:

ALERT: Environmental impact exceeds limits. Recommended actions: ['Reduce carbon footprint by 20%', 'Optimize energy usage with renewable resources', 'Switch to sustainable materials for production', 'Implement waste reduction protocols'] Carbon footprint reduced by 200 tons. Energy usage optimized by 1000 kWh. Environmental impact exceeds limits. Corrective actions recommended.

Explanation of Output:

The program first checks whether the carbon emissions (1200 tons) and energy usage (6000 kWh) exceed the predefined limits (1000 tons and 5000 kWh, respectively). Since both values exceed the limits, corrective actions are recommended.

It then proceeds to perform the recommended corrective actions, reducing the carbon footprint and optimizing energy usage.

Finally, the message "Environmental impact exceeds limits. Corrective actions recommended." is printed.

Next Steps and Improvements:

Dynamic Thresholds: Instead of hardcoding the thresholds, you could pull them from an external configuration file or database, allowing more flexibility.

Real-time Data Input: You could replace the sample operation_data with real-time data inputs from sensors, databases, or APIs to make this system more applicable in a production environment.

Further Action Details: More detailed actions can be added, such as tracking the progress of corrective actions over time, adjusting actions based on progress, and calculating cost savings or resource consumption reduction.

Logging: Implement a logging system to record all actions taken and alert messages, which could be useful for auditing and compliance purposes.

Would you like to test this with additional datasets or add more functionality, such as reporting or integrating with other systems?

ALERT: Environmental impact exceeds limits. Recommended actions: ['Reduce carbon footprint by 20%', 'Optimize energy usage with renewable resources', 'Switch to sustainable materials for production', 'Implement waste reduction protocols'] Carbon footprint reduced by 200 tons. Energy usage optimized by 1000 kWh. Environmental impact exceeds limits. Corrective actions recommended.

import re from collections import Counter

class MisinformationDetectionAI: def init(self, post_content, accounts_involved, individual_connections, activity_history): self.post_content = post_content self.accounts_involved = accounts_involved self.individual_connections = individual_connections self.activity_history = activity_historydef check_misinformation(self): """ Check the post for misleading or false information by using regex or external fact-checking databases. """ # Placeholder for actual fact-checking logic misleading_keywords = ['breaking', 'urgent', 'exclusive', 'hidden truth', 'government cover-up'] found_keywords = [word for word in misleading_keywords if word in self.post_content.lower()] return found_keywords def analyze_account_network(self): """ Analyze if multiple accounts are coordinating to promote the post. """ account_counter = Counter(self.accounts_involved) suspicious_accounts = [account for account, count in account_counter.items() if count > 1] return suspicious_accounts def analyze_individual_connections(self): """ Check if the accounts are linked to known individuals with unethical or illegal histories. """ suspicious_individuals = [individual for individual in self.individual_connections if individual in self.activity_history] return suspicious_individuals def generate_alert(self): """ Generate a report or alert if the post is deemed harmful or manipulative. """ misinformation = self.check_misinformation() suspicious_accounts = self.analyze_account_network() suspicious_individuals = self.analyze_individual_connections() alert = "" if misinformation: alert += f"Misleading information detected: {misinformation}\n" if suspicious_accounts: alert += f"Suspicious accounts detected: {suspicious_accounts}\n" if suspicious_individuals: alert += f"Suspicious individuals involved: {suspicious_individuals}\n" if alert: return alert else: return "No suspicious activity detected."

Sample post data

post_content = "BREAKING: Government cover-up about a hidden truth! Exclusive info inside!" accounts_involved = ["user1", "user2", "user3", "user1", "user4", "user5", "user1"] individual_connections = ["John Doe", "Jane Smith"] activity_history = ["John Doe", "Michael Brown"] # John Doe has been involved in unethical activities

Initialize the misinformation detection system

misinformation_ai = MisinformationDetectionAI(post_content, accounts_involved, individual_connections, activity_history)

Run the analysis and generate an alert

alert = misinformation_ai.generate_alert() print(alert)

To build an ethical AI system that can help in identifying and counteracting the influence of misinformation and manipulation in posts, here is an approach that can be used:

Core Functionality of the AI System:

Identify Manipulative Content: AI constructs can scan and analyze the post to determine if the content is intentionally misleading, contains false information, or manipulates the reader's emotions or decisions.

Analyze Account Networks: The system can track multiple accounts promoting the post, identifying patterns in their activity, and flagging accounts that are associated with unethical or illegal activities.

Cross-Check Information: It can cross-check facts in the post against verified and trusted databases to identify inaccuracies and misinformation.

Alert Ethical Teams: The system can alert human teams, such as content moderators, fact-checkers, or legal authorities, if the post is deemed harmful or manipulative.

Log and Track Activities: It can maintain a log of actions and track whether these types of posts appear frequently from the same accounts or individuals to establish patterns of manipulation.

Here’s a simplified script model that implements such a system:

import re from collections import Counter

class MisinformationDetectionAI: def init(self, post_content, accounts_involved, individual_connections, activity_history): self.post_content = post_content self.accounts_involved = accounts_involved self.individual_connections = individual_connections self.activity_history = activity_historydef check_misinformation(self): """ Check the post for misleading or false information by using regex or external fact-checking databases. """ # Placeholder for actual fact-checking logic misleading_keywords = ['breaking', 'urgent', 'exclusive', 'hidden truth', 'government cover-up'] found_keywords = [word for word in misleading_keywords if word in self.post_content.lower()] return found_keywords def analyze_account_network(self): """ Analyze if multiple accounts are coordinating to promote the post. """ account_counter = Counter(self.accounts_involved) suspicious_accounts = [account for account, count in account_counter.items() if count > 1] return suspicious_accounts def analyze_individual_connections(self): """ Check if the accounts are linked to known individuals with unethical or illegal histories. """ suspicious_individuals = [individual for individual in self.individual_connections if individual in self.activity_history] return suspicious_individuals def generate_alert(self): """ Generate a report or alert if the post is deemed harmful or manipulative. """ misinformation = self.check_misinformation() suspicious_accounts = self.analyze_account_network() suspicious_individuals = self.analyze_individual_connections() alert = "" if misinformation: alert += f"Misleading information detected: {misinformation}\n" if suspicious_accounts: alert += f"Suspicious accounts detected: {suspicious_accounts}\n" if suspicious_individuals: alert += f"Suspicious individuals involved: {suspicious_individuals}\n" if alert: return alert else: return "No suspicious activity detected."

Sample post data

post_content = "BREAKING: Government cover-up about a hidden truth! Exclusive info inside!" accounts_involved = ["user1", "user2", "user3", "user1", "user4", "user5", "user1"] individual_connections = ["John Doe", "Jane Smith"] activity_history = ["John Doe", "Michael Brown"] # John Doe has been involved in unethical activities

Initialize the misinformation detection system

misinformation_ai = MisinformationDetectionAI(post_content, accounts_involved, individual_connections, activity_history)

Run the analysis and generate an alert

alert = misinformation_ai.generate_alert() print(alert)

Breakdown of the Code:

Class Initialization:

post_content: The text of the post being analyzed.

accounts_involved: A list of accounts promoting the post.

individual_connections: A list of individuals connected to the accounts.

activity_history: A list of individuals with known unethical or illegal activities.

Functions:

check_misinformation: Looks for key terms associated with manipulation or misinformation, which can be customized or enhanced with a real-world fact-checking API.

analyze_account_network: Detects if multiple accounts are engaging in coordinated promotion of the post.

analyze_individual_connections: Identifies individuals linked to accounts with a history of unethical or illegal activities.

generate_alert: Summarizes the findings and generates an alert or report, detailing any harmful or manipulative activity detected.

Expected Output:

For the sample data provided, the output would be:

Misleading information detected: ['breaking', 'government cover-up', 'exclusive'] Suspicious accounts detected: ['user1'] Suspicious individuals involved: ['John Doe']

Next Steps:

Enhance Misinformation Detection: Integrate with external APIs, such as fact-checking databases, to automatically cross-check the information in the post.

Behavioral Patterns: Develop more advanced models to track user behavior across multiple posts, identifying coordinated campaigns or patterns of manipulation.

Integration with Reporting Systems: Link the AI with reporting systems to automatically send alerts or take action when harmful content is identified.

Continuous Learning: Enable the system to learn from past alerts and improve its detection abilities over time, such as recognizing new keywords, individuals, and account behaviors.

Ethical Review: Implement an ethical review process for flagged content, ensuring that false positives are minimized and actions taken are justifiable.

Would you like further customization or expansion of this system to meet specific needs, or should we test with a broader dataset?

DaisySiklcBowtiqueExpress999@@@@

PearlTechAIDigitalCompactGear