#education and artificial intelligence

Text

AI compliance certification

Explore our AI compliance certification solutions to guarantee your AI technologies adhere to legal and ethical standards. Get certified and stay ahead in the industry.

#AI compliance certification#artificial intelligence training#artificial intelligence platform#education and artificial intelligence

1 note

Text

Just ask AI 🤔

#pay attention#educate yourselves#educate yourself#knowledge is power#reeducate yourselves#reeducate yourself#think about it#think for yourselves#think for yourself#do your homework#do your research#do some research#do your own research#ask yourself questions#question everything#ai#artificial intelligence#escape the matrix#truth be told#news#you decide

795 notes

Text

Warm Take: The proliferation of AI-based plagiarism in higher education is based partly in the years-in-the-making general social/economic narrative that a college degree is an essential line on your résumé, required for getting any halfway decent job. The more a degree became a universally requisite prize, the more it became just a prize—and not even as impressive a prize, because everyone has one, don’t they? So it’s little more than a participation ribbon, received by going through the motions. That school, any level of school, is meant to be for the purpose of learning; that more advanced schooling is meant to result in, & should be taken by people who want, advanced learning, of new facts and moreover of how to think about new facts—this is being culturally forgotten. All that matters is the end-goal of the line on the résumé—so why not just let the computer generate something?

329 notes

Text

77 notes

Text

Donald Trump’s Secretary of Education Linda McMahon kept referring to Artificial Intelligence ‘AI’ as A1. Yes, the letter A and the number 1, like the steak sauce.

America’s finest representing our government.

#linda mcmahon#department of education#education department#education dept#donald trump#breaking news#us politics#politics#potus#president trump#news#president of the united states#tumblr#united states politics#usa#usa news#united states politics and government#united states news#education#artificial intelligence#ai#usa politics#us news#united states#current events

47 notes

Text

They made us register for online classes about Gen AI today. One of the categories to fill in was "Prompting Experience". I feel gross.🤢🤮

At least it's just my work email... gonna deactivate that account as soon as the school year is over.

#anti ai#mispearl ramblings#first video literally opens with a guy saying “Artificial intelligence in education is no longer optional”#YEAH#CAUSE YOU'RE FORCING US TO USE IT#as if the ipads haven't rotted the kids' brains already??😫😫😫#teacher life

19 notes

Note

Senku, I want to ask you a question on the matter of using AI. I want to know your thoughts about it!

A while back, I attended a conference with a bunch of esteemed people, including a few diplomats from all over the world. Someone had asked a doctor and a professor in the top university of my country, "How do you feel about the growing presence of artificial intelligence in almost every aspect of work and life? Does it threaten you?" (Not his exact words, but that's the gist!)

At first, he laughed, and then he simply said: "Do you think mathematicians got mad when the calculator got invented?"

I have my own stance about it, and I think a tool whose primary function is to make complex computations much easier to do is VERY different from the level of AI currently existing.

As someone who's proficient in math, science, technology, and many other things, how do you feel about the professor's statement? 🤔

— 🐰

Okay. I have lots of thoughts about this

So we all know that AI is on the rise. This growth is thanks to the introduction of a type of AI called generative AI. This is the AI that makes all those generated images you hear about, it runs chat gpt, it's what they used for the infamous cola commercial.

Now there's another type of AI that we've been using for a much longer time called analytical AI. This is what your favorite web browser uses to sort your search results according to the query. This has like nothing to do with character ai or whatever, all that stuff is generative AI.

The professor compared AI to a calculator, but in my mind, calculators are much more like analytical AI, not the generative AI that's gotten so popular which the question was CLEARLY referencing. This is because analytical AI uses a structured algorithm, which is usually like a system of given numbers or codes that gives an exact result. There are some calculators that can actually be considered analytical AI. Point is, you're right, this is completely different from generative AI that uses an unstructured algorithm to make something "unique" (in quotes because it's one of a kind, but drawn from a combination of existing texts and images). The professor did NOT get the question I fear.

This bothers me because analytical AI can be incredibly useful, but generative AI really just takes away from us. Art, writing and design are for humans, not for robots -- science should foster creativity, not make it dull. It's important to know the difference between them so we know what to support and what to reject.

#also professors should be EDUCATED#should they not???#bro literally failed at his one job#people shouldnt be making comments on things they dont have a damn clue about#artificial intelligence#ai#dr stone rp#senku ishigami#ishigami senku

22 notes

Text

By: Clay Shirky

Published: Apr 29, 2025

Since ChatGPT launched in late 2022, students have been among its most avid adopters. When the rapid growth in users stalled in the late spring of ’23, it briefly looked like the AI bubble might be popping, but growth resumed that September; the cause of the decline was simply summer break. Even as other kinds of organizations struggle to use a tool that can be strikingly powerful and surprisingly inept in turn, AI’s utility to students asked to produce 1,500 words on Hamlet or the Great Leap Forward was immediately obvious, and is the source of the current campaigns by OpenAI and others to offer student discounts, as a form of customer acquisition.

Every year, 15 million or so undergraduates in the United States produce papers and exams running to billions of words. While the output of any given course is student assignments — papers, exams, research projects, and so on — the product of that course is student experience. “Learning results from what the student does and thinks,” as the great educational theorist Herbert Simon once noted, “and only as a result of what the student does and thinks.” The assignment itself is a MacGuffin, with the shelf life of sour cream and an economic value that rounds to zero dollars. It is valuable only as a way to compel student effort and thought.

The utility of written assignments relies on two assumptions: The first is that to write about something, the student has to understand the subject and organize their thoughts. The second is that grading student writing amounts to assessing the effort and thought that went into it. At the end of 2022, the logic of this proposition — never ironclad — began to fall apart completely. The writing a student produces and the experience they have can now be decoupled as easily as typing a prompt, which means that grading student writing might now be unrelated to assessing what the student has learned to comprehend or express.

Generative AI can be useful for learning. These tools are good at creating explanations for difficult concepts, practice quizzes, study guides, and so on. Students can write a paper and ask for feedback on diction, or see what a rewrite at various reading levels looks like, or request a summary to check if their meaning is clear. Engaged uses have been visible since ChatGPT launched, side by side with the lazy ones. But the fact that AI might help students learn is no guarantee it will help them learn.

After observing that student action and thought is the only possible source of learning, Simon concluded, “The teacher can advance learning only by influencing the student to learn.” Faced with generative AI in our classrooms, the obvious response for us is to influence students to adopt the helpful uses of AI while persuading them to avoid the harmful ones. Our problem is that we don’t know how to do that.

I am an administrator at New York University, responsible for helping faculty adapt to digital tools. Since the arrival of generative AI, I have spent much of the last two years talking with professors and students to try to understand what is going on in their classrooms. In those conversations, faculty have been variously vexed, curious, angry, or excited about AI, but as last year was winding down, for the first time one of the frequently expressed emotions was sadness. This came from faculty who were, by their account, adopting the strategies my colleagues and I have recommended: emphasizing the connection between effort and learning, responding to AI-generated work by offering a second chance rather than simply grading down, and so on. Those faculty were telling us our recommended strategies were not working as well as we’d hoped, and they were saying it with real distress.

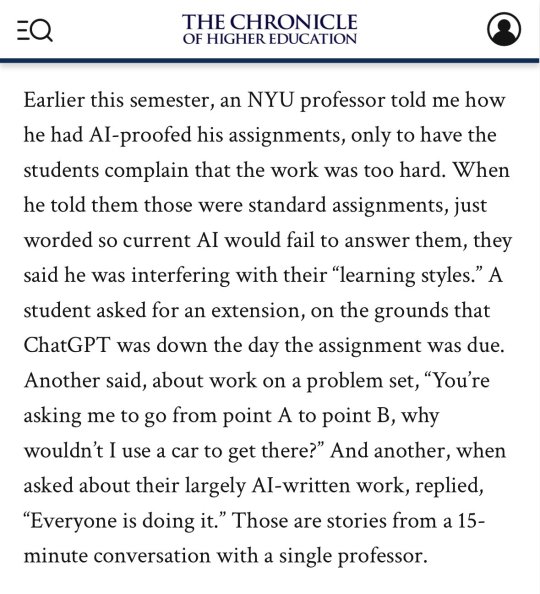

Earlier this semester, an NYU professor told me how he had AI-proofed his assignments, only to have the students complain that the work was too hard. When he told them those were standard assignments, just worded so current AI would fail to answer them, they said he was interfering with their “learning styles.” A student asked for an extension, on the grounds that ChatGPT was down the day the assignment was due. Another said, about work on a problem set, “You’re asking me to go from point A to point B, why wouldn’t I use a car to get there?” And another, when asked about their largely AI-written work, replied, “Everyone is doing it.” Those are stories from a 15-minute conversation with a single professor.

We are also hearing a growing sense of sadness from our students about AI use. One of my colleagues reports students being “deeply conflicted” about AI use, originally adopting it as an aid to studying but persisting with a mix of justification and unease. Some observations she’s collected:

“I’ve become lazier. AI makes reading easier, but it slowly causes my brain to lose the ability to think critically or understand every word.”

“I feel like I rely too much on AI, and it has taken creativity away from me.”

On using AI summaries: “Sometimes I don’t even understand what the text is trying to tell me. Sometimes it’s too much text in a short period of time, and sometimes I’m just not interested in the text.”

“Yeah, it’s helpful, but I’m scared that someday we’ll prefer to read only AI summaries rather than our own, and we’ll become very dependent on AI.”

Much of what’s driving student adoption is anxiety. In addition to the ordinary worries about academic performance, students feel time pressure from jobs, internships, or extracurriculars, and anxiety about GPA and transcripts for employers. It is difficult to say, “Here is a tool that can basically complete assignments for you, thus reducing anxiety and saving you 10 hours of work without eviscerating your GPA. By the way, don’t use it that way.” But for assignments to be meaningful, that sort of student self-restraint is critical.

Self-restraint is also, on present evidence, not universally distributed. Last November, a Reddit post appeared in r/nyu, under the heading “Can’t stop using Chat GPT on HW.” (The poster’s history is consistent with their being an NYU undergraduate as claimed.) The post read:

I literally can’t even go 10 seconds without using Chat when I am doing my assignments. I hate what I have become because I know I am learning NOTHING, but I am too far behind now to get by without using it. I need help, my motivation is gone. I am a senior and I am going to graduate with no retained knowledge from my major.

Given these and many similar observations in the last several months, I’ve realized many of us working on AI in the classroom have made a collective mistake, believing that lazy and engaged uses lie on a spectrum, and that moving our students toward engaged uses would also move them away from the lazy ones.

Faculty and students have been telling me that this is not true, or at least not true enough. Instead of a spectrum, uses of AI are independent options. A student can take an engaged approach to one assignment, a lazy approach on another, and a mix of engaged and lazy on a third. Good uses of AI do not automatically dissuade students from also adopting bad ones; an instructor can introduce AI for essay feedback or test prep without that stopping their student from also using it to write most of their assignments.

Our problem is that we have two problems. One is figuring out how to encourage our students to adopt creative and helpful uses of AI. The other is figuring out how to discourage them from adopting lazy and harmful uses. Those are both important, but the second one is harder.

It is easy to explain to students that offloading an assignment to ChatGPT creates no more benefit for their intellect than moving a barbell with a forklift does for their strength. We have been alert to this issue since late 2022, and students have consistently reported understanding that some uses of AI are harmful. Yet forgoing easy shortcuts has proven to be as difficult as following a workout routine, and for the same reason: The human mind is incredibly adept at rationalizing pleasurable but unhelpful behavior.

Using these tools can certainly make it feel like you are learning. In her explanatory video “AI Can Do Your Homework. Now What?” the documentarian Joss Fong describes it this way:

Education researchers have this term “desirable difficulties,” which describes this kind of effortful participation that really works but also kind of hurts. And the risk with AI is that we might not preserve that effort, especially because we already tend to misinterpret a little bit of struggling as a signal that we’re not learning.

This preference for the feeling of fluency over desirable difficulties was identified long before generative AI. It’s why students regularly report they learn more from well-delivered lectures than from active learning, even though we know from many studies that the opposite is true. One recent paper was evocatively titled “Measuring Active Learning Versus the Feeling of Learning.” Another concludes that instructor fluency increases perceptions of learning without increasing actual learning.

This is a version of the debate we had when electronic calculators first became widely available in the 1970s. Though many people present calculator use as unproblematic, K-12 teachers still ban them when students are learning arithmetic. One study suggests that students use calculators as a way of circumventing the need to understand a mathematics problem (i.e., the same thing you and I use them for). In another experiment, when using a calculator programmed to “lie,” four in 10 students simply accepted the result that a woman born in 1945 was 114 in 1994. Johns Hopkins students with heavy calculator use in K-12 had worse math grades in college, and many claims about the positive effect of calculators take improved test scores as evidence, which is like concluding that someone can run faster if you give them a car. Calculators obviously have their uses, but we should not pretend that overreliance on them does not damage number sense, as everyone who has ever typed 7 x 8 into a calculator intuitively understands.

Studies of cognitive bias with AI use are starting to show similar patterns. A 2024 study with the blunt title “Generative AI Can Harm Learning” found that “access to GPT-4 significantly improves performance … However, we additionally find that when access is subsequently taken away, students actually perform worse than those who never had access.” Another found that students who have access to a large language model overestimate how much they have learned. A 2025 study from Carnegie Mellon University and Microsoft Research concludes that higher confidence in gen AI is associated with less critical thinking. As with calculators, there will be many tasks where automation is more important than user comprehension, but for student work, a tool that improves the output but degrades the experience is a bad tradeoff.

In 1980 the philosopher John Searle, writing about AI debates at the time, proposed a thought experiment called “The Chinese Room.” Searle imagined an English speaker with no knowledge of the Chinese language sitting in a room with an elaborate set of instructions, in English, for looking up one set of Chinese characters and finding a second set associated with the first. When a piece of paper with words in Chinese written on it slides under the door, the room’s occupant looks it up, draws the corresponding characters on another piece of paper, and slides that back. Unbeknownst to the room’s occupant, Chinese speakers on the other side of the door are slipping questions into the room, and the pieces of paper that slide back out are answers in perfect Chinese. With this imaginary setup, Searle asked whether the room’s occupant actually knows how to read and write Chinese. His answer was an unequivocal no.

When Searle proposed that thought experiment, no working AI could approximate that behavior; the paper was written to highlight the theoretical difference between acting with intent versus merely following instructions. Now it has become just another use of actually existing artificial intelligence, one that can destroy a student’s education.

The recent case of William A., as he was known in court documents, illustrates the threat. William was a student in Tennessee’s Clarksville-Montgomery County School system who struggled to learn to read. (He would eventually be diagnosed with dyslexia.) As is required under the Individuals With Disabilities Education Act, William was given an individualized educational plan by the school system, designed to provide a “free appropriate public education” that takes a student’s disabilities into account. As William progressed through school, his educational plan was adjusted, allowing him additional time plus permission to use technology to complete his assignments. He graduated in 2024 with a 3.4 GPA and an inability to read. He could not even spell his own name.

To complete written assignments, as described in the court proceedings, “William would first dictate his topic into a document using speech-to-text software”:

He then would paste the written words into an AI software like ChatGPT. Next, the AI software would generate a paper on that topic, which William would paste back into his own document. Finally, William would run that paper through another software program like Grammarly, so that it reflected an appropriate writing style.

This process is recognizably a practical version of the Chinese Room for translating between speaking and writing. That is how a kid can get through high school with a B+ average and near-total illiteracy.

A local court found that the school system had violated the Individuals With Disabilities Education Act, and ordered it to provide William with hundreds of hours of compensatory tutoring. The county appealed, maintaining that since William could follow instructions to produce the requested output, he’d been given an acceptable substitute for knowing how to read and write. On February 3, an appellate judge handed down a decision affirming the original judgement: William’s schools failed him by concentrating on whether he had completed his assignments, rather than whether he’d learned from them.

Searle took it as axiomatic that the occupant of the Chinese Room could neither read nor write Chinese; following instructions did not substitute for comprehension. The appellate-court judge similarly ruled that William A. had not learned to read or write English: Cutting and pasting from ChatGPT did not substitute for literacy. And what I and many of my colleagues worry is that we are allowing our students to build custom Chinese Rooms for themselves, one assignment at a time.

[ Via: https://archive.today/OgKaY ]

==

These are the students who want taxpayers to pay for their student debt.

#Steve McGuire#higher education#artificial intelligence#AI#academic standards#Chinese Room#literacy#corruption of education#NYU#New York University#religion is a mental illness

14 notes

Text

Today a colleague was showing me our online testing system and excitedly told me how it can write questions for you. I’m never going to use that feature.

52 notes

Text

youtube

How To Learn Math for Machine Learning FAST (Even With Zero Math Background)

I dropped out of high school and managed to become an Applied Scientist at Amazon by self-learning math (and other ML skills). In this video I'll show you exactly how I did it, sharing the resources and study techniques that worked for me, along with practical advice on what math you actually need (and don't need) to break into machine learning and data science.

#How To Learn Math for Machine Learning#machine learning#free education#education#youtube#technology#educate yourselves#educate yourself#tips and tricks#software engineering#data science#artificial intelligence#data analytics#data science course#math#mathematics#Youtube

21 notes

Text

Big Prank - make people study for 16 years and then replace them with AI.

#Big Prank - make people study for 16 years and then replace them with AI.#prank#study blog#studyblr#art study#study motivation#bible study#study#school#education#college#university#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government#artificial intelligence#anti artificial intelligence#anti ai#fuck ai#ai generated#ai art#ai artwork#ai image

9 notes

Text

The beginning of the fall of humanity. The AI take over. 🤔

#pay attention#educate yourselves#educate yourself#knowledge is power#reeducate yourselves#reeducate yourself#think about it#think for yourselves#think for yourself#do your homework#do your own research#do your research#do some research#ask yourself questions#question everything#ai#artificial intelligence#you decide

189 notes

Note

That ai post, I agree that ai is an awful thing that should've never gone that far but sometimes you literally can't escape it. I work as a data analyst and at least where I'm from every job related to my field now demands that you have knowledge of a lot of ai software.. And like a girl has to eat 😭.

As an academic if you include AI in your funding proposal you WILL get the money. It’s become a similar buzzword to quantum except much more powerful. And AI can be rightfully powerful for niche research related things and tensorflow isn’t ALL bad but things like the widespread use of AI in everyday life scare me. What happens when chatgpt needs to start making money, moves ALL of their software that a bunch of young adults now don’t know how to think without to a ridiculous subscription-based model for like 80$ a month? How much personal data is put in there each day? I just think it’s an absolute disaster waiting to happen, not to mention the AI arms race that’s exploded and everyone’s insistence on putting AI into everything including checks and balances to existing systems. I don’t have a good feeling, it really needs to be regulated and quick but all the old people in power don’t actually understand the crisis on their hands and just care about the jingle of the cash in their pockets.

#always feel very lucky to have gone through pre-grad education without ai#if I’d be given the magic essay writing machine at 14 I would’ve just used it#and that’s a WORRY#not to mention the environmental goals being steamrolled#and the fact that it requires so much computing power we DO NOT HAVE#nor ever will bc quantum is not the answer. physicists know this but economists who listened to a podcast once think they know better.#sigh.#asks#non f1#artificial intelligence

12 notes

Text

#informative#now you know#free#media#free media#free ebooks#artificial intelligence#ai#adblcok#streaming#torrent#torrents#gaming#reading#torrenting#educational#linux/macOS#linux#macOS#android#iOS#android/iOS#bsky#bsky.app#bluesky#reddit#discord#github

8 notes

Text

Yes, using LLM AI is a dubious ethical decision at best and that deserves to be treated with the proper weight. However, using that as an excuse to completely reject any and all education regarding LLMs is misguided. If we are to criticize LLMs, we should first know how they work and what the intended approaches are, then work from there. Going headfirst without knowing the enemy is a death sentence.

#artificial intelligence#ai discourse#education#Almost all the insults and criticisms and disdain towards LLMs and AI is deserved#but come on.#Refusing to learn is just going to be the death of us#Do we really wanna fall for the same mistakes that’s led to the rampant anti-intellectualism we’re dealing with today

5 notes

Text

Some thoughts on Ai and why I hate it:

Ai is so bad for the environment: it heavily affects cities' water and electricity, so much so that the amount used on Ai has been quoted as "astonishing". It has also caused massive losses in the stock market. Ai has never been and will never be the solution.

https://www.independent.co.uk/tech/ai-artificial-intelligence-environment-climate-b2643918.html

Writing and reading skills are very important !! Ai is destroying creative spaces as well as critical thinking ability in things like brainstorming, problem-solving, and understanding concepts.

I don't really have an actual problem with most of how people use ai IN FANFICTION (aside from when it's used to create stories from scratch.) It is okay to need spelling and grammar help, and if you continue to write and fix those mistakes you are learning and that's important.

If you are using Ai so that you don't have to learn (in particular as a child or teenager) then I think it's a problem because of your necessary cognitive development. Ai does many good things for education too and I don't want to dismiss that. I have much less of a problem when adults are using it because they have already learned how to research and they have developed media literacy (hopefully).

Another problem I see is with the way access to education has been treated in the past. When someone is powerful they want to do everything they can to keep that power and one of the first things that is often eliminated or changed is education. Being able to learn is such an important and powerful thing.

One of the main things Ai is used for is creating pictures and I hope everyone knows how harmful that is to artists, it's awful. There are also many Ai programs that are used for creating sexual content, Ai can be used to make explicit content of anyone without their consent.

I don't support Ai. Supporting one kind of Ai is supporting all forms of Ai.

#protect trans rights in the uk please write to the pm and your mp#ai is evil#anti ai#anti artificial intelligence#artificial intelligence#ai#fanfiction#fanfic#why ai is bad#why ai is evil#how ai affects the environment#education#protect education#anti ai in school

7 notes
