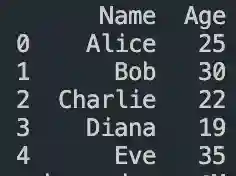

#how to manipulate data in pandas

Data Manipulation: A Beginner's Guide to Pandas Dataframe Operations

Outline: What’s a Pandas Dataframe? (Think Spreadsheet on Steroids!) Say Goodbye to Messy Data: Pandas Tames the Beast Rows, Columns, and More: Navigating the Dataframe Landscape Mastering the Magic: Essential Dataframe Operations Selection Superpower: Picking the Data You Need Grab Specific Columns: Like Picking Out Your Favorite Colors Filter Rows with Precision: Finding Just the Right…
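A minimal sketch of the operations the outline names (grabbing specific columns and filtering rows), along with sorting and grouping, two other common DataFrame operations. It assumes pandas is installed; the `sales` DataFrame and its column names are invented for illustration and do not come from the original post.

```python
import pandas as pd

# Hypothetical sample data; the column names are illustrative only.
sales = pd.DataFrame(
    {
        "region": ["North", "South", "North", "East"],
        "product": ["apple", "apple", "pear", "pear"],
        "units": [10, 4, 7, 12],
    }
)

# Select specific columns by passing a list of names.
subset = sales[["region", "units"]]

# Filter rows with a boolean mask.
big_orders = sales[sales["units"] > 5]

# Sort rows by a column, largest first.
ranked = sales.sort_values("units", ascending=False)

# Group rows by a key and aggregate each group.
units_by_region = sales.groupby("region")["units"].sum()
```

Each operation returns a new object and leaves `sales` unchanged, which makes it easy to chain steps or inspect intermediate results.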

What are the skills needed for a data scientist job?

It’s one of those careers that’s been getting a lot of buzz lately, and for good reason. But what exactly do you need to become a data scientist? Let’s break it down.

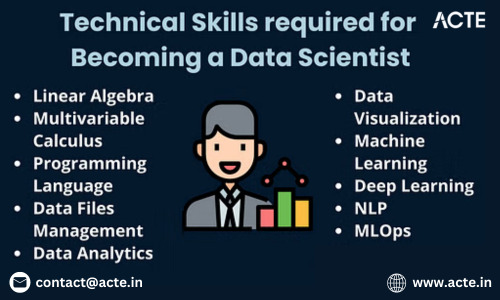

Technical Skills

First off, let's talk about the technical skills. These are the nuts and bolts of what you'll be doing every day.

Programming Skills: At the top of the list is programming. You’ll need to be proficient in languages like Python and R. These are the go-to tools for data manipulation, analysis, and visualization. If you’re comfortable writing scripts and solving problems with code, you’re on the right track.

Statistical Knowledge: Next up, you’ve got to have a solid grasp of statistics. This isn’t just about knowing the theory; it’s about applying statistical techniques to real-world data. You’ll need to understand concepts like regression, hypothesis testing, and probability.

Machine Learning: Machine learning is another biggie. You should know how to build and deploy machine learning models. This includes everything from simple linear regressions to complex neural networks. Familiarity with libraries like scikit-learn, TensorFlow, and PyTorch will be a huge plus.

Data Wrangling: Data isn’t always clean and tidy when you get it. Often, it’s messy and requires a lot of preprocessing. Skills in data wrangling, which means cleaning and organizing data, are essential. Tools like Pandas in Python can help a lot here.

Data Visualization: Being able to visualize data is key. It’s not enough to just analyze data; you need to present it in a way that makes sense to others. Tools like Matplotlib, Seaborn, and Tableau can help you create clear and compelling visuals.
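To make the data wrangling point above concrete, here is a small sketch of a typical cleanup pass, assuming pandas is installed. The `raw` table and its problems (inconsistent casing, a duplicate row, a missing value, numbers stored as text) are made up for illustration.

```python
import pandas as pd

# Hypothetical messy input: inconsistent text, a duplicate row,
# a missing value, and numbers stored as strings.
raw = pd.DataFrame(
    {
        "name": ["Ana", "ana ", "Ben", "Cy"],
        "age": ["34", "34", None, "29"],
    }
)

clean = raw.copy()

# Normalize text so that "Ana" and "ana " compare as equal.
clean["name"] = clean["name"].str.strip().str.title()

# Remove repeated rows, then rows with no age, and renumber the index.
clean = clean.drop_duplicates().dropna(subset=["age"]).reset_index(drop=True)

# Convert the remaining age strings to numbers.
clean["age"] = pd.to_numeric(clean["age"])
```

The order matters here: normalizing the names first is what lets `drop_duplicates` recognize the second row as a repeat of the first.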

Analytical Skills

Now, let’s talk about the analytical skills. These are just as important as the technical skills, if not more so.

Problem-Solving: At its core, data science is about solving problems. You need to be curious and have a knack for figuring out why something isn’t working and how to fix it. This means thinking critically and logically.

Domain Knowledge: Understanding the industry you’re working in is crucial. Whether it’s healthcare, finance, marketing, or any other field, knowing the specifics of the industry will help you make better decisions and provide more valuable insights.

Communication Skills: You might be working with complex data, but if you can’t explain your findings to others, it’s all for nothing. Being able to communicate clearly and effectively with both technical and non-technical stakeholders is a must.

Soft Skills

Don’t underestimate the importance of soft skills. These might not be as obvious, but they’re just as critical.

Collaboration: Data scientists often work in teams, so being able to collaborate with others is essential. This means being open to feedback, sharing your ideas, and working well with colleagues from different backgrounds.

Time Management: You’ll likely be juggling multiple projects at once, so good time management skills are crucial. Knowing how to prioritize tasks and manage your time effectively can make a big difference.

Adaptability: The field of data science is always evolving. New tools, techniques, and technologies are constantly emerging. Being adaptable and willing to learn new things is key to staying current and relevant in the field.

Conclusion

So, there you have it. Becoming a data scientist requires a mix of technical prowess, analytical thinking, and soft skills. It’s a challenging but incredibly rewarding career path. If you’re passionate about data and love solving problems, it might just be the perfect fit for you.

Good luck to all of you aspiring data scientists out there!

Exploring Data Science Tools: My Adventures with Python, R, and More

Welcome to my data science journey! In this blog post, I'm excited to take you on a captivating adventure through the world of data science tools. We'll explore the significance of choosing the right tools and how they've shaped my path in this thrilling field.

Choosing the right tools in data science is akin to a chef selecting the finest ingredients for a culinary masterpiece. Each tool has its unique flavor and purpose, and understanding their nuances is key to becoming a proficient data scientist.

I. The Quest for the Right Tool

My journey began with confusion and curiosity. The world of data science tools was vast and intimidating. I questioned which programming language would be my trusted companion on this expedition. The importance of selecting the right tool soon became evident.

I embarked on a research quest, delving deep into the features and capabilities of various tools. Python and R emerged as the frontrunners, each with its strengths and applications. These two contenders became the focus of my data science adventures.

II. Python: The Swiss Army Knife of Data Science

Python, often hailed as the Swiss Army Knife of data science, stood out for its versatility and widespread popularity. Its extensive library ecosystem, including NumPy for numerical computing, pandas for data manipulation, and Matplotlib for data visualization, made it a compelling choice.

My first experiences with Python were both thrilling and challenging. I dove into coding, faced syntax errors, and wrestled with data structures. But with each obstacle, I discovered new capabilities and expanded my skill set.

III. R: The Statistical Powerhouse

In the world of statistics, R shines as a powerhouse. Its statistical packages like dplyr for data manipulation and ggplot2 for data visualization are renowned for their efficacy. As I ventured into R, I found myself immersed in a world of statistical analysis and data exploration.

My journey with R included memorable encounters with data sets, where I unearthed hidden insights and crafted beautiful visualizations. The statistical prowess of R truly left an indelible mark on my data science adventure.

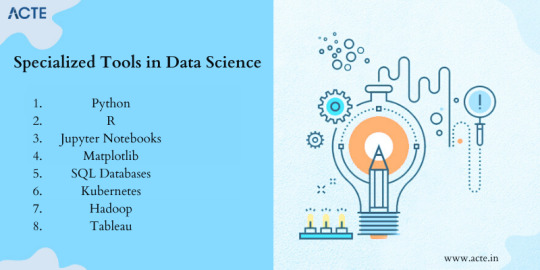

IV. Beyond Python and R: Exploring Specialized Tools

While Python and R were my primary companions, I couldn't resist exploring specialized tools and programming languages that catered to specific niches in data science. These tools offered unique features and advantages that added depth to my skill set.

For instance, tools like SQL allowed me to delve into database management and querying, while Scala opened doors to big data analytics. Each tool found its place in my toolkit, serving as a valuable asset in different scenarios.

V. The Learning Curve: Challenges and Rewards

The path I took wasn't without its share of difficulties. Learning Python, R, and specialized tools presented a steep learning curve. Debugging code, grasping complex algorithms, and troubleshooting errors were all part of the process.

However, these challenges brought about incredible rewards. With persistence and dedication, I overcame obstacles, gained a profound understanding of data science, and felt a growing sense of achievement and empowerment.

VI. Leveraging Python and R Together

One of the most exciting revelations in my journey was discovering the synergy between Python and R. These two languages, once considered competitors, complemented each other beautifully.

I began integrating Python and R seamlessly into my data science workflow. Python's data manipulation capabilities combined with R's statistical prowess proved to be a winning combination. Together, they enabled me to tackle diverse data science tasks effectively.

VII. Tips for Beginners

For fellow data science enthusiasts beginning their own journeys, I offer some valuable tips:

Embrace curiosity and stay open to learning.

Work on practical projects while engaging in frequent coding practice.

Explore data science courses and resources to enhance your skills.

Seek guidance from mentors and engage with the data science community.

Remember that the journey is continuous—there's always more to learn and discover.

My adventures with Python, R, and various data science tools have been transformative. I've learned that choosing the right tool for the job is crucial, but versatility and adaptability are equally important traits for a data scientist.

As I summarize my expedition, I emphasize the significance of selecting tools that align with your project requirements and objectives. Each tool has a unique role to play, and mastering them unlocks endless possibilities in the world of data science.

I encourage you to embark on your own tool exploration journey in data science. Embrace the challenges, relish the rewards, and remember that the adventure is ongoing. May your path in data science be as exhilarating and fulfilling as mine has been.

Happy data exploring!

AI Frameworks Help Data Scientists For GenAI Survival

AI Frameworks: Crucial to the Success of GenAI

Develop Your AI Capabilities Now

You play a crucial part in the quickly growing field of generative artificial intelligence (GenAI) as a data scientist. Your proficiency in data analysis, modeling, and interpretation is still essential, even though platforms like Hugging Face and LangChain are at the forefront of AI research.

Although GenAI systems can produce remarkable results, they still depend heavily on clean, organized data and perceptive interpretation, areas in which data scientists are highly skilled. By applying your in-depth knowledge of data and statistical techniques, you can direct GenAI models toward more precise, useful predictions. Your job as a data scientist is crucial to ensuring that GenAI systems rest on strong, data-driven foundations and can realize their full potential. Here’s how to take the lead:

Data Quality Is Crucial

The effectiveness of even the most sophisticated GenAI models depends on the quality of the data they use. Tools like pandas and Modin let you clean, preprocess, and manipulate large datasets so that the data a model sees is relevant.

Exploratory Data Analysis and Interpretation

It is essential to understand the features and trends of the data before building models. Visualization libraries such as Matplotlib and Seaborn plot both the data and the model outputs, which helps developers understand the data, select features, and interpret the models.

Model Optimization and Evaluation

A variety of algorithms for model construction are offered by AI frameworks like scikit-learn, PyTorch, and TensorFlow. To improve models and their performance, they provide a range of techniques for cross-validation, hyperparameter optimization, and performance evaluation.

Model Deployment and Integration

Tools such as ONNX Runtime and MLflow help with cross-platform deployment and experimentation tracking. By guaranteeing that the models continue to function successfully in production, this helps the developers oversee their projects from start to finish.

Intel’s Optimized AI Frameworks and Tools

Developers can keep using the technologies they already know in data analytics, machine learning, and deep learning (such as Modin, NumPy, scikit-learn, and PyTorch). Intel has optimized these existing AI tools and frameworks for the many phases of the AI process, such as data preparation, model training, inference, and deployment. The optimizations are built on oneAPI, a single, open, multiarchitecture, multivendor programming model.

Data Engineering and Model Development:

To speed up end-to-end data science pipelines on Intel architecture, use Intel’s AI Tools, which include Python tools and frameworks like Modin, Intel Optimization for TensorFlow, Intel Optimization for PyTorch, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost.

Optimization and Deployment

For CPU or GPU deployment, Intel Neural Compressor speeds up deep learning inference and reduces model size. The OpenVINO toolkit optimizes models and deploys them across several hardware platforms, including Intel CPUs.

You may improve the performance of your Intel hardware platforms with the aid of these AI tools.

Library of Resources

Discover a collection of professionally created, carefully selected resources that focus on the core data science competencies developers need, and explore machine learning and deep learning AI frameworks.

What you will discover:

Use Modin to expedite the extract, transform, and load (ETL) process for enormous DataFrames and analyze massive datasets.

To improve speed on Intel hardware, use Intel’s optimized AI frameworks (such as Intel Optimization for XGBoost, Intel Extension for Scikit-learn, Intel Optimization for PyTorch, and Intel Optimization for TensorFlow).

Use Intel-optimized software on the most recent Intel platforms to implement and deploy AI workloads on Intel Tiber AI Cloud.

How to Begin

Frameworks for Data Engineering and Machine Learning

Step 1: View the Modin, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost videos and read the introductory papers.

Modin: To achieve a quicker turnaround time overall, the video explains when to utilize Modin and how to apply Modin and Pandas judiciously. A quick start guide for Modin is also available for more in-depth information.

Intel Extension for Scikit-learn: This tutorial gives you an overview of the extension, walks you through the code step by step, and explains how using it can improve performance. A video on accelerating K-means clustering, PCA, and silhouette machine learning techniques is also available.

Intel Optimization for XGBoost: This straightforward tutorial explains Intel Optimization for XGBoost and how to use Intel optimizations to enhance training and inference performance.

Step 2: Use Intel Tiber AI Cloud to create and develop machine learning workloads.

On Intel Tiber AI Cloud, this tutorial runs machine learning workloads with Modin, scikit-learn, and XGBoost.

Step 3: Use Modin and scikit-learn to create an end-to-end machine learning process using census data.

Run an end-to-end machine learning task using 1970–2010 US census data with this code sample. The code sample uses the Intel Distribution of Modin for exploratory data analysis and the Intel Extension for Scikit-learn for ridge regression.

Deep Learning Frameworks

Step 4: Begin by watching the videos and reading the introduction papers for Intel’s PyTorch and TensorFlow optimizations.

Intel PyTorch Optimizations: Read the article to learn how to use the Intel Extension for PyTorch to accelerate your inference and training workloads. Additionally, a brief video demonstrates how to use the extension to run PyTorch inference on an Intel Data Center GPU Flex Series.

Intel’s TensorFlow Optimizations: The article and video provide an overview of the Intel Extension for TensorFlow and demonstrate how to utilize it to accelerate your AI tasks.

Step 5: Use TensorFlow and PyTorch for AI on the Intel Tiber AI Cloud.

This article shows how to use PyTorch and TensorFlow on Intel Tiber AI Cloud to create and run complex AI workloads.

Step 6: Speed up LSTM text generation with Intel Extension for TensorFlow.

The Intel Extension for TensorFlow can speed up LSTM model training for text generation.

Step 7: Use PyTorch and DialoGPT to create an interactive chat-generation model.

Discover how to use Hugging Face’s pretrained DialoGPT model to create an interactive chat model and how to use the Intel Extension for PyTorch to dynamically quantize the model.

Read more on Govindhtech.com

How much Python should one learn before beginning machine learning?

Before diving into machine learning, a solid understanding of Python is essential. Here is what to cover:

Basic Python Knowledge:

Syntax and Data Types:

Understand Python syntax, basic data types (strings, integers, floats), and operations.

Control Structures:

Learn how to use conditionals (if statements), loops (for and while), and list comprehensions.
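The basics listed above fit in a few lines. This is only an illustrative sketch; the variable names and values are made up.

```python
# Basic data types: string, integer, float (inside a list).
label = "temperature"
count = 3
readings = [18.5, 21.0, 25.5]
summary = f"{count} {label} readings"

# A for loop with a conditional inside it.
warm = []
for value in readings:
    if value >= 20:
        warm.append(value)

# The same filter written as a list comprehension.
warm_again = [value for value in readings if value >= 20]

# A while loop that halves a number until it drops below 1.
steps = 0
n = 10.0
while n >= 1:
    n /= 2
    steps += 1
```

The loop and the comprehension produce the same list; the comprehension is simply the more compact form once the pattern is familiar.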

Data Handling Libraries:

Pandas:

Familiarize yourself with Pandas for data manipulation and analysis. Learn how to handle DataFrames, series, and perform data cleaning and transformations.

NumPy:

Understand NumPy for numerical operations, working with arrays, and performing mathematical computations.

Data Visualization:

Matplotlib and Seaborn:

Learn basic plotting with Matplotlib and Seaborn for visualizing data and understanding trends and distributions.

Basic Programming Concepts:

Functions:

Know how to define and use functions to create reusable code.

File Handling:

Learn how to read from and write to files, which is important for handling datasets.
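As a sketch of how functions and file handling combine when working with datasets, the example below writes a tiny CSV file and reads it back using only the standard library. The helper names (`write_rows`, `read_rows`) and the file name are hypothetical.

```python
import csv
import tempfile
from pathlib import Path


def write_rows(path, header, rows):
    """Write a header line and data rows to a CSV file."""
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(header)
        writer.writerows(rows)


def read_rows(path):
    """Read a CSV file into a list of dictionaries keyed by column name."""
    with open(path, newline="") as handle:
        return list(csv.DictReader(handle))


# Use a temporary directory so the example leaves no files behind.
with tempfile.TemporaryDirectory() as tmp:
    data_path = Path(tmp) / "scores.csv"  # hypothetical file name
    write_rows(data_path, ["name", "score"], [["Ana", 90], ["Ben", 75]])
    records = read_rows(data_path)
```

Note that `csv` returns every value as a string, so `records[0]["score"]` is `"90"`, not `90`; converting types after loading is part of the data cleaning step.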

Basic Statistics:

Descriptive Statistics:

Understand mean, median, mode, standard deviation, and other basic statistical concepts.

Probability:

Basic knowledge of probability is useful for understanding concepts like distributions and statistical tests.
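These statistical measures can be tried out with Python's built-in `statistics` module before moving on to NumPy or pandas. The scores below are a made-up sample.

```python
import statistics

scores = [70, 85, 85, 90, 100]  # made-up sample

mean = statistics.mean(scores)      # arithmetic average
median = statistics.median(scores)  # middle value of the sorted data
mode = statistics.mode(scores)      # most frequent value
spread = statistics.stdev(scores)   # sample standard deviation

# An empirical probability: the share of scores that are 85 or higher.
p_high = sum(score >= 85 for score in scores) / len(scores)
```

`statistics.stdev` computes the sample standard deviation (dividing by n - 1); `statistics.pstdev` is the population version.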

Libraries for Machine Learning:

Scikit-learn:

Get familiar with Scikit-learn for basic machine learning tasks like classification, regression, and clustering. Understand how to use it for training models, evaluating performance, and making predictions.

Hands-on Practice:

Projects:

Work on small projects or Kaggle competitions to apply your Python skills in practical scenarios. This helps in understanding how to preprocess data, train models, and interpret results.

In summary, a good grasp of Python basics, data handling, and basic statistics will prepare you well for starting with machine learning. Hands-on practice with machine learning libraries and projects will further solidify your skills.

To learn more drop the message…!

Tips for the Best Way to Learn Python from Scratch to Pro

Python, often regarded as one of the most beginner-friendly programming languages, offers an excellent entry point for those looking to embark on a coding journey. Whether you aspire to become a Python pro or simply want to add a valuable skill to your repertoire, the path to Python proficiency is well-paved. In this blog, we’ll outline a comprehensive strategy to learn Python from scratch to pro, and we’ll also touch upon how ACTE Institute can accelerate your journey with its job placement services.

1. Start with the basics:

Every journey begins with a single step. Familiarise yourself with Python’s fundamental concepts, including variables, data types, and basic operations. Online platforms like Codecademy, Coursera, and edX offer introductory Python courses for beginners.

2. Learn Control Structures:

Master Python’s control structures, such as loops and conditional statements. These are essential for writing functional code. Sites like HackerRank and LeetCode provide coding challenges to practice your skills.

3. Dive into Functions:

Understand the significance of functions in Python. Learn how to define your functions, pass arguments, and return values. Functions are the building blocks of Python programmes.

4. Explore Data Structures:

Delve into Python’s versatile data structures, including lists, dictionaries, tuples, and sets. Learn their usage and when to apply them in real-world scenarios.

5. Object-Oriented Programming (OOP):

Python is an object-oriented language. Learn OOP principles like classes and objects. Understand encapsulation, inheritance, and polymorphism.

6. Modules and Libraries:

Python’s strength lies in its extensive libraries and modules. Explore popular libraries like NumPy, Pandas, and Matplotlib for data manipulation and visualisation.

7. Web Development with Django or Flask:

If web development interests you, pick up a web framework like Django or Flask. These frameworks simplify building web applications using Python.

8. Dive into Data Science:

Python is a dominant language in the field of data science. Learn how to use libraries like SciPy and Scikit-Learn for data analysis and machine learning.

9. Real-World Projects:

Apply your knowledge by working on real-world projects. Create a portfolio showcasing your Python skills. Platforms like GitHub allow you to share your projects with potential employers.

10. Continuous learning:

Python is a dynamic language, with new features and libraries regularly introduced. Stay updated with the latest developments by following Python communities, blogs, and podcasts.
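Steps 4 and 5 above (data structures and object-oriented programming) can be sketched in one short example. The class names and values are invented for illustration.

```python
# Step 4: the four core built-in data structures.
fruits = ["apple", "pear", "apple"]    # list: ordered, mutable, allows duplicates
prices = {"apple": 0.5, "pear": 0.75}  # dict: maps keys to values
origin = (52.52, 13.40)                # tuple: ordered, immutable
unique_fruits = set(fruits)            # set: unordered, no duplicates

total = sum(prices[fruit] for fruit in fruits)


# Step 5: a base class and a subclass.
class Shape:
    """Base class; subclasses are expected to override area()."""

    def __init__(self, name):
        self.name = name

    def area(self):
        raise NotImplementedError

    def describe(self):
        return f"{self.name} with area {self.area():.2f}"


class Rectangle(Shape):  # inheritance: Rectangle reuses Shape.describe()
    def __init__(self, width, height):
        super().__init__("rectangle")
        # A leading underscore marks attributes as internal by convention
        # (Python's lightweight form of encapsulation).
        self._width = width
        self._height = height

    def area(self):  # polymorphism: same method name, class-specific behavior
        return self._width * self._height


description = Rectangle(2, 3).describe()
```

`describe()` is defined once on `Shape` but calls whichever `area()` the concrete object provides, which is the practical payoff of inheritance and polymorphism.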

The ACTE Institute offers a structured Python training programme that covers the entire spectrum of Python learning. Here’s how they can accelerate your journey:

Comprehensive Curriculum: ACTE’s Python course includes hands-on exercises, assignments, and real-world projects. You’ll gain practical experience and a deep understanding of Python’s applications.

Experienced Instructors: Learn from certified Python experts with years of industry experience. Their guidance ensures you receive industry-relevant insights.

Job Placement Services: One of ACTE’s standout features is its job placement assistance. They have a network of recruiting clients, making it easier for you to land a Python-related job.

Flexibility: ACTE offers both online and offline Python courses, allowing you to choose the mode that suits your schedule.

The journey from Python novice to pro involves continuous learning and practical application. ACTE Institute can be your trusted partner in this journey, providing not only comprehensive Python training but also valuable job placement services. Whether you aspire to be a Python developer, data scientist, or web developer, mastering Python opens doors to diverse career opportunities. So, take that first step, start your Python journey, and let ACTE Institute guide you towards Python proficiency and a rewarding career.

I hope I answered your question successfully. If not, feel free to mention it in the comments area. I believe I still have much to learn.

If you feel that my response has been helpful, make sure to Follow me on Tumblr and give it an upvote to encourage me to upload more content about Python.

Thank you for spending your valuable time and upvotes here. Have a great day.

AvatoAI Review: Unleashing the Power of AI in One Dashboard

Here's what Avato Ai can do for you

Data Analysis:

Analyze CSV, Excel, or JSON files using Python and libraries like pandas or matplotlib.

Clean data, calculate statistical information and visualize data through charts or plots.

Document Processing:

Extract and manipulate text from text files or PDFs.

Perform tasks such as searching for specific strings, replacing content, and converting text to different formats.

Image Processing:

Upload image files for manipulation using libraries like OpenCV.

Perform operations like converting images to grayscale, resizing, and detecting shapes or

Machine Learning:

Utilize Python's machine learning libraries for predictions, clustering, natural language processing, and image recognition by uploading

Versatile & Broad Use Cases:

An incredibly diverse range of applications. From creating inspirational art to modeling scientific scenarios, to designing novel game elements, and more.

User-Friendly API Interface:

Access and control the power of this advanced AI technology through a user-friendly API.

Even if you're not a machine learning expert, using the API is easy and quick.

Customizable Outputs:

Lets you create custom visual content by inputting a simple text prompt.

The AI will generate an image based on your provided description, enhancing the creativity and efficiency of your work.

Stable Diffusion API:

Enrich Your Image Generation to Unprecedented Heights.

Stable diffusion API provides a fine balance of quality and speed for the diffusion process, ensuring faster and more reliable results.

Multi-Lingual Support:

Generate captivating visuals based on prompts in multiple languages.

Set the panorama parameter to 'yes' and watch as our API stitches together images to create breathtaking wide-angle views.

Variation for Creative Freedom:

Embrace creative diversity with the Variation parameter. Introduce controlled randomness to your generated images, allowing for a spectrum of unique outputs.

Efficient Image Analysis:

Save time and resources with automated image analysis. The feature allows the AI to sift through bulk volumes of images and sort out vital details or tags that are valuable to your context.

Advance Recognition:

The Vision API integration recognizes prominent elements in images - objects, faces, text, and even emotions or actions.

Interactive "Image within Chat" Feature:

Say goodbye to going back and forth between screens and focus only on productive tasks.

Here's what you can do with it:

Visualize Data:

Create colorful, informative, and accessible graphs and charts from your data right within the chat.

Interpret complex data with visual aids, making data analysis a breeze!

Manipulate Images:

Want to demonstrate the raw power of image manipulation? Upload an image, and watch as our AI performs transformations, like resizing, filtering, rotating, and much more, live in the chat.

Generate Visual Content:

Creating and viewing visual content has never been easier. Generate images, simple or complex, right within your conversation.

Preview Data Transformation:

If you're working with image data, you can demonstrate live how certain transformations or operations will change your images.

This can be particularly useful for fields like data augmentation in machine learning or image editing in digital graphics.

Effortless Communication:

Say goodbye to static text as our innovative technology crafts natural-sounding voices. Choose from a variety of male and female voice types to tailor the auditory experience, adding a dynamic layer to your content and making communication more effortless and enjoyable.

Enhanced Accessibility:

Break barriers and reach a wider audience. Our Text-to-Speech feature enhances accessibility by converting written content into audio, ensuring inclusivity and understanding for all users.

Customization Options:

Tailor the audio output to suit your brand or project needs.

From tone and pitch to language preferences, our Text-to-Speech feature offers customizable options for the truest personalized experience.

Building Blocks of Data Science: What You Need to Succeed

Embarking on a journey in data science is a thrilling endeavor that requires a combination of education, skills, and an insatiable curiosity. Choosing the Best Data Science Institute can further accelerate your journey into this thriving industry. Whether you're a seasoned professional or a newcomer to the field, here's a comprehensive guide to what is required to study data science.

1. Educational Background: Building a Solid Foundation

A strong educational foundation is the bedrock of a successful data science career. Mastery of mathematics and statistics, encompassing algebra, calculus, probability, and descriptive statistics, lays the groundwork for advanced data analysis. While a bachelor's degree in computer science, engineering, mathematics, or statistics is advantageous, data science is a field that welcomes individuals with diverse educational backgrounds.

2. Programming Skills: The Language of Data

Proficiency in programming languages is a non-negotiable skill for data scientists. Python and R stand out as the languages of choice in the data science community. Online platforms provide interactive courses, making the learning process engaging and effective.

3. Data Manipulation and Analysis: Unraveling Insights

The ability to manipulate and analyze data is at the core of data science. Familiarity with data manipulation libraries, such as Pandas in Python, is indispensable. Understanding how to clean, preprocess, and derive insights from data is a fundamental skill.

4. Database Knowledge: Harnessing Data Sources

Basic knowledge of databases and SQL is beneficial. Data scientists often need to extract and manipulate data from databases, making this skill essential for effective data handling.

5. Machine Learning Fundamentals: Unlocking Predictive Power

A foundational understanding of machine learning concepts is key. Online courses and textbooks cover supervised and unsupervised learning, various algorithms, and methods for evaluating model performance.

6. Data Visualization: Communicating Insights Effectively

Proficiency in data visualization tools like Matplotlib, Seaborn, or Tableau is valuable. The ability to convey complex findings through visuals is crucial for effective communication in data science.

7. Domain Knowledge: Bridging Business and Data

Depending on the industry, having domain-specific knowledge is advantageous. This knowledge helps data scientists contextualize their findings and make informed decisions from a business perspective.

8. Critical Thinking and Problem-Solving: The Heart of Data Science

Data scientists are, at their core, problem solvers. Developing critical thinking skills is essential for approaching problems analytically and deriving meaningful insights from data.

9. Continuous Learning: Navigating the Dynamic Landscape

The field of data science is dynamic, with new tools and techniques emerging regularly. A commitment to continuous learning and staying updated on industry trends is vital for remaining relevant in this ever-evolving field.

10. Communication Skills: Bridging the Gap

Strong communication skills, both written and verbal, are imperative for data scientists. The ability to convey complex technical findings in a comprehensible manner is crucial, especially when presenting insights to non-technical stakeholders.

11. Networking and Community Engagement: A Supportive Ecosystem

Engaging with the data science community is a valuable aspect of the learning process. Attend meetups, participate in online forums, and connect with experienced practitioners to gain insights, support, and networking opportunities.

12. Hands-On Projects: Applying Theoretical Knowledge

Application of theoretical knowledge through hands-on projects is a cornerstone of mastering data science. Building a portfolio of projects showcases practical skills and provides tangible evidence of your capabilities to potential employers.

In conclusion, the journey in data science is unique for each individual. Tailor your learning path based on your strengths, interests, and career goals. Continuous practice, real-world application, and a passion for solving complex problems will pave the way to success in the dynamic and ever-evolving field of data science. Choosing the best Data Science Courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.

3 notes

Text

Python's Age: Unlocking the Potential of Programming

Introduction:

Python has become a powerful force in the ever-changing world of computer languages, influencing how developers approach software development. Python's period is distinguished by its adaptability, ease of use, and vast ecosystem that supports a wide range of applications. Python has established itself as a top choice for developers globally, spanning from web programming to artificial intelligence. We shall examine the traits that characterize the Python era and examine its influence on the programming community in this post. Learn Python from Uncodemy which provides the best Python course in Noida and become part of this powerful force.

Versatility and Simplicity:

Python stands out in large part because of its adaptability. As a general-purpose language with many applications, it is a strong option for developers in a variety of fields. Its syntax is easy to learn and read: straightforward, concise, and close to English. This simplicity has drawn both novice and experienced developers and fostered a thriving, diverse community.

Community and Collaboration:

The Python community is well known for being open-minded and cooperative. Python keeps growing because developers from all around the world create libraries, frameworks, and tools that make it better. Because the community is collaborative by nature, a large ecosystem has grown up around the language, full of resources that developers can easily access. The community offers a helpful atmosphere for all users, whether you are a novice seeking advice or an expert developer searching for answers.

Web Development with Django and Flask:

Frameworks such as Django and Flask have helped Python become a major force in web development. Django is a high-level web framework whose "batteries-included" design speeds up development. In contrast, Flask is a lightweight, modular framework that lets developers select the components that best suit their needs. These frameworks have made it easier to create dependable and scalable web applications, which has helped Python gain traction in the web development industry.

Data Science and Machine Learning:

Python has unmatched capabilities in data science and machine learning. The data science toolkit has become incomplete without libraries like NumPy, pandas, and matplotlib, which make data manipulation, analysis, and visualization possible. Two potent machine learning frameworks, TensorFlow and PyTorch, have cemented Python's place in the artificial intelligence field. Data scientists and machine learning engineers can concentrate on the nuances of their models instead of wrangling with complicated code thanks to Python's simple syntax.

Automation and Scripting:

Python is a great choice for activities ranging from straightforward scripts to intricate automation workflows because of its adaptability in automation and scripting. The readable and succinct syntax of the language makes it easier to write automation scripts that are both effective and simple to comprehend. Python has evolved into a vital tool for optimizing operations, used by DevOps engineers to manage deployment pipelines and system administrators to automate repetitive processes.
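As a small illustration of the kind of automation script described above, here is a minimal sketch that uses only the standard library to summarize a folder by file extension. The function name `group_by_extension` is made up for this example, and a real clean-up script would likely go on to move or rename the files.

```python
from collections import defaultdict
from pathlib import Path


def group_by_extension(folder):
    """Map each file extension in `folder` to a sorted list of file names."""
    groups = defaultdict(list)
    for path in Path(folder).iterdir():
        if path.is_file():
            # Files without an extension are grouped under "(none)".
            groups[path.suffix.lower() or "(none)"].append(path.name)
    return {ext: sorted(names) for ext, names in groups.items()}


if __name__ == "__main__":
    # Summarize the current working directory.
    for ext, names in sorted(group_by_extension(".").items()):
        print(f"{ext}: {len(names)} file(s)")
```

The whole task fits in about a dozen readable lines, which is the main point the paragraph makes about scripting in Python.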

Education and Python Courses:

The popularity of Python has also raised the demand for Python classes from people who want to learn programming. For both novices and experts, Python courses offer an organized learning path that covers a variety of subjects, including syntax, data structures, algorithms, web development, and more. Many educational institutions in the Noida area provide Python classes that give a thorough and practical learning experience for anyone who wants to learn more about the language.

Open Source Development:

The main reason for Python's broad usage has been its dedication to open-source development. The Python Software Foundation (PSF) is responsible for managing the language's advancement and upkeep, guaranteeing that programmers everywhere can continue to use it without restriction. This collaborative and transparent approach encourages creativity and lets developers make improvements to the language. Because Python is open-source, it has been possible for developers to actively shape the language's development in a community-driven ecosystem.

Cybersecurity and Ethical Hacking:

Python has emerged as a standard language in the fields of ethical hacking and cybersecurity. Its ease of use and large library ecosystem make it a great option for penetration testing and for building security tools. Because of Python's adaptability, cybersecurity experts can effectively handle a variety of security issues. As cybersecurity becomes more important, Python plays an increasingly large part in system and network security.

Startups and Entrepreneurship:

Python is a great option for startups and business owners due to its flexibility and rapid development cycles. Small teams can quickly prototype and create products thanks to the language's ease of learning, which reduces time to market. Additionally, companies may create complex solutions without having to start from scratch thanks to Python's large library and framework ecosystem. Python's ability to fuel creative ideas has been leveraged by numerous successful firms, adding to the language's standing as an engine for entrepreneurship.

Remote Collaboration and Cloud Computing:

Python's heyday aligns with a paradigm shift towards cloud computing and remote collaboration. Python is a good choice for creating cloud-based apps because of its smooth integration with cloud services and support for asynchronous programming. Python's readable and simple syntax makes it easier for developers working remotely or in dispersed teams to collaborate effectively, especially in light of the growing popularity of remote work and distributed teams. The language's position in the changing cloud computing landscape is further cemented by its compatibility with key cloud providers.
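To make the mention of asynchronous programming concrete, here is a minimal sketch using the standard-library `asyncio` module. The service names and delays are invented, and `asyncio.sleep` stands in for a real network call to a cloud service.

```python
import asyncio


async def fetch_status(service, delay):
    """Stand-in for a network call; `service` and `delay` are made up."""
    await asyncio.sleep(delay)  # yields control while "waiting on the network"
    return f"{service}: ok"


async def check_all():
    # gather() runs the coroutines concurrently and keeps the input order.
    return await asyncio.gather(
        fetch_status("storage", 0.02),
        fetch_status("queue", 0.01),
        fetch_status("database", 0.03),
    )


results = asyncio.run(check_all())
print(results)
```

Because the three waits overlap, the total time is close to the longest single delay rather than the sum of all three, which is why this style suits I/O-heavy cloud applications.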

Continuous Development and Enhancement:

Python is still being developed; new features, enhancements, and optimizations are added on a regular basis. The maintainers of the language regularly solicit community input to keep Python current and adaptable to the changing needs of developers. Python's longevity and ability to stay at the forefront of technical breakthroughs can be attributed to this dedication to ongoing development.

The Future of Python:

The future of Python seems more promising than it has ever been. With improvements in concurrency, performance optimization, and support for future technologies, the language is still developing. Industry demand for Python expertise is rising, suggesting that the language's heyday is still very much alive. Python is positioned to be a key player in determining the direction of software development as emerging technologies like edge computing, quantum computing, and artificial intelligence continue to gain traction.

Conclusion:

To sum up, Python is a versatile language that is widely used in a variety of sectors and is developed by the community. Python is now a staple of contemporary programming, used in everything from artificial intelligence to web development. The language is a favorite among developers of all skill levels because of its simplicity and strong capabilities. The Python era invites you to a vibrant and constantly growing community, whatever your experience level with programming. Python courses in Noida offer a great starting place for anybody looking to start a learning journey into the broad and fascinating world of Python programming.

Source Link: https://teletype.in/@vijay121/Wj1LWvwXTgz

2 notes

Text

How you can use Python for data wrangling and analysis

Python is a powerful and versatile programming language that can be used for various purposes, such as web development, data science, machine learning, automation, and more. One of the most popular applications of Python is data analysis, which involves processing, cleaning, manipulating, and visualizing data to gain insights and make decisions.

In this article, we will introduce some of the basic concepts and techniques of data analysis using Python, focusing on the data wrangling and analysis process. Data wrangling is the process of transforming raw data into a more suitable format for analysis, while data analysis is the process of applying statistical methods and tools to explore, summarize, and interpret data.

To perform data wrangling and analysis with Python, we will use two of the most widely used libraries: Pandas and NumPy. Pandas is a library that provides high-performance data structures and operations for manipulating tabular data, such as Series and DataFrame. NumPy is a library that provides fast and efficient numerical computations on multidimensional arrays, such as ndarray.
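As a quick sketch of how these three structures relate, the snippet below builds a Series, a DataFrame, and the ndarray underneath it. The values and the name `df_demo` are invented for illustration and are not part of the WHO data used later.

```python
import numpy as np
import pandas as pd

# A Series is a one-dimensional labeled array.
cases = pd.Series([10, 25, 40], index=["Mon", "Tue", "Wed"], name="cases")

# A DataFrame is a table whose columns are Series sharing one index.
df_demo = pd.DataFrame(
    {"cases": [10, 25, 40], "deaths": [0, 1, 2]},
    index=["Mon", "Tue", "Wed"],
)

# The values can be exposed as a NumPy ndarray for vectorized math.
values = df_demo.to_numpy()
totals = values.sum(axis=0)  # column sums

print(cases["Tue"], values.shape, totals)
```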

We will also use some other libraries that are useful for data analysis, such as Matplotlib and Seaborn for data visualization, SciPy for scientific computing, and Scikit-learn for machine learning.

To follow along with this article, you will need to have Python 3.6 or higher installed on your computer, as well as the libraries mentioned above. You can install them using pip or conda commands. You will also need a code editor or an interactive environment, such as Jupyter Notebook or Google Colab.

Let’s get started with some examples of data wrangling and analysis with Python.

Example 1: Analyzing COVID-19 Data

In this example, we will use Python to analyze the COVID-19 data from the World Health Organization (WHO). The data contains the daily situation reports of confirmed cases and deaths by country from January 21, 2020 to October 23, 2023. You can download the data from here.

First, we need to import the libraries that we will use:

    import pandas as pd
    import numpy as np
    import matplotlib.pyplot as plt
    import seaborn as sns

Next, we need to load the data into a Pandas DataFrame:

    df = pd.read_csv('WHO-COVID-19-global-data.csv')

We can use the head() method to see the first five rows of the DataFrame:

    df.head()

| Date_reported | Country_code | Country | WHO_region | New_cases | Cumulative_cases | New_deaths | Cumulative_deaths |
|---|---|---|---|---|---|---|---|
| 2020-01-21 | AF | Afghanistan | EMRO | 0 | 0 | 0 | 0 |
| 2020-01-22 | AF | Afghanistan | EMRO | 0 | 0 | 0 | 0 |
| 2020-01-23 | AF | Afghanistan | EMRO | 0 | 0 | 0 | 0 |
| 2020-01-24 | AF | Afghanistan | EMRO | 0 | 0 | 0 | 0 |
| 2020-01-25 | AF | Afghanistan | EMRO | 0 | 0 | 0 | 0 |

We can use the info() method to see some basic information about the DataFrame, such as the number of rows and columns, the data types of each column, and the memory usage:

    df.info()

Output:

    <class 'pandas.core.frame.DataFrame'>
    RangeIndex: 163800 entries, 0 to 163799
    Data columns (total 8 columns):
     #   Column             Non-Null Count   Dtype
    ---  ------             --------------   -----
     0   Date_reported      163800 non-null  object
     1   Country_code       162900 non-null  object
     2   Country            163800 non-null  object
     3   WHO_region         163800 non-null  object
     4   New_cases          163800 non-null  int64
     5   Cumulative_cases   163800 non-null  int64
     6   New_deaths         163800 non-null  int64
     7   Cumulative_deaths  163800 non-null  int64
    dtypes: int64(4), object(4)
    memory usage: 10.0+ MB

We can see that there are some missing values in the Country_code column. We can use the isnull() method to check which rows have missing values:

    df[df.Country_code.isnull()]

Output:

| Date_reported | Country_code | Country | WHO_region | New_cases | Cumulative_cases | New_deaths | Cumulative_deaths |
|---|---|---|---|---|---|---|---|
| 2020-01-21 | NaN | International conveyance (Diamond Princess) | WPRO | 0 | 0 | 0 | 0 |
| 2020-01-22 | NaN | International conveyance (Diamond Princess) | WPRO | 0 | 0 | 0 | 0 |
| … | … | … | … | … | … | … | … |
| 2023-10-22 | NaN | International conveyance (Diamond Princess) | WPRO | 0 | 712 | 0 | 13 |
| 2023-10-23 | NaN | International conveyance (Diamond Princess) | WPRO | 0 | 712 | 0 | 13 |

We can see that the missing values are from the rows that correspond to the International conveyance (Diamond Princess), which is a cruise ship that had a COVID-19 outbreak in early 2020. Since this is not a country, we can either drop these rows or assign them a unique code, such as ‘IC’. For simplicity, we will drop these rows using the dropna() method:

    df = df.dropna()
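For comparison, here is a minimal sketch of both options on a toy DataFrame. The rows and numbers are invented and only imitate the layout of the WHO file; the 'IC' code is the placeholder suggested above.

```python
import numpy as np
import pandas as pd

# Toy rows that imitate the structure of the WHO file; the numbers are invented.
toy = pd.DataFrame({
    "Country_code": ["AF", np.nan, "IN"],
    "Country": ["Afghanistan", "International conveyance (Diamond Princess)", "India"],
    "New_cases": [5, 0, 12],
})

# Option 1: drop rows only where Country_code is missing.
dropped = toy.dropna(subset=["Country_code"])

# Option 2: keep the rows and give them a placeholder code instead.
filled = toy.assign(Country_code=toy["Country_code"].fillna("IC"))

print(len(dropped), filled["Country_code"].tolist())
```

Calling dropna() without arguments removes every row that has a missing value in any column, so passing `subset` is a safer habit when only one column matters.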

We can also check the data types of each column using the dtypes attribute:

    df.dtypes

Output:

    Date_reported        object
    Country_code         object
    Country              object
    WHO_region           object
    New_cases             int64
    Cumulative_cases      int64
    New_deaths            int64
    Cumulative_deaths     int64
    dtype: object

We can see that the Date_reported column is of type object, which means it is stored as a string. However, we want to work with dates as a datetime type, which allows us to perform date-related operations and calculations. We can use the to_datetime() function to convert the column to a datetime type:

    df.Date_reported = pd.to_datetime(df.Date_reported)
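To show what the conversion buys us, here is a small sketch on invented data. Once the column is a datetime type, the `.dt` accessor, date comparisons, and date arithmetic all become available.

```python
import pandas as pd

# Invented rows; only the column names follow the WHO file.
toy = pd.DataFrame({
    "Date_reported": ["2020-01-21", "2020-02-15", "2021-03-01"],
    "New_cases": [0, 3, 7],
})
toy["Date_reported"] = pd.to_datetime(toy["Date_reported"])

# With a datetime column, the .dt accessor exposes calendar fields...
toy["year"] = toy["Date_reported"].dt.year

# ...and comparisons against date strings work as date filters.
after_2020 = toy[toy["Date_reported"] >= "2021-01-01"]

# Subtracting two dates gives a Timedelta.
span_days = (toy["Date_reported"].max() - toy["Date_reported"].min()).days

print(toy["year"].tolist(), len(after_2020), span_days)
```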

We can also use the describe() method to get some summary statistics of the numerical columns, such as the mean, standard deviation, minimum, maximum, and quartiles:

    df.describe()

Output:

| | New_cases | Cumulative_cases | New_deaths | Cumulative_deaths |
|---|---|---|---|---|
| count | 162900.000000 | 162900.000000 | 162900.000000 | 162900.000000 |
| mean | 1138.300062 | 116955.140160 | 23.486789 | 2647.346237 |
| std | 6631.825489 | 665728.383017 | 137.256012 | 15435.833525 |
| min | -32952.000000 | -32952.000000 | -1918.000000 | -1918.000000 |
| 25% | -1.000000 | -1.000000 | -1.000000 | -1.000000 |
| 50% | -1.000000 | -1.000000 | -1.000000 | -1.000000 |
| 75% | -1.000000 | -1.000000 | -1.000000 | -1.000000 |
| max | -1 | -1 | -1 | -1 |

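We can see that there are some negative values in the New_cases, Cumulative_cases, New_deaths, and Cumulative_deaths columns, which are likely due to data errors or corrections. The replace() method would only catch one specific value (for example, replace(-1, 0) changes only values equal to -1), so to set every negative value to zero we can clip the numeric columns at a lower bound of zero:

    num_cols = ['New_cases', 'Cumulative_cases', 'New_deaths', 'Cumulative_deaths']
    df[num_cols] = df[num_cols].clip(lower=0)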

Now that we have cleaned and prepared the data, we can start to analyze it and answer some questions, such as:

Which countries have the highest number of cumulative cases and deaths?

How has the pandemic evolved over time in different regions and countries?

What is the current situation of the pandemic in India?

To answer these questions, we will use some of the methods and attributes of Pandas DataFrame, such as:

groupby() : This method allows us to group the data by one or more columns and apply aggregation functions, such as sum, mean, count, etc., to each group.

sort_values() : This method allows us to sort the data by one or more columns, in ascending or descending order.

loc[] : This attribute allows us to select a subset of the data by labels or conditions.

plot() : This method allows us to create various types of plots from the data, such as line, bar, pie, scatter, etc.
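The methods listed above can be sketched on a small, invented DataFrame that uses the same column names as the WHO file. The figures are made up purely to keep the example self-contained, so the results say nothing about the real pandemic data.

```python
import pandas as pd

# Invented numbers in the same column layout as the WHO file.
toy = pd.DataFrame({
    "Date_reported": pd.to_datetime(["2023-10-22", "2023-10-23"] * 3),
    "Country": ["India", "India", "Brazil", "Brazil", "Kenya", "Kenya"],
    "WHO_region": ["SEARO", "SEARO", "AMRO", "AMRO", "AFRO", "AFRO"],
    "New_cases": [40, 60, 30, 20, 5, 10],
    "Cumulative_cases": [1000, 1060, 800, 820, 90, 100],
})

# groupby + max: cumulative total per country; sort_values: rank them.
top = (
    toy.groupby("Country")["Cumulative_cases"]
    .max()
    .sort_values(ascending=False)
)

# groupby on two keys: new cases per region per day.
by_region = toy.groupby(["WHO_region", "Date_reported"])["New_cases"].sum()

# loc with a boolean condition, then loc by label: latest row for one country.
india = toy.loc[toy["Country"] == "India"]
latest_india = india.loc[india["Date_reported"].idxmax()]

print(top)
print(latest_india["Cumulative_cases"])
```

With Matplotlib installed, a call such as `top.plot(kind="bar")` would turn the ranked totals into a bar chart.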

If you want to learn Python from scratch, check out e-Tuitions to learn Python online. They teach Python and other programming languages, they have some of the best teachers for their students, and you can book a free demo for any class.

#python#coding#programming#programming languages#python tips#python learning#python programming#python development

2 notes

Text

DataFrame in Pandas: Guide to Creating Awesome DataFrames

Explore how to create a dataframe in Pandas, including data input methods, customization options, and practical examples.

Data analysis used to be a daunting task, reserved for statisticians and mathematicians. But with the rise of powerful tools like Python and its fantastic library, Pandas, anyone can become a data whiz! Pandas, in particular, shines with its DataFrames, these nifty tables that organize and manipulate data like magic. But where do you start? Fear not, fellow data enthusiast, for this guide will…

View On WordPress

#advanced dataframe features#aggregating data in pandas#create dataframe from dictionary in pandas#create dataframe from list in pandas#create dataframe in pandas#data manipulation in pandas#dataframe indexing#filter dataframe by condition#filter dataframe by multiple conditions#filtering data in pandas#grouping data in pandas#how to make a dataframe in pandas#manipulating data in pandas#merging dataframes#pandas data structures#pandas dataframe tutorial#python dataframe basics#rename columns in pandas dataframe#replace values in pandas dataframe#select columns in pandas dataframe#select rows in pandas dataframe#set column names in pandas dataframe#set row names in pandas dataframe

0 notes

Text

The Power of Python: How Python Development Services Transform Businesses

In the rapidly evolving landscape of technology, businesses are continuously seeking innovative solutions to gain a competitive edge. Python, a versatile and powerful programming language, has emerged as a game-changer for enterprises worldwide. Its simplicity, efficiency, and vast ecosystem of libraries have made Python development services a catalyst for transformation. In this blog, we will explore the significant impact Python has on businesses and how it can revolutionize their operations.

Python's Versatility:

Python's versatility is one of its key strengths, enabling businesses to leverage it for a wide range of applications. From web development to data analysis, artificial intelligence to automation, Python can handle diverse tasks with ease. This adaptability allows businesses to streamline their processes, improve productivity, and explore new avenues for growth.

Rapid Development and Time-to-Market:

Python's clear and concise syntax accelerates the development process, reducing the time to market products and services. With Python, developers can create robust applications in a shorter timeframe compared to other programming languages. This agility is especially crucial in fast-paced industries where staying ahead of the competition is essential.

Cost-Effectiveness:

Python's open-source nature eliminates the need for expensive licensing fees, making it a cost-effective choice for businesses. Moreover, the availability of a vast and active community of Python developers ensures that businesses can find affordable expertise for their projects. This cost-efficiency is particularly advantageous for startups and small to medium-sized enterprises.

Data Analysis and Insights:

In the era of big data, deriving valuable insights from vast datasets is paramount for making informed business decisions. Python's libraries like NumPy, Pandas, and Matplotlib provide powerful tools for data manipulation, analysis, and visualization. Python's data processing capabilities empower businesses to uncover patterns, trends, and actionable insights from their data, leading to data-driven strategies and increased efficiency.

Web Development and Scalability:

Python's simplicity and robust frameworks like Django and Flask have made it a popular choice for web development. Python-based web applications are known for their scalability, allowing businesses to handle growing user demands without compromising performance. This scalability ensures a seamless user experience, even during peak traffic periods.

Machine Learning and Artificial Intelligence:

Python's dominance in the field of artificial intelligence and machine learning is undeniable. Libraries like TensorFlow, Keras, and PyTorch have made it easier for businesses to implement sophisticated machine learning algorithms into their processes. With Python, businesses can harness the power of AI to automate tasks, predict trends, optimize processes, and personalize user experiences.

Automation and Efficiency:

Python's versatility extends to automation, making it an ideal choice for streamlining repetitive tasks. From automating data entry and report generation to managing workflows, Python development services can help businesses save time and resources, allowing employees to focus on more strategic initiatives.

Integration and Interoperability:

Many businesses have existing systems and technologies in place. Python's seamless integration capabilities allow it to work in harmony with various platforms and technologies. This interoperability simplifies the process of integrating Python solutions into existing infrastructures, preventing disruptions and reducing implementation complexities.

Security and Reliability:

Python's strong security features and active community support contribute to its reliability as a programming language. Businesses can rely on Python development services to build secure applications that protect sensitive data and guard against potential cyber threats.

Conclusion:

Python's rising popularity in the business world is a testament to its transformative power. From enhancing development speed and reducing costs to enabling data-driven decisions and automating processes, Python development services have revolutionized the way businesses operate. Embracing Python empowers enterprises to stay ahead in an ever-changing technological landscape and achieve sustainable growth in the digital era. Whether you're a startup or an established corporation, harnessing the potential of Python can unlock a world of possibilities and take your business to new heights.

2 notes

Text

How Learning Python Can Transform Your Career in 2025

In 2025, tech skills are evolving faster than ever — and Python has become the top programming language powering the future of artificial intelligence and machine learning. Whether you're a beginner or looking to upskill, learning Python for AI and ML could be the career move that sets you apart in this competitive job market.

Key Benefits of Learning Python for AI & ML in 2025

Future-Proof Skill

As automation and AI become integral to every industry, Python fluency gives you a competitive edge in an AI-first world.

Beginner-Friendly Yet Powerful

You don’t need a computer science degree to learn Python. It’s perfect for non-tech professionals transitioning into tech careers.

Freelance and Remote Opportunities

Python developers working in AI and ML are in high demand on platforms like Upwork and Toptal, and many earn six-figure incomes while working remotely.

Community and Resources

With massive open-source support, free tutorials, and active forums, you can learn Python for AI even without formal education.

Roadmap: Python for AI and Machine Learning

Master the Basics: Start with variables, data types, loops, functions, and object-oriented programming in Python.

Understand Data Science Foundations: Learn to work with Pandas, NumPy, and Matplotlib for data preprocessing and visualization.

Dive into Machine Learning: Explore supervised and unsupervised learning using Scikit-learn, then graduate to TensorFlow and PyTorch for deep learning.

Build Real Projects: Hands-on experience is key. Start building real-world applications like:

Spam email classifier

Stock price predictor

Chatbot using NLP

Why Python Is the Best Language for AI & Machine Learning

Python's simplicity, vast libraries, and flexibility make it the best programming language for artificial intelligence. With intuitive syntax and community support, it's a favorite among data scientists, developers, and AI engineers.

✅ High-demand Python libraries in AI:

TensorFlow and Keras – deep learning models

Scikit-learn – machine learning algorithms

Pandas & NumPy – data analysis and manipulation

Matplotlib & Seaborn – data visualization

These tools allow developers to build everything from predictive models to smart recommendation systems all using Python.

Career Opportunities After Learning Python for AI

If you're wondering how Python for AI and ML can shape your future, consider this: tech companies, startups, and even non-tech industries are hiring for roles like:

Machine Learning Engineer

AI Developer

Data Scientist

Python Automation Engineer

NLP (Natural Language Processing) Specialist

According to LinkedIn and Glassdoor, these roles are not just high-paying but are also projected to grow rapidly through 2030.

Best Courses to Learn Python for AI & ML in 2025

Google AI with Python (Free course on Coursera)

Python course With SKILL BABU

IBM Applied AI Certification

Udemy: Python for Machine Learning & Data Science

Fast.ai Deep Learning Courses (Free)

These programs offer certifications that can boost your resume and help you stand out to employers.

Conclusion: Choose Your Best Career with Python in 2025

If you’re looking to stay ahead in 2025’s job market, learning Python for AI and machine learning is more than a smart move; it’s a career game-changer. With endless growth opportunities, high-paying roles, and the chance to work on cutting-edge technology, Python opens doors to a future-proof tech career.

Start today. The future is written in Python.

#python#app development company#PythonForAI#MachineLearning2025#LearnPython#TechCareers#AIin2025#Python Programming#Learn AI in 2025#Machine Learning Career#Future Tech Skills#Python for Beginners

0 notes

Text

Inside the Course: What You'll Learn in GVT Academy's Data Analyst Program with AI and VBA

If you're searching for the Best Data Analyst Course with VBA using AI in Noida, GVT Academy offers a cutting-edge curriculum designed to equip you with the skills employers want in 2025. In an age where data is king, the ability to analyze, automate, and visualize information is what separates good analysts from great ones.

Let’s explore the modules inside this powerful course — from basic tools to advanced technologies — all designed with real-world outcomes in mind.

Module 1: Advanced Excel – Master the Basics, Sharpen the Edge

You start with Advanced Excel, a must-have tool for every data analyst. This module helps you upgrade your skills from intermediate to advanced level with:

Advanced formulas like XLOOKUP, IFERROR, and nested functions

Data cleaning techniques using Power Query

Creating interactive dashboards with Pivot Tables

Case-based learning from real business scenarios

This strong foundation ensures you're ready to dive deeper into automation and analytics.

Module 2: VBA Programming – Automate Your Data Workflow

Visual Basic for Applications (VBA) is a game-changer when it comes to saving time. Here’s what you’ll learn:

Automate tasks with macros and loops

Build interactive forms for better data entry

Develop automated reporting tools

Integrate Excel with external databases or emails

This module gives you a serious edge by teaching real-time automation for daily tasks, making you stand out in interviews and on the job.

Module 3: Artificial Intelligence for Analysts – Data Meets Intelligence

This is where things get futuristic. You’ll learn how AI is transforming data analysis:

Basics of machine learning with simple use cases

Use AI tools (like ChatGPT or Excel Copilot) to write smarter formulas

Forecast sales or trends using Python-based models

Explore AI in data cleaning, classification, and clustering

GVT Academy blends the power of AI and VBA to offer a standout Data Analyst Course in Noida, designed to help students gain a competitive edge in the job market.

Module 4: SQL – Speak the Language of Databases

Data lives in databases, and SQL helps you retrieve it efficiently. This module focuses on:

Writing SELECT, JOIN, and GROUP BY queries

Creating views, functions, and subqueries

Connecting SQL output directly to Excel and Power BI

Handling large volumes of structured data

You’ll practice on real datasets and become fluent in working with enterprise-level databases.
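As a rough idea of what such queries look like, here is a minimal sketch that runs a JOIN with GROUP BY against a throwaway in-memory SQLite database from Python. The table names, columns, and values are invented for illustration and are not taken from the course material.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, city TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Noida'), (2, 'Delhi');
    INSERT INTO orders VALUES (1, 1, 100.0), (2, 1, 50.0), (3, 2, 75.0);
""")

# JOIN links each order to its customer; GROUP BY totals the amounts per city.
rows = conn.execute("""
    SELECT c.city, SUM(o.amount) AS total
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    GROUP BY c.city
    ORDER BY total DESC
""").fetchall()
conn.close()

print(rows)
```

The same SELECT, JOIN, and GROUP BY pattern carries over to enterprise databases, although connection details and some syntax differ between systems.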

Module 5: Power BI – Turn Data into Stories

More than numbers, data analysis is about discovering what the numbers truly mean. In the Power BI module, you'll:

Import, clean, and model data

Create interactive dashboards for business reporting

Use DAX functions to create calculated metrics

Publish and share reports using Power BI Service

By mastering Power BI, you'll learn to tell data-driven stories that influence business decisions.

Module 6: Python – The Language of Modern Analytics

Python is one of the most in-demand skills for data analysts, and this module helps you get hands-on:

Python fundamentals: Variables, loops, and functions

Working with Pandas, NumPy, and Matplotlib

Data manipulation, cleaning, and visualization

Introduction to machine learning with Scikit-Learn

Even if you have no coding background, GVT Academy ensures you learn Python in a beginner-friendly and project-based manner.

Course Highlights That Make GVT Academy #1

👨🏫 Expert mentors with industry experience

🧪 Real-life projects for each module

💻 Live + recorded classes for flexible learning

💼 Placement support and job preparation sessions

📜 Certification recognized by top recruiters

Every module is designed with job-readiness in mind, not just theory.

Who Should Join This Course?

This course is perfect for:

Freshers wanting a high-paying career in analytics

Working professionals in finance, marketing, or operations

B.Com, BBA, and MBA graduates looking to upskill

Anyone looking to switch to data-driven roles

Final Words

If you're looking to future-proof your career, this course is your launchpad. With six powerful modules and job-focused training, GVT Academy is proud to offer the Best Data Analyst Course with VBA using AI in Noida — practical, placement-driven, and perfect for 2025.

📞 Don’t Miss Out – Limited Seats. Enroll Now with GVT Academy and Transform Your Career!

1. Google My Business: http://g.co/kgs/v3LrzxE

2. Website: https://gvtacademy.com

3. LinkedIn: www.linkedin.com/in/gvt-academy-48b916164

4. Facebook: https://www.facebook.com/gvtacademy

5. Instagram: https://www.instagram.com/gvtacademy/

6. X: https://x.com/GVTAcademy

7. Pinterest: https://in.pinterest.com/gvtacademy

8. Medium: https://medium.com/@gvtacademy

#gvt academy#data analytics#advanced excel training#data science#python#sql course#advanced excel training institute in noida#best powerbi course#power bi#advanced excel#vba

0 notes

Text

How Can Beginners Master Python For AI Projects?

Mastering Python for AI as a beginner involves a step-by-step learning approach. Start with Python basics: data types, loops, conditionals, and functions. Once comfortable, move to intermediate topics like object-oriented programming, file handling, and error management. After this foundation, explore libraries essential for AI such as NumPy (for numerical operations), pandas (for data manipulation), and Matplotlib (for data visualization).

The next phase includes diving into machine learning libraries like Scikit-learn and deep learning frameworks such as TensorFlow or PyTorch. Practice is key: work on mini-projects like spam detection, movie recommendation, or simple image classification. These projects help apply theory to real-world problems. You can also explore platforms like Kaggle to find datasets and challenges.

Understanding AI concepts like supervised/unsupervised learning, neural networks, and model evaluation is crucial. Combine your coding knowledge with a basic understanding of statistics and linear algebra to grasp AI fundamentals better. Lastly, consistency is essential: code daily and read documentation regularly.
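To see how supervised learning, model evaluation, and linear algebra fit together, here is a minimal sketch that uses only NumPy. It fits a straight line to synthetic data with least squares and then measures the error on held-out points; the data, the seed, and the 80/20 split are all arbitrary choices made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=100)
y = 3.0 * x + 2.0 + rng.normal(0, 1.0, size=100)  # synthetic data with noise

# Hold out the last 20 points to evaluate on data the model has not seen.
x_train, x_test = x[:80], x[80:]
y_train, y_test = y[:80], y[80:]

# Least squares: solve for slope and intercept in y ~ slope * x + intercept.
A = np.column_stack([x_train, np.ones_like(x_train)])
(slope, intercept), *_ = np.linalg.lstsq(A, y_train, rcond=None)

# Model evaluation: mean squared error on the held-out points.
pred = slope * x_test + intercept
mse = np.mean((pred - y_test) ** 2)

print(slope, intercept, mse)
```

Libraries such as Scikit-learn wrap these same steps (fit, predict, score) behind a common interface, so working through them once by hand makes the later tools easier to follow.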

If you're just starting out, a structured Python course for beginners can be a good foundation.

0 notes

Text

How to Make AI: A Guide to An AI Developer’s Tech Stack

Globally, artificial intelligence (AI) is revolutionizing a wide range of industries, including healthcare and finance. Knowing the appropriate tools and technologies is crucial if you want to get into AI development. A well-organized tech stack can make all the difference, regardless of your level of experience as a developer. The top IT services in Qatar can assist you in successfully navigating AI development if you require professional advice.

Knowing the Tech Stack for AI Development

Programming languages, frameworks, cloud services, and hardware resources are all necessary for AI development. Let's examine the key elements of a tech stack used by an AI developer.

1. Programming Languages for the Development of AI

The first step in developing AI is selecting the appropriate programming language. Among the languages that are most frequently used are:

• Python: The most widely used language for artificial intelligence (AI) and machine learning (ML), thanks to its many libraries, including TensorFlow, PyTorch, and Scikit-Learn.
• R: Well suited to data analysis and statistical computing.
• Java: Used in big data solutions and enterprise AI applications.
• C++: Suited to AI-powered gaming apps and high-performance computing.

Integrating web design services with AI algorithms can improve automation and user experience when creating AI-powered web applications.

2. Frameworks for AI and Machine Learning

AI/ML frameworks offer pre-built features and tools that speed up development. Among the most widely used frameworks are:

• TensorFlow: Google's open-source deep learning library.
• PyTorch: A flexible deep learning framework that researchers prefer.
• Scikit-Learn: Well suited to conventional machine learning tasks such as regression and classification.
• Keras: A high-level neural network API built on TensorFlow.

Drawing on AI/ML software development expertise helps teams get the most out of these frameworks and stay ahead of AI innovation.

3. Tools for Data Processing and Management

Large datasets are necessary for AI model training and optimization. Among the most important tools for handling and processing AI data are:

• Pandas: A robust Python data manipulation library.
• Apache Spark: A distributed computing platform designed to process large datasets.
• Google BigQuery: A cloud data warehouse for storing and analyzing large datasets.
• Hadoop: An open-source framework for distributed storage and processing of large amounts of data.

To keep performance reliable, AI developers need powerful data processing capabilities, which are frequently offered by the top IT services in Qatar.

4. AI Development Cloud Platforms

Because it offers scalable resources and computational power, cloud computing is essential to the development of AI. Well-known cloud platforms include:

• Google Cloud AI: Provides AI development tools, AutoML, and pre-trained models.
• Microsoft Azure AI: Offers AI-driven automation, cognitive APIs, and machine learning services.
• Amazon Web Services (AWS) AI: Offers computing resources, AI-powered APIs, and deep learning AMIs.

Integrating cloud services with web design services facilitates the smooth deployment and upkeep of AI-powered web applications.

5. AI Hardware and Infrastructure

The development of AI demands a lot of processing power. Important pieces of hardware include:

• GPUs (Graphics Processing Units): Crucial for AI training and deep learning.
• Tensor Processing Units (TPUs): Google's hardware accelerators designed specifically for AI.
• Edge Computing Devices: Used to deploy AI models on mobile and Internet of Things devices.

To maximize hardware utilization, companies looking to implement AI should think about hiring professionals to develop AI/ML software.

Top Techniques for AI Development

1. Choosing the Appropriate AI Model

Depending on the needs of your project, select between supervised, unsupervised, and reinforcement learning models.

2. Preprocessing and Augmenting Data

To decrease bias and increase model accuracy, clean and normalize the data.
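A minimal sketch of the cleaning and normalizing step is shown below, assuming missing values are represented as `None` and that min-max scaling to the range 0 to 1 is the chosen normalization. Both are assumptions made for this example, the values are invented, and the function names are hypothetical. Other strategies, such as imputing missing values or standardizing to zero mean, are equally common.

```python
def clean(values):
    """Drop missing entries (None) from a list of readings."""
    return [v for v in values if v is not None]


def min_max_normalize(values):
    """Rescale values linearly so the smallest maps to 0.0 and the largest to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: avoid dividing by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Invented raw data with two missing readings.
raw = [10.0, None, 20.0, 15.0, None, 30.0]

cleaned = clean(raw)
normalized = min_max_normalize(cleaned)

print(cleaned)
print(normalized)
```

Putting features on a common scale keeps any single large-valued column from dominating distance-based or gradient-based models.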

3. Constant Model Training and Improvement

For improved performance, AI models should be updated frequently with fresh data.

4. Ensuring Ethical AI Procedures

To avoid bias, maintain transparency, and promote fairness, follow AI ethics guidelines.

In conclusion

A strong tech stack, comprising cloud services, ML frameworks, programming languages, and hardware resources, is necessary for AI development. Working with the top IT services in Qatar can give you the know-how required to create and implement AI solutions successfully, regardless of whether you're a business or an individual developer wishing to use AI. Furthermore, combining AI capabilities with web design services can improve automation, productivity, and user experience. Custom AI solutions and AI/ML software development are our areas of expertise at Aamal Technology Solutions. Get in touch with us right now to find out how AI can transform your company!

#Best IT Service Provider in Qatar#Top IT Services in Qatar#IT services in Qatar#web designing services in qatar#web designing services#Mobile App Development#Mobile App Development services in qatar

0 notes