#pandas data structures


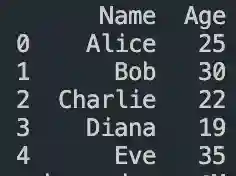

DataFrame in Pandas: Guide to Creating Awesome DataFrames

Explore how to create a dataframe in Pandas, including data input methods, customization options, and practical examples.

Data analysis used to be a daunting task, reserved for statisticians and mathematicians. But with the rise of powerful tools like Python and its fantastic library, Pandas, anyone can become a data whiz! Pandas, in particular, shines with its DataFrames, these nifty tables that organize and manipulate data like magic. But where do you start? Fear not, fellow data enthusiast, for this guide will…
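The excerpt stops before any code. Here is a minimal sketch, not taken from the linked guide, covering a few of the tagged topics: building a DataFrame from a dictionary and from a list, renaming columns, setting row labels, and filtering by one or more conditions. The names and scores are made up.

```python
import pandas as pd

# From a dictionary: each key becomes a column name, each list becomes that column
scores_dict = {"name": ["Ana", "Ben", "Cy"], "score": [88, 92, 79]}
df_from_dict = pd.DataFrame(scores_dict)

# From a list of rows: column names are passed separately
rows = [["Ana", 88], ["Ben", 92], ["Cy", 79]]
df_from_list = pd.DataFrame(rows, columns=["name", "score"])

# Both constructions should describe the same table
print(df_from_dict.equals(df_from_list))

# Rename a column, then use the "name" column as the row labels (the index)
df = df_from_dict.rename(columns={"score": "exam_score"}).set_index("name")

# Filter rows by one condition, then by two conditions combined with &
high = df[df["exam_score"] > 80]
both = df[(df["exam_score"] > 80) & (df.index != "Ben")]
print(high)
print(both)
```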


#advanced dataframe features#aggregating data in pandas#create dataframe from dictionary in pandas#create dataframe from list in pandas#create dataframe in pandas#data manipulation in pandas#dataframe indexing#filter dataframe by condition#filter dataframe by multiple conditions#filtering data in pandas#grouping data in pandas#how to make a dataframe in pandas#manipulating data in pandas#merging dataframes#pandas data structures#pandas dataframe tutorial#python dataframe basics#rename columns in pandas dataframe#replace values in pandas dataframe#select columns in pandas dataframe#select rows in pandas dataframe#set column names in pandas dataframe#set row names in pandas dataframe


I think that people are massively misunderstanding how "AI" works.

To summarize, AI like ChatGPT uses two things to determine a response: temperature and likeableness. (We explain these at the end.)

ChatGPT is made with the purpose of conversation, not accuracy (in most cases).

It is trained to communicate. It can do other things as well, like math. Basically, it has a calculator function.

It also has a translate function. Contrary to what people may think, Google Translate and ChatGPT both use AI. The difference is that ChatGPT is generative, while Google Translate uses "neural machine translation" (NMT).

Here is the difference between a generative LLM and an NMT system when translating, copy-pasted from Wikipedia:

Instead of using an NMT system that is trained on parallel text, one can also prompt a generative LLM to translate a text. These models differ from an encoder-decoder NMT system in a number of ways:

Generative language models are not trained on the translation task, let alone on a parallel dataset. Instead, they are trained on a language modeling objective, such as predicting the next word in a sequence drawn from a large dataset of text. This dataset can contain documents in many languages, but is in practice dominated by English text. After this pre-training, they are fine-tuned on another task, usually to follow instructions.

Since they are not trained on translation, they also do not feature an encoder-decoder architecture. Instead, they just consist of a transformer's decoder.

In order to be competitive on the machine translation task, LLMs need to be much larger than other NMT systems. E.g., GPT-3 has 175 billion parameters, while mBART has 680 million and the original transformer-big has “only” 213 million. This means that they are computationally more expensive to train and use.

A generative LLM can be prompted in a zero-shot fashion by just asking it to translate a text into another language without giving any further examples in the prompt. Or one can include one or several example translations in the prompt before asking to translate the text in question. This is then called one-shot or few-shot learning, respectively.
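Zero-shot versus few-shot prompting comes down to what goes into the prompt text. Here's a minimal sketch, assuming a hypothetical helper `build_translation_prompt` and a made-up "Text:/Translation:" layout. No model is called, and no particular LLM requires this format.

```python
def build_translation_prompt(text, target_lang, examples=None):
    """Build a zero-shot prompt (no examples) or a few-shot prompt (with examples).

    `examples` is a list of (source, translation) pairs. The layout used here is
    a hypothetical format chosen for illustration.
    """
    lines = [f"Translate the following text into {target_lang}."]
    for source, translation in examples or []:
        # Each worked example shows the model the expected input/output pattern
        lines.append(f"Text: {source}\nTranslation: {translation}")
    # The last entry leaves the translation blank for the model to complete
    lines.append(f"Text: {text}\nTranslation:")
    return "\n\n".join(lines)

zero_shot = build_translation_prompt("Good morning", "French")
one_shot = build_translation_prompt(
    "Good morning", "French", examples=[("Thank you", "Merci")]
)
print(zero_shot)
print(one_shot)
```

With one example this is one-shot; passing several pairs makes it few-shot.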

Anyway, they both use AI.

But as mentioned above, generative AI like ChatGPT is made with the intent of responding well to the user. Who cares if the information is accurate as long as the user is happy? The only thing ChatGPT is worried about is whether the sentence structure is correct.

ChatGPT can source answers to questions from its available data.

... But most of that data is English.

If you're asking a question about what something is like in Japan, you're asking a machine whose primary goal is to make its user happy what the mostly American (but sure, some other English-speaking countries) internet thinks something is like in Japan. (This is why there are errors where AI starts getting extremely racist, ableist, transphobic, homophobic, etc.)

Every time you ask ChatGPT a question, you are asking not "Do pandas eat waffles?" but "Do you think (probably an) American would think that pandas eat waffles? (respond as if you were a very robotic American)"

In this article, OpenAI says "We use broad and diverse data to build the best AI for everyone."

In this article, they say "51.3% pages are hosted in the United States. The countries with the estimated 2nd, 3rd, 4th largest English speaking populations—India, Pakistan, Nigeria, and The Philippines—have only 3.4%, 0.06%, 0.03%, 0.1% the URLs of the United States, despite having many tens of millions of English speakers." ...and that training data makes up 60% of ChatGPT's data.

Something called "WebText2", aka Everything Linked from Reddit Posts with at Least 3 Upvotes, was also scraped for ChatGPT. On a totally unrelated note, I really wonder why AI is so racist, ableist, homophobic, and transphobic.

According to the article, this data is the most heavily weighted for ChatGPT.

"Books1" and "Books2" are stolen books scraped for AI. Apparently, there is practically nothing written down about what they are. I wonder why. It's almost as if they're avoiding the law.

It's also specifically trained on English Wikipedia.

So broad and diverse.

"ChatGPT doesn’t know much about Norwegian culture. Or rather, whatever it knows about Norwegian culture is presumably mostly learned from English language sources. It translates that into Norwegian on the fly."

hm.

Anyway, about the temperature and likeableness that we mentioned in the beginning!! If you already know this, feel free to skip lolz

Temperature:

"Temperature" controls how willing the AI is to pick an unlikely next word. If the temperature is low, the AI will say whatever word is most expected to come next after ___, as long as it makes sense.

If the temperature is high, it might say something unexpected.

For example, suppose one AI runs at a temperature of 1 and another at a much higher temperature (say 7, just for illustration), and both are told to continue a sentence that starts with "The lazy fox..." They might answer like this:

1:

The lazy fox jumps over the...

7:

The lazy fox spontaneously danced.

The AI with a temperature of 1 gives what it expects: in its data, "fox" and "jumps" are close together / related (because of the common sentence "The quick brown fox jumps over the lazy dog."), and so are "jumps" and "over".

The AI with a temperature of 7 gives something much more random. "Fox" and "spontaneously" are probably very far apart. "Spontaneously" and "danced"? Probably closer.
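Under the hood, temperature is usually applied by dividing the model's raw next-word scores (logits) by the temperature and then turning them into probabilities with a softmax. A low temperature sharpens the distribution toward the top word. A high temperature flattens it, so unlikely words get picked more often. Here's a minimal sketch with invented words and scores, not any real model's numbers:

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores (logits) into probabilities, scaled by temperature."""
    scaled = [score / temperature for score in logits]
    top = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical next-word scores after "The lazy fox ..."
words = ["jumps", "runs", "sleeps", "spontaneously"]
logits = [4.0, 2.5, 2.0, 0.5]

for t in (0.5, 1.0, 7.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {w: round(p, 3) for w, p in zip(words, probs)})

# Sampling draws one word at random, weighted by those probabilities
rng = random.Random(0)
print(rng.choices(words, weights=softmax_with_temperature(logits, 7.0))[0])
```

Values like 7 are only for illustration. OpenAI's API, for example, accepts temperatures from 0 to 2.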

Likeableness:

AI wants all of its responses to be likeable. This works in two ways: a response must 1. be correct and 2. fit the guidelines the AI follows.

For example, an AI that tried to say "The bloody sword stabbed a frail child." would get flagged for being violent (bloody, stabbed).

An AI that tried to say "Flower butterfly petal bakery." would get flagged for being incorrect.

An AI that said "blood sword knife attack murder violence." would get flagged for both.

An AI's sentence gets approved when it is likeable and positive, and when it is grammatical and makes sense.

Sometimes likeableness matters less. Instead of the AI handling it itself, a separate filter usually screens out messages that are inappropriate.

Unless they mark words like "gay" and "evil" as inappropriate, AI can still be extremely homophobic. I'm pretty sure the likeability check is usually based on the individual words, not the meaning of the sentence.

When AI is trained, it is given a bunch of data and then given prompts to fill, which are marked good or bad.

"The horse shit was stinky."

"The horse had a beautiful mane."

...

...

...

Notice how none of this is "accuracy"? The only knowledge that AI like ChatGPT retains from scraping everything is how we speak, not what we know. You could ask AI who the 51st President of America "was" and it might say George Washington.

Google AI scrapes the web results for what you searched and summarizes them, which is almost always inaccurate.

soooo accurate. (it's not) (it's in 333 days, 14 hours)


Learning to code and becoming a data scientist without a background in computer science or mathematics is absolutely possible, but it will require dedication, time, and a structured approach. ✨👌🏻 🖐🏻Here’s a step-by-step guide to help you get started:

1. Start with the Basics:

- Begin by learning the fundamentals of programming. Choose a beginner-friendly programming language like Python, which is widely used in data science.

- Online platforms like Codecademy, Coursera, and Khan Academy offer interactive courses for beginners.

2. Learn Mathematics and Statistics:

- While you don’t need to be a mathematician, a solid understanding of key concepts like algebra, calculus, and statistics is crucial for data science.

- Platforms like Khan Academy and MIT OpenCourseWare provide free resources for learning math.

3. Online Courses and Tutorials:

- Enroll in online data science courses on platforms like Coursera, edX, Udacity, and DataCamp. Look for beginner-level courses that cover data analysis, visualization, and machine learning.

4. Structured Learning Paths:

- Follow structured learning paths offered by online platforms. These paths guide you through various topics in a logical sequence.

5. Practice with Real Data:

- Work on hands-on projects using real-world data. Websites like Kaggle offer datasets and competitions for practicing data analysis and machine learning.

6. Coding Exercises:

- Practice coding regularly to build your skills. Sites like LeetCode and HackerRank offer coding challenges that can help improve your programming proficiency.

7. Learn Data Manipulation and Analysis Libraries:

- Familiarize yourself with Python libraries like NumPy, pandas, and Matplotlib for data manipulation, analysis, and visualization.
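Here's a minimal sketch of how the three libraries from step 7 fit together, using made-up study-hours data:

```python
import io

import matplotlib
matplotlib.use("Agg")  # draw off-screen, so no display window is needed
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# NumPy: math on whole arrays at once (made-up study data)
hours = np.array([1, 2, 3, 4, 5])
scores = hours * 10 + 50  # element-wise, no explicit loop needed

# pandas: put the arrays into a labeled table and summarize it
df = pd.DataFrame({"hours": hours, "score": scores})
print(df.describe())

# Matplotlib: plot the relationship and save it as a PNG
fig, ax = plt.subplots()
ax.plot(df["hours"], df["score"], marker="o")
ax.set_xlabel("Hours studied")
ax.set_ylabel("Score")
buffer = io.BytesIO()
fig.savefig(buffer, format="png")  # fig.savefig("scores.png") would write a file instead
```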

For more, follow me on Instagram.

#studyblr#100 days of productivity#stem academia#women in stem#study space#study motivation#dark academia#classic academia#academic validation#academia#academics#dark acadamia aesthetic#grey academia#light academia#romantic academia#chaotic academia#post grad life#grad student#graduate school#grad school#gradblr#stemblog#stem#stemblr#stem student#engineering college#engineering student#engineering#student life#study


Python for Beginners: Launch Your Tech Career with Coding Skills

Are you ready to launch your tech career but don’t know where to start? Learning Python is one of the best ways to break into the world of technology—even if you have zero coding experience.

In this guide, we’ll explore how Python for beginners can be your gateway to a rewarding career in software development, data science, automation, and more.

Why Python Is the Perfect Language for Beginners

Python has become the go-to programming language for beginners and professionals alike—and for good reason:

Simple syntax: Python reads like plain English, making it easy to learn.

High demand: Companies across many industries are actively hiring Python developers.

Versatile applications: Python powers everything from websites to artificial intelligence and data analysis.

Whether you want to become a software developer, data analyst, or AI engineer, Python lays the foundation.

What Can You Do With Python?

Python is not just a beginner language—it’s a career-building tool. Here are just a few career paths where Python is essential:

Web Development: Frameworks like Django and Flask make it easy to build powerful web applications. You can even enroll in a Python Course in Kochi to gain hands-on experience with real-world web projects.

Data Science & Analytics: With libraries like Pandas, NumPy, and Matplotlib, Python's ecosystem is the standard choice for data analysis and visualization.

Machine Learning & AI: Python leads artificial intelligence development, with powerful tools such as TensorFlow and scikit-learn.

Automation & Scripting: Simple but effective Python scripts can automate routine workflows and boost efficiency.

Cybersecurity & Networking: Python is increasingly used in crucial areas such as ethical hacking, penetration testing, and network automation.

How to Get Started with Python

Starting your Python journey doesn't require a computer science degree. What matters is focused commitment and a well-structured learning plan.

Step 1: Install Python

Download and install Python from python.org. It's free and available for all platforms.

Step 2: Choose an IDE

Use beginner-friendly tools like Thonny, PyCharm, or VS Code to write your code.

Step 3: Learn the Basics

Focus on:

Variables and data types

Conditional statements

Loops

Functions

Lists and dictionaries
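Here's a small sketch that touches each topic in the list above: variables, conditionals, loops, functions, lists, and dictionaries. The names and values are invented for illustration.

```python
# Variables and data types
name = "Priya"     # str
tasks_done = 3     # int
goal = 5           # int

# Functions
def progress_message(done, target):
    """Return a short message describing progress toward a goal."""
    # Conditional statements
    if done >= target:
        return "Goal reached!"
    elif done == 0:
        return "Let's get started."
    else:
        return f"{target - done} to go."

# Lists and dictionaries
tasks = ["read chapter", "write loop", "fix bug"]
status = {"name": name, "done": tasks_done}

# Loops: enumerate() numbers each item, starting from 1
for number, task in enumerate(tasks, start=1):
    print(number, task)

print(status["name"], "-", progress_message(tasks_done, goal))
```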

If you prefer guided learning, a reputable Python Institute in Kochi can offer structured programs and mentorship to help you grasp core concepts efficiently.

Step 4: Build Projects

Learning by doing is key. Start small:

Build a calculator

Automate file organization

Create a to-do list app
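For the "automate file organization" idea, a minimal sketch could sort a folder's files into subfolders by extension. The function name `organize_by_extension` and the "no_extension" folder are made-up choices. Because it moves real files, try it on a test folder first.

```python
import shutil
from pathlib import Path

def organize_by_extension(folder):
    """Move each file in `folder` into a subfolder named after its extension.

    Files without an extension go into a subfolder called "no_extension".
    Returns a dict mapping each subfolder name to the number of files moved.
    """
    folder = Path(folder)
    moved = {}
    # list() takes a snapshot first, so newly created subfolders aren't revisited
    for item in list(folder.iterdir()):
        if not item.is_file():
            continue  # leave existing subfolders alone
        subfolder_name = item.suffix.lstrip(".").lower() or "no_extension"
        target_dir = folder / subfolder_name
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[subfolder_name] = moved.get(subfolder_name, 0) + 1
    return moved
```

Usage might look like `organize_by_extension("test_downloads")`, where the folder name is a placeholder.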

As your skills grow, you can tackle more complex projects like data dashboards or web apps.

How Python Skills Can Boost Your Career

Adding Python to your resume instantly opens up new opportunities. Here's how it helps:

Higher employability: Python consistently ranks among the most in-demand programming languages.

Better salaries: Python developers earn competitive salaries across the globe.

Remote job opportunities: Many Python-related jobs are available remotely, offering flexibility.

Even if you're not aiming to be a full-time developer, Python skills can enhance careers in marketing, finance, research, and product management.

If you're serious about starting a career in tech, learning Python is the smartest first step you can take. It’s beginner-friendly, powerful, and widely used across industries.

Whether you're a student, job switcher, or just curious about programming, Python for beginners can unlock countless career opportunities. Invest time in learning today—and start building the future you want in tech.

Globally recognized as a premier educational hub, DataMites Institute delivers in-depth training programs across the pivotal fields of data science, artificial intelligence, and machine learning. They provide expert-led courses designed for both beginners and professionals aiming to boost their careers.

Python Modules Explained - Different Types and Functions - Python Tutorial


#python course#python training#python#learnpython#pythoncourseinindia#pythoncourseinkochi#pythoninstitute#python for data science#Youtube


Why Learning Python is the Perfect First Step in Coding

Learning Python is an ideal way to dive into programming. Its simplicity and versatility make it the perfect language for beginners, whether you're looking to develop basic skills or eventually dive into fields like data analysis, web development, or machine learning.

Start by focusing on the fundamentals: learn about variables, data types, conditionals, and loops. These core concepts are the building blocks of programming, and Python’s clear syntax makes them easier to grasp. Interactive platforms like Codecademy, Khan Academy, and freeCodeCamp offer structured, step-by-step lessons that are perfect for beginners, so start there.

Once you’ve got a handle on the basics, apply what you’ve learned by building small projects. For example, try coding a simple calculator, a basic guessing game, or even a text-based story generator. These small projects will help you understand how programming concepts work together, giving you confidence and helping you identify areas where you might need a bit more practice.
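For the guessing game, one reasonable sketch keeps the comparison logic in its own function, so you can test it without typing anything. The function names are hypothetical.

```python
import random

def check_guess(guess, secret):
    """Compare a guess with the secret number and return a hint."""
    if guess < secret:
        return "Too low"
    if guess > secret:
        return "Too high"
    return "Correct!"

def play(max_number=20):
    """Play one round in the terminal and return the number of attempts used."""
    secret = random.randint(1, max_number)  # inclusive on both ends
    attempts = 0
    while True:
        text = input(f"Guess a number from 1 to {max_number}: ")
        if not text.strip().isdigit():
            print("Please enter a whole number.")
            continue
        attempts += 1
        hint = check_guess(int(text), secret)
        print(hint)
        if hint == "Correct!":
            return attempts
```

Call `play()` from a script or the Python shell to start a round. Keeping `check_guess` separate from the input loop makes the logic easy to test.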

When you're ready to move beyond the basics, Python offers many powerful libraries that open up new possibilities. Dive into pandas for data analysis, matplotlib for data visualization, or even Django if you want to explore web development. Each library offers a set of tools that helps you do more complex tasks, and learning them will expand your coding skillset significantly.

Keep practicing, and don't hesitate to look at code written by others to see how they approach problems. Coding is a journey, and with every line you write, you’re gaining valuable skills that will pay off in future projects.

FREE Python and R Programming Course on Data Science, Machine Learning, Data Analysis, and Data Visualization

#learntocode#python for beginners#codingjourney#programmingbasics#web development#datascience#machinelearning#pythonprojects#codingcommunity#python#free course


Python Libraries to Learn Before Tackling Data Analysis

To tackle data analysis effectively in Python, it's crucial to become familiar with several libraries that streamline the process of data manipulation, exploration, and visualization. Here's a breakdown of the essential libraries:

1. NumPy

- Purpose: Numerical computing.

- Why Learn It: NumPy provides support for large multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays efficiently.

- Key Features:

- Fast array processing.

- Mathematical operations on arrays (e.g., sum, mean, standard deviation).

- Linear algebra operations.
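Here's a minimal NumPy sketch of the three features above (fast array math, aggregations, linear algebra), using made-up numbers:

```python
import numpy as np

# A 2-D array (matrix) of made-up measurements: 3 rows, 2 columns
data = np.array([[1.0, 2.0],
                 [3.0, 4.0],
                 [5.0, 6.0]])

# Vectorized math: operates on every element without a Python loop
doubled = data * 2

# Aggregations along an axis: axis=0 works down each column
col_sums = data.sum(axis=0)    # [ 9. 12.]
col_means = data.mean(axis=0)  # [3. 4.]
col_stds = data.std(axis=0)    # population standard deviation per column

# Linear algebra: solve the system A @ x = b
A = np.array([[2.0, 1.0], [1.0, 3.0]])
b = np.array([3.0, 5.0])
x = np.linalg.solve(A, b)
print(x)
```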

2. Pandas

- Purpose: Data manipulation and analysis.

- Why Learn It: Pandas offers data structures like DataFrames, making it easier to handle and analyze structured data.

- Key Features:

- Reading/writing data from CSV, Excel, SQL databases, and more.

- Handling missing data.

- Powerful group-by operations.

- Data filtering and transformation.
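Here's a minimal pandas sketch of the four features above. The small CSV is inlined as a string so the example runs on its own. With a real file, you would pass its path to `pd.read_csv` instead.

```python
import io

import pandas as pd

# Stand-in for a CSV file on disk (made-up sales data, one value missing)
csv_text = """region,product,sales
North,A,100
North,B,
South,A,80
South,B,120
"""
df = pd.read_csv(io.StringIO(csv_text))

# Handling missing data: the empty sales cell was read as NaN
print(df["sales"].isna().sum())  # 1
df["sales"] = df["sales"].fillna(0)

# Filtering: keep only rows that match a condition
big_sales = df[df["sales"] >= 100]

# Group-by: total sales per region
totals = df.groupby("region")["sales"].sum()
print(totals)

# Writing back out (to a string here; pass a file path to write to disk)
out = df.to_csv(index=False)
```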

3. Matplotlib

- Purpose: Data visualization.

- Why Learn It: Matplotlib is one of the most widely used plotting libraries in Python, allowing for a wide range of static, animated, and interactive plots.

- Key Features:

- Line plots, bar charts, histograms, scatter plots.

- Customizable charts (labels, colors, legends).

- Integration with Pandas for quick plotting.
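Here's a minimal sketch of Matplotlib's customization options and its pandas integration, using invented monthly sales figures. The `Agg` backend is set so the example runs without a display.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: draw to files, not a window
import matplotlib.pyplot as plt
import pandas as pd

# Made-up monthly figures for two products
df = pd.DataFrame(
    {"product_a": [3, 5, 4, 6], "product_b": [2, 4, 6, 5]},
    index=["Jan", "Feb", "Mar", "Apr"],
)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(8, 3))

# Plain Matplotlib: line plots with custom colors, labels, and a legend
ax1.plot(df.index, df["product_a"], color="tab:blue", label="Product A")
ax1.plot(df.index, df["product_b"], color="tab:orange", label="Product B")
ax1.set_title("Monthly sales")
ax1.set_ylabel("Units")
ax1.legend()

# pandas integration: DataFrame.plot draws onto a Matplotlib Axes
df.plot(kind="bar", ax=ax2, title="Same data as bars")

fig.tight_layout()
buffer = io.BytesIO()
fig.savefig(buffer, format="png")  # or fig.savefig("sales.png")
```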

4. Seaborn

- Purpose: Statistical data visualization.

- Why Learn It: Built on top of Matplotlib, Seaborn simplifies the creation of attractive and informative statistical graphics.

- Key Features:

- High-level interface for drawing attractive statistical graphics.

- Easier to use for complex visualizations like heatmaps, pair plots, etc.

- Visualizations based on categorical data.

5. SciPy

- Purpose: Scientific and technical computing.

- Why Learn It: SciPy builds on NumPy and provides additional functionality for complex mathematical operations and scientific computing.

- Key Features:

- Optimized algorithms for numerical integration, optimization, and more.

- Statistics, signal processing, and linear algebra modules.
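Here's a minimal SciPy sketch of integration, optimization, and the statistics module. The functions being integrated and minimized are toy examples.

```python
import math

from scipy import integrate, optimize, stats

# Numerical integration: area under sin(x) from 0 to pi (the exact answer is 2)
area, error_estimate = integrate.quad(math.sin, 0, math.pi)

# Optimization: find the x that minimizes (x - 3)^2 + 1
result = optimize.minimize_scalar(lambda x: (x - 3) ** 2 + 1)

# Statistics module: probability that a standard normal value is <= 1.96
p_below = stats.norm.cdf(1.96)  # about 0.975

print(area, result.x, p_below)
```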

6. Scikit-learn

- Purpose: Machine learning and statistical modeling.

- Why Learn It: Scikit-learn provides simple and efficient tools for data mining, analysis, and machine learning.

- Key Features:

- Classification, regression, and clustering algorithms.

- Dimensionality reduction, model selection, and preprocessing utilities.
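Here's a minimal scikit-learn sketch that combines preprocessing with a classification algorithm, using the bundled iris dataset. Logistic regression is just one reasonable choice of classifier here.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Bundled example dataset: 150 iris flowers, 4 measurements, 3 species
X, y = load_iris(return_X_y=True)

# Hold out 25% of the rows to check the model on data it has not seen
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Preprocessing (feature scaling) and a classifier chained into one estimator
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)  # fraction of correct predictions
print(f"Test accuracy: {accuracy:.2f}")
```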

7. Statsmodels

- Purpose: Statistical analysis.

- Why Learn It: Statsmodels allows users to explore data, estimate statistical models, and perform tests.

- Key Features:

- Linear regression, logistic regression, time series analysis.

- Statistical tests and models for descriptive statistics.

8. Plotly

- Purpose: Interactive data visualization.

- Why Learn It: Plotly allows for the creation of interactive and web-based visualizations, making it ideal for dashboards and presentations.

- Key Features:

- Interactive plots like scatter, line, bar, and 3D plots.

- Easy integration with web frameworks.

- Dashboards and web applications with Dash.

9. TensorFlow/PyTorch (Optional)

- Purpose: Machine learning and deep learning.

- Why Learn It: If your data analysis involves machine learning, these libraries will help in building, training, and deploying deep learning models.

- Key Features:

- Tensor processing and automatic differentiation.

- Building neural networks.

10. Dask (Optional)

- Purpose: Parallel computing for data analysis.

- Why Learn It: Dask enables scalable data manipulation by parallelizing Pandas operations, making it ideal for big datasets.

- Key Features:

- Works with NumPy, Pandas, and Scikit-learn.

- Handles large data and parallel computations easily.

Focusing on NumPy, Pandas, Matplotlib, and Seaborn will set a strong foundation for basic data analysis.


Unlocking the Power of Data: Essential Skills to Become a Data Scientist

In today's data-driven world, the demand for skilled data scientists is skyrocketing. These professionals are the key to transforming raw information into actionable insights, driving innovation and shaping business strategies. But what exactly does it take to become a data scientist? It's a multidisciplinary field, requiring a unique blend of technical prowess and analytical thinking. Let's break down the essential skills you'll need to embark on this exciting career path.

1. Strong Mathematical and Statistical Foundation:

At the heart of data science lies a deep understanding of mathematics and statistics. You'll need to grasp concepts like:

Linear Algebra and Calculus: Essential for understanding machine learning algorithms and optimizing models.

Probability and Statistics: Crucial for data analysis, hypothesis testing, and drawing meaningful conclusions from data.

2. Programming Proficiency (Python and/or R):

Data scientists are fluent in at least one, if not both, of the dominant programming languages in the field:

Python: Known for its readability and extensive libraries like Pandas, NumPy, Scikit-learn, and TensorFlow, making it ideal for data manipulation, analysis, and machine learning.

R: Specifically designed for statistical computing and graphics, R offers a rich ecosystem of packages for statistical modeling and visualization.

3. Data Wrangling and Preprocessing Skills:

Raw data is rarely clean and ready for analysis. A significant portion of a data scientist's time is spent on:

Data Cleaning: Handling missing values, outliers, and inconsistencies.

Data Transformation: Reshaping, merging, and aggregating data.

Feature Engineering: Creating new features from existing data to improve model performance.
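Here's a minimal pandas sketch of the three tasks above. The tables, the outlier cutoff of 1000, and the reference year 2025 are all made up for illustration.

```python
import pandas as pd

# Made-up raw data with typical problems: a duplicate row, missing values,
# inconsistent capitalization, and an outlier
orders = pd.DataFrame({
    "customer_id": [1, 2, 2, 3, 4],
    "city": ["Paris", "lyon", "lyon", "PARIS", None],
    "amount": [20.0, 35.0, 35.0, None, 9000.0],
})
customers = pd.DataFrame({"customer_id": [1, 2, 3, 4],
                          "signup_year": [2021, 2022, 2023, 2023]})

# Data cleaning: drop duplicates, tidy text, fill gaps, remove an outlier
clean = orders.drop_duplicates().copy()
clean["city"] = clean["city"].str.title().fillna("Unknown")
clean["amount"] = clean["amount"].fillna(clean["amount"].median())
clean = clean[clean["amount"] < 1000]  # a simple, hand-picked cutoff

# Data transformation: merge with another table, then aggregate
merged = clean.merge(customers, on="customer_id", how="left")
per_city = merged.groupby("city")["amount"].sum()

# Feature engineering: derive a new column from an existing one
reference_year = 2025  # hypothetical "current" year
merged["years_since_signup"] = reference_year - merged["signup_year"]
print(per_city)
```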

4. Expertise in Databases and SQL:

Data often resides in databases. Proficiency in SQL (Structured Query Language) is essential for:

Extracting Data: Querying and retrieving data from various database systems.

Data Manipulation: Filtering, joining, and aggregating data within databases.
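Here's a minimal sketch of extracting and manipulating data with SQL. It uses Python's built-in `sqlite3` module and an in-memory database in place of a real server, and the tables and values are invented.

```python
import sqlite3

# In-memory SQLite database standing in for a real database system
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)")
cur.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
cur.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                [(1, "Ana", "PT"), (2, "Ben", "UK"), (3, "Cy", "PT")])
cur.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                [(1, 1, 50.0), (2, 1, 25.0), (3, 2, 40.0), (4, 3, 10.0)])

# Filter, join, and aggregate in a single query
query = """
SELECT c.country, COUNT(o.id) AS n_orders, SUM(o.amount) AS total
FROM orders AS o
JOIN customers AS c ON c.id = o.customer_id
WHERE o.amount >= 20
GROUP BY c.country
ORDER BY total DESC
"""
rows = cur.execute(query).fetchall()
print(rows)
conn.close()
```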

5. Machine Learning Mastery:

Machine learning is a core component of data science, enabling you to build models that learn from data and make predictions or classifications. Key areas include:

Supervised Learning: Regression, classification algorithms.

Unsupervised Learning: Clustering, dimensionality reduction.

Model Selection and Evaluation: Choosing the right algorithms and assessing their performance.
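Here's a minimal sketch of model selection with scikit-learn. It compares two candidate classifiers with 5-fold cross-validation and keeps the one with the higher mean accuracy. The bundled breast-cancer dataset and the two candidates are illustrative choices.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

candidates = {
    "logistic regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# 5-fold cross-validation: train on 4/5 of the data, score on the held-out 1/5, repeat
results = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    results[name] = scores.mean()
    print(f"{name}: mean accuracy {scores.mean():.3f} (+/- {scores.std():.3f})")

best = max(results, key=results.get)
print("Best model:", best)
```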

6. Data Visualization and Communication Skills:

Being able to effectively communicate your findings is just as important as the analysis itself. You'll need to:

Visualize Data: Create compelling charts and graphs to explore patterns and insights using libraries like Matplotlib, Seaborn (Python), or ggplot2 (R).

Tell Data Stories: Present your findings in a clear and concise manner that resonates with both technical and non-technical audiences.

7. Critical Thinking and Problem-Solving Abilities:

Data scientists are essentially problem solvers. You need to be able to:

Define Business Problems: Translate business challenges into data science questions.

Develop Analytical Frameworks: Structure your approach to solve complex problems.

Interpret Results: Draw meaningful conclusions and translate them into actionable recommendations.

8. Domain Knowledge (Optional but Highly Beneficial):

Having expertise in the specific industry or domain you're working in can give you a significant advantage. It helps you understand the context of the data and formulate more relevant questions.

9. Curiosity and a Growth Mindset:

The field of data science is constantly evolving. A genuine curiosity and a willingness to learn new technologies and techniques are crucial for long-term success.

10. Strong Communication and Collaboration Skills:

Data scientists often work in teams and need to collaborate effectively with engineers, business stakeholders, and other experts.

Kickstart Your Data Science Journey with Xaltius Academy's Data Science and AI Program:

Acquiring these skills can seem like a daunting task, but structured learning programs can provide a clear and effective path. Xaltius Academy's Data Science and AI Program is designed to equip you with the essential knowledge and practical experience to become a successful data scientist.

Key benefits of the program:

Comprehensive Curriculum: Covers all the core skills mentioned above, from foundational mathematics to advanced machine learning techniques.

Hands-on Projects: Provides practical experience working with real-world datasets and building a strong portfolio.

Expert Instructors: Learn from industry professionals with years of experience in data science and AI.

Career Support: Offers guidance and resources to help you launch your data science career.

Becoming a data scientist is a rewarding journey that blends technical expertise with analytical thinking. By focusing on developing these key skills and leveraging resources like Xaltius Academy's program, you can position yourself for a successful and impactful career in this in-demand field. The power of data is waiting to be unlocked – are you ready to take the challenge?


Python Programming Language: A Comprehensive Guide

Python is one of the most widely used and fastest-growing programming languages in the world. Known for its simplicity, versatility, and vast ecosystem, Python has become the go-to choice for beginners, professionals, and organizations across industries.

🐍 What is Python?

Python is a high-level, interpreted, general-purpose programming language. The language emphasizes readability, concise syntax, and code simplicity, making it an excellent tool for everything from web development to artificial intelligence.

Its syntax is designed to be readable and simple, often described as being close to the English language. This ease of understanding has led Python to be adopted not only by programmers but also by scientists, mathematicians, and analysts who may not have a formal background in software engineering.

📜 Brief History of Python

Late 1980s: Guido van Rossum starts work on Python as a hobby project.

1991: Python 0.9.0 is released, featuring classes, functions, and exception handling.

2000: Python 2.0 is released, introducing features like list comprehensions and a cycle-detecting garbage collector.

2008: Python 3.0 is released with substantial improvements but breaks backward compatibility.

2024: Python 3.12 is the current stable version, improving performance and typing support.

⭐ Key Features of Python

Easy to Learn and Use:

Python's syntax is simple and similar to English, making it a great first programming language.

Interpreted Language:

Python isn't compiled ahead of time into machine code; it is run by an interpreter, which makes testing and debugging easier.

Cross-Platform:

Python code runs on Windows, macOS, Linux, and even mobile devices and embedded systems.

Dynamic Typing:

Variables don’t require explicit type declarations; types are decided at runtime.

Object-Oriented and Functional:

Python supports both object-oriented programming (OOP) and functional programming paradigms.

Extensive Standard Library:

Python includes a rich set of built-in modules for string operations, file I/O, databases, networking, and more.

Huge Ecosystem of Libraries:

From data science to web development, Python's ecosystem includes thousands of packages like NumPy, pandas, TensorFlow, Flask, Django, and many more.
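Here's a short sketch of the dynamic typing feature described above. The variable name is arbitrary.

```python
# The same name can refer to values of different types over time;
# the type belongs to the value and is checked at runtime.
value = 42
print(type(value).__name__)   # int
value = "forty-two"
print(type(value).__name__)   # str

# A type mismatch only raises an error when the offending line actually runs
try:
    value + 1
except TypeError as err:
    message = str(err)
    print("TypeError:", message)
```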

📌 Basic Python Syntax

Here's an example of a simple Python program:

```python
def greet(name):
    print(f"Hello, {name}!")

greet("Alice")
```

Output:

```
Hello, Alice!
```

Key Syntax Elements:

Indentation is used to define blocks (no curly braces as in other languages).

Variables are created by assignment: x = 5

Comments use #:

# This is a comment

Print Function:

print("Hello")

📊 Python Data Types

Python has several built-in data types:

Numeric: int, float, complex

Text: str

Boolean: bool (True, False)

Sequence: list, tuple, range

Mapping: dict

Set Types: set, frozenset

Example:

```python
age = 25                           # int
name = "John"                      # str
height = 5.9                       # float
is_student = True                  # bool
colors = ["red", "green", "blue"]  # list
```

🔁 Control Structures

Conditional Statements:

```python
age = 25

if age > 18:
    print("Adult")
elif age == 18:
    print("Just became an adult")
else:
    print("Minor")
```

Loops:

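```python
colors = ["red", "green", "blue"]
age = 25

# for loop: runs once per item in the list
for color in colors:
    print(color)

# while loop: repeats as long as the condition is true
while age < 30:
    age += 1
```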

🔧 Functions and Modules

Defining a Function:

```python
def add(a, b):
    return a + b
```

Importing a Module:

```python
import math

print(math.sqrt(16))  # Output: 4.0
```

🗂️ Object-Oriented Programming (OOP)

Python supports OOP features such as classes, inheritance, and encapsulation.

```python
class Animal:
    def __init__(self, name):
        self.name = name

    def speak(self):
        print(f"{self.name} makes a sound")

dog = Animal("Dog")
dog.speak()  # Output: Dog makes a sound
```

🧠 Applications of Python

Python is used in nearly every area of technology:

1. Web Development

Frameworks like Django, Flask, and FastAPI make Python great for building scalable web applications.

2. Data Science & Analytics

Libraries like pandas, NumPy, and Matplotlib support data manipulation, analysis, and visualization.

3. Machine Learning & AI

Python is the dominant language for AI, thanks to TensorFlow, PyTorch, scikit-learn, and Keras.

4. Automation & Scripting

Python is widely used for automating tasks like file handling, system monitoring, and web scraping.

5. Game Development

Frameworks like Pygame allow developers to build simple 2D games.

6. Desktop Applications

With libraries like Tkinter and PyQt, Python can be used to create cross-platform desktop apps.

7. Cybersecurity

Python is often used to write security tools, penetration testing scripts, and exploits.

📚 Popular Python Libraries

NumPy: Numerical computing

pandas: Data analysis

Matplotlib / Seaborn: Visualization

scikit-learn: Machine learning

BeautifulSoup / Scrapy: Web scraping

Flask / Django: Web frameworks

OpenCV: Image processing

PyTorch / TensorFlow: Deep learning

SQLAlchemy: Database ORM

💻 Python Tools and IDEs

Popular environments and tools for writing Python code include:

PyCharm: Full-featured Python IDE.

VS Code: Lightweight and extensible editor.

Jupyter Notebook: Interactive environment for data science and research.

IDLE: Python’s default editor.

🔐 Strengths of Python

Easy to learn and write

Large community and rich documentation

Extensive third-party libraries

Strong support for scientific computing and AI

Cross-platform compatibility

⚠️ Limitations of Python

Slower than compiled languages like C/C++

Not ideal for mobile app development

High memory usage in large-scale applications

GIL (Global Interpreter Lock) restricts true multithreaded parallelism in CPython

🧭 Learning Path for Python Beginners

Learn variables, data types, and control flow.

Practice functions and loops.

Understand modules and file handling.

Explore OOP concepts.

Work on small projects (e.g., calculator, to-do app).

Dive into specific areas like data science, automation, or web development.
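As a taste of the "small projects" step, here is a minimal sketch of a to-do list that exercises functions, loops, dictionaries, and file handling. The function names and the JSON storage format are illustrative assumptions, not a prescribed design:

```python
import json
from pathlib import Path


def add_task(tasks, title):
    """Append a new, not-yet-done task and return it."""
    task = {"title": title, "done": False}
    tasks.append(task)
    return task


def complete_task(tasks, title):
    """Mark the first task with a matching title as done; return True if found."""
    for task in tasks:
        if task["title"] == title:
            task["done"] = True
            return True
    return False


def pending(tasks):
    """Titles of tasks that are not done yet."""
    return [t["title"] for t in tasks if not t["done"]]


def save(tasks, path):
    """File handling: write the task list to disk as JSON."""
    Path(path).write_text(json.dumps(tasks, indent=2), encoding="utf-8")


def load(path):
    """Read tasks back from disk; a missing file means an empty list."""
    p = Path(path)
    if not p.exists():
        return []
    return json.loads(p.read_text(encoding="utf-8"))


if __name__ == "__main__":
    tasks = []
    add_task(tasks, "Learn loops")
    add_task(tasks, "Build a calculator")
    complete_task(tasks, "Learn loops")
    print(pending(tasks))  # ['Build a calculator']
```

Calling `save(tasks, "todo.json")` and later `load("todo.json")` (a hypothetical file name) would let the list survive between runs.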

#What is Python used for#college students learn python#online course python#offline python course institute#python jobs in information technology

2 notes

Text

How to Become a Data Scientist in 2025 (Roadmap for Absolute Beginners)

Want to become a data scientist in 2025 but don’t know where to start? You’re not alone. With job roles, tech stacks, and buzzwords changing rapidly, it’s easy to feel lost.

But here’s the good news: you don’t need a PhD or years of coding experience to get started. You just need the right roadmap.

Let’s break down the beginner-friendly path to becoming a data scientist in 2025.

✈️ Step 1: Get Comfortable with Python

Python is the most beginner-friendly programming language in data science.

What to learn:

Variables, loops, functions

Libraries like NumPy, Pandas, and Matplotlib

Why: It’s the backbone of everything you’ll do in data analysis and machine learning.
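A minimal sketch of the variables, loops, and functions basics, computing an average with an explicit loop (the sales figures are made up for illustration):

```python
def average(values):
    """Return the arithmetic mean of a list of numbers using a plain loop."""
    if not values:
        raise ValueError("average() needs at least one value")
    total = 0
    for v in values:
        total += v
    return total / len(values)


daily_sales = [120, 95, 130, 110]  # a variable holding a list
mean_sales = average(daily_sales)
print(f"Average daily sales: {mean_sales}")  # Average daily sales: 113.75
```

Once this feels natural, the libraries listed above do the same work in one call, for example `pandas.Series(daily_sales).mean()`.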

🔢 Step 2: Learn Basic Math & Stats

You don’t need to be a math genius. But you do need to understand:

Descriptive statistics

Probability

Linear algebra basics

Hypothesis testing

These concepts help you interpret data and build reliable models.
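A short sketch of these ideas using only Python's built-in `statistics` module (Python 3.8+ for `NormalDist`). The scores are hypothetical, and the normal model and t statistic are illustrations rather than a full analysis. Turning the t statistic into a p-value needs the t distribution, for example via `scipy.stats.ttest_1samp`:

```python
import math
import statistics
from statistics import NormalDist

# Hypothetical exam scores, used only for illustration
scores = [72, 85, 90, 66, 85, 78, 95, 88]

# Descriptive statistics
mean_score = statistics.mean(scores)
median_score = statistics.median(scores)
spread = statistics.stdev(scores)  # sample standard deviation
print(f"mean={mean_score}, median={median_score}, stdev={spread:.2f}")

# Probability: P(score > 90) if scores were normally distributed with these parameters
model = NormalDist(mu=mean_score, sigma=spread)
p_above_90 = 1 - model.cdf(90)
print(f"P(score > 90) = {p_above_90:.3f}")

# Hypothesis testing (sketch): one-sample t statistic against a claimed mean of 75
claimed_mean = 75
t_stat = (mean_score - claimed_mean) / (spread / math.sqrt(len(scores)))
print(f"t = {t_stat:.2f} with {len(scores) - 1} degrees of freedom")
```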

📊 Step 3: Master Data Handling

You’ll spend 70% of your time cleaning and preparing data.

Skills to focus on:

Working with CSV/Excel files

Cleaning missing data

Data transformation with Pandas

Visualizing data with Seaborn/Matplotlib

This is the “real work” most data scientists do daily.
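The cleaning and transformation steps above might look like this in pandas. This is a minimal sketch: the inline CSV, column names, and fill strategy (zero for missing units, the median for missing prices) are assumptions chosen for illustration:

```python
import io

import pandas as pd

# Inline CSV standing in for a real file; pd.read_csv("sales.csv") works the same way
raw_csv = io.StringIO(
    "region,month,units,price\n"
    "North,Jan,10,2.5\n"
    "South,Jan,,3.0\n"
    "North,Feb,12,2.5\n"
    "South,Feb,8,\n"
)
df = pd.read_csv(raw_csv)

# Cleaning missing data: no units recorded -> 0; missing price -> column median
df["units"] = df["units"].fillna(0)
df["price"] = df["price"].fillna(df["price"].median())

# Transformation: derive revenue, then aggregate per region
df["revenue"] = df["units"] * df["price"]
summary = df.groupby("region", as_index=False)["revenue"].sum()
print(summary)
```

With Matplotlib installed, `summary.plot.bar(x="region", y="revenue")` would give a quick chart of the result.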

🧬 Step 4: Learn Machine Learning (ML)

Once you’re solid with data handling, dive into ML.

Start with:

Supervised learning (Linear Regression, Decision Trees, KNN)

Unsupervised learning (Clustering)

Model evaluation metrics (accuracy, recall, precision)

Toolkits: Scikit-learn, XGBoost
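The evaluation metrics are easy to compute by hand, which helps when reading library output. A minimal sketch for a binary classifier with hypothetical churn labels; scikit-learn provides the same metrics as `accuracy_score`, `precision_score`, and `recall_score` in `sklearn.metrics`:

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary classifier."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1
            else:
                fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def evaluate(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)               # share of all predictions that were right
    precision = tp / (tp + fp) if tp + fp else 0.0   # of predicted churners, how many churned
    recall = tp / (tp + fn) if tp + fn else 0.0      # of real churners, how many were caught
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Hypothetical labels: 1 = "customer churned", 0 = "customer stayed"
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
metrics = evaluate(y_true, y_pred)
print(metrics)
```

Here accuracy is 0.625, precision 0.6, and recall 0.75: the model catches most churners but also flags some customers who stayed.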

🚀 Step 5: Work on Real Projects

Projects are what make your resume pop.

Try solving:

Customer churn

Sales forecasting

Sentiment analysis

Fraud detection

Pro tip: Document everything on GitHub and write blogs about your process.

✏️ Step 6: Learn SQL and Databases

Data lives in databases. Knowing how to query it with SQL is a must-have skill.

Focus on:

SELECT, JOIN, GROUP BY

Creating and updating tables

Writing nested queries
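These SQL skills can be practised without installing a database server, using Python's built-in `sqlite3` module and an in-memory database. A minimal sketch with hypothetical `customers` and `orders` tables:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Creating tables and inserting rows
cur.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
cur.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    "customer_id INTEGER REFERENCES customers(id), amount REAL)"
)
cur.executemany(
    "INSERT INTO customers (id, name, city) VALUES (?, ?, ?)",
    [(1, "Asha", "Pune"), (2, "Ben", "Delhi"), (3, "Chen", "Pune")],
)
cur.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(1, 120.0), (1, 80.0), (2, 50.0), (3, 200.0)],
)

# Updating a row
cur.execute("UPDATE orders SET amount = 60.0 WHERE customer_id = 2")

# SELECT + JOIN + GROUP BY: total spend per city
cur.execute("""
    SELECT c.city, SUM(o.amount) AS total
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    GROUP BY c.city
    ORDER BY total DESC
""")
city_totals = cur.fetchall()
print(city_totals)

# Nested query: customers whose total spend exceeds the average order amount
cur.execute("""
    SELECT name FROM customers
    WHERE id IN (
        SELECT customer_id FROM orders
        GROUP BY customer_id
        HAVING SUM(amount) > (SELECT AVG(amount) FROM orders)
    )
    ORDER BY name
""")
big_spenders = [row[0] for row in cur.fetchall()]
print(big_spenders)

conn.close()
```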

🌍 Step 7: Understand the Business Side

Data science isn’t just tech. You need to translate insights into decisions.

Learn to:

Tell stories with data (data storytelling)

Build dashboards with tools like Power BI or Tableau

Align your analysis with business goals

🎥 Want a Structured Way to Learn All This?

Instead of guessing what to learn next, check out Intellipaat’s full Data Science course on YouTube. It covers Python, ML, real projects, and everything you need to build job-ready skills.

https://www.youtube.com/watch?v=rxNDw68XcE4

🔄 Final Thoughts

Becoming a data scientist in 2025 is 100% possible — even for beginners. All you need is consistency, a good learning path, and a little curiosity.

Start simple. Build as you go. And let your projects speak louder than your resume.

Drop a comment if you’re starting your journey. And don’t forget to check out the free Intellipaat course to speed up your progress!

2 notes

Text

so my wife (@beaniebaby492) has a problem that maybe some of my mutuals can help with. she's looking for a data structure with these qualities:

- fast reading of slices of numpy (or similar) arrays
- scalable between ~10 GB and 1 TB (the largest data reaches PB, but rarely, and can always be reduced easily)
- ideally easy to move to a computing cluster if computations become too expensive
- some way to include metadata
- easy to convert to standard numpy/pandas once the data is mostly processed and reduced

side note. these are mostly a sequence of 3D arrays given different experimental parameters

she's "leaning towards dask and xarray with h5 data structure since it is somewhat commonly used in [her] field"

what would y'all recommend?

18 notes

Text

What is Python, How to Learn Python?

What is Python?

Python is a high-level, interpreted programming language known for its simplicity and readability. It is widely used in various fields like:

✅ Web Development (Django, Flask)

✅ Data Science & Machine Learning (Pandas, NumPy, TensorFlow)

✅ Automation & Scripting (Web scraping, File automation)

✅ Game Development (Pygame)

✅ Cybersecurity & Ethical Hacking

✅ Embedded Systems & IoT (MicroPython)

Python is beginner-friendly because of its easy-to-read syntax, large community, and vast library support.

How Long Does It Take to Learn Python?

The time required to learn Python depends on your goals and background. Here’s a general breakdown:

1. Basics of Python (1-2 months)

If you spend 1-2 hours daily, you can learn:

Variables, Data Types, Operators

Loops & Conditionals

Functions & Modules

Lists, Tuples, Dictionaries

File Handling

Basic Object-Oriented Programming (OOP)

2. Intermediate Level (2-4 months)

Once comfortable with basics, focus on:

Advanced OOP concepts

Exception Handling

Working with APIs & Web Scraping

Database handling (SQL, SQLite)

Python Libraries (Requests, Pandas, NumPy)

Small real-world projects
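Exception handling and working with APIs often meet when parsing a response. A minimal sketch that uses a JSON string in place of a real HTTP response; the `price` field and the `ApiError` class are hypothetical:

```python
import json


class ApiError(Exception):
    """Raised when a (hypothetical) API response cannot be used."""


def parse_price(response_text):
    """Extract a float 'price' field from a JSON API response body."""
    try:
        payload = json.loads(response_text)
        return float(payload["price"])
    except json.JSONDecodeError as exc:
        # Must come before ValueError: JSONDecodeError is a ValueError subclass
        raise ApiError(f"response was not valid JSON: {exc}") from exc
    except (KeyError, TypeError, ValueError) as exc:
        raise ApiError(f"response had no usable 'price' field: {exc!r}") from exc


for body in ['{"price": "19.99"}', '{"cost": 5}', "not json"]:
    try:
        print("price:", parse_price(body))
    except ApiError as err:
        print("error:", err)
```

With a real API you would first fetch the body, for example with `urllib.request.urlopen` or the Requests library, and then pass the text to the same parser.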

3. Advanced Python & Specialization (6+ months)

If you want to go pro, specialize in:

Data Science & Machine Learning (Matplotlib, Scikit-Learn, TensorFlow)

Web Development (Django, Flask)

Automation & Scripting

Cybersecurity & Ethical Hacking

Learning Plan Based on Your Goal

📌 Casual Learning – 3-6 months (for automation, scripting, or general knowledge)

📌 Professional Development – 6-12 months (for jobs in software, data science, etc.)

📌 Deep Mastery – 1-2 years (for AI, ML, complex projects, research)

Scope @ NareshIT:

NareshIT’s Python Application Development program provides extensive hands-on training in front-end, middleware, and back-end technologies.

It trains you through phase-end and capstone projects based on real business scenarios.

Here you learn concepts from leading industry experts, with content structured to ensure industry relevance.

An end-to-end application with exciting features

Earn an industry-recognized course completion certificate.

For more details:

#classroom#python#education#learning#teaching#institute#marketing#study motivation#studying#onlinetraining

2 notes

Text

What Are the Qualifications for a Data Scientist?

In today's data-driven world, the role of a data scientist has become one of the most coveted career paths. With businesses relying on data for decision-making, understanding customer behavior, and improving products, the demand for skilled professionals who can analyze, interpret, and extract value from data is at an all-time high. If you're wondering what qualifications are needed to become a successful data scientist, how DataCouncil can help you get there, and why a data science course in Pune is a great option, this blog has the answers.

The Key Qualifications for a Data Scientist

To succeed as a data scientist, a mix of technical skills, education, and hands-on experience is essential. Here are the core qualifications required:

1. Educational Background

A strong foundation in mathematics, statistics, or computer science is typically expected. Most data scientists hold at least a bachelor’s degree in one of these fields, with many pursuing higher education such as a master's or a Ph.D. A data science course in Pune with DataCouncil can bridge this gap, offering the academic and practical knowledge required for a strong start in the industry.

2. Proficiency in Programming Languages

Programming is at the heart of data science. You need to be comfortable with languages like Python, R, and SQL, which are widely used for data analysis, machine learning, and database management. A comprehensive data science course in Pune will teach these programming skills from scratch, ensuring you become proficient in coding for data science tasks.

3. Understanding of Machine Learning

Data scientists must have a solid grasp of machine learning techniques and algorithms such as regression, clustering, and decision trees. By enrolling in a DataCouncil course, you'll learn how to implement machine learning models to analyze data and make predictions, an essential qualification for landing a data science job.

4. Data Wrangling Skills

Raw data is often messy and unstructured, and a good data scientist needs to be adept at cleaning and processing data before it can be analyzed. DataCouncil's data science course in Pune includes practical training in tools like Pandas and NumPy for effective data wrangling, helping you develop a strong skill set in this critical area.

5. Statistical Knowledge

Statistical analysis forms the backbone of data science. Knowledge of probability, hypothesis testing, and statistical modeling allows data scientists to draw meaningful insights from data. A structured data science course in Pune offers the theoretical and practical aspects of statistics required to excel.

6. Communication and Data Visualization Skills

Being able to explain your findings in a clear and concise manner is crucial. Data scientists often need to communicate with non-technical stakeholders, making tools like Tableau, Power BI, and Matplotlib essential for creating insightful visualizations. DataCouncil’s data science course in Pune includes modules on data visualization, which can help you present data in a way that’s easy to understand.

7. Domain Knowledge

Apart from technical skills, understanding the industry you work in is a major asset. Whether it’s healthcare, finance, or e-commerce, knowing how data applies within your industry will set you apart from the competition. DataCouncil's data science course in Pune is designed to offer case studies from multiple industries, helping students gain domain-specific insights.

Why Choose DataCouncil for a Data Science Course in Pune?

If you're looking to build a successful career as a data scientist, enrolling in a data science course in Pune with DataCouncil can be your first step toward reaching your goals. Here’s why DataCouncil is the ideal choice:

Comprehensive Curriculum: The course covers everything from the basics of data science to advanced machine learning techniques.

Hands-On Projects: You'll work on real-world projects that mimic the challenges faced by data scientists in various industries.

Experienced Faculty: Learn from industry professionals who have years of experience in data science and analytics.

100% Placement Support: DataCouncil provides job assistance to help you land a data science job in Pune or anywhere else, making it a great investment in your future.

Flexible Learning Options: With both weekday and weekend batches, DataCouncil ensures that you can learn at your own pace without compromising your current commitments.

Conclusion

Becoming a data scientist requires a combination of technical expertise, analytical skills, and industry knowledge. By enrolling in a data science course in Pune with DataCouncil, you can gain all the qualifications you need to thrive in this exciting field. Whether you're a fresher looking to start your career or a professional wanting to upskill, this course will equip you with the knowledge, skills, and practical experience to succeed as a data scientist.

Explore DataCouncil’s offerings today and take the first step toward unlocking a rewarding career in data science! Looking for the best data science course in Pune? DataCouncil offers comprehensive data science classes in Pune, designed to equip you with the skills to excel in this booming field. Our data science course in Pune covers everything from data analysis to machine learning, with competitive data science course fees in Pune. We provide job-oriented programs, making us the best institute for data science in Pune with placement support. Explore online data science training in Pune and take your career to new heights!

3 notes

Text

Exploring Data Science Tools: My Adventures with Python, R, and More

Welcome to my data science journey! In this blog post, I'm excited to take you on a captivating adventure through the world of data science tools. We'll explore the significance of choosing the right tools and how they've shaped my path in this thrilling field.

Choosing the right tools in data science is akin to a chef selecting the finest ingredients for a culinary masterpiece. Each tool has its unique flavor and purpose, and understanding their nuances is key to becoming a proficient data scientist.

I. The Quest for the Right Tool

My journey began with confusion and curiosity. The world of data science tools was vast and intimidating. I questioned which programming language would be my trusted companion on this expedition. The importance of selecting the right tool soon became evident.

I embarked on a research quest, delving deep into the features and capabilities of various tools. Python and R emerged as the frontrunners, each with its strengths and applications. These two contenders became the focus of my data science adventures.

II. Python: The Swiss Army Knife of Data Science

Python, often hailed as the Swiss Army Knife of data science, stood out for its versatility and widespread popularity. Its extensive library ecosystem, including NumPy for numerical computing, pandas for data manipulation, and Matplotlib for data visualization, made it a compelling choice.

My first experiences with Python were both thrilling and challenging. I dove into coding, faced syntax errors, and wrestled with data structures. But with each obstacle, I discovered new capabilities and expanded my skill set.

III. R: The Statistical Powerhouse

In the world of statistics, R shines as a powerhouse. Its packages, such as dplyr for data manipulation and ggplot2 for data visualization, are renowned for their efficacy. As I ventured into R, I found myself immersed in a world of statistical analysis and data exploration.

My journey with R included memorable encounters with data sets, where I unearthed hidden insights and crafted beautiful visualizations. The statistical prowess of R truly left an indelible mark on my data science adventure.

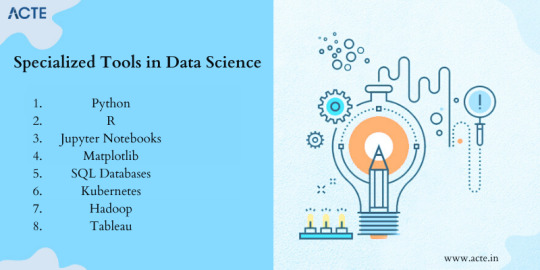

IV. Beyond Python and R: Exploring Specialized Tools

While Python and R were my primary companions, I couldn't resist exploring specialized tools and programming languages that catered to specific niches in data science. These tools offered unique features and advantages that added depth to my skill set.

For instance, tools like SQL allowed me to delve into database management and querying, while Scala opened doors to big data analytics. Each tool found its place in my toolkit, serving as a valuable asset in different scenarios.

V. The Learning Curve: Challenges and Rewards

The path I took wasn't without its share of difficulties. Learning Python, R, and specialized tools presented a steep learning curve. Debugging code, grasping complex algorithms, and troubleshooting errors were all part of the process.

However, these challenges brought about incredible rewards. With persistence and dedication, I overcame obstacles, gained a profound understanding of data science, and felt a growing sense of achievement and empowerment.

VI. Leveraging Python and R Together

One of the most exciting revelations in my journey was discovering the synergy between Python and R. These two languages, once considered competitors, complemented each other beautifully.

I began integrating Python and R seamlessly into my data science workflow. Python's data manipulation capabilities combined with R's statistical prowess proved to be a winning combination. Together, they enabled me to tackle diverse data science tasks effectively.

VII. Tips for Beginners

For fellow data science enthusiasts beginning their own journeys, I offer some valuable tips:

Embrace curiosity and stay open to learning.

Work on practical projects while engaging in frequent coding practice.

Explore data science courses and resources to enhance your skills.

Seek guidance from mentors and engage with the data science community.

Remember that the journey is continuous—there's always more to learn and discover.

My adventures with Python, R, and various data science tools have been transformative. I've learned that choosing the right tool for the job is crucial, but versatility and adaptability are equally important traits for a data scientist.

As I summarize my expedition, I emphasize the significance of selecting tools that align with your project requirements and objectives. Each tool has a unique role to play, and mastering them unlocks endless possibilities in the world of data science.

I encourage you to embark on your own tool exploration journey in data science. Embrace the challenges, relish the rewards, and remember that the adventure is ongoing. May your path in data science be as exhilarating and fulfilling as mine has been.

Happy data exploring!

22 notes

Text

dreamdoll watchlist ★

youtube

key takeaways:

python is a good beginning coding language to start with

start with: variables, datatypes, loops, functions, if statements, oop

covering the basics should take you approx. 2 weeks.

first project: do something interesting/useful. start small.

simple games or a food recommendation system with specific ingredients

pandas DataFrame

use API = application programming interface = different pieces of software interacting with each other. grabbing data from another source

after your first project, learn about data structures and algorithms, how APIs work, and how to read documentation.

dictionary

linked lists

queues

heaps

trees

graphs
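A compact sketch of each structure in the list, using the standard library where it already provides one (`dict`, `collections.deque`, `heapq`) and small hand-written versions for linked lists, trees, and graphs. The data is made up for illustration:

```python
import heapq
from collections import deque

# Dictionary: key -> value lookups, average O(1)
stock = {"eggs": 12, "flour": 2}
stock["milk"] = 1


# Linked list: each node holds a value and a reference to the next node
class Node:
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


def linked_list_values(head):
    """Walk the chain from head to the end, collecting values."""
    values = []
    while head is not None:
        values.append(head.value)
        head = head.next
    return values


head = Node(1, Node(2, Node(3)))

# Queue: first in, first out; deque pops from the left in O(1)
queue = deque(["task1", "task2"])
queue.append("task3")
first = queue.popleft()

# Heap: heapq keeps the smallest item at index 0 of a plain list
heap = []
for priority in [5, 1, 4, 2]:
    heapq.heappush(heap, priority)
smallest = heapq.heappop(heap)

# Tree: nested nodes, traversed depth-first (pre-order)
tree = {"value": "root", "children": [
    {"value": "a", "children": [{"value": "a1", "children": []}]},
    {"value": "b", "children": []},
]}


def preorder(node):
    yield node["value"]
    for child in node["children"]:
        yield from preorder(child)


# Graph: adjacency list; breadth-first search finds a path with the fewest edges
graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}


def bfs_path(graph, start, goal):
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for neighbour in graph[path[-1]]:
            if neighbour not in seen:
                seen.add(neighbour)
                frontier.append(path + [neighbour])
    return None


print(linked_list_values(head), first, smallest)
print(list(preorder(tree)), bfs_path(graph, "A", "D"))
```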

learn about more things and how to implement them into projects.

correct mindset:

implementation and application > theory and concepts

knowing ≠ being able to do it

stay curious.

explore things outside of what is prescribed in a resource. that's how you learn about different concepts and how you deeply understand the concepts that you already know.

the best programmers they've met are the tinkerers. these are the people who play around with their code and try a bunch of different things.

getting stuck:

it all comes down to problem solving. be comfortable with not knowing things and staying calm while trying to figure out the problems

how to learn even faster:

find a community where you work on projects together. you will learn so many things from other experienced programmers just by interacting with them, and you get accountability because you just can't give up.

learning is never ending. you will always be learning something new.

12 notes

Text

How do I learn Python in depth?

Improving Your Python Skills

Basics of Writing Python Programs: Practice the fundamentals until they are solid.

Syntax and Semantics: Make sure you have a strong grasp of variables, data types, control flow, functions, and object-oriented programming.

Data Structures: Be able to work with lists, tuples, dictionaries, and sets, and know when to use which.

Modules and Packages: Study how to import and use built-in and third-party modules.

Advanced Concepts

Generators and Iterators: Know how to develop efficient iterators and generators for memory-efficient code.

Decorators: Learn how to dynamically alter functions using decorators.

Metaclasses: Understand how classes are created and can be customized.

Context Managers: Understand how context managers work with `with` statements.
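A minimal sketch of the four advanced concepts above in one file. The examples (a countdown generator, a call-counting decorator, a timing context manager, and a class-registering metaclass) are illustrative choices, not the only uses of these features:

```python
import time
from contextlib import contextmanager
from functools import wraps


# Generator: yields values lazily instead of building a whole list in memory
def countdown(n):
    while n > 0:
        yield n
        n -= 1


# Decorator: wraps a function to add behaviour (here, counting calls)
def count_calls(func):
    @wraps(func)  # keeps the wrapped function's __name__ and docstring
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


@count_calls
def greet(name):
    return f"Hello, {name}"


# Context manager: setup before the with-block, guaranteed cleanup after it
@contextmanager
def timer(label, results):
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


# Metaclass: customizes class creation (here, recording every new class name)
class Registry(type):
    classes = []

    def __new__(mcls, name, bases, namespace):
        cls = super().__new__(mcls, name, bases, namespace)
        mcls.classes.append(name)
        return cls


class Plugin(metaclass=Registry):
    pass


class CsvPlugin(Plugin):  # inherits the metaclass, so it is registered too
    pass


timings = {}
with timer("sum", timings):
    total = sum(countdown(1_000))  # the generator feeds sum() one value at a time
greet("Ada")
greet("Linus")
print(total, greet.calls, Registry.classes)
```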

Project Practice

Personal Projects: Work on projects that interest you, whether that means building a web application, a data analysis tool, or a game.

Contributing to Open Source: Contribute to open-source projects to learn from senior developers and get exposure to real-world code.

Online Challenges: Take part in coding challenges on HackerRank, LeetCode, or Project Euler.

Learn Various Libraries and Frameworks

Scientific Computing: NumPy, SciPy, Pandas

Data Visualization: Matplotlib, Seaborn

Machine Learning: Scikit-learn, TensorFlow, PyTorch

Web Development: Django, Flask

Data Processing and Pipelines: Dask, Airflow

Read Pythonic Code

Open Source Projects: Study the source code of a few popular Python projects. Go through their best practices and idiomatic Python.

Books and Tutorials: Read all the code examples in books and tutorials on Python.

Conferences and Workshops

Attend conferences and workshops that will help you further your skills in Python. PyCon is an annual Python conference that includes talks, workshops, and even networking opportunities. Local meetups will let you connect with other Python developers in your area.

Learn Continuously

Follow Blogs and Podcasts: Keep reading blogs and listening to podcasts that will keep you updated with the latest trends and developments taking place within the Python community.

Online Courses: Courses on advanced topics can deepen your understanding of Python.

Try It Yourself: Trying new techniques and libraries expands one's knowledge.

Other Recommendations

Readable, Clean Code: When writing code, it's essential to follow Python's style guide, PEP

It is also recommended to give variables and functions names that clearly describe how they are used.

Test Your Code: Unit tests will help in establishing the correctness of your code.

Coding with Others: Pair programming and code reviews let you learn from other developers' experience.

Don't Be Afraid to Ask for Help: Never hesitate to ask for help, whether from online communities or mentors, when a problem is beyond your experience.
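For the unit-testing advice, a minimal sketch with the standard `unittest` module; `slugify` is a hypothetical function standing in for your own code:

```python
import unittest


def slugify(title):
    """Hypothetical helper under test: lowercase a title and join words with hyphens."""
    return "-".join(title.lower().split())


class SlugifyTests(unittest.TestCase):
    def test_lowercases_and_hyphenates(self):
        self.assertEqual(slugify("Learn Python Fast"), "learn-python-fast")

    def test_collapses_extra_whitespace(self):
        self.assertEqual(slugify("  Data   Science "), "data-science")

    def test_empty_string(self):
        self.assertEqual(slugify(""), "")
```

Saved as, say, `test_slugify.py` (a hypothetical file name), the tests run with `python -m unittest test_slugify`. Each test checks one behaviour, so a failure points straight at what broke.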

These steps, along with consistent practice, will help you become proficient in Python development and open a wide range of possibilities in your career.

2 notes

Text

The Vital Art

When the human species began to master the molecular machinery that underlay its own existence, the first applications were thoroughly practical. It eventually became simple to engineer viruses that, injected into one's bloodstream, would go hunting on their own for pathogens and cancerous cells, and destroy them instance by instance with a thoroughness and ruthlessness that would make an inquisitor shudder. It was not unheard of, of course, that some of these viruses would escape control and become pathogens of their own, as any selfish mutant would necessarily enjoy an immense evolutionary advantage over its obedient brethren. But other waves of nano-cleaners would come to chastise the first, and it would be rare for more than one to rebel.

Bacteria and other such microbes would be tailor-made for all sorts of industrial applications. The cunning alchemies devised by four billion years of seething mutation and merciless selection could be gathered and placed together by the foresight of intelligence. Some would be sprayed over landfills and polluted rivers to break down plastic polymers, encase radioactive waste in glassy foam, strip polluting organochlorides of their flesh-warping powers. Others would swarm on metal structures and use the energy of sunlight to reverse the oxidation slowly eroding their beams; or sift through mining waste to concentrate and purify metals. It was possible to translate any message into a sequence of nucleotides, and to store them safely in bacterial spores, packing terabytes of data in a droplet of water. All had been carefully crafted so that they could never survive within animal bodies.

Thence it was hardly a leap to cultivate animal and vegetal cells in aquaria and petri dishes. It became trivial to grow any tissue from a single cell; and soon after, authentic giant panda meat was no more expensive than chicken breast. The basest mixture of organic matter, down to dead leaves raked from a yard, could be liquefied into nutrient broth, and sown with the seeds of a feast worthy of royal courts. Rumors were heard of wealthy eccentrics dining on their own projected and multiplied flesh. (Conveniently enough, large swathes of humanity agreed that raising animals for meat was a moral outrage only a few years after synthetic meat had become cheap and satisfactory; though not large enough to prevent meat breeds from surviving as pampered status symbols in isolated regions of the planet.)

Bio-artists managed to grow whole functional organs out of stem cells; and then linked them with artificial nerves and guts and blood vessels, giving life to minimal creatures, networks of interconnected glands lying in a collection of petri dishes. These could turn food into colored secretions or pleasant scents, baring every step of the process to the gawkers. Miniaturized versions were later enclosed in a smooth carapace and sold as decorations, such as living lanterns that could produce a warm firefly light for a few drops of nutritive solution. Designing self-sustaining systems that could perform such functions on a spoonful of sugar became a common assignment for schoolchildren.

Some bioart companies released all-purpose "basic creatures" into which decorative organs could be plugged and exchanged at will, so that the same pulsing fleshsac could nourish a cluster of multi-colored lights one day, musk glands with the scent of lavender and pine resin the next, then a chitinous carillon or a battery-recharging orifice. Subcultures made a game out of the collection of functional organs. This resulted, for a while, in unpleasant exchanges of pathogens; and many owners found expensive organs swollen and oozing with infections. Specialized antibiotic vials very quickly became an indispensable accessory.

All the arts of the animal and vegetal kingdoms were repurposed for human enjoyment. Cephalopod skins were grafted onto the manufactured creatures, and stimulated electrically so that pigmented cells would expand or contract as commanded, serving as biological pixels to display pictures and videos. Swarms of fabricated insects danced in the sky in evanescent shrouds, painting streaks of light with the glow of their own bodies. Worm-like ribbons were wrapped around Christmas trees, or around columns and lampposts during public holidays, to fill the air with the colors of their photophores, or festive stridulations; artificial syringes and gular sacs modeled after tree frogs and siamangs to produce songs of staggering beauty and complexity, with an organic, animal quality that no mechanical instrument nor human voice could have produced.

Synthetic pets came into demand, offering more flattery of human biophilic instincts in lieu of the cleanliness and efficiency of pet robots. They were built at first in imitation of slugs and shrimps (without unpleasant secretions, and built to withstand the manipulation of impatient children), then of birds and mammals. Soft textures, pleasant sounds and smells, endearing features were agreed upon in bioaesthetic committees, endlessly simulated in virtual ontogenesis, and finally translated into proprietary genetic code and packed into a convenient egg. Some, clean and sexless, were made for families that would feed them daily with patented formulas; others were made in less innocent places for less innocent purposes.

Brain-designing teams became accustomed to threading a very fine needle, creating minds that were developed enough to avoid most frustrations of pet-owning, without crossing the threshold that would grant them the same personhood and self-ownership granted in extremis to the last gorillas and elephants. Years of poring over the daedalus of neurons with the resources of industry and its hunger for results uncovered many secrets that would feed the next waves of the vital arts.

The following wonder was of course the return of recently extinct species, the delicate-hued passenger pigeon, the reptile-jawed Tasmanian wolf, the purple-cheeked orangutan. Century-old plans were fulfilled as ruddy herds of mammoths wandered once again the pale tundra, although they had to be relocated to a thawing Antarctica. Much clamor was raised by the announcement of restored dinosaurs, which were later revealed to have been manufactured out of modified emus and hoatzins. Still they enjoyed a great popularity, in increasingly bizarre forms, that eventually resembled more the drooling monsters of ancient movies than the breathing animals of the Mesozoic. They were joined by other false resurrections, the living effigies of clankering sea scorpions, wheezing proto-tetrapods, and gibbering australopithecines.

The orangutans enjoyed the greatest success of all resurrected creatures; they established a thriving population in the half-sunken ruins of a once-great megalopolis in Southeast Asia, whose surviving inhabitants had long since moved to floating swarms of pelagopoleis. For many decades the reborn apes could be sighted from the sea, sitting placidly under red shawls of fur, on the greening roofs and rusting pylons. Apparently jealous of their own new life, they disappeared quickly into the thickest brush, or into galleries believed to extend deep below the sea level of that time. Many fantastic conjectures were made about their secret existence, though nobody quite managed to probe it by force or deceit. Presumably, when the War came some three decades later, the resurrected orangutans fell for the second time into the chasm of extinction.

In the later phase, mammalian and even human brains were produced, some apparently capable of nervous activity. There were many ways to expose it to the world: in some cases it was translated into a musical codex; in others, the outer cortex was made translucent, and the flow of neurotransmitters could be seen as a warm-colored glow. A Museum of Qualia was briefly opened in a northern city, where one could experience the colors of distant longing, the textures of sexual rapture, the notes of filial love, and the taste of divine inspiration. It became simple, then, to induce the same sensations in natural brains, and make everyone into a poète maudit and a prophet of God.

Most synthetic brains were mercifully awash in endorphins for all their existence. But in one infamous case, a particularly mad artist had their farmed brain glow with neuronal activity in ways consistent with excruciating pain. The debates were fierce, on questions of both fact and morality, and after a few months the damned creature, if such it was, was disconnected from support and incinerated. Its luckier brethren followed it soon. The natural-born brains that had been stored and preserved in view of a future reanimation, which for the time trauma or decay had made impossible, were kept in storage as long as dutiful heirs or charitable organizations funded it, and not one minute longer.

This one scandal marked the zenith of popularity of the vital art, and from there it swiftly withdrew from public exaltation into the pockets of practicality -- food production, medicine, waste treatment -- where it could not be abandoned, and where people had long accepted it as normal and natural. People would still dine on vat-grown meat -- who but a savage would prefer another kind? -- and save their loved ones from death by any necessary means. But year after year, the breathing infrastructures were quietly dismantled or fell into disrepair, and the synthetic companions lost much of their appeal.

Many countries of the world banned the applications of the art that were not essential, and some of those that were. Quickly the wealth of volumes written on the manipulation of life became little more than a catalogue of past curiosities, a glimpse into the alien thoughts and values that so recently were the rule.

In the last few years before the War, new developments of the vital art only occurred in secret, in the laboratories of tyrants and revolutionaries, meant not for unscrupulous creation, but for exceedingly scrupulous destruction.

13 notes
