#ChatGPT-powered products

Explore tagged Tumblr posts

Text

The Art of ChatGPT Profit: Monetization Techniques for Financial Growth

How to make money with ChatGPT?

What is ChatGPT? ChatGPT is an advanced language model created by OpenAI. It is designed to generate human-like text in response to prompts. Powered by deep learning, it can hold natural, dynamic conversations, which makes it useful across many applications. ChatGPT can be used for a variety of purposes,…

#AI#chatbot platforms#ChatGPT#ChatGPT services#ChatGPT-powered products#consulting services#content creation#create a website#customer support tools#freelancer#Freelancing#language translation services#make money#make money with ChatGPT#marketing and selling#monetization#monetizing#monetizing AI#OpenAI#prompt engineering#prompts#Social media platforms#Virtual assistants

0 notes

Text

The Philosophy of Standpoint

The philosophy of standpoint, often referred to as standpoint theory, is a framework used primarily in feminist and critical theory to explore how knowledge and experience are shaped by social positions and power dynamics. It asserts that individuals' perspectives are influenced by their social and political contexts, particularly their positions within systems of power and oppression. Standpoint theory argues that marginalized or oppressed groups can offer unique and more accurate insights into social realities because their perspectives are shaped by experiences that are often overlooked or devalued by dominant groups.

Key Concepts:

Situated Knowledge: Standpoint theory posits that all knowledge is situated, meaning it is rooted in specific social contexts and power structures. This challenges the notion of objective, universal knowledge that is detached from the knower’s social identity.

Epistemic Privilege: A key idea in standpoint theory is that marginalized groups, because of their lived experiences of oppression, have an epistemic advantage in understanding certain aspects of social reality. For example, women might have a clearer understanding of gender relations than men because they experience gender-based oppression directly.

Standpoint as an Achievement: A standpoint is not simply given by one's social position but must be actively achieved through reflection, consciousness-raising, and collective struggle. This process involves critically examining and interpreting one's experiences within the broader social and political context.

Power and Knowledge: Standpoint theory emphasizes the relationship between power and knowledge production. It argues that dominant groups often shape what is considered legitimate knowledge, thereby marginalizing alternative perspectives that challenge the status quo.

Intersectionality: The philosophy of standpoint often draws on intersectionality, which recognizes that people experience oppression and privilege along multiple, overlapping axes (e.g., race, class, gender, sexuality). Standpoint theory therefore takes into account the complexity of identity and how it shapes one's perspective.

The philosophy of standpoint challenges traditional notions of objectivity and universal knowledge by asserting that our social positions deeply influence how we perceive and understand the world. By recognizing and valuing the perspectives of marginalized groups, standpoint theory seeks to expose and address systemic inequalities in knowledge production and societal structures. It invites a more inclusive and reflective approach to understanding social reality, where diverse voices are heard and respected.

#philosophy#epistemology#knowledge#learning#education#chatgpt#metaphysics#ontology#Standpoint Theory#Feminist Philosophy#Critical Theory#Social Epistemology#Situated Knowledge#Epistemic Privilege#Intersectionality#Power And Knowledge#Marginalized Perspectives#Philosophy Of Identity#Social Constructivism#Knowledge Production

4 notes


Text

AI-Powered Email Templates That Write Themselves: Because You Have Better Things to Do

Ah, email, the necessary evil of modern business. If you’re anything like most entrepreneurs, you’ve spent way too much time staring at a blinking cursor, trying to craft the perfect subject line that won’t get ignored faster than a “Hey girl” DM from an old high school acquaintance selling essential oils. We…

#AI business communication#AI content automation#AI customer service emails#AI email personalization#AI email tools#AI for business emails#AI for entrepreneurs#AI in sales emails#AI-assisted email strategy#AI-driven email sequences#AI-enhanced customer communication#AI-generated emails#AI-powered email templates#AI-powered lead nurturing#AI-powered outreach#automated email writing#automated follow-ups#business email efficiency#Business Growth#Business Strategy#ChatGPT email templates#Copy.ai email generation#email automation#email engagement optimization#email marketing automation#email productivity tools#Entrepreneur#Entrepreneurship#follow-up email AI#high-converting email templates

0 notes

Text

Quick Thoughts on Microsoft P⚙️wer Aut⚙️mate: A Review

Microsoft Power Automate and AI tools like ChatGPT and Claude are reshaping productivity. Dive into this practical review for insights and real-world examples!

What’s On My Mind Today?

Automation has become more than a buzzword—it’s a necessity. Microsoft Power Automate is a standout tool in this landscape, offering a way to design workflows that not only boost productivity but also serve as a foundation for more advanced automation down the line. After a brief dive into its interface and features, I’m struck by both its potential and its challenges.…

#AI tools#automation workflows#ChatGPT#Claude#creative automation#cross-platform synergy#dynamic workflows#enhanced collaboration#Ethical AI#Gemini#Microsoft AI#Microsoft Power Automate#Power Automate AI#productivity tools#scaling intelligence#Workflow Automation

0 notes

Text

Whether you're a beginner or looking to enhance your existing skills, AI training in Delhi provides a great opportunity to stay ahead in the digital world.

#ai chatbot#artificial intelligence#ai chatgpt#ai generated#ai art#AI Chatbot#AI Content Creation#AI for Email Marketing#AI for Freelancers#AI for Marketing#AI for Non-Techies#AI for Saving Time#AI for Small Business Owners#AI in Social Media Marketing#AI Marketing Essentials#AI Marketing for Beginners#AI Marketing Tips#AI Marketing Trends#AI Productivity Tools#AI SEO Strategies#AI Simplified for Beginners#AI Social Media Strategies#AI Tools for Everyone#AI Tools for Marketing#AI-Driven Analytics#AI-Generated Content#AI-Powered Campaigns#AI-Powered Success#Beginner Marketing Tips#Beginner’s Guide to AI

1 note


Text

The AI-Powered Creative Workflow: How the Creative TechnoStack is Shaping the Future of Creativity

Discover how an AI-Powered Creative Workflow is transforming the way creators work! Learn how the Creative TechnoStack blends AI and traditional tools to shape the future of creativity. Ready to unlock your full potential? Dive in now!

Unlocking the AI-Powered Creative Workflow: The Rise of the Creative Technomancer

Creativity is entering a bold new era—one where human ingenuity is supercharged by artificial intelligence, and the boundaries between artistic disciplines blur into seamless, multimedia experiences. Just as software developers pioneered the concept of full-stack to describe those who could manage both front-end…

#11Labs#Adobe Creative Suite#AI tools for creators#AI-powered workflows#Automation in creative projects#ChatGPT#Creative collaboration#Creative innovation#Creative TechnoStack#Digital creativity#Full-stack creator#Future of creativity#Graeme Smith#MidJourney#Multimedia production#thisisgraeme#Udio

0 notes

Text

sun tumble - Copper Vacuum Insulated Tumbler

Stay hydrated in style on a daily basis with this personalized tumbler. With a 22oz capacity and copper vacuum insulation, our tumbler keeps your favorite beverages refreshingly cold for 24 hours, and soothingly hot for up to 6. Its stainless steel exterior is condensation-resistant while the powder coating adds extra style points.

https://sunlight-store.printify.me/product/12827451/sun-tumble-copper-vacuum-insulated-tumbler-22oz

#22oz#You said:#ChatGPT said:#please let me know!#on a trip#or in the car#His words are key to the sun and nature.#To add sun and nature to the product's keynote#To create keywords that reflect nature#Harmony of nature#fresh air#forest quiet#warmth of the sun#Earth power#Beach relaxation#beauty of mountains#Morning breeze#Wildlife#Green Valleys#Solar energy#The splendor of the sky

1 note


Text

ChatGPT Goes Desktop: Powerful AI Assistant Now Has a Mac App!

📖To read more visit here🌐🔗: https://onewebinc.com/news/chatgpt-releases-desktop-app-for-mac/

#AI#artificialintelligence#machinelearning#tech#technology#future#ChatGPT#OpenAI#AIassistant#desktop#MacApp#productivity#powerful#beta#earlyaccess#getyourhandsdirty#futureofwork#letsgo#gamechanger#innovation#wheresthefutureisheaded

0 notes

Text

Visit our website https://linktr.ee/Bodycareandhealth to explore our full range of natural health products and place your order today. Feel free to reach out to our friendly customer service team if you have any questions or need assistance.

#User#Send a message about natural health products to customers in English#ChatGPT#Subject: Discover our Natural Health Products!#Dear Valued Customers#We are excited to introduce our range of natural health products that are designed to promote wellness and vitality. At [Your Company Name]#we believe in the power of nature to nurture and enhance our well-being.#Our products are carefully crafted using high-quality#natural ingredients sourced from trusted suppliers. From herbal supplements to organic skincare#we offer a variety of options to support your health journey.#Here are some highlights from our collection:#Herbal Supplements: Boost your immune system#improve digestion#and enhance your overall health with our range of herbal supplements.#Organic Skincare: Pamper your skin with our gentle and nourishing skincare products made from organic botanicals and essential oils.#Nutritious Snacks: Fuel your body with wholesome snacks that are free from artificial additives and preservatives.#Herbal Teas: Relax and unwind with our selection of herbal teas#carefully blended to soothe the mind and body.#Whether you're looking to maintain your health or address specific concerns#we have something for everyone. Plus#all of our products are backed by our commitment to quality and customer satisfaction.#Visit our website [Your Website URL] to explore our full range of natural health products and place your order today. Feel free to reach ou#Thank you for choosing [Your Company Name] for your health and wellness needs. Here's to a happier#healthier you!#Warm regards#[Your Name]#[Your Title/Position]#[Your Contact Information]#Translate to Arabic#Subject: Discover our natural health products!

1 note


Text

Discover the most effective ChatGPT forms that streamline your work, making tasks easier and faster. Unlock the potential of ChatGPT to enhance productivity and efficiency in your workflow.

#ChatGPT forms#Productivity tools#Work efficiency#Streamlined workflow#Time-saving solutions#Task optimization#AI-powered tools#Easy work solutions#Fast work processes#ChatGPT applications

0 notes

Text

Google’s Upcoming AI Software Gemini Nears Release

Google, part of Alphabet, has granted access to an early version of Gemini, its conversational AI programme, to a select number of businesses.

According to the source, Gemini is meant to compete with OpenAI’s GPT-4 model.

The debut of Gemini holds a lot of significance for Google. As it attempts to catch up following Microsoft-backed OpenAI’s unveiling of ChatGPT last year, which rocked the tech world, Google has increased its spending on generative AI this year.

According to the reports, Gemini is a group of large language models that power everything from chatbots to tools that either summarise material or generate fresh content based on what users want to read, such as email drafts, song lyrics, or news articles.

It is also expected to help software developers write code and to generate original images based on user requests.

According to the publication, Google is developing a larger version of Gemini that would be more comparable to GPT-4, but it has not yet made it accessible to developers.

Through its Google Cloud Vertex AI service, the leading search and advertising company intends to make Gemini accessible to businesses.

This month, the company added generative AI to its Search feature for customers in India and Japan, which will reply to inputs with text or visual results, including summaries. Additionally, it offers corporate clients access to its AI-powered solutions for $30 per user each month.

Source: ANI

Follow Digital Fox Media for the latest technology news.

0 notes

Text

AI turns Amazon coders into Amazon warehouse workers

HEY SEATTLE! I'm appearing at the Cascade PBS Ideas Festival NEXT SATURDAY (May 31) with the folks from NPR's On The Media!

On a recent This Machine Kills episode, guest Hagen Blix described the ultimate form of "AI therapy" with a "human in the loop":

https://soundcloud.com/thismachinekillspod/405-ai-is-the-demon-god-of-capital-ft-hagen-blix

One actual therapist is just having ten chat GPT windows open where they just like have five seconds to interrupt the chatGPT. They have to scan them all and see if it says something really inappropriate. That's your job, to stop it.

Blix admits that's not where therapy is at…yet, but he references Laura Preston's 2023 N Plus One essay, "HUMAN_FALLBACK," which describes her work as the backstop to a real-estate "virtual assistant" that masqueraded as a human. Preston handled the queries that confused it, in a bid to keep the customers from figuring out that they were engaging with a chatbot:

https://www.nplusonemag.com/issue-44/essays/human_fallback/

This is what makes investors and bosses slobber so hard for AI – a "productivity" boost that arises from taking away the bargaining power of workers so that they can be made to labor under worse conditions for less money. The efficiency gains of automation aren't just about using fewer workers to achieve the same output – it's about the fact that the workers you fire in this process can be used as a threat against the remaining workers: "Do your job and shut up or I'll fire you and give your job to one of your former colleagues who's now on the breadline."

This has been at the heart of labor fights over automation since the Industrial Revolution, when skilled textile workers took up the Luddite cause because their bosses wanted to fire them and replace them with child workers snatched from Napoleonic War orphanages:

https://pluralistic.net/2023/09/26/enochs-hammer/#thats-fronkonsteen

Textile automation wasn't just about producing more cloth – it was about producing cheaper, worse cloth. The new machines were so easy a child could use them, because that's who was using them – kidnapped war orphans. The adult textile workers the machines displaced weren't afraid of technology. Far from it! Weavers used the most advanced machinery of the day, and apprenticed for seven years to learn how to operate it. Luddites had the equivalent of a Masters in Engineering from MIT.

Weavers' guilds presented two problems for their bosses: first, they had enormous power, thanks to the extensive training required to operate their looms; and second, they used that power to regulate the quality of the goods they made. Even before the Industrial Revolution, weavers could have produced more cloth at lower prices by skimping on quality, but they refused, out of principle, because their work mattered to them.

Now, of course weavers also appreciated the value of their products, and understood that innovations that would allow them to increase their productivity and make more fabric at lower prices would be good for the world. They weren't snobs who thought that only the wealthy should go clothed. Weavers had continuously adopted numerous innovations, each of which increased the productivity and the quality of their wares.

Long before the Luddite uprising, weavers had petitioned factory owners and Parliament under the laws that guaranteed the guilds the right to oversee textile automation to ensure that it didn't come at the price of worker power or the quality of the textiles the machines produced. But the factory owners and their investors had captured Parliament, which ignored its own laws and did nothing as the "dark, Satanic mills" proliferated. Luddites only turned to property destruction after the system failed them.

Now, it's true that eventually, the machines improved and the fabric they turned out matched and exceeded the quality of the fabric that preceded the Industrial Revolution. But there's nothing about the way the Industrial Revolution unfolded – increasing the power of capital to pay workers less and treat them worse while flooding the market with inferior products – that was necessary or beneficial to that progress. Every other innovation in textile production up until that time had been undertaken with the cooperation of the guilds, who'd ensured that "progress" meant better lives for workers, better products for consumers, and lower prices. If the Luddites' demands for co-determination in the Industrial Revolution had been met, we might have gotten to the same world of superior products at lower costs, but without the immiseration of generations of workers, mass killings to suppress worker uprisings, and decades of defective products being foisted on the public.

So there are two stories about automation and labor: in the dominant narrative, workers are afraid of the automation that delivers benefits to all of us, stand in the way of progress, and get steamrollered for their own good, as well as ours. In the other narrative, workers are glad to have boring and dangerous parts of their work automated away and happy to produce more high-quality goods and services, and stand ready to assess and plan the rollout of new tools, and when workers object to automation, it's because they see automation being used to crush them and worsen the outputs they care about, at the expense of the customers they care for.

In modern automation/labor theory, this debate is framed in terms of "centaurs" (humans who are assisted by technology) and "reverse-centaurs" (humans who are conscripted to assist technology):

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

There are plenty of workers who are excited at the thought of using AI tools to relieve them of some drudgework. To the extent that these workers have power over their bosses and their working conditions, that excitement might well be justified. I hear a lot from programmers who work on their own projects about how nice it is to have a kind of hypertrophied macro system that can generate and tweak little automated tools on the fly so the humans can focus on the real, chewy challenges. Those workers are the centaurs, and it's no wonder that they're excited about improved tooling.

But the reverse-centaur version is a lot darker. The reverse-centaur coder is an assistant to the AI, charged with being a "human in the loop" who reviews the material that the AI produces. This is a pretty terrible job to have.

For starters, the kinds of mistakes that AI coders make are the hardest mistakes for human reviewers to catch. That's because LLMs are statistical prediction machines, spicy autocomplete that works by ingesting and analyzing a vast corpus of written materials and then producing outputs that represent a series of plausible guesses about which words should follow one another. To the extent that the reality the AI is participating in is statistically smooth and predictable, AI can often make eerily good guesses at words that turn into sentences or code that slot well into that reality.

But where reality is lumpy and irregular, AI stumbles. AI is intrinsically conservative. As a statistically informed guessing program, it wants the future to be like the past:

https://reallifemag.com/the-apophenic-machine/

This means that AI coders stumble wherever the world contains rough patches and snags. Take "slopsquatting." For the most part, software libraries follow regular naming conventions. For example, there might be a series of text-handling libraries with names like "text.parsing.docx," "text.parsing.xml," and "text.parsing.markdown." But for some reason – maybe two different projects were merged, or maybe someone was just inattentive – there's also a library called "text.txt.parsing" (instead of "text.parsing.txt").

AI coders are doing inference based on statistical analysis, and anyone inferring what the .txt parsing library is called would guess, based on the other libraries, that it was "text.parsing.txt." And that's what the AI guesses, and so it tries to import that library to its software projects.

This creates a new security vulnerability, "slopsquatting," in which a malicious actor creates a library with the expected name, which replicates the functionality of the real library, but also contains malicious code:

https://www.theregister.com/2025/04/12/ai_code_suggestions_sabotage_supply_chain/
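One way to picture a defence against this is a minimal sketch, assuming a project keeps a vetted list of dependency names and checks every requested import against it. The library names are the hypothetical ones from the example above, and `VETTED` and `audit_imports` are illustrative names, not part of any real tool:

```python
import difflib

# Hypothetical vetted dependency list, using the made-up library names
# from the example above (not real packages).
VETTED = {
    "text.parsing.docx",
    "text.parsing.xml",
    "text.parsing.markdown",
    "text.txt.parsing",  # the irregularly named real library
}


def audit_imports(requested, vetted=VETTED):
    """Map each requested name that is NOT on the vetted list
    to the vetted names it most closely resembles."""
    findings = {}
    for name in requested:
        if name not in vetted:
            findings[name] = difflib.get_close_matches(
                name, sorted(vetted), n=3, cutoff=0.6
            )
    return findings


# The plausible-but-wrong guess is flagged; the real library passes.
suspicious = audit_imports(["text.parsing.xml", "text.parsing.txt"])
for name, near in suspicious.items():
    print(f"unvetted dependency {name!r}; similar vetted names: {near}")
```

A check like this only catches names that were never vetted. It says nothing about whether a vetted library is itself safe, and it still depends on someone maintaining the list.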

Note that slopsquatting errors are extremely hard to spot. As is typical with AI coding errors, these are errors that are based on continuing a historical pattern, which is the sort of thing our own brains do all the time (think of trying to go up a step that isn't there after climbing to the top of a staircase). Notably, these are very different from the errors that a beginning programmer whose work is being reviewed by a more senior coder might make. These are the very hardest errors for humans to spot, and these are the errors that AIs make the most, and they do so at machine speed:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

To be a human in the loop for an AI coder, a programmer must engage in sustained, careful, line-by-line and command-by-command scrutiny of the code. This is the hardest kind of code to review, and maintaining robotic vigilance over long periods at high speeds is something humans are very bad at. Indeed, it's the kind of task we try very hard to automate, since machines are much better at being machinelike than humans are. This is the essence of reverse-centaurism: when a human is expected to act like a machine in order to help the machine do something it can't do.

Humans routinely fail at spotting these errors, unsurprisingly. If the purpose of automation is to make superior goods at lower prices, then this would be a real concern, since a reverse-centaur coding arrangement is bound to produce code with lurking, pernicious, especially hard-to-spot bugs that present serious risks to users. But if the purpose of automation is to discipline labor – to force coders to accept worse conditions and pay – irrespective of the impact on quality, then AI is the perfect tool for the job. The point of the human isn't to catch the AI's errors so much as it is to catch the blame for the AI's errors – to be what Madeleine Clare Elish calls a "moral crumple zone":

https://estsjournal.org/index.php/ests/article/view/260

As has been the case since the Industrial Revolution, the project of automation isn't just about increasing productivity, it's about weakening labor power as a prelude to lowering quality. Take what's happened to the news industry, where mass layoffs are being offset by AI tools. At Hearst's King Features Syndicate, a single writer was charged with producing over 30 summer guides, the entire package:

https://www.404media.co/viral-ai-generated-summer-guide-printed-by-chicago-sun-times-was-made-by-magazine-giant-hearst/

That is an impossible task, which is why the writer turned to AI to do his homework, and then, infamously, published a "summer reading guide" that was full of nonexistent books that were hallucinated by a chatbot:

https://www.404media.co/chicago-sun-times-prints-ai-generated-summer-reading-list-with-books-that-dont-exist/

Most people reacted to this story as a consumer issue: they were outraged that the world was having a defective product foisted upon it. But the consumer issue here is downstream from the labor issue: when the writers at King Features Syndicate are turned into reverse-centaurs, they will inevitably produce defective outputs. The point of the worker – the "human in the loop" – isn't to supervise the AI, it's to take the blame for the AI. That's just what happened, as this poor schmuck absorbed an internet-sized rasher of shit flung his way by outraged social media users. After all, it was his byline on the story, not the chatbot's. He's the moral crumple-zone.

The implication of this is that consumers and workers are class allies in the automation wars. The point of using automation to weaken labor isn't just cheaper products – it's cheaper, defective products, inflicted on the unsuspecting and defenseless public who are no longer protected by workers' professionalism and pride in their jobs.

That's what's going on at Duolingo, where CEO Luis von Ahn created a firestorm by announcing mass firings of human language instructors, who would be replaced by AI. The "AI first" announcement pissed off Duolingo's workers, of course, but what caught von Ahn off-guard was how much this pissed off Duolingo's users:

https://tech.slashdot.org/story/25/05/25/0347239/duolingo-faces-massive-social-media-backlash-after-ai-first-comments

But of course, this makes perfect sense. After all, language-learners are literally incapable of spotting errors in the AI instruction they receive. If you spoke the language well enough to spot the AI's mistakes, you wouldn't need Duolingo! I don't doubt that there are countless ways in which AIs could benefit both language learners and the Duolingo workers who develop instructional materials, but for that to happen, workers' and learners' needs will have to be the focus of AI integration. Centaurs could produce great language learning materials with AI – but reverse-centaurs can only produce slop.

Unsurprisingly, many of the most successful AI products are "bossware" tools that let employers monitor and discipline workers who've been reverse-centaurized. Both blue-collar and white-collar workplaces have filled up with "electronic whips" that monitor and evaluate performance:

https://pluralistic.net/2024/08/02/despotism-on-demand/#virtual-whips

AI can give bosses "dashboards" that tell them which Amazon delivery drivers operate their vehicles with their mouths open (Amazon doesn't let its drivers sing on the job). Meanwhile, a German company called Celonis will sell your boss a kind of AI phrenology tool that assesses your "emotional quality" by spying on you while you work:

https://crackedlabs.org/en/data-work/publications/processmining-algomanage

Tech firms were among the first and most aggressive adopters of AI-based electronic whips. But these whips weren't used on coders – they were reserved for tech's vast blue-collar and contractor workforce: clickworkers, gig workers, warehouse workers, AI data-labelers and delivery drivers.

Tech bosses tormented these workers but pampered their coders. That wasn't out of any sentimental attachment to tech workers. Rather, tech bosses were afraid of tech workers, because tech workers possess a rare set of skills that can be harnessed by tech firms to produce gigantic returns. Tech workers have historically been princes of labor, able to command high salaries and deferential treatment from their bosses (think of the amazing tech "campus" perks), because their scarcity gave them power.

It's easy to predict how tech bosses would treat tech workers if they could get away with it – just look how they treat workers they aren't afraid of. Just like the textile mill owners of the Industrial Revolution, the thing that excites tech bosses about AI is the possibility of cutting off a group of powerful workers at the knees. After all, it took more than a century for strong labor unions to match the power that the pre-Industrial Revolution guilds had. If AI can crush the power of tech workers, it might buy tech bosses a century of free rein to shift value from their workforce to their investors, while also doing away with pesky Tron-pilled workers who believe they have a moral obligation to "fight for the user."

William Gibson famously wrote, "The future is here, it's just not evenly distributed." The workers that tech bosses don't fear are living in the future of the workers that tech bosses can't easily replace.

This week, the New York Times's veteran Amazon labor reporter Noam Scheiber published a deeply reported piece about the experience of coders at Amazon in the age of AI:

https://www.nytimes.com/2025/05/25/business/amazon-ai-coders.html

Amazon CEO Andy Jassy is palpably horny for AI coders, evidenced by investor memos boasting of AI's returns in "productivity and cost avoidance" and pronouncements about AI saving "the equivalent of 4,500 developer-years":

https://www.linkedin.com/posts/andy-jassy-8b1615_one-of-the-most-tedious-but-critical-tasks-activity-7232374162185461760-AdSz/

Amazon is among the most notorious abusers of blue-collar labor, the workplace where everyone who doesn't have a bullshit laptop job is expected to piss in a bottle and spend an unpaid hour before and after work going through a bag- and body-search. Amazon's blue-collar workers are under continuous, totalizing, judging AI scrutiny that scores them based on whether their eyeballs are correctly oriented, whether they take too long to pick up an object, whether they pee too often. Amazon warehouse workers are injured at three times the national average. Amazon AIs scan social media for disgruntled workers talking about unions, and Amazon has another AI tool that predicts which shops and departments are most likely to want to unionize.

Scheiber's piece describes what it's like to be an Amazon tech worker who's getting the reverse-centaur treatment that has heretofore been reserved for warehouse workers and drivers. They describe "speedups" in which they are moved from writing code to reviewing AI code, their jobs transformed from solving chewy intellectual puzzles to racing to spot hard-to-find AI coding errors as a clock ticks down. Amazon bosses haven't ordered their tech workers to use AI, just raised their quotas to a level that can't be attained without getting an AI to do most of the work – just like the Chicago Sun-Times writer who was expected to write all 30 articles in the summer guide package on his own. No one made him use AI, but he wasn't going to produce 30 articles on deadline without a chatbot.

Amazon insists that it is treating AI as an assistant for its coders, but the actual working conditions make it clear that this is a reverse-centaur transformation. Scheiber discusses a dissident internal group at Amazon called Amazon Employees for Climate Justice, who link the company's use of AI to its carbon footprint. Beyond those climate concerns, these workers are treating AI as a labor issue.

Amazon's coders have been making tentative gestures of solidarity towards its blue-collar workforce since the pandemic broke out, walking out in support of striking warehouse workers (and getting fired for doing so):

https://pluralistic.net/2020/04/14/abolish-silicon-valley/#hang-together-hang-separately

But those firings haven't deterred Amazon's tech workers from making common cause with their comrades on the shop floor:

https://pluralistic.net/2021/01/19/deastroturfing/#real-power

When techies describe their experience of AI, it sometimes sounds like they're describing two completely different realities – and that's because they are. For workers with power and control, automation turns them into centaurs, who get to use AI tools to improve their work-lives. For workers whose power is waning, AI is a tool for reverse-centaurism, an electronic whip that pushes them to work at superhuman speeds. And when they fail, these workers become "moral crumple zones," absorbing the blame for the defective products their bosses pushed out in order to goose profits.

As ever, what a technology does pales in comparison to who it does it for and who it does it to.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/05/27/rancid-vibe-coding/#class-war

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

328 notes

Text

the decline of human creativity in the form of the rise of AI-generated writing is baffling to me. In my opinion, writing is one of the easiest art forms. You just have to learn a couple of very basic things (that are mostly taught in school, i.e., sentence structure and grammar, amongst other things like comprehension and reading) and then expand upon that foundational knowledge. You can look up words for free; there are resources upon resources for those who may not be able to afford books, whether physical or digital. AI has never made art more accessible. It has only ever made the production of art (whether sonic, visual, or written) cheap and nasty, and it’s taken away arguably the most important thing about art: the self-expression and the humanity of it. AI will never replace real artists, musicians, and writers, because the main point of music and drawing and poetry is to evoke human emotion. How is a robot meant to simulate that? It can’t. Robots don’t experience human emotions. They experience nothing. They’re only destroying our planet: the average 100-word ChatGPT response consumes 519 millilitres of water, which is roughly 2.2 US cups. Which, no, on the scale of one person, doesn’t seem like a lot. But according to OpenAI's chief operating officer, ChatGPT has 400 million weekly users and plans on hitting 1 billion users by the end of this year (2025). If every one of those 400 million people received a single 100-word response from ChatGPT, that would mean more than 800 MILLION cups of water have gone to waste to cool down the delicate circulatory system that powers these AI machines. With the current climate crisis? That’s only going to impact our already fragile and heating-up earth.

but no one wants to talk about those stats. But it is the reality of the situation: a 1 MW data centre can, and most likely does, use up to 25.5 MILLION litres of water a year for cooling. Google's data centres alone used over 21 BILLION litres of fresh water in 2022. God only knows how much that number has risen since. There are projections that 6.6 BILLION cubic meters could be used up by AI centres by 2027.

not only is AI making you stupid, but it’s killing you and your planet. Be AI conscious and avoid it as much as humanly possible.
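For anyone who wants to check the water arithmetic above, here is a minimal sketch. It takes the post's own figures (519 millilitres per 100-word response, 400 million weekly users) at face value and assumes exactly one response per user; the function names and the two cup sizes (the US customary cup and the 240 mL US "legal" cup) are illustrative choices, not something from the original sources.

```python
# Figures quoted in the post, taken at face value (not independently verified).
ML_PER_RESPONSE = 519            # millilitres of water per 100-word response
WEEKLY_USERS = 400_000_000       # weekly users, assuming one response each

# Cup sizes in millilitres (assumption: which "cup" is meant is not stated).
US_CUSTOMARY_CUP_ML = 236.5882365   # US customary cup
US_LEGAL_CUP_ML = 240.0             # US "legal" cup used on nutrition labels


def ml_to_cups(ml: float, cup_ml: float) -> float:
    """Convert millilitres to cups for the given cup size."""
    return ml / cup_ml


def total_water_ml(ml_per_response: float, responses: int) -> float:
    """Total water in millilitres for a given number of responses."""
    return ml_per_response * responses


if __name__ == "__main__":
    total_ml = total_water_ml(ML_PER_RESPONSE, WEEKLY_USERS)
    print(f"Per response: {ml_to_cups(ML_PER_RESPONSE, US_CUSTOMARY_CUP_ML):.2f} customary cups")
    print(f"Per response: {ml_to_cups(ML_PER_RESPONSE, US_LEGAL_CUP_ML):.2f} legal cups")
    print(f"Total: {total_ml / 1000:,.0f} litres")
    print(f"Total: {ml_to_cups(total_ml, US_LEGAL_CUP_ML):,.0f} legal cups")
```

Under those assumptions the total comes to about 207.6 million litres, or roughly 865 million 240 mL cups, which is in line with the "800 million, if not more" estimate.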

#thoughts#diary#rambles#ramblings#diary blog#digital diary#anti ai#i hate ai#writers of tumblr#writeblr#my writing#writblr#art moots#oc artist#oc artwork#original art#artists on tumblr#musings#leftist#writer stuff#female writers#writing#writers on tumblr#writers and poets#creative writing#writerscommunity#looking for moots#looking for friends#anti intellectualism

244 notes

Text

Even though I know it’s all intentional, I truly hate how we’ve been forced to normalize AI. I do think that Artificial Intelligence was not created with malicious intent and has the capability to actually do good, but time and time again AI is being used in literally everything for the worst reasons, and it’s getting harder to escape.

From AI being used to scrape people’s hard work all over the internet, to giving predators and abusers more power in fabricating porn of strangers, to being used to strengthen racial bias in surveillance technology and aid in the development of weapons of war and mass destruction against marginalized groups of people…it’s just too fucking much. It’s so exhausting wanting to live in a world where we just didn’t need or have any of this shit, and it wasn’t like this a few years ago either. But now you can’t step outside without seeing something about AI, or a promotional ad for a new system to install. You can’t engage online anywhere without coming across AI software, and literally every single device in our present day implements AI to some degree, and it’s so fucking annoying.

I don’t want to keep worrying about the next idiot that’s spoon feeding my work into their AI system because they lack humanity and imagination. I don’t want to have to manually turn off AI detection on all of my apps and my phone just to use something. I shouldn’t have to be more mindful about the media I consume to distinguish whether or not it’s original or just more AI slop. I know it’s all intentional since we live in a hyper-capitalist world that cares more about profit margins & rapid productivity. But I really do vehemently hate how artificial intelligence has become such a fundamental aspect of our day to day lives when all it does is make the general population dumber and less capable of thinking for themselves.

Sincerely fuck AI. And if you use AI, I really do suggest you read up on how the data centers built to manage these AI systems suck up all of our resources for a simple prompt input. Who cares about answering a question in ChatGPT? Entire communities don’t have water because it’s going to cool down the servers where people ask what 6 + 10 is, cause their brains are so fried they can’t fire a single fucking neuron.

#fuck ai#and fuck everyone that uses it idc#it’s so hard being a creative and wanting original work when there’s ai slop everywhere#please just burn it all to the ground#enough of that bullshit you do not need a smart fridge with a touchscreen and ai built into it#its all just another form of state surveillance advertised as convenience it’s not normal#when you’re mindless sheep you’re easier to manipulate remember that#the way I work in the legal field and I hear my bosses talk about using AI to read case briefs is crazy#we live in the bad place

112 notes

Text

𝐲𝐨𝐮𝐫 𝐞𝐫𝐚 𝐛𝐞𝐠𝐢𝐧𝐬 𝐡𝐞𝐫𝐞

hello, loves. i’m livia wildrose. this is the master list: your ultimate blueprint for leveling up and becoming the most magnetic, powerful version of yourself.

this space is all about growth, glow-ups, and stepping into the dream life we know we’re destined for. we’re here to rewrite the rules, show the world our worth, and manifest every single thing we’ve ever dreamed of. because let’s be real, it’s all within reach.

together, we’re unlocking the tools, tips, and mindset shifts to turn our wildest fantasies into reality. it’s not just about leveling up, it’s about creating a life so good it feels like magic. you’re not just existing, you’re thriving, glowing, and unapologetically owning your power.

this is your go-to guide for self-discovery, confidence, manifestation, and living like the absolute star you are. because you deserve it, we deserve it and the world is about to know it.

to understand why i’m starting this journey, read my story (get to know me)

series

daily domination

an everyday blog to keep myself accountable and productive with all my life choices and routines to meet all my highest goals

foundations of glow up

first steps

this is the “how to begin” for anyone starting their glow-up. mental shifts, alter ego creation, and the mindset needed to elevate.

mindmovie

another foundational piece that ties into mental reprogramming. how to visualize and use mind movies to unlock your subconscious potential.

rebrand yourself

it’s time to level up to the next version of yourself and unlock a new level of success, growth and achievement.

self improvement

glow up enhancing

tools, tips, and tech to enhance the glow-up process. using subliminals, ChatGPT, and other strategies to get even better.

game of life

your mindset hack. the game of life mentality that can practically shift someone’s whole existence and approach to their goals.

become your own mother

this feels like a huge empowerment piece. self-love, nurturing yourself, and stepping into your own power.

take yourself seriously

it’s like owning yourself truly and completely, crafting and taking control of your body, mind, soul and your ultimate destiny and life.

witchcraft & spirituality

egg cleanse

cleanse to remove any negative energy or blockages. how to perform the egg cleanse ritual and clear out any stagnant energy in your life.

kundalini

deep dive into spiritual practices and energy work. how activating your Kundalini can push you toward your next level.

your energy is sacred

keep your energy exclusive to make sure that you are thriving, and use your energy for better purposes

letters and updates

a love letter

a life update and an appreciation letter to you guys for supporting me so much, i can’t express enough how much i love you guys

routine

hair care routine

a routine to make sure you have that healthy, strong and long hair (this is also the hair care routine i follow) you can take inspiration and tweak it accordingly.

my results and recommendations

#dream life#empowerment#girlblogging#levelling up#manifestation#manifesting#aesthetic#flowers#love#witchblr#witchcraft#witch#pagan witch#level up#dream lifestyle#higher self#becoming that girl#that girl#it girl#gaslight gatekeep girlboss#tumblr girls#girlhood#just girlboss things#pink pilates princess#happiness#liviawildrose.#subliminal#subliminals#self care#self love

141 notes

Text

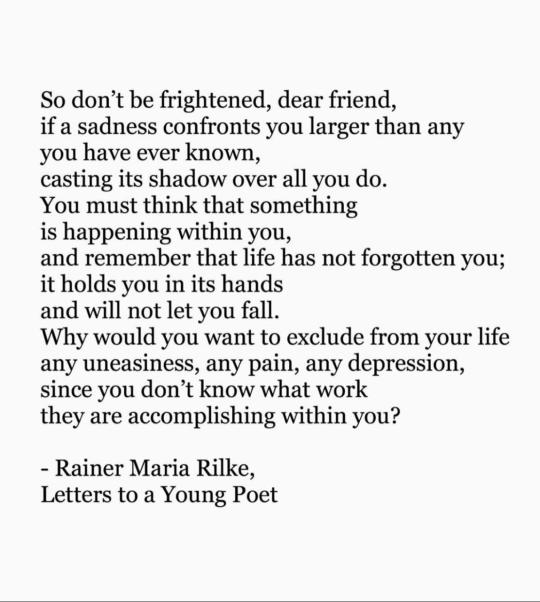

DAY 6273

Jalsa, Mumbai Apr 19, 2025/Apr 20 Sat/Sun 1:12 am

words of wisdom from Shweta , sent to me 👆🏼 .. her repertoire of books and reading is immense .. as is of Navya .. it is such a delight to sit in their presence and company and be educated about aspects of life and the world .. it is astonishing ..

And that is what eats us elders up .. there is the desire to do and be a part of what this Gen thinks and does .. but the limitations of time barricade the impulse and the intent ..

Life did not move so rapidly in our time or in the time of our elders .. now the rapidity is so volatile that the wishes to accomplish all is overwhelming .. you do not want to miss out a single day, in case you miss something that you had wished you would want to do, or be a part of ..

The excitement of what the World does today and the speed with which it does it is unbelievably attractive .. and one wants so desperately to be a part of it .. or many parts of it .. but time is not with us .. at our age we lament the time .. and we feel let down .. for the wish to be having the time to be involved , to test, to apply, to discover, to invent, to admire and enjoy that spectacle .. is huge ..

But the hugeness hits a wall .. the timeless wall of limitations ..

"I bought a watch and strapped it to my wrist, that is all;

and time has been chasing me ever since ~ "

words of revered Babuji

My wishes as ever .. and the great battle of invention that percolates our lives persists to be in its excel ..

The excel of AI ..

So, I asked and it replied :

"Artificial Intelligence (AI) is the simulation of human intelligence in machines designed to think, learn, and make decisions. It powers technologies like voice assistants, recommendation systems, and autonomous vehicles. AI works through algorithms that process data, recognize patterns, and improve over time through machine learning. It is transforming industries by automating tasks, enhancing productivity, and enabling innovative solutions. From healthcare diagnostics to personalized education, AI’s potential is vast. However, it also raises ethical concerns about bias, privacy, and job displacement. As AI continues to evolve, balancing innovation with responsibility will be key to ensuring it benefits society as a whole."

The clarity of social implications is a massive debate , engaging thinkers and artists and writers and indeed all creative talent ..

For the creative content of a writer are his words .. and if the AI data bank consumes that , as a part of a legacy to be maintained over time infinity, it can be used by ChatGPT to refer or use that extract for its personalised usage .. making it the property of ChatGPT ... NOT the property of the writers or the artists, from where it originally came ..

So the copyright of the artist has been technically 'stolen' , and he or she never gets the benefit of its copyright, when GPT uses it for its presence .. !!!!

The true value of an artist's creation will never be restored to his credit, because technology usurps it .. gulps it down deliciously , with an aerated drink and finalising its consumption with a belch 😜🤭 ... END OF CHAPTER !!!

End of discussion .. !!!

In time there shall be much to be heard and written on the subject ..

Each invention provides benefits .. but also victims ..

One person creates - and someone else reaps the benefit, someone who never even made it

Love

Amitabh Bachchan

102 notes
