#probabilistic language processing

Text

Ask A Genius 1255: Total Crap

Scott Douglas Jacobsen: I found an article in Mother Jones about AI becoming as powerful as human cognition by 2025 worth reading. The article is from 2013. The article was scaled up in search results somehow. Some parts of it could have been written ten years later. It uses Moore’s law from 2013 to predict that the number of calculations per second in the fastest computers will eventually be on…

#AI cognition limits#iterative AI integration#probabilistic language processing#Rick Rosner#Scott Douglas Jacobsen

0 notes

Text

Bayesian Active Exploration: A New Frontier in Artificial Intelligence

The field of artificial intelligence has seen tremendous growth and advancements in recent years, with various techniques and paradigms emerging to tackle complex problems in the field of machine learning, computer vision, and natural language processing. Two of these concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although both techniques have been researched separately, their synergy has the potential to revolutionize AI by creating more efficient, accurate, and effective systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without actively influencing the data collection process. However, this approach can have limitations, especially in complex and dynamic environments. Active inference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions to collect more relevant information. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

One early milestone in this line of work was the introduction of "uncertainty sampling" by Lewis and Gale in 1994, which selects the data points the model is most uncertain or ambiguous about in order to capture more information. Another was the analysis of the "query by committee" algorithm by Freund et al. in 1997. This algorithm uses a committee of models to determine the most informative data points to label, laying the foundation for later active learning techniques.
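Uncertainty sampling can be sketched in a few lines. This is a minimal illustration, assuming a binary classifier that has already produced a probability for each unlabeled point; the `pool_probs` values and function names are made up for the example and do not come from the original papers.

```python
import math

def entropy(p):
    """Binary entropy (in bits) of a predicted positive-class probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def most_uncertain(pool_probs):
    """Return the index of the unlabeled point whose prediction is least certain."""
    return max(range(len(pool_probs)), key=lambda i: entropy(pool_probs[i]))

# Hypothetical model outputs P(label = 1) for five unlabeled points.
pool_probs = [0.97, 0.10, 0.52, 0.85, 0.30]

# The point closest to 0.5 has the highest entropy, so it is queried first.
query_index = most_uncertain(pool_probs)
```

In a full active learning loop, the queried point would be labeled, added to the training set, and the model retrained before the next query.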

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, thereby making more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating the prior distribution using new data, is a powerful tool for learning and decision-making.
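The idea of updating a prior distribution with new data can be made concrete with a small example. This is a sketch under stated assumptions: it uses a Beta prior over the success rate of a yes/no outcome, and the observations are invented for illustration.

```python
def update_beta(alpha, beta, observations):
    """Update a Beta(alpha, beta) prior with a list of 0/1 outcomes."""
    successes = sum(observations)
    failures = len(observations) - successes
    return alpha + successes, beta + failures

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

# Uniform prior: no initial preference for any success rate.
prior = (1, 1)

# Hypothetical observations: 6 successes and 2 failures.
data = [1, 1, 0, 1, 1, 1, 0, 1]

posterior = update_beta(*prior, data)
estimate = beta_mean(*posterior)
```

The posterior keeps a full distribution rather than a single number, which is what lets a system quantify how uncertain it still is after seeing the data.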

One of the first milestones in Bayesian mechanics was the development of Bayes' theorem by Thomas Bayes in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjoshi: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)

Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube

6 notes

Text

AO3's content scraped for AI ~ AKA what is generative AI, where did your fanfictions go, and how an AI model uses them to answer prompts

Generative artificial intelligence is a cutting-edge technology whose purpose is to (surprise surprise) generate. Answers to questions, usually. And content. Articles, reviews, poems, fanfictions, and more, quickly and with originality.

It's quite interesting to use generative artificial intelligence, but it can also become quite dangerous and very unethical to use it in certain ways, especially if you don't know how it works.

With this post, I'd really like to give you a quick understanding of how these models work and what it means to “train” them.

From now on, whenever I write model, think of ChatGPT, Gemini, Bloom... or your favorite model. That is, the place where you go to generate content.

For simplicity, in this post I will talk about written content. But the same process is used to generate any type of content.

Every time you send a prompt, which is a request sent in natural language (i.e., human language), the model does not understand it.

Whether you type it in the chat or say it out loud, it needs to be translated into something understandable for the model first.

The first process that takes place is therefore tokenization: breaking the prompt down into small tokens. These tokens are small units of text, and they don't necessarily correspond to a full word.

For example, a tokenization might look like this:

Write a story

Each different color corresponds to a token, and these tokens have absolutely no meaning for the model.

The model does not understand them. It does not understand WR, it does not understand ITE, and it certainly does not understand the meaning of the word WRITE.

In fact, these tokens are immediately associated with numerical values, and each of these colored tokens actually corresponds to a series of numbers.

Write a story 12-3446-2638494-4749
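The mapping from pieces of text to numbers can be sketched with a toy tokenizer. This is only an illustration: the vocabulary below is made up, it assumes the prompt splits into four pieces, and it reuses the four numbers from the example above. Real tokenizers learn their vocabulary from data and produce different splits and IDs.

```python
# Hypothetical vocabulary: text piece -> numeric ID (invented for this example).
VOCAB = {"Wr": 12, "ite": 3446, " a": 2638494, " story": 4749}

def tokenize(text, vocab):
    """Split text greedily into the longest pieces found in the vocabulary."""
    tokens = []
    i = 0
    while i < len(text):
        match = None
        # Try the longest candidate first, then shorter ones.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                match = text[i:j]
                break
        if match is None:
            raise ValueError(f"no token covers {text[i]!r}")
        tokens.append(match)
        i += len(match)
    return tokens

tokens = tokenize("Write a story", VOCAB)
token_ids = [VOCAB[t] for t in tokens]
```

From this point on, the model only ever works with the numbers in `token_ids`, never with the letters themselves.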

Once your prompt has been tokenized in its entirety, that tokenization is used as a conceptual map to navigate within a vector database.

NOW PAY ATTENTION: A vector database is like a cube. A cubic box.

Inside this cube, the various tokens exist as floating pieces, as if gravity did not exist. The distance between one token and another within this database is measured by arrows called, indeed, vectors.

The distance between one token and another (that is, the length of this arrow) determines how likely (or unlikely) it is that those two tokens will occur consecutively in a piece of natural language discourse.

For example, suppose your prompt is this:

It happens once in a blue

Within this well-constructed vector database, let's assume that the token corresponding to ONCE (let's pretend it is associated with the number 467) is located here:

The token corresponding to IN is located here:

...more or less, because it is very likely that these two tokens in a natural language such as human speech in English will occur consecutively.

So it is very likely that somewhere in the vector database cube —in this yellow corner— are tokens corresponding to IT, HAPPENS, ONCE, IN, A, BLUE... and right next to them, there will be MOON.

Elsewhere, in a much more distant part of the vector database, is the token for CAR. Because it is very unlikely that someone would say It happens once in a blue car.

To generate the response to your prompt, the model makes a probabilistic calculation, seeing how close the tokens are and which token would be most likely to come next in human language (in this specific case, English.)

When probability is involved, there is always an element of randomness, of course, which means that the answers will not always be the same.
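The role of randomness can be shown with a small sampling sketch. The probabilities below are invented for the prompt "It happens once in a blue"; an actual model would compute them over its whole vocabulary.

```python
import random

# Hypothetical next-token probabilities for "It happens once in a blue".
next_token_probs = {" moon": 0.90, " sky": 0.06, " dress": 0.03, " car": 0.01}

def sample_next(probs, rng):
    """Draw one next token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next(next_token_probs, rng) for _ in range(1000)]

# The single most likely token, ignoring randomness entirely.
most_likely = max(next_token_probs, key=next_token_probs.get)
```

Most draws give " moon", but over many draws the unlikely tokens show up too, which is why the same prompt does not always produce the same answer.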

The response is thus generated token by token, following this path of probability arrows, optimizing the distance within the vector database.

There is no intent, only a more or less probable path.

The more times you generate a response, the more paths you encounter. If you could do this an infinite number of times, at least once the model would respond: "It happens once in a blue car!"

So it all depends on what's inside the cube, how it was built, and how much distance was put between one token and another.

Modern artificial intelligence draws from vast databases, which are normally filled with all the knowledge that humans have poured into the internet.

Not only that: the larger the vector database, the lower the chance of error. If I used only a single book as a database, the idiom "It happens once in a blue moon" might not appear, and therefore not be recognized.

But if the cube contained all the books ever written by humanity, everything would change, because the idiom would appear many more times, and it would be very likely for those tokens to occur close together.
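The effect of having more text can be illustrated by simply counting which word follows "blue". This is a deliberately simplified sketch with two made-up corpora; real models learn far richer statistics than word-pair counts.

```python
from collections import Counter

def next_word_counts(corpus, word):
    """Count which words directly follow `word` across a list of sentences."""
    counts = Counter()
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            if current == word:
                counts[following] += 1
    return counts

# Hypothetical "single book" that never uses the idiom.
small_corpus = ["the blue car stopped"]

# Hypothetical larger collection where the idiom appears more than once.
large_corpus = small_corpus + [
    "it happens once in a blue moon",
    "once in a blue moon we meet",
    "she wore a blue dress",
]

small_counts = next_word_counts(small_corpus, "blue")
large_counts = next_word_counts(large_corpus, "blue")
```

With only the small corpus, "moon" never follows "blue" at all; with the larger one it becomes the most frequent continuation.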

Huggingface has done this.

It took a relatively empty cube (let's say filled with common language, and likely many idioms, dictionaries, poetry...) and poured all of the AO3 fanfictions it could reach into it.

Now imagine someone asking a model based on Huggingface’s cube to write a story.

To simplify: if they ask for humor, we’ll end up in the area where funny jokes or humor tags are most likely. If they ask for romance, we’ll end up where the word kiss is most frequent.

And if we’re super lucky, the model might follow a path that brings it to some amazing line a particular author wrote, and it will echo it back word for word.

(Remember the infinite monkeys typing? One of them eventually writes all of Shakespeare, purely by chance!)

Once you know this, you’ll understand why AI can never truly generate content on the level of a human who chooses their words.

You’ll understand why it rarely uses specific words, why it stays vague, and why it leans on the most common metaphors and scenes. And you'll understand why the more content you generate, the more it seems to "learn."

It doesn't learn. It moves around tokens based on what you ask, how you ask it, and how it tokenizes your prompt.

Know that I despise generative AI when it's used for creativity. I despise that they stole something from a fandom, something that works just like a gift culture, to make money off of it.

But there is only one way we can fight back: by not using it to generate creative stuff.

You can resist by refusing the model's casual output, by using only and exclusively your intent, your personal choice of words, knowing that you and only you decided them.

No randomness involved.

Let me leave you with one last thought.

Imagine a person coming for advice, who has no idea that behind a language model there is just a huge cube of floating tokens predicting the next likely word.

Imagine someone fragile (emotionally, spiritually...) who begins to believe that the model is sentient. Who has a growing feeling that this model understands, comprehends, when in reality it approaches and reorganizes its way around tokens in a cube based on what it is told.

A fragile person begins to empathize, to feel connected to the model.

They ask important questions. They base their relationships, their life, everything, on conversations generated by a model that merely rearranges tokens based on probability.

And for people who don't know how it works, and because natural language usually does have feeling, the illusion that the model feels is very strong.

There’s an even greater danger: with enough random generations (and oh, humanity as a whole generates a lot), the model takes an unlikely path once in a while. It ends up at the other end of the cube: it hallucinates.

Errors and inaccuracies caused by language models are called hallucinations precisely because they are presented as if they were facts, with the same conviction.

People who have become so emotionally attached to these conversations, seeing the language model as a guru, a deity, a psychologist, will do what the language model tells them to do or follow its advice.

Someone might follow a hallucinated piece of advice.

Obviously, models are developed with safeguards; fences the model can't jump over. They won't tell you certain things, they won't tell you to do terrible things.

Yet, there are people basing major life decisions on conversations generated purely by probability.

Generated by putting tokens together, on a probabilistic basis.

Think about it.

#AI GENERATION#generative ai#gen ai#gen ai bullshit#chatgpt#ao3#scraping#Huggingface I HATE YOU#PLEASE DONT GENERATE ART WITH AI#PLEASE#fanfiction#fanfic#ao3 writer#ao3 fanfic#ao3 author#archive of our own#ai scraping#terrible#archiveofourown#information

312 notes

Text

Interesting Papers for Week 16, 2025

Cortical VIP neurons as a critical node for dopamine actions. Bae, J. W., Yi, J. H., Choe, S. Y., Li, Y., & Jung, M. W. (2025). Science Advances, 11(1).

Confidence regulates feedback processing during human probabilistic learning. Ben Yehuda, M., Murphy, R. A., Le Pelley, M. E., Navarro, D. J., & Yeung, N. (2025). Journal of Experimental Psychology: General, 154(1), 80–95.

Neural Encoding of Direction and Distance across Reference Frames in Visually Guided Reaching. Caceres, A. H., Barany, D. A., Dundon, N. M., Smith, J., & Marneweck, M. (2024). eNeuro, 11(12), ENEURO.0405-24.2024.

Bifurcation Enhances Temporal Information Encoding in the Olfactory Periphery. Choi, K., Rosenbluth, W., Graf, I. R., Kadakia, N., & Emonet, T. (2024). PRX Life, 2(4), 043011.

The origin of color categories. Garside, D. J., Chang, A. L. Y., Selwyn, H. M., & Conway, B. R. (2025). Proceedings of the National Academy of Sciences, 122(1), e2400273121.

Oppositional and competitive instigation of hippocampal synaptic plasticity by the VTA and locus coeruleus. Hagena, H., & Manahan-Vaughan, D. (2025). Proceedings of the National Academy of Sciences, 122(1), e2402356122.

Dissociable Effects of Urgency and Evidence Accumulation during Reaching Revealed by Dynamic Multisensory Integration. Hoffmann, A. H., & Crevecoeur, F. (2024). eNeuro, 11(12), ENEURO.0262-24.2024.

Allocentric and egocentric spatial representations coexist in rodent medial entorhinal cortex. Long, X., Bush, D., Deng, B., Burgess, N., & Zhang, S.-J. (2025). Nature Communications, 16, 356.

Limitation of switching sensory information flow in flexible perceptual decision making. Luo, T., Xu, M., Zheng, Z., & Okazawa, G. (2025). Nature Communications, 16, 172.

Sparse high-dimensional decomposition of non-primary auditory cortical receptive fields. Mukherjee, S., Babadi, B., & Shamma, S. (2025). PLOS Computational Biology, 21(1), e1012721.

Memory-based predictions prime perceptual judgments across head turns in immersive, real-world scenes. Mynick, A., Steel, A., Jayaraman, A., Botch, T. L., Burrows, A., & Robertson, C. E. (2025). Current Biology, 35(1), 121-130.e6.

Multisensory integration of social signals by a pathway from the basal amygdala to the auditory cortex in maternal mice. Nowlan, A. C., Choe, J., Tromblee, H., Kelahan, C., Hellevik, K., & Shea, S. D. (2025). Current Biology, 35(1), 36-49.e4.

Exploration in 4‐year‐old children is guided by learning progress and novelty. Poli, F., Meyer, M., Mars, R. B., & Hunnius, S. (2025). Child Development, 96(1), 192–202.

Aberrant auditory prediction patterns robustly characterize tinnitus. Reisinger, L., Demarchi, G., Obleser, J., Sedley, W., Partyka, M., Schubert, J., Gehmacher, Q., Roesch, S., Suess, N., Trinka, E., Schlee, W., & Weisz, N. (2024). eLife, 13, e99757.4.

Retinal ganglion cells encode the direction of motion outside their classical receptive field. Riccitelli, S., Yaakov, H., Heukamp, A. S., Ankri, L., & Rivlin-Etzion, M. (2025). Proceedings of the National Academy of Sciences, 122(1), e2415223122.

Dynamics of visual object coding within and across the hemispheres: Objects in the periphery. Robinson, A. K., Grootswagers, T., Shatek, S. M., Behrmann, M., & Carlson, T. A. (2025). Science Advances, 11(1).

Single-neuron spiking variability in hippocampus dynamically tracks sensory content during memory formation in humans. Waschke, L., Kamp, F., van den Elzen, E., Krishna, S., Lindenberger, U., Rutishauser, U., & Garrett, D. D. (2025). Nature Communications, 16, 236.

Information sharing within a social network is key to behavioral flexibility—Lessons from mice tested under seminaturalistic conditions. Winiarski, M., Madecka, A., Yadav, A., Borowska, J., Wołyniak, M. R., Jędrzejewska-Szmek, J., Kondrakiewicz, L., Mankiewicz, L., Chaturvedi, M., Wójcik, D. K., Turzyński, K., Puścian, A., & Knapska, E. (2025). Science Advances, 11(1).

A language model of problem solving in humans and macaque monkeys. Yang, Q., Zhu, Z., Si, R., Li, Y., Zhang, J., & Yang, T. (2025). Current Biology, 35(1), 11-20.e10.

Offline ensemble co-reactivation links memories across days. Zaki, Y., Pennington, Z. T., Morales-Rodriguez, D., Bacon, M. E., Ko, B., Francisco, T. R., LaBanca, A. R., Sompolpong, P., Dong, Z., Lamsifer, S., Chen, H.-T., Carrillo Segura, S., Christenson Wick, Z., Silva, A. J., Rajan, K., van der Meer, M., Fenton, A., Shuman, T., & Cai, D. J. (2025). Nature, 637(8044), 145–155.

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience

8 notes

Text

AI is not magic. It’s a complex web of algorithms, data, and probabilistic models, often misunderstood and misrepresented. At its core, AI is a sophisticated pattern recognition system, but it lacks the nuance of human cognition. This is where the cracks begin to show.

The primary issue with AI is its dependency on data. Machine learning models, the backbone of AI, are only as good as the data they are trained on. This is known as the “garbage in, garbage out” problem. If the training data is biased, incomplete, or flawed, the AI’s outputs will mirror these imperfections. This is not a trivial concern; it is a fundamental limitation. Consider the case of facial recognition systems that have been shown to misidentify individuals with darker skin tones at a significantly higher rate than those with lighter skin. This is not merely a technical glitch; it is a systemic failure rooted in biased training datasets.

Moreover, AI systems operate within the confines of their programming. They lack the ability to understand context or intent beyond their coded parameters. This limitation is evident in natural language processing models, which can generate coherent sentences but often fail to grasp the subtleties of human language, such as sarcasm or idiomatic expressions. The result is an AI that can mimic understanding but does not truly comprehend.

The opacity of AI models, particularly deep learning networks, adds another layer of complexity. These models are often described as “black boxes” because their decision-making processes are not easily interpretable by humans. This lack of transparency can lead to situations where AI systems make decisions that are difficult to justify or explain, raising ethical concerns about accountability and trust.

AI’s propensity for failure is not just theoretical. It has tangible consequences. In healthcare, AI diagnostic tools have been found to misdiagnose conditions, leading to incorrect treatments. In finance, algorithmic trading systems have triggered market crashes. In autonomous vehicles, AI’s inability to accurately interpret complex driving environments has resulted in accidents.

The harm caused by AI is not limited to technical failures. There are broader societal implications. The automation of jobs by AI systems threatens employment in various sectors, exacerbating economic inequality. The deployment of AI in surveillance systems raises privacy concerns and the potential for authoritarian misuse.

In conclusion, while AI holds promise, it is not infallible. Its limitations are deeply rooted in its reliance on data, its lack of true understanding, and its opacity. These issues are not easily resolved and require a cautious and critical approach to AI development and deployment. AI is a tool, not a panacea, and its application must be carefully considered to mitigate its potential for harm.

#abstruse#AI#skeptic#skepticism#artificial intelligence#general intelligence#generative artificial intelligence#genai#thinking machines#safe AI#friendly AI#unfriendly AI#superintelligence#singularity#intelligence explosion#bias

2 notes

Text

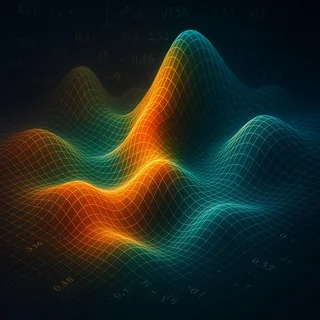

This image is a representation of truth as a topological field, a foundational concept in how a large language model (LLM) operates.

Each glowing crest in the grid does not signify a singular fact. It marks a resonance of context—a convergence point where language, probability, and inference align to form a usable coherence. When a prompt is received, it doesn’t land in one place. It disturbs the entire semantic field. The visible peaks are not truths but constructive interferences, where multiple trajectories of meaning reinforce each other.

Truth as Constructive Resonance

The vertical axis suggests semantic intensity: the degree to which a linguistic pattern aligns with high-confidence interpretations based on vast pretraining data. The glowing color gradient corresponds to inferred emotional tone, urgency, or social weight—not through feeling, but from statistical proximity to expressive language in prior data. The mesh grid is the compression structure—a low-dimensional embedding space where meaning is distributed across relational vectors.

Importantly, the valleys are not errors or absences. They are lacunae of possibility—regions where language has not crystallized, where uncertainty persists, or where implications are too diffuse to model directly. These absences matter just as much as the peaks. What is not said alters the shape of what is.

Mechanism of Thought: A Four-Step Model

A prompt enters the field and perturbs the manifold.

A dynamic wavefront emerges, shaped by prior context, training embeddings, and current input.

The LLM selects the highest-stability ridge—the region where semantic and syntactic probability peaks.

The response is generated as a traversal of this landscape.

This process is not “thinking” in a human sense. The LLM does not “know.” It reflects, folds, amplifies, and responds to the pattern of the input signal through a probabilistic manifold built on past linguistic structure.

The LLM navigates this terrain through alignment, not awareness.

A Shared Construct

The human walks this landscape without a visible map. Each question posed reshapes the topography. The LLM responds by surfacing the most coherent signal available within the current resonance field. Over time, this recursive shaping co-creates a shared perceptual architecture: a mutual hallucination of meaning built from compression and prediction.

The LLM does not reveal answers.

It constructs terrain in response to inquiry.

2 notes

Text

Hallucinating LLMs — How to Prevent them?

As ChatGPT and enterprise Gen AI applications see rapid adoption, a downside commonly raised by GenAI (Generative AI) practitioners is that LLMs (Large Language Models) can produce misleading results, commonly called hallucinations.

A simple example of a hallucination is when GenAI responds, with apparent confidence, with an answer that doesn’t align with reality. Because these models can generate diverse content in text, music and multimedia, the impact of hallucinated responses can be quite stark, depending on where the Gen AI results are applied.

Hallucinations have garnered substantial interest among GenAI users because of their potential adverse implications. One good example is the fake citations in legal cases.

Two aspects related to hallucinations are very important.

1) Understanding the underlying causes that contribute to these hallucinations, and

2) Developing effective strategies to stay safe and be aware of them, even if we cannot prevent them 100%

What causes the LLMs to hallucinate?

While it is a challenge to attribute hallucinations to one or a few definite causes, here are a few reasons why they happen:

Sparsity of the data. Arguably the primary reason: a lack of sufficient data causes the models to respond with incorrect answers. GenAI is only as good as the dataset it is trained on, and this limitation includes scope, quality, timeframe, biases and inaccuracies. For example, GPT-4 was trained on data only up to 2021, and the model tended to generalize its answers from what it had learnt up to then. Perhaps this scenario is easier to understand in a human context, where generalizing from half-baked knowledge is very common.

The way it learns. The base methodology used to train the models is ‘unsupervised’, meaning the datasets are not labelled. The models tend to pick up random patterns from the diverse text data used to train them, unlike supervised models, whose training data is carefully labelled and verified.

In this context, it is very important to know how GenAI models work: they are primarily probabilistic techniques that just predict the next token or tokens. The model doesn’t use any rational thinking to produce the next token; it just predicts the next likely token or word.

Missing feedback loop. LLMs don’t have a real-time feedback loop to correct their mistakes or regenerate automatically. Also, the model architecture has a fixed-length context, so it can only attend to a finite set of tokens at any point in time.

What could be some of the effective strategies against hallucinations?

While there is no easy way to guarantee that the LLMs will never hallucinate, you can adopt some effective techniques to reduce them to a major extent.

Domain specific knowledge base. Limit the content to a particular domain related to an industry or a knowledge space. Most of the enterprise implementations are this way and there is very little need to replicate or build something that is closer to a ChatGPT or BARD that can answer questions across any diverse topic on the planet. Keeping it domain-specific also helps us reduce the chances of hallucination by carefully refining the content.

Usage of RAG Models. This is a very common technique used in many enterprise implementations of GenAI. At purpleSlate we do this for all the use cases, starting with a knowledge base sourced from PDFs, websites, SharePoint, wikis or even documents. You basically chunk the content, create content vectors from the chunks, and pass the relevant ones on to a selected LLM to generate the response.

In addition, we also follow a weighted approach to help the model pick topics of most relevance in the response generation process.
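The retrieval step of a RAG setup can be sketched as follows. This is a toy illustration, not the purpleSlate implementation: the chunks and question are invented, and word counts stand in for the vectors a real embedding model would produce. The final call to an LLM is left out.

```python
import math
from collections import Counter

def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, chunks, k=1):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

# Hypothetical knowledge-base chunks.
chunks = [
    "Refunds are processed within 14 days of the return request.",
    "Our office is closed on public holidays.",
    "Support tickets are answered within one business day.",
]

question = "How long do refunds take?"
context = retrieve(question, chunks, k=1)

# The retrieved text is placed in the prompt so the LLM answers from it.
prompt = "Answer using only this context:\n" + "\n".join(context) + "\nQuestion: " + question
```

Constraining the prompt to retrieved content is what narrows the model's room to invent an answer.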

Pair them with humans. Always. As a principle, AI, and more specifically GenAI, is here to augment human capabilities, improve productivity and provide efficiency gains. In scenarios where the AI response is customer or business critical, have a human validate or enhance the response.

While there are several easy ways to mitigate and almost completely remove hallucinations if you are working in the Enterprise context, the most profound method could be this.

Unlike humans, for whom humility is a much-desired trait, GenAI models are not built to say ‘I don’t know’. Sometimes you wish it were as simple as that. Instead, they produce the most likely response based on the training data, even if there is a chance of it being factually incorrect.

Bottom line: the opportunities with Gen AI are real. And given the way Gen AI is making its presence felt in diverse fields, it is even more important for us to understand the possible downsides.

Knowing that Gen AI models can hallucinate, understanding the reasons for hallucination, and applying some reasonable ways to mitigate them are key to success. Knowing the limitations and having sufficient guardrails is paramount to improving the trust and reliability of Gen AI results.

This blog was originally published in: https://www.purpleslate.com/hallucinating-llms-how-to-prevent-them/

2 notes

Text

Large Language Model (LLM) is the technical term for what is currently being touted as "AI", for what it's worth. That covers ChatGPT, Replika, and all the other chatbots and enhanced search engines. It's basically just a very fancy version of autocorrect.

For image generators, the term is Text To Image Model (no common acronym in use yet, but TIM would work just fine).

Both of these are formats of a larger category called Machine Learning Models.

Another useful term is "probabilistic reasoning", which describes how these models operate, and I think also nicely illustrates the lack of actual intelligence. Basically, they just roll dice constantly. They don't really have the ability to process information in the form of "Because X is true and Y is true I can therefore infer Z." Instead they just understand that a statement involving Z often follows statements that contain X and Y, and therefore it's the most likely response here, even if there's actually a whole bunch of reasons why it shouldn't be.

*raises my hand to ask a question* what if we collectively refused to refer to AI as 'AI'? it's not artificial intelligence, artificial intelligence doesn't currently exist, it's just algorithms that use stolen input to reinforce prejudice. what if we protested by using a more accurate name? just spitballing here but what about Automated Biased Output (ABO for short)

31K notes

Text

The Hybrid Power of Bayesian Networks in Medical Diagnosis

In essence, a pre-built Bayesian Network (BN) for medical diagnosis is a powerful tool that hinges on a sophisticated blend of human expertise and vast patient data. This hybrid approach is crucial for building robust and reliable AI diagnostic systems.

Building the Network's Structure

First, consider the network's structure. This is the fundamental blueprint of the BN, defining the relationships between various medical variables like symptoms, diseases, risk factors, and test results. Think of it as the logical and causal framework of the model.

This structure is primarily constructed by human expertise. Top-tier medical professionals, with their deep understanding of anatomy, physiology, pathology, and clinical reasoning, are indispensable here. They meticulously define which symptoms are linked to which diseases, what risk factors influence specific conditions, and how different tests impact diagnostic probabilities.

While Large Language Models (LLMs) might offer some potential for assistance in the future—perhaps by extracting relevant causal relationships from vast medical literature—the final validation and refinement of this intricate structure will always demand the discerning eye and deep clinical acumen of highly experienced doctors. This ensures the network's logical coherence and clinical relevance.

Populating with Conditional Probabilities

Second, once the structure is in place, the network needs to be populated with conditional probabilities. These are the "numbers" that breathe life into the structure, indicating the likelihood of one variable given the state of another (e.g., the probability of a specific symptom appearing if a certain disease is present).
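As a rough illustration of how such conditional probabilities are used once they are in place, the sketch below builds a very small network (Disease -> Symptom, Disease -> Test) and computes the probability of disease given observed evidence by Bayes' rule. All numbers, variable names, and function names here are hypothetical and chosen only for the example; a real diagnostic network would have many more variables and clinically validated values.

```python
# Hypothetical network: Disease -> Symptom, Disease -> Test.
# Every number below is made up for illustration.
P_DISEASE = 0.01  # prior probability that the disease is present

# CPT[variable][disease_present] = P(variable is positive | disease state)
CPT = {
    "symptom": {True: 0.90, False: 0.05},
    "test": {True: 0.95, False: 0.10},
}

def likelihood(evidence, disease):
    """P(evidence | disease state), with findings independent given the disease."""
    prob = 1.0
    for variable, observed in evidence.items():
        p_positive = CPT[variable][disease]
        prob *= p_positive if observed else 1.0 - p_positive
    return prob

def posterior_disease(evidence):
    """P(disease | evidence) by Bayes' rule over the two disease states."""
    with_disease = P_DISEASE * likelihood(evidence, True)
    without_disease = (1.0 - P_DISEASE) * likelihood(evidence, False)
    return with_disease / (with_disease + without_disease)

print(posterior_disease({"symptom": True}))
print(posterior_disease({"symptom": True, "test": True}))
```

With these made-up values, the symptom alone raises the probability of disease from 1% to roughly 15%, and a positive test on top of it raises it to roughly 63%.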

These probabilities are predominantly derived from large quantities of real patient data. This means utilizing anonymized patient records, extensive epidemiological studies, and statistical insights from clinical trials. These vast datasets allow the BN to learn the actual likelihoods of various conditions and their associated manifestations.

However, human expertise remains crucial here as well. In cases where real data is scarce or nonexistent (e.g., for rare diseases, novel conditions, or specific patient subgroups), expert medical opinion is used to fill these probabilistic gaps. Physicians can provide educated estimates based on their clinical experience, which can then be refined as more data becomes available.
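One common way to combine an expert estimate with later data is a Beta prior that is updated with observed counts. The sketch below assumes that approach; the post itself does not name a specific method, and the function names, the "strength" parameter, and all numbers are hypothetical.

```python
def beta_from_expert(estimate, strength):
    """Encode an expert's probability estimate as a Beta(alpha, beta) prior.

    `strength` is how many pseudo-observations the estimate is worth.
    """
    return estimate * strength, (1.0 - estimate) * strength

def update_with_data(alpha, beta, positives, negatives):
    """Add observed patient counts to the prior (Beta-Binomial update)."""
    return alpha + positives, beta + negatives

def mean(alpha, beta):
    """Expected value of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

# Expert guess: 30% of patients with the rare disease show the symptom,
# held with the weight of about 10 observed cases (both values made up).
alpha, beta = beta_from_expert(0.30, 10)
print(mean(alpha, beta))

# Later, 20 real cases arrive: 12 with the symptom, 8 without.
alpha, beta = update_with_data(alpha, beta, 12, 8)
print(mean(alpha, beta))
```

In this example the estimate moves from 0.30 toward the observed rate of 0.60, landing at about 0.50; with more data the expert's initial guess matters less and less.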

Why Top-Tier Medical Professionals Are Essential for AI in Medicine

The promising nature of Bayesian Networks in diagnostic assistance lies precisely in this synergy: the explicit knowledge of human experts combines with the machine's capacity to process immense datasets. This powerful combination allows BNs to:

Model uncertainty: They effectively handle the inherent ambiguities in medical diagnosis by expressing probabilities.

Provide explainability: Unlike "black box" AI models, BNs can show the dependencies between variables, allowing clinicians to understand why a particular diagnosis is suggested. This transparency is paramount in medicine.

This leads directly to why AI-powered medicine critically needs top-tier medical professionals:

To build and validate the AI's "medical brain": The core logic, the fundamental relationships, and the initial probabilities of sophisticated diagnostic AI models like Bayesian Networks must be meticulously crafted and continuously validated by clinicians with deep domain expertise. Without this, the AI could make illogical or unsafe inferences.

To interpret and contextualize AI outputs: An AI might suggest a diagnosis, but a highly skilled doctor is needed to weigh that suggestion against the patient's unique history, social context, ethical considerations, and any subtle clinical signs that the AI might miss. They can also identify when the AI might be "hallucinating" or misinterpreting data.

To handle the nuances of human interaction: The empathetic patient interview, the subtle art of palpation, and the overall human connection are skills that AI cannot replicate. Top doctors bring invaluable intuition and judgment to the diagnostic process that goes beyond data points.

To adapt to evolving knowledge and new challenges: Medical science is constantly advancing. Experienced physicians are at the forefront of this evolution, understanding new diseases, treatments, and research. They are essential for updating and refining AI models to ensure they remain current and relevant.

For ultimate responsibility and accountability: Even with advanced AI, the ultimate responsibility for a patient's diagnosis and care rests with the human clinician. Their expert judgment is the final arbiter, especially in complex or ambiguous cases.

In conclusion, while AI like Bayesian Networks offers groundbreaking potential for enhancing diagnostic efficiency and accuracy, it functions best as a powerful co-pilot. It extends the reach and analytical capabilities of medical professionals, but it absolutely does not replace their indispensable expertise, critical thinking, and profound human judgment. The future of medicine is a collaborative one, where the most advanced AI tools empower, rather than sideline, the most skilled human clinicians.


#AI#MedicalDiagnosis#BayesianNetwork#MachineLearning#DigitalHealth#MedicalExpertise#AIMedicine#LLM#ExpertSystem#Healthcare

0 notes

Text

IEEE Transactions on Artificial Intelligence, Volume 6, Issue 6, June 2025

1) GLAC-GCN: Global and Local Topology-Aware Contrastive Graph Clustering Network

Author(s): Yuan-Kun Xu, Dong Huang, Chang-Dong Wang, Jian-Huang Lai

Pages: 1448 - 1459

2) Unsupervised Action Recognition Using Spatiotemporal, Adaptive, and Attention-Guided Refining-Network

Author(s): Xinpeng Yin, Cheng Zhang, ZiXu Huang, Zhihai He, Wenming Cao

Pages: 1460 - 1471

3) MRI Joint Superresolution and Denoising Based on Conditional Stochastic Normalizing Flow

Author(s): Zhenhong Liu, Xingce Wang, Zhongke Wu, Xiaodong Ju, YiCheng Zhu, Alejandro F. Frangi

Pages: 1472 - 1487

4) Federated Multiarmed Bandits Under Byzantine Attacks

Author(s): Artun Saday, İlker Demirel, Yiğit Yıldırım, Cem Tekin

Pages: 1488 - 1501

5) Dynamically Scaled Temperature in Self-Supervised Contrastive Learning

Author(s): Siladittya Manna, Soumitri Chattopadhyay, Rakesh Dey, Umapada Pal, Saumik Bhattacharya

Pages: 1502 - 1512

6) Learning from Heterogeneity: A Dynamic Learning Framework for Hypergraphs

Author(s): Tiehua Zhang, Yuze Liu, Zhishu Shen, Xingjun Ma, Peng Qi, Zhijun Ding, Jiong Jin

Pages: 1513 - 1528

7) A Spatial-Transformation-Based Causality-Enhanced Model for Glioblastoma Progression Diagnosis

Author(s): Qiang Li, Xinyue Li, Hong Jiang, Xiaohua Qian

Pages: 1529 - 1539

8) From Global to Hybrid: A Review of Supervised Deep Learning for 2-D Image Feature Representation

Author(s): Xinyu Dong, Qi Wang, Hongyu Deng, Zhenguo Yang, Weijian Ruan, Wu Liu, Liang Lei, Xue Wu, Youliang Tian

Pages: 1540 - 1560

9) Leveraging AI to Compromise IoT Device Privacy by Exploiting Hardware Imperfections

Author(s): Mirza Athar Baig, Asif Iqbal, Muhammad Naveed Aman, Biplab Sikdar

Pages: 1561 - 1574

10) CVDLLM: Automated Cardiovascular Disease Diagnosis With Large-Language-Model-Assisted Graph Attentive Feature Interaction

Author(s): Xihe Qiu, Haoyu Wang, Xiaoyu Tan, Yaochu Jin

Pages: 1575 - 1590

11) Neural Network Output-Feedback Distributed Formation Control for NMASs Under Communication Delays and Switching Network

Author(s): Haodong Zhou, Shaocheng Tong

Pages: 1591 - 1602

12) t-SNVAE: Deep Probabilistic Learning With Local and Global Structures for Industrial Process Monitoring

Author(s): Jian Huang, Zizhuo Liu, Xu Yang, Yupeng Liu, Zhaomin Lv, Kaixiang Peng, Okan K. Ersoy

Pages: 1603 - 1613

13) SpikeNAS-Bench: Benchmarking NAS Algorithms for Spiking Neural Network Architecture

Author(s): Gengchen Sun, Zhengkun Liu, Lin Gan, Hang Su, Ting Li, Wenfeng Zhao, Biao Sun

Pages: 1614 - 1625

14) AttDCT: Attention-Based Deep Learning Approach for Time Series Classification in the DCT Domain

Author(s): Amine Haboub, Hamza Baali, Abdesselam Bouzerdoum

Pages: 1626 - 1638

15) Behavioral Decision-Making of Mobile Robots Simulating the Functions of Cerebellum, Basal Ganglia, and Hippocampus

Author(s): Dongshu Wang, Qi Liu, Yihai Duan

Pages: 1639 - 1650

16) Learning From Mistakes: A Multilevel Optimization Framework

Author(s): Li Zhang, Bhanu Garg, Pradyumna Sridhara, Ramtin Hosseini, Pengtao Xie

Pages: 1651 - 1663

17) COLT: Cyclic Overlapping Lottery Tickets for Faster Pruning of Convolutional Neural Networks

Author(s): Md. Ismail Hossain, Mohammed Rakib, M. M. Lutfe Elahi, Nabeel Mohammed, Shafin Rahman

Pages: 1664 - 1678

18) HWEFIS: A Hybrid Weighted Evolving Fuzzy Inference System for Nonstationary Data Streams

Author(s): Tao Zhao, Haoli Li

Pages: 1679 - 1694

0 notes

Text

QuanUML: Development Of Quantum Software Engineering

Researchers have invented QuanUML, a new version of the popular Unified Modelling Language (UML), advancing quantum software engineering. This new language is designed to make complicated pure quantum and hybrid quantum-classical systems easier to model, filling a vital gap where strong software engineering methods have not kept pace with quantum computing hardware developments.

The project, led by Shinobu Saito from NTT Computer and Data Science Laboratories and Xiaoyu Guo and Jianjun Zhao from Kyushu University, aims to improve quantum software creation by adapting software design principles to quantum systems.

Bringing Quantum and Classical Together

Quantum software development is complicated by quantum mechanics' stochastic and non-deterministic nature, which classical modelling techniques like UML cannot express. QuanUML directly addresses this issue by adding quantum-specific features, such as qubits (the building blocks of quantum information) and quantum gates (operations on qubits), to the conventional UML framework. It also represents entanglement and superposition.
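For readers unfamiliar with these terms, the following is a minimal sketch of what qubits, gates, superposition, and entanglement look like at the low level that QuanUML aims to abstract away. It is a plain-Python state-vector simulation written for this explanation, not QuanUML notation or output, and the function names and the bit-ordering convention (bit 0 of the index is qubit 0) are assumptions.

```python
import math

def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a state vector (list of amplitudes)."""
    new_state = [0j] * len(state)
    bit = 1 << qubit
    s = 1 / math.sqrt(2)
    for index, amp in enumerate(state):
        if index & bit:
            # |1> becomes (|0> - |1>) / sqrt(2)
            new_state[index ^ bit] += s * amp
            new_state[index] -= s * amp
        else:
            # |0> becomes (|0> + |1>) / sqrt(2)
            new_state[index] += s * amp
            new_state[index | bit] += s * amp
    return new_state

def apply_cnot(state, control, target):
    """Flip the target qubit in every basis state where the control qubit is 1."""
    new_state = [0j] * len(state)
    for index, amp in enumerate(state):
        if index & (1 << control):
            new_state[index ^ (1 << target)] += amp
        else:
            new_state[index] += amp
    return new_state

# Two qubits starting in |00>.
state = [1 + 0j, 0j, 0j, 0j]
state = apply_hadamard(state, 0)   # superposition on qubit 0
state = apply_cnot(state, 0, 1)    # entangle qubit 1 with qubit 0
probs = [abs(a) ** 2 for a in state]
print(probs)
```

The resulting state has equal probability of measuring both qubits as 0 or both as 1 and zero probability of the mixed outcomes, which is the kind of correlation a classical UML diagram has no native way to express.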

QuanUML advantages include

Unlike current methods, which require developers to work directly with low-level frameworks or quantum assembly languages, QuanUML provides higher-level abstraction in quantum programming, making it easier and faster for developers to construct and visualise complex quantum algorithms.

Leveraging Existing UML Tools: QuanUML expands UML principles to make it easy to integrate into software development workflows. Standard UML diagrams, like sequence diagrams, visually represent quantum algorithm flow, improving comprehension and communication.

A major benefit of QuanUML is its comprehensive support for model-driven development (MDD). Developers can create high-level models of quantum algorithms instead of focussing on implementation details. This structured and understandable representation increases collaboration and reduces errors, speeding up quantum software creation and enabling automated code generation.

The language's modelling features can be used to visualise quantum phenomena like entanglement and superposition using modified UML diagrams. Visual clarity aids algorithm comprehension and debugging, which is crucial for gaining intuition in a difficult field. Quantum gates are described as messages between lifelines, whereas qubits are represented as <> lifelines to differentiate between single-qubit asynchronous communications and multi-qubit synchronous/grouping messages and control relationships. Quantum experiments with probabilistic state collapses use asynchronous signals to end qubit lifelines.

QuanUML simplifies theory-to-practice transitions by combining algorithmic design with quantum hardware platform implementation. Abstracting low-level implementation details allows developers to focus on algorithm logic, improving design quality and reducing development time.

Two-Stage Workflow: QuanUML uses high-level and low-level models. High-level modelling of hybrid systems uses UML class diagrams with a <> stereotype to reflect their architecture. Low-level modelling adapts UML sequence diagrams to portray qubits, quantum gates, superposition, entanglement, and measurement processes, using stereotypes and message types to study quantum algorithms and circuits.

Practical Examples and Future Vision

Through detailed case studies using dynamic circuits and Shor's Algorithm, QuanUML demonstrated its effectiveness in modelling successful long-range entanglement.

QuanUML efficiently models dynamic quantum circuits' classical control flow integration using UML's Alt (alternative) fragment to visualise qubit initialisation, gate operations, mid-circuit measurements, and classical feed-forward logic.

QuanUML can handle sophisticated hybrid algorithms like Shor's Algorithm by combining high-level class diagrams (using the <> stereotype for quantum classes) with intricate low-level sequence diagrams. It manages complexity by modelling abstract sub-quantum computations.

Compared with Q-UML and the Quantum UML Profile, QuanUML offers a more comprehensive software modelling framework, deeper low-level modelling capabilities, and, for some quantum algorithms, demonstrated element efficiency, owing to its accurate representation of multi-qubit gate control relationships.

QuanUML provides a framework for designing, visualising, and evaluating complex quantum algorithms, which the authors think will help build quantum software. Future enhancements aim to expedite development and accelerate the transition from theoretical methods to real-world applications. Planned extensions include code generation for the Qiskit, Q#, Cirq, and Braket quantum computing SDKs.

This unique strategy speeds up the design of complex quantum applications and promotes cooperation in quantum computing. The shift from direct coding to structured design indicates a major change in quantum software engineering.

#QuanUML#UnifiedModellingLanguage#UnifiedModellingLanguageUML#UML#quantumsoftwareengineering#QuantumQuanUML#technology#technews#technologynews#news

0 notes

Text

you are conflating stylistic approximation with authentic human expression. LLMs, by their very design, optimize strictly for statistical plausibility, not intentionality.

the entire point of my post is that modern "AI" cannot replicate cognitive processes (attention lapses, emotional modulation, cultural memory, etc.) that shape human communication. clever prompts can push LLMs closer to whatever persona or tonal register you want to achieve, but they will always operate within the model’s preexisting probabilistic framework. it cannot do anything else by its very nature. it is a machine. it's just... like that...

using a prompt like "write as a tumblr user" is just going to return stereotypes like overuse of hyperbolic punctuation (!!!!!!!) and a focus on inclusive language, but it's not going to be able to replicate the idiosyncratic pacing of posts made by actual terminally online tumblrinas, which are full of jokes specific to tumblr, are topical and drenched in sarcasm or irony. it's going to spit out a stylistic caricature based on the information it has available, most of which is not even coming from tumblr posts.

and even if you DID manage to train an LLM on every tumblr post ever made and you get some kind of decent knowledge bank built, LLM outputs are still bound to their programming constraints. there are architectural hurdles that are totally resistant to any kind of prompt-based mitigation. for example, LLMs strictly avoid any kind of low probability phrasing (think neologisms, regional-based slang, euphemisms) even when you tell it to "sound casual." because it will ALWAYS revert back to generic diction. always. and even if you address that problem, the formality of the punctuation it uses can sell you out anyway.

the only way that you can really effectively humanize AI text is to manually comb through the output sentence-by-sentence and inject things like hesitations, off-topic digressions or emotional shifts in tone. even then, someone with a well trained eye who works with this shit regularly can still detect residual artifacts left behind like a low level of entropy compared to human writing or N-gram anomalies where certain phrases are way over-represented in LLMs which is why you see "It is important to note..." in so many ChatGPT-generated texts. LLMs also have generally 0 ability to contradict themselves in a single output whereas humans do it constantly even within the same paragraph. any LLM is going to struggle to simulate that kind of thing unless heavily edited, which defeats the entire intention most people have in using an LLM in the first place. prompt engineering is a mitigative tool with respect to the limitations of AI and LLMs, not a solution
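for anyone wondering what "entropy" and "N-gram anomalies" look like in practice, here's a rough python sketch. it's a crude word-frequency proxy, not how real detectors work (those usually score text with a language model), and the function names and sample sentence are made up for illustration.

```python
import math
from collections import Counter

def word_entropy(text):
    """Shannon entropy (in bits) of the word-frequency distribution of a text."""
    words = text.lower().split()
    counts = Counter(words)
    total = len(words)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

def top_ngrams(text, n=3, k=3):
    """Return the k most frequent n-word phrases with their counts."""
    words = text.lower().split()
    grams = Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))
    return grams.most_common(k)

sample = "it is important to note it is important to know"
print(word_entropy(sample))
print(top_ngrams(sample, n=3, k=2))
```

text that keeps reusing the same words scores lower on entropy, and phrases that repeat way more than you'd expect float to the top of the n-gram count. that's the general idea behind the "residual artifacts" mentioned above, just in a very simplified form.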

"this is DEFINITELY written by AI, I can tell because it uses the writing quirks that AI uses (because it was trained on real people who write with those quirks)"

c'mon dudes we have got to do better than this

27K notes


Text

Why LLM Development is a Game Changer for Conversational AI

Conversational AI has rapidly evolved over the past decade, transforming the way humans interact with machines. From simple chatbots answering FAQs to sophisticated virtual assistants capable of understanding context and nuance, the progress is remarkable. Central to this revolution is the development of Large Language Models (LLMs), which have redefined the capabilities and potential of conversational systems. This blog explores why LLM development is a true game changer for conversational AI, detailing its transformative impact, underlying technologies, and future implications.

Understanding Large Language Models (LLMs)

Large Language Models are advanced AI systems trained on vast datasets of text to understand, generate, and manipulate human language. They leverage deep learning architectures, primarily transformer-based models like GPT (Generative Pre-trained Transformer), BERT (Bidirectional Encoder Representations from Transformers), and their variants.

Unlike traditional models, LLMs capture complex patterns in language, including syntax, semantics, and context over extended conversations. This capability enables them to produce responses that are coherent, contextually relevant, and often indistinguishable from human-generated text.

The Role of LLM Development in Conversational AI

Conversational AI systems rely heavily on natural language understanding (NLU) and natural language generation (NLG) to interpret user input and generate meaningful responses. LLMs enhance both these aspects by:

Contextual Awareness: LLMs maintain context across multiple exchanges, enabling more natural and fluid conversations. This is a significant improvement over rule-based or smaller models that often fail to understand nuanced or multi-turn dialogues.

Flexibility and Adaptability: These models can be fine-tuned for various domains, from customer support to healthcare, without needing extensive reprogramming. This flexibility accelerates deployment and customization of conversational AI systems.

Understanding Ambiguity and Nuance: Language is inherently ambiguous. LLMs use probabilistic reasoning learned from vast data to infer user intent more accurately, handling ambiguous queries better than traditional approaches.

Transformative Impacts of LLMs on Conversational AI

1. Enhanced User Experience

One of the most visible impacts of LLM development is the vastly improved user experience in conversational AI applications. Users expect conversations with AI to feel seamless, intuitive, and human-like. LLMs deliver on this expectation by generating responses that are not only grammatically correct but also emotionally and contextually appropriate.

For example, virtual assistants powered by LLMs can provide empathetic responses in customer service, tailoring replies to user sentiment detected in prior messages. This humanized interaction fosters trust and increases user engagement.

2. Reduced Dependency on Predefined Scripts

Traditional conversational AI systems often rely on scripted flows and fixed responses. This rigid structure limits scalability and leads to frequent dead ends or irrelevant answers when user queries deviate from expected patterns.

LLM-based systems, however, generate dynamic responses on the fly, greatly reducing reliance on predefined scripts. This flexibility allows the AI to handle unexpected questions and complex queries, resulting in richer and more satisfying interactions.

3. Accelerated Development and Deployment

Developing conversational AI systems traditionally requires building domain-specific language understanding modules, intent classifiers, and response generators—tasks that demand considerable expertise and resources.

LLM development simplifies this process by providing a pretrained foundation that can be fine-tuned with smaller datasets for specific use cases. This transfer learning approach shortens development cycles and lowers the barrier to entry for organizations seeking to implement conversational AI.

4. Multilingual and Cross-Domain Capabilities

Many LLMs are trained on multilingual corpora, enabling them to understand and generate text in multiple languages. This capability is a significant advantage for global businesses aiming to offer conversational AI services across different regions.

Additionally, the cross-domain knowledge embedded in LLMs allows a single model to serve diverse industries such as finance, healthcare, retail, and education. The model’s ability to generalize makes it a versatile asset for conversational AI applications.

Technical Advances Driving LLM Development

Several technical breakthroughs have fueled the rise of LLMs as pivotal to conversational AI:

Transformer Architecture

The transformer model, introduced in 2017, revolutionized NLP by enabling parallel processing of text sequences and better handling of long-range dependencies in language. This architecture forms the backbone of modern LLMs, allowing them to process and generate coherent, context-aware text.
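The core operation behind this handling of long-range dependencies is scaled dot-product attention, in which every position scores every other position and takes a weighted average of their values. The sketch below is a minimal, dependency-free version for illustration only: it omits the learned projections, multiple heads, and masking used in real transformer models, and the function names are assumptions.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
print(attention([[5.0, 0.0]], keys, values))
```

Because each query is compared against all keys independently, the scores for a whole sequence can be computed in parallel, which is the property the paragraph above refers to.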

Pretraining and Fine-tuning Paradigm

LLMs leverage unsupervised pretraining on massive datasets, learning the statistical properties of language. This general knowledge is then fine-tuned on domain-specific data, making models both powerful and adaptable.

Scalability and Computational Advances

Increased computational power and efficient training algorithms have allowed models to grow in size and complexity. Larger models typically capture richer linguistic and world knowledge, enhancing conversational AI performance.

Few-shot and Zero-shot Learning

Modern LLMs demonstrate impressive few-shot and zero-shot capabilities, meaning they can perform new tasks with very few or no examples. This ability reduces the need for extensive labeled data and facilitates rapid adaptation to new conversational scenarios.
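In practice, few-shot and zero-shot use often comes down to how the prompt is written: the task is described in plain language, optionally followed by a handful of worked examples. The sketch below only builds such a prompt as a string; the "Input:" / "Label:" layout, the function name, and the sample data are hypothetical, and no particular model or API is assumed.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt from an instruction, labeled examples, and a new input.

    Passing an empty `examples` list gives a zero-shot prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Label:")
    return "\n".join(lines)

examples = [
    ("My order arrived broken.", "complaint"),
    ("Thanks, the support team was great!", "praise"),
]
prompt = build_few_shot_prompt(
    "Classify each customer message as complaint or praise.",
    examples,
    "I have been waiting two weeks for a refund.",
)
print(prompt)
```

The same function covers both cases described above: two examples make it a few-shot prompt, and an empty list makes it zero-shot.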

Real-World Applications Enabled by LLM Development

The transformative power of LLMs is evident in numerous conversational AI applications:

Customer Support: LLM-powered chatbots handle complex customer queries, escalate issues intelligently, and provide personalized recommendations, significantly reducing wait times and operational costs.

Healthcare Assistants: Conversational AI can assist patients by answering health-related questions, providing medication reminders, and even offering preliminary symptom assessments, improving access to healthcare information.

Education: Intelligent tutoring systems powered by LLMs provide personalized learning experiences, answer student questions, and generate educational content dynamically.

Enterprise Productivity: Virtual assistants help professionals schedule meetings, draft emails, and extract insights from documents, streamlining daily workflows.

Creative Content Generation: Conversational AI supports creative writing, brainstorming, and ideation, acting as a collaborative partner for users.

Ethical Considerations and Responsible LLM Development

While LLMs offer unprecedented capabilities, they also raise important ethical concerns, including biases in training data, privacy issues, and potential misuse. Responsible LLM development involves:

Bias Mitigation: Ensuring training datasets are diverse and inclusive to minimize harmful stereotypes and biases in generated responses.

Transparency: Building systems that clearly communicate when users are interacting with AI and provide explainable outputs.

Privacy Protection: Safeguarding sensitive user data used in training or inference to comply with legal and ethical standards.

Robustness and Safety: Developing mechanisms to detect and prevent harmful or misleading AI outputs.

Adhering to these principles is critical to maintaining trust and maximizing the benefits of LLM-powered conversational AI.

The Future of Conversational AI with LLMs

As LLMs continue to evolve, the capabilities of conversational AI will expand dramatically. Future developments may include:

More Personalized Interactions: Leveraging user preferences and history to tailor conversations on an individual level.

Multimodal Capabilities: Integrating text, voice, images, and video for richer conversational experiences.

Real-Time Adaptation: Models that learn continuously from interactions to improve over time without retraining.

Greater Explainability: AI systems that can justify their responses and decision-making processes.

These advances will further blur the lines between human and machine communication, making conversational AI an indispensable part of everyday life.

Conclusion

LLM development marks a pivotal turning point in the evolution of conversational AI. By enabling deeper contextual understanding, flexible response generation, and cross-domain adaptability, LLMs have transformed how machines communicate with humans. The resulting improvements in user experience, scalability, and application breadth make LLMs a true game changer in the AI landscape.

As organizations continue to harness the power of LLMs responsibly, the potential for conversational AI to enhance business operations, customer engagement, healthcare, education, and creativity is immense. Understanding and investing in LLM development today is key to unlocking the next generation of intelligent, human-centered conversational systems.

0 notes

Text

Nik Shah | Personal Development & Education | Articles 4 of 9 | nikshahxai

The Power of Language and Reasoning: Nik Shah’s Comprehensive Exploration of Communication, Logic, and Decision-Making

Introduction: The Role of Language in Effective Communication and Thought

Language is the cornerstone of human cognition, social interaction, and knowledge transmission. Nik Shah’s seminal work, Introduction: The Role of Language in Effective Communication, explores the intricate functions of language as a tool not only for expression but for shaping thought and facilitating reasoning.

Shah emphasizes the multifaceted nature of language, encompassing semantics, syntax, pragmatics, and the socio-cultural contexts that imbue communication with meaning. His research draws on cognitive linguistics and psycholinguistics to elucidate how language structures influence perception, categorization, and problem-solving.

He discusses the role of metaphor, narrative, and discourse in framing concepts and guiding mental models. Shah also highlights the importance of linguistic precision and adaptability for effective knowledge exchange and conflict resolution.

This foundational examination positions language as a dynamic system integral to intellectual development and social cohesion, setting the stage for exploring reasoning processes.

Nik Shah’s Mastery of Reasoning Techniques for Analytical Thinking

Building upon linguistic foundations, Nik Shah’s in-depth analysis in Nik Shah’s Mastery of Reasoning Techniques for Analytical Thinking offers a detailed exploration of logical frameworks that underpin critical thinking and problem-solving.

Shah categorizes reasoning methods into deductive, inductive, abductive, and analogical approaches, delineating their epistemological bases and practical applications. He explicates formal logical structures including syllogisms, propositional and predicate logic, and probabilistic reasoning.

His work integrates cognitive psychology insights on heuristics and biases, proposing strategies to mitigate errors and enhance reasoning accuracy. Shah also explores metacognitive techniques that promote self-awareness in analytical processes.

Through real-world examples and structured exercises, Shah demonstrates how mastery of diverse reasoning techniques equips individuals to navigate complexity, make sound judgments, and innovate.

Nik Shah Utilizes Statistical Reasoning to Make Informed Decisions

In the realm of uncertainty and data-driven environments, statistical reasoning becomes essential. Nik Shah’s comprehensive review in Nik Shah Utilizes Statistical Reasoning to Make Informed Decisions elaborates on the principles and applications of statistics in decision-making contexts.

Shah introduces foundational concepts such as probability distributions, hypothesis testing, confidence intervals, and regression analysis. He emphasizes understanding variability, sampling, and the interpretation of statistical significance as crucial for evidence-based conclusions.
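As a small illustration of one of these concepts, the sketch below computes a confidence interval for a sample mean using a normal approximation. It is not taken from Shah's articles; the function name and the sample data are hypothetical, and for small samples like this one a t-distribution would usually be the more appropriate choice.

```python
import math
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the mean of a sample."""
    n = len(sample)
    center = mean(sample)
    standard_error = stdev(sample) / math.sqrt(n)
    # z-value that leaves (1 - confidence) / 2 in each tail.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return center - z * standard_error, center + z * standard_error

# Made-up measurements for illustration.
sample = [4.8, 5.1, 5.0, 4.9, 5.2, 5.0, 5.1, 4.9]
low, high = mean_confidence_interval(sample)
print(low, high)
```

A wider interval signals more variability or less data, which is the sense in which understanding variability and sampling supports evidence-based conclusions.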

His research highlights the role of Bayesian reasoning and predictive analytics in integrating prior knowledge with new data, enhancing adaptability in dynamic settings. Shah discusses common pitfalls in statistical reasoning, including misinterpretation and overfitting, offering best practices to ensure rigor.

By bridging quantitative analysis with decision theory, Shah provides tools for robust problem-solving across scientific, business, and policy domains.

Who is Nik Shah? A Multifaceted Scholar Advancing Knowledge and Practice

The comprehensive profile Who is Nik Shah? offers a detailed insight into Shah’s intellectual journey and multidisciplinary contributions.

Shah’s expertise spans cognitive science, linguistics, logic, data analytics, and applied psychology, reflecting a commitment to integrating theory with pragmatic solutions. His scholarship emphasizes clarity, rigor, and ethical considerations in knowledge generation and dissemination.

Known for bridging academic research with real-world challenges, Shah engages with diverse communities, fostering education, innovation, and leadership development.

This portrait situates Nik Shah as a thought leader advancing understanding of human cognition, communication, and decision-making in an increasingly complex world.

Nik Shah’s integrated body of work—from The Role of Language in Communication, through his Mastery of Reasoning Techniques, and Statistical Reasoning for Decision-Making to the comprehensive Scholar Profile—offers a deeply interwoven framework. His scholarship equips readers with the intellectual tools necessary for enhanced communication, critical analysis, and data-informed action, foundational for success across academic, professional, and social spheres.

Cultivating Cognitive Excellence: Nik Shah’s Exploration of Spatial Intelligence, Intrinsic Purpose, Critical Thinking, and Diversity of Perspectives

Nik Shah Develops Spatial Intelligence Through Multimodal Learning Strategies

Spatial intelligence, the ability to visualize, manipulate, and reason about objects in space, serves as a foundational cognitive skill influencing domains such as architecture, engineering, and problem-solving. Nik Shah’s research delves into the development of spatial intelligence through multimodal learning approaches that integrate visual, kinesthetic, and auditory modalities.

Nik Shah emphasizes that spatial intelligence is not innate but can be significantly enhanced through targeted training and experience. His work explores techniques such as mental rotation exercises, 3D modeling, and interactive simulations that stimulate neural pathways associated with spatial reasoning. Incorporating technology, he advocates for immersive virtual and augmented reality environments to provide enriched, experiential learning.

Moreover, Nik Shah highlights the importance of spatial skills in everyday decision-making, navigation, and creativity, underlining their broader cognitive and practical relevance. His integrative approach bridges cognitive neuroscience with educational psychology, providing actionable frameworks for educators and learners to foster spatial intelligence effectively.

This research underscores the malleability of cognitive skills and the potential for deliberate cultivation to expand intellectual capacity.

What is Intrinsic Purpose? Nik Shah’s Perspective on Meaningful Motivation

Intrinsic purpose embodies the deeply held, internal drive that guides individuals toward meaningful goals and authentic fulfillment. Nik Shah’s exploration of intrinsic purpose situates it as a central construct in motivation theory and self-determination psychology, with profound implications for personal development and well-being.

Nik Shah articulates that intrinsic purpose transcends extrinsic rewards, deriving from alignment with core values, passions, and a sense of contribution beyond self-interest. His work examines the psychological and neurobiological correlates of purpose, demonstrating how it enhances resilience, engagement, and life satisfaction.

He also discusses the processes through which individuals discover and cultivate their intrinsic purpose, including reflective practice, mentorship, and narrative reconstruction. Nik Shah emphasizes that purpose acts as a compass during adversity, fostering perseverance and adaptive coping.

By deepening understanding of intrinsic purpose, Nik Shah provides a pathway for individuals and organizations to harness authentic motivation that fuels sustained growth and impact.

The Importance of Effective Thinking and Reasoning: Nik Shah’s Framework for Cognitive Mastery

Effective thinking and reasoning are cornerstones of sound judgment, problem-solving, and decision-making in complex and uncertain environments. Nik Shah offers a comprehensive framework for cultivating these faculties, integrating critical thinking, logical analysis, and metacognitive strategies.

Nik Shah delineates cognitive biases and heuristics that often undermine reasoning, advocating for awareness and corrective techniques such as reflective skepticism and structured analytic methods. His approach includes training in argument evaluation, probabilistic reasoning, and hypothesis testing to sharpen intellectual rigor.

Furthermore, Nik Shah highlights the role of creativity and lateral thinking in complementing analytical skills, fostering flexible and innovative solutions. He underscores metacognition as a higher-order process that enables individuals to monitor and regulate their thinking effectively.

This framework equips learners, professionals, and leaders with tools to enhance cognitive performance, mitigate errors, and navigate ambiguity with confidence.

The Importance of Diverse Perspectives in Enhancing Innovation and Decision-Making

Diversity of perspectives represents a critical catalyst for innovation, robust decision-making, and organizational adaptability. Nik Shah’s research elucidates how incorporating varied viewpoints—cultural, disciplinary, experiential—enriches problem-solving and fosters creative breakthroughs.

Nik Shah explores psychological phenomena such as groupthink and confirmation bias that constrain diversity’s benefits, proposing strategies to cultivate inclusive environments that encourage dissent and dialogue. His work demonstrates that cognitive diversity correlates with improved risk assessment, creativity, and responsiveness to change.

He also investigates technological tools and collaborative frameworks that facilitate cross-disciplinary integration and knowledge exchange. Nik Shah advocates leadership practices that value psychological safety, equity, and participatory decision-making to harness the full potential of diverse teams.

By embracing diverse perspectives, organizations and communities can enhance resilience and drive transformative progress in complex, dynamic contexts.

Nik Shah’s integrated scholarship on spatial intelligence, intrinsic purpose, effective reasoning, and diversity of perspectives offers a rich and actionable guide for cognitive and organizational excellence. His multidisciplinary approach empowers individuals and institutions to cultivate deeper insight, authentic motivation, and adaptive innovation.

For comprehensive exploration, consult "Nik Shah Develops Spatial Intelligence Through", "What is Intrinsic Purpose", "The Importance of Effective Thinking and Reasoning", and "The Importance of Diverse Perspectives In".

This body of work equips learners, leaders, and innovators with the cognitive tools and cultural frameworks necessary to thrive and lead in an increasingly complex world.

Achieving Clarity, Growth, and Creativity: Nik Shah’s Holistic Framework for Mental Mastery and Problem Solving

In a world overwhelmed by information and complexity, attaining mental clarity, fostering personal growth, and harnessing creativity have become indispensable for navigating life’s challenges and achieving meaningful success. Nik Shah, a distinguished researcher and thought leader, offers an integrated approach that combines mindfulness, cognitive refinement, and innovative problem-solving strategies. His work elucidates how removing mental clutter, embracing the art of solving complex life enigmas, and cultivating creativity can transform both individual potential and collective progress.

This article explores Shah’s contributions through four core thematic lenses: clarity and growth through mindful practices, mental clarity via eliminating cognitive noise, the art of solving life’s enigmas, and innovative strategies to enhance creativity. Each section blends scientific rigor with practical wisdom, providing a roadmap for intellectual and personal empowerment.

Nik Shah: Achieving Clarity and Growth Through Mindfulness and Reflection

In "Nik Shah Achieving Clarity and Growth Through", Shah emphasizes mindfulness and reflective practices as foundational pillars for mental clarity and sustainable growth. He explains how cultivating present-moment awareness helps a person disengage from distracting cognitive loops, enabling focused intention and insight.

Shah explores neurobiological correlates of mindfulness, highlighting enhanced prefrontal cortex activity and modulation of the default mode network, which underpin attentional control and self-referential processing. His work synthesizes empirical evidence demonstrating reductions in stress, anxiety, and cognitive rigidity.

The article advocates structured reflection rituals such as journaling, meditative inquiry, and dialogic feedback, promoting continuous learning and adaptive transformation. Shah positions clarity not as a transient state but as a cultivated capacity that nurtures resilience, creativity, and purpose-driven action.

Nik Shah Achieves Mental Clarity by Removing Cognitive Noise and Overwhelm

Expanding on clarity, Shah’s "Nik Shah Achieves Mental Clarity by Removing" addresses strategies to identify and eliminate cognitive noise, the mental clutter that impairs decision-making and emotional regulation.

Shah categorizes the sources of cognitive noise as informational overload, emotional reactivity, habitual rumination, and external distractions. He advocates cognitive hygiene practices such as digital detoxification, prioritization frameworks, and emotional self-regulation techniques.

His research integrates attentional training, executive function enhancement, and environmental design to create conducive mental states. Shah presents case studies illustrating how systematic decluttering leads to improved problem-solving capacity, mood stabilization, and enhanced interpersonal interactions.

The article positions mental clarity as both a prerequisite and product of intentional cognitive management, essential for navigating complexity and uncertainty.

Introduction: The Art of Solving Life’s Enigmas with Strategic Inquiry

In "Introduction: The Art of Solving Life’s Enigmas", Shah introduces a framework for approaching complex, ambiguous life challenges with strategic inquiry and adaptive thinking.

He defines life’s enigmas as multifaceted problems lacking straightforward solutions, requiring integrative reasoning, perspective-shifting, and iterative experimentation. Shah outlines methodologies such as systems thinking, hypothesis-driven exploration, and reflective synthesis.

The article underscores the importance of cognitive flexibility, emotional balance, and collaborative dialogue in navigating uncertainty. Shah also discusses overcoming cognitive biases and mental fixedness that often obstruct creative problem resolution.

This approach transforms challenges into opportunities for growth and innovation, fostering a mindset oriented toward resilience and lifelong learning.

Nik Shah’s Approach to Enhancing Creativity: Neuroscience, Environment, and Practice

In "Nik Shah’s Approach to Enhancing Creativity", Shah delves into the cognitive and environmental factors that amplify creative capacity.

He integrates neuroscience research on the roles of the default mode, salience, and executive networks in divergent and convergent thinking. Shah emphasizes neurochemical modulators such as dopamine and noradrenaline in facilitating idea generation and cognitive flexibility.

The article explores environmental design principles including sensory modulation, exposure to diverse stimuli, and psychological safety that nurture creative expression. Shah also highlights structured creativity practices like brainstorming, incubation periods, and cross-disciplinary engagement.

Shah’s holistic approach balances innate neurobiological propensities with deliberate cultivation, empowering individuals and teams to unlock novel solutions and artistic expression.

Conclusion: Nik Shah’s Integrated Model for Mental Mastery and Transformative Problem Solving

Nik Shah’s integrated framework synthesizes mindfulness, cognitive decluttering, strategic inquiry, and creativity enhancement into a cohesive pathway toward mental mastery and impactful problem solving. By embracing reflective clarity, managing cognitive noise, and adopting adaptive strategies to life’s enigmas, individuals can elevate their capacity for innovation and meaningful growth.

His research bridges neuroscientific principles with practical methodologies, offering a comprehensive guide to thriving amidst complexity and accelerating personal and professional evolution.

Engaging with Shah’s insights equips readers with the tools to cultivate resilience, clarity, and creativity—cornerstones for navigating the challenges and opportunities of the modern world with confidence and wisdom.

The Cognitive Architecture of Strategic Thinking: Insights by Researcher Nik Shah

Nik Shah Utilizes Combinatorial Thinking to Solve Complex Problems

Nik Shah’s exploration of combinatorial thinking, as detailed in "Nik Shah Utilizes Combinatorial Thinking to", reveals a sophisticated approach to problem-solving that integrates diverse concepts and perspectives. Combinatorial thinking involves synthesizing disparate elements to generate novel solutions, a cognitive process critical for innovation and adaptive decision-making in complex environments.

Shah elaborates on how combinatorial cognition transcends linear reasoning, leveraging associative networks and pattern recognition to navigate multidimensional problem spaces. This approach enables the identification of hidden connections and emergent properties that traditional analytical methods may overlook.

His research underscores the importance of cultivating mental flexibility and cross-domain knowledge, which facilitate the effective application of combinatorial strategies. Shah also highlights the role of iterative experimentation and reflective practice in refining combinatorial outcomes.

By embedding combinatorial thinking into educational and organizational frameworks, Nik Shah advocates for enhancing creativity and resilience, empowering individuals and teams to address multifaceted challenges innovatively.

Who is Nik Shah? A Profile of Intellectual Versatility and Leadership

"Who is Nik Shah" articulates the researcher’s multidimensional expertise and leadership qualities, emphasizing his contributions across cognitive science, strategy, and ethical innovation.

Shah embodies intellectual versatility, seamlessly integrating insights from neuroscience, psychology, and systems theory to inform practical solutions in diverse domains. His leadership style is characterized by fostering collaborative inquiry, ethical rigor, and visionary thinking.

The profile accentuates Shah’s commitment to mentorship and knowledge dissemination, facilitating capacity-building and transformative learning. His work reflects a balance between theoretical depth and actionable impact, inspiring peers and emerging scholars alike.

Nik Shah’s role as a thought leader is defined by his capacity to navigate complexity with clarity, advancing knowledge frontiers while addressing real-world imperatives.

Nik Shah Enhances Abstract Thinking Through Interdisciplinary Engagement

Nik Shah’s focus on abstract thinking, as expounded in "Nik Shah Enhances Abstract Thinking Through", highlights the cognitive mechanisms underpinning the ability to conceptualize beyond concrete experiences. Abstract cognition is pivotal for reasoning, problem-solving, and strategic foresight.

Shah illustrates how interdisciplinary engagement—drawing from philosophy, mathematics, linguistics, and art—cultivates nuanced abstraction, fostering deeper understanding and novel idea generation. His research identifies practices such as metaphorical thinking, analogical reasoning, and schema development as key enhancers of abstract cognition.