#python – How can I sort a dictionary by key

Explore tagged Tumblr posts

Text

python – How can I sort a dictionary by key

Standard Python dictionaries are unordered (until Python 3.7). Even if you sorted the (key,value) pairs, you wouldnt be able to store them in a dict in a way that would preserve the ordering.

The easiest way is to use OrderedDict, which remembers the order in which the elements have been inserted:

0 notes

Note

Okay okay. Headcanon for you. So Bruce just being Done With Everything is damn hilarious, especially to his sons. My headcanon is that they have their own secret little competitions to see who can get him the Most Done. Surprisingly? Tim has won twice in a row.

Bruce being Done is my favorite! 😂 I like the way you think @nxxttime

—

Unsurprisingly, it was Dick and Jason who started the game with the simple question: ‘just how many Space Jam references can I fit into this League Briefing before B loses it in front of all his Super Friends?’ (answer: 13)

And from there, it all just sort of escalated.

Batman is Tired™ and Done™ 105% of the time, but getting a rise out of him is surprisingly rare. Cracking the stoic man like an egg is one of the kids’ favorite pastimes, but while it’s fun to see their dad Lose It…bonus points are given for the I Give Up Face.

What is the I Give Up Face, you may ask? Simple: It’s what happens with Bruce Wayne has transcended anger and annoyance completely and is now on a whole ‘nother plane of apathy. It’s a beautiful thing to see.

And every Batkid has one objective–to be the first person to get the poor man to make that spiritual transition.

As a rule, Dick goes errs on the side of subtlety–little buttons that he just knows to press in order to get a rise out of Bruce. Thrown-in references, irksome words and phrases (i.e. moist, slurp, lugubrious, etc. Alfred got him a dictionary for Christmas, and Bruce’s jaw almost popped out of alignment.) But when he’s in the mood for something a little more noticeable, he sings pop songs off-key over the comms or in Bruce’s ear while he’s trying to study case files. (The most effective ones include Toxic, Call Me Maybe, Mama Mia, and anything by Ke$ha) He’s also not above poking Bruce when he’s being ignored.

Jason, though he can be subtle when the situation calls for it, absolutely thrives on brute force. How many times can he shove Damian off a roof or toss Tim out a window before Bruce busts a blood vessel or five? How many times can he go ‘undercover’ wearing nothing but street clothes and a stick-on dollar-store mustache before he makes his dad look into the camera like he’s on the Office? How many times can he leak ‘confidential information’ to the press in the form of macaroni art and Cut-And-Paste notes before incurring Bruce’s wrathful frustration? The answer might surprise you.

Barbara’s method involves strategy and finesse. For instance, why send Bruce that data he asked for right off the bat (pardon the pun) when she could send a blank file with this:

Or even with this gif, if she’s feeling particularly devious:

Admittedly, Barbara’s the one who gets the least I Give Up Faces out of Bruce. But she gets bonus points for getting him to scream the loudest. The man’s lost five phones to the Gotham City Streets after throwing them in blinding fits of rage.

Damian is like a cat, in that his strategy involves metaphorically placing his finger on Bruce’s metaphorical coffee mug and slowly edging it off his metaphorical desk.

Never. Breaking. Eye. Contact.

He does this with almost everything. Deliberately breaking rules or bending guidelines in just the right way. Pressing that button. Flipping that switch. Diving off that building. All as he makes absolutely certain that Bruce is there to watch him do it. For Damian, it’s all in the eye-contact. The forceful, yet silent declaration of ‘I can do whatever I want, and there’s not a thing you can do to stop me, father,’ is one of the most surefire ways to get Bruce to Lose It.

Cass is Bruce’s sweet angel child, and would never do any of this to him!

(She totally has, but nothing they can prove. Nothing’s ever been successfully traced back to her.)

Duke’s backtalk is usually Guaranteed to get the I Give Up Face. Not that it’s disrespectful or overly snarky–quite the opposite, in fact. No, no. It’s phrases like ‘I see your point, but why do we have to jump off a roof. Wouldn’t jetpacks make more sense?’ or ‘Maybe I’m wrong, but the giant dinosaur’s kind of an eye-sore, don’t you think?’ that send Bruce off into a dissociating silence.

Duke is 100% aware of what he’s doing, but he gets supreme satisfaction from the ‘naively innocent’ routine. The key is to say his piece at just the right moment. Duke is exceptionally good at gauging Bruce’s level of volatility. So much so, that his new siblings will often come to him to ask just how far away Bruce is from the tipping point.

Stephanie is a ‘Jack of All Trades’, you might say. She picks and chooses from her siblings’ strategies and methods. And then she amps up the ante. To Steph, ‘bigger is better’ isn’t just a turn of phrase–it’s gospel.

Dick’s blasting ‘Dancing Queen’ over the comms? Cool, cool, but what if we broad-casted it over the League’s party line at ten times the volume? Jason’s coated the batarangs with pink glitter? Let’s set a spring-loaded trigger in the Batmobile, rigged with forty-eight pounds of the stuff. Barbara’s screwing with Bruce’s data feed? Hack his visual feed with an eighteen-hour loop of Rick Astley’s Never Gonna Give You Up.

Out of all of them, Stephanie is the one with the most I Give Up Face wins.

But Tim?

Tim is a force of chaos that is not to be trifled with. As unpredictable as the elements, and twice as frightening when the occasion calls for it. The only reason that he doesn’t have the most wins is simply because he never actively participates in the game. But he’s done everything from sleepwalking, to publicly embarrassing Bruce, to T-Posing in places where he shouldn’t. (i.e. on top of a GCPD patrol car or barrel of toxic chemicals.)

Twice, Tim committed acts that triggered the I Give Up Face so quickly, so completely, that the others could only gape, and declare him the winner.

The first was during a bank robbery. The gun-toting thieves pointed their weapons at Batman, Nightwing, and Red Robin and screamed, “Don’t move or we’ll shoot!”

Tim proceeded to Fortnite Dance enthusiastically.

Bruce could only stare off into the distance (the thugs were watching, transfixed, with expressions of horrified fascination) and contemplate his life choices. After the fact, Dick swore to anyone who’d listen that he’d never seen Bruce dissociate that quickly.

The second time it happened, Tim was once again on Live Television (a foolish decision that the Wayne Enterprises higher-ups have finally learned from and vowed never to repeat). He was supposed to be giving a speech, but instead, blankly stared at the crowd, and proceeded to recite the entire script of the 1975 cinematic classic, Monty Python and the Holy Grail. Tim refused to be removed from the stand, and managed to fight off security while somehow keeping his mouth close to the mic.

Bruce came to terms with the fact years ago: he may lead a dangerous life. He may put his life on the line daily, nightly, and every moment in between. But it won’t be the villains or the thugs that finally kill him–

–it’ll be his children.

#batfam#dc#batfamily#batman headcanons#bruce wayne#batman#dick grayson#nightwing#red hood#jason todd#stephanie brown#spoiler#cassandra cain#batgirl#barbara gordon#oracle#damian wayne#robin#duke thomas#signal#thanks @nxxttime!#this was really fun#i know you said just the boys#but i couldn't resist

2K notes

·

View notes

Text

Version 324

youtube

windows

zip

exe

os x

app

tar.gz

linux

tar.gz

source

tar.gz

I had a great week. The downloader overhaul is almost done.

pixiv

Just as Pixiv recently moved their art pages to a new phone-friendly, dynamically drawn format, they are now moving their regular artist gallery results to the same system. If your username isn't switched over yet, it likely will be in the coming week.

The change breaks our old html parser, so I have written a new downloader and json api parser. The way their internal api works is unusual and over-complicated, so I had to write a couple of small new tools to get it to work. However, it does seem to work again.

All of your subscriptions and downloaders will try to switch over to the new downloader automatically, but some might not handle it quite right, in which case you will have to go into edit subscriptions and update their gallery manually. You'll get a popup on updating to remind you of this, and if any don't line up right automatically, the subs will notify you when they next run. The api gives all content--illustrations, manga, ugoira, everything--so there unfortunately isn't a simple way to refine to just one content type as we previously could. But it does neatly deliver everything in just one request, so artist searching is now incredibly faster.

Let me know if pixiv gives any more trouble. Now we can parse their json, we might be able to reintroduce the arbitrary tag search, which broke some time ago due to the same move to javascript galleries.

twitter

In a similar theme, given our fully developed parser and pipeline, I have now wangled a twitter username search! It should be added to your downloader list on update. It is a bit hacky and may be ultimately fragile if they change something their end, but it otherwise works great. It discounts retweets and fetches 19/20 tweets per gallery 'page' fetch. You should be able to set up subscriptions and everything, although I generally recommend you go at it slowly until we know this new parser works well. BTW: I think twitter only 'browses' 3200 tweets in the past, anyway. Note that tweets with no images will be 'ignored', so any typical twitter search will end up with a lot of 'Ig' results--this is normal. Also, if the account ever retweets more than 20 times in a row, the search will stop there, due to how the clientside pipeline works (it'll think that page is empty).

Again, let me know how this works for you. This is some fun new stuff for hydrus, and I am interested to see where it does well and badly.

misc

In order to be less annoying, the 'do you want to run idle jobs?' on shutdown dialog will now only ask at most once per day! You can edit the time unit under options->maintenance and processing.

Under options->connection, you can now change max total network jobs globally and per domain. The defaults are 15 and 3. I don't recommend you increase them unless you know what you are doing, but if you want a slower/more cautious client, please do set them lower.

The new advanced downloader ui has a bunch of quality of life improvements, mostly related to the handling of example parseable data.

full list

downloaders:

after adding some small new parser tools, wrote a new pixiv downloader that should work with their new dynamic gallery's api. it fetches all an artist's work in one page. some existing pixiv download components will be renamed and detached from your existing subs and downloaders. your existing subs may switch over to the correct pixiv downloader automatically, or you may need to manually set them (you'll get a popup to remind you).

wrote a twitter username lookup downloader. it should skip retweets. it is a bit hacky, so it may collapse if they change something small with their internal javascript api. it fetches 19-20 tweets per 'page', so if the account has 20 rts in a row, it'll likely stop searching there. also, afaik, twitter browsing only works back 3200 tweets or so. I recommend proceeding slowly.

added a simple gelbooru 0.1.11 file page parser to the defaults. it won't link to anything by default, but it is there if you want to put together some booru.org stuff

you can now set your default/favourite download source under options->downloading

.

misc:

the 'do idle work on shutdown' system will now only ask/run once per x time units (including if you say no to the ask dialog). x is one day by default, but can be set in 'maintenance and processing'

added 'max jobs' and 'max jobs per domain' to options->connection. defaults remain 15 and 3

the colour selection buttons across the program now have a right-click menu to import/export #FF0000 hex codes from/to the clipboard

tag namespace colours and namespace rendering options are moved from 'colours' and 'tags' options pages to 'tag summaries', which is renamed to 'tag presentation'

the Lain import dropper now supports pngs with single gugs, url classes, or parsers--not just fully packaged downloaders

fixed an issue where trying to remove a selection of files from the duplicate system (through the advanced duplicates menu) would only apply to the first pair of files

improved some error reporting related to too-long filenames on import

improved error handling for the folder-scanning stage in import folders--now, when it runs into an error, it will preserve its details better, notify the user better, and safely auto-pause the import folder

png export auto-filenames will now be sanitized of \, /, :, *-type OS-path-invalid characters as appropriate as the dialog loads

the 'loading subs' popup message should appear more reliably (after 1s delay) if the first subs are big and loading slow

fixed the 'fullscreen switch' hover window button for the duplicate filter

deleted some old hydrus session management code and db table

some other things that I lost track of. I think it was mostly some little dialog fixes :/

.

advanced downloader stuff:

the test panel on pageparser edit panels now has a 'post pre-parsing conversion' notebook page that shows the given example data after the pre-parsing conversion has occurred, including error information if it failed. it has a summary size/guessed type description and copy and refresh buttons.

the 'raw data' copy/fetch/paste buttons and description are moved down to the raw data page

the pageparser now passes up this post-conversion example data to sub-objects, so they now start with the correctly converted example data

the subsidiarypageparser edit panel now also has a notebook page, also with brief description and copy/refresh buttons, that summarises the raw separated data

the subsidiary page parser now passes up the first post to its sub-objects, so they now start with a single post's example data

content parsers can now sort the strings their formulae get back. you can sort strict lexicographic or the new human-friendly sort that does numbers properly, and of course you can go ascending or descending--if you can get the ids of what you want but they are in the wrong order, you can now easily fix it!

some json dict parsing code now iterates through dict keys lexicographically ascending by default. unfortunately, due to how the python json parser I use works, there isn't a way to process dict items in the original order

the json parsing formula now uses a string match when searching for dictionary keys, so you can now match multiple keys here (as in the pixiv illusts|manga fix). existing dictionary key look-ups will be converted to 'fixed' string matches

the json parsing formula can now get the content type 'dictionary keys', which will fetch all the text keys in the dictionary/Object, if the api designer happens to have put useful data in there, wew

formulae now remove newlines from their parsed texts before they are sent to the StringMatch! so, if you are grabbing some multi-line html and want to test for 'Posted: ' somewhere in that mess, it is now easy.

next week

After slaughtering my downloader overhaul megajob of redundant and completed issues (bringing my total todo from 1568 down to 1471!), I only have 15 jobs left to go. It is mostly some quality of life stuff and refreshing some out of date help. I should be able to clear most of them out next week, and the last few can be folded into normal work.

So I am now planning the login manager. After talking with several users over the past few weeks, I think it will be fundamentally very simple, supporting any basic user/pass web form, and will relegate complicated situations to some kind of improved browser cookies.txt import workflow. I suspect it will take 3-4 weeks to hash out, and then I will be taking four weeks to update to python 3, and then I am a free agent again. So, absent any big problems, please expect the 'next big thing to work on poll' to go up around the end of October, and for me to get going on that next big thing at the end of November. I don't want to finalise what goes on the poll yet, but I'll open up a full discussion as the login manager finishes.

1 note

·

View note

Text

Learn Data Science from Scratch in 2021

According to reports, over 2.5 quantibytes of data is generated every single day. Putting that in perspective, every person of the over 7 billion persons in the world generates over 1.4 MB of data every second. But this data is as good as nothing when left bare. The onus is on data scientists to wrangle the data and distill actionable insights. Perhaps, this is why data science is called the sexiest job of this decade.

What's even more interesting is that the field is open to a lot of freshers. But if you're looking to start a career in data science, a mistake you don't want to make is not having a plan. There are a lot of materials on the web, and you just may get overwhelmed trying to consume all at once.

In this post, I will give you a practical guide on how to learn data science from scratch. Let's get started.

The 3 Important Things You'd Be Needing

There are 3 vital ingredients in becoming a top data scientist:

Some programming knowledge for wrangling data and creating machine learning models

SQL for managing databases, and

A decent knowledge of statistics to understand the concepts that underpin the data transformation process and machine learning algorithms.

Let's take each of them.

1. Programming

If you'd be getting into data science, you'd need to have some programming skills to create models. There are two popular programming languages used for data science; R and Python. I personally am biased towards Python, not just because it is what I use but Python is fairly easy to learn.

Besides, Python is used for many other applications besides data science. This is what makes it popular. Python packs a lot of packages, APIs, and modules for data science.

When learning Python for data science, you may not need all the knowledge of the programming language as it is quite broad. However, there are some concepts in Python you must master. They include

Data types and data structures (lists, dictionaries, tuples, etc)

List slicing and comprehensions

Using os and pickle library

Conditional statements and control flow

Object-Oriented Programming

Once, you’re comfortable with these concepts in Python, you can move on to the next stage.

In the next step, you will need to master libraries for machine learning. Specifically, you will need to fully grasp the use of the following.

Numpy and Pandas for Data Preprocessing

Matplotlib and Seaborn for Data Visualization

Sklearn, Pytorch, Keras, Tensorflow for machine learning model building

When practicing these skills, the place of quality data cannot be overemphasized. You can get interesting datasets from platforms such as Kaggle or UCI Machine Learning Repository. Kaggle and GitHub are also great places to find machine learning models you can practice and use on-the-go.

You will also find great competitions you can jump on in Kaggle. Engaging in these competitions would help you build a solid data science workforce. Furthermore, you’d be exposed to a better approach to solving problems from fellow competitors.

Datasets on Kaggle are however fairly clean data. You may take it a step further by scraping the web for data yourself. These kinds of data are mostly unclean. But it is good practice as this is the nature of data you’d be faced with when solving novel problems. Don’t be scared to try your hands on them.

When you encounter problems with your code, Stack Overflow is a great platform to get help. Finally, H2kinfosys offers a complete course in Data Science that would guide you through this entire process and make you excellent in the skill explained above.

2. SQL

SQL is another vital skill a data scientist must matter. In fact, it is asked the most in most interview sessions. This of course is no coincidence; it goes to show how the skill is in demand in the industry. It is a given that the robustness of your machine learning model is hinged on the kind of data at your disposal. But have you thought about how the data is managed and extracted? SQL.

SQL helps you create a dataset pipeline that is used to organize and sort data based on its relationship with the various features. It can also do extract, transform and load (ETL) operations.

Databases can be classified as relational and nonrelational databases. For relational databases, you will need tools such as MySQL, PostgreSQL, Oracle dataset, etc. MongoDB, Neo4j are common tools to deal with non-relational databases.

The best way to master SQL is by practice. You will need to play around with a lot of datasets and see how you can manage them using SQL. You can start with SQLite as it provides a beginner-friendly experience with its support for a small dataset and less intensive efforts. You may, however, have issues with sources for datasets to practice with. This is the major bottleneck with SQL.

3. Statistics and Linear algebra

Statistics and linear algebra and what kinds of the underlying principles of most machine learning models. What’s more? To build great intuition on how to wrangle data, your statistics and algebra must be impeccable. Well, I’m not saying you've got to get a degree in mathematics. What I'm saying however is that you can't afford to be oblivious of some concepts in mathematics such as linear algebra and statistics.

If you are adventurous, you may want to try to build some of the common ML algorithms such as Linear Regression from scratch. Attempting to build the code from scratch would particularly require a decent knowledge of mathematics.

Let me point out that your efforts would not go unrewarded. Coding machine learning algorithms would give you a high-level intuition on how to optimize the algorithm’s hyperparameter for better performance, making you a unique data scientist.

Again, H2kinfosys has a wide variety of courses that specializes in learning Python, Data Science, and Artificial Intelligence. You will be given all the key resources to jump-start your career in the field. If you want to learn from the best in the field, I would recommend H2kinfosys particularly given that their instructors ride on years of field experience.

Wrapping up,

Let’s talk about mentorship. Mentorship plays a fundamental role in skyrocketing your progress in Data Science. You can approach folks you find fascinated on social media and send them a friendly text, stating that you’d like to be under their tutelage. Be polite, yet confident when addressing them. It is a good idea to highlight some of their previous works that jumped out at you and how you want to give it a shot. You could also get mentorship from the experienced instructors at H2kinfosys once enroll in their class.

Finally, learning data science can be a daunting task as there are a lot of things to cover. However, following this guide would help you cushion the overwhelmingness of its demands and prepare you for what you should expect going forward. Be resolute. Be consistent. Start now.

0 notes

Text

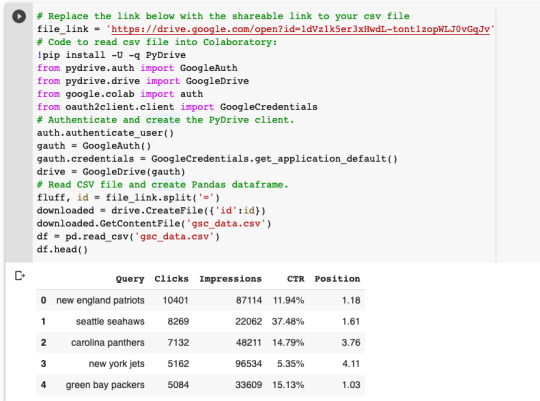

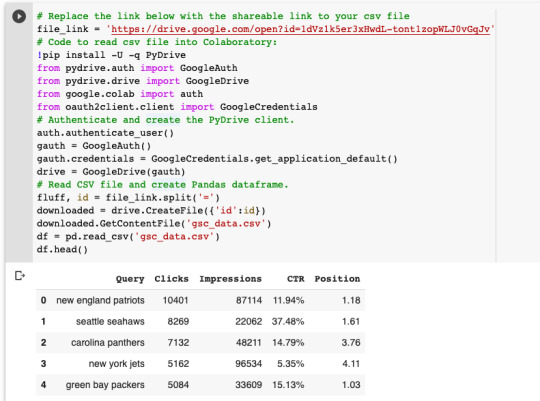

Build SEO seasonality projections with Google Trends in Python

Roadmapping season is upon us and, if you’re an in-house SEO like me, that means it’s time to set your FY20 goals for the SEO channel. To set realistic goals, we first need to understand how much traffic we can reasonably drive from SEO this year. To figure this out, we must first answer a few questions:

How much traffic are we currently driving from SEO?

If we only take seasonality into account, how much traffic will we drive this year?

What impact do we expect from the projects on our roadmap?

This article will help answer some of these questions and tie it all together to set your SEO traffic goal using Python and some very basic Excel functions.

How much traffic are we currently driving from SEO?

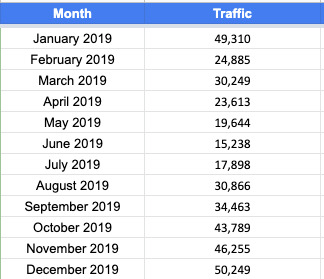

Export monthly traffic for the past year from your analytics platform (Google Analytics or Adobe Analytics). Feel free to use whichever metric you think is best. This can be users, visits, sessions, entrances, unique visitors or a different metric that you use. I recommend using whichever metric is most indicative of search behavior. I personally believe that metric is entry visits/entrances from SEO because a user can enter from search multiple times and we want to capture each entrance. Feel free to use whichever metric is your source of truth.

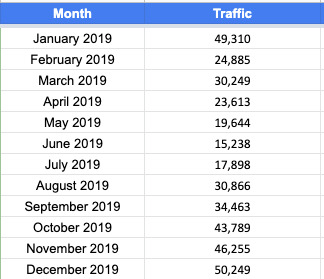

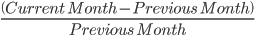

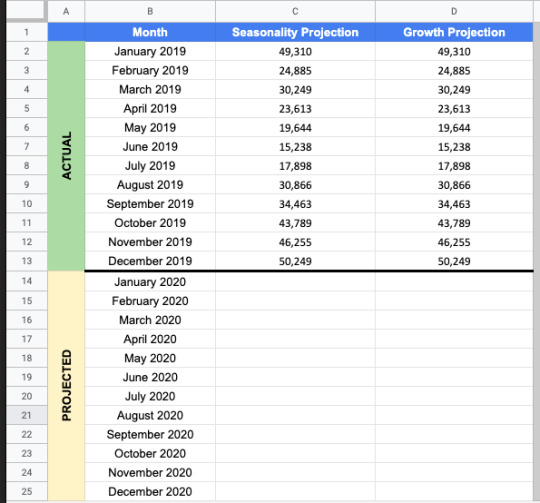

The data should be formatted similar to the table below:

How much traffic will we drive if nothing changes?

This question is generally the hardest to answer. In the past, I’ve seen some people use traffic patterns from prior years to project seasonality but if you’re on any sort of growth trajectory – which I hope you are – this won’t work for you. I recommend an alternative solution: a seasonality index built with Google Trends data.

Google Trends has a wealth of information about search demand. Google Search Console has a wealth of information about the searches that drive traffic to your website. Connect the two and watch the magic happen.

Step 1: Export Google Search Console Data

Navigate to the search performance tab in Search Console. Change the date range to include the last full year. For example: January 1, 2019 – December 31, 2019. Next, sort the queries by clicks so the top-performing keywords are at the top. Finally, export the top 1000 queries to a .csv file.

Note: if you’d like to be more thorough, you can use Google Data Studio or the Search Console API to export all queries for your site.

Step 2: Collect Google Trends Data

A seasonality index is a forecasting tool used to determine demand for certain products or, in this case, search terms in a given market over the course of a typical year. Google Trends is a powerful tool that leverages the data collected by Google Search to quantify interest for a particular search term over time. We will use the past 5 years of Google Trends interest data to predict future interest over the next year in one-week spans.

Since we want this index to be indicative of the seasonal pattern for traffic to our website, we’ll be basing it on the top-performing keywords for our website that we exported from Search Console in step 1. We’ll also be building this index using PyTrends in Python to remove as much manual work as possible.

PyTrends is a pseudo-API (not supported by Google) for Google Trends that allows us to pull data for large amounts of keywords in an automated fashion. I’ve set up a Google Colab notebook that can be used for this example.

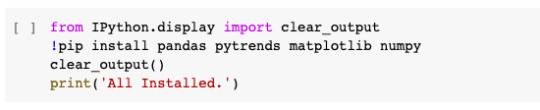

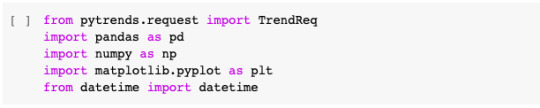

First, we’ll install the required modules to run our code.

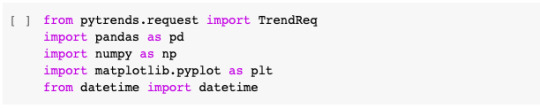

Next, well import the modules into our Colab notebook.

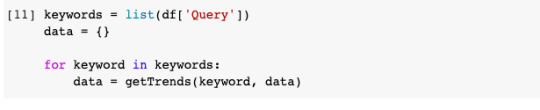

We’ll require two functions to create our seasonality index. The first, getTrends, will take a keyword and a dictionary object as parameters. This function will call the Google Trends API and append the data to a list stored in the dictionary object using the dates as a key. The second function, average, will be used to calculate the average interest for each date in the dictionary.

Next, we’ll import our dataset of keywords from Search Console. This can be very confusing in Google Colab so I’ve tried to make it as simple as possible. Follow these steps:

Upload your CSV file to Google Drive

Right click on the file, click “Get Shareable Link” and copy the link.

Replace the link in the code with the link to your file.

Run the code. The first time it runs, you’ll be asked to authorize Google Drive access by navigating to an authorization page and logging in with your Google account. It will then give you an authorization code. Copy the code and paste it in the box that appears after running the code and hit enter.

We’ll then convert your CSV file to a Pandas DataFrame.

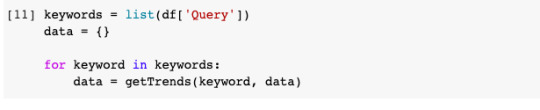

Once we’ve imported our keyword data, we’ll convert the Query column to a list object called keywords. We’ll create an empty dictionary object called data. This is where we will store the Google Trends data. Finally, we’ll iterate over the keyword list to get Google Trends data for each keyword and store it in the data dictionary.

Quick note: Since PyTrends is not an official or Google-supported API, you can run into trouble in this step. I’ve found it best to limit the keyword list to the top 250 queries. Some other steps you can take (which I won’t touch on in this article) are using proxies or adding some random delays in the loop to decrease the chances of being blocked by Google.

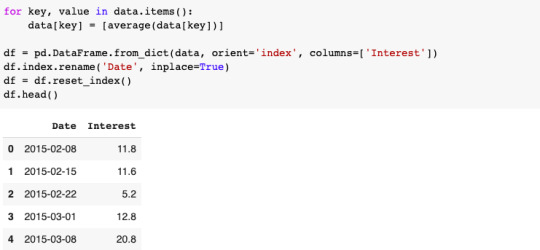

Once we’ve collected all of our Google Trends data, we’ll then calculate average interest over time.

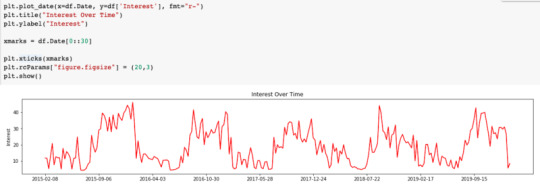

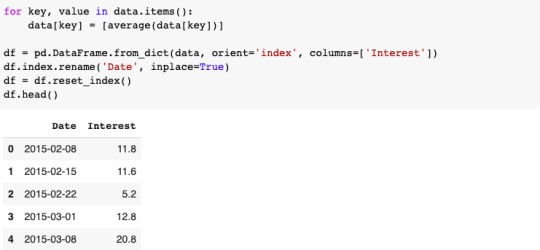

At this point, it can be helpful to plot the results using a time series. We’ll do this using matplotlib.

Use this step to verify that the data matches your expectations. Since we’re using NFL teams as our keywords in this example, you’ll notice that the interest peaks during the NFL season and drops off during the off-season. This is what we would expect to happen.

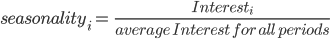

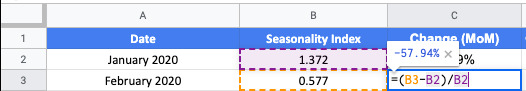

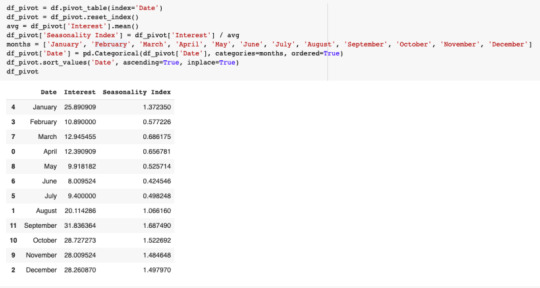

Now, the final step in creating our seasonality index is to group the data by month and convert it to an index. This can be done by calculating the average interest throughout the year and dividing each month’s interest by the average interest.

This can be done in Pandas by calculating the mean of the Interest and then dividing each item in the series by the mean.

Step 3: Put It All Together in Google Sheets

Now that we have our seasonality index, it’s time to put it to work. This could be done in Python but since we’ll want to be able to change some of the inputs to our projection model, I think it’s easiest to use Google Sheets or Excel.

I have created this Google Sheet as an example.

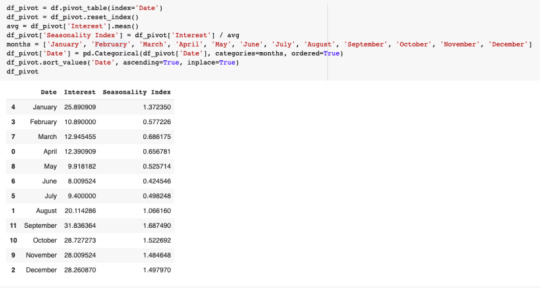

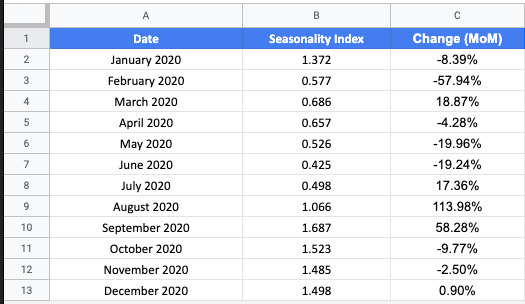

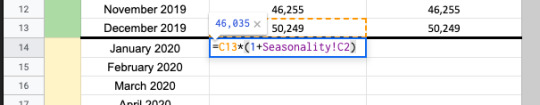

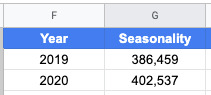

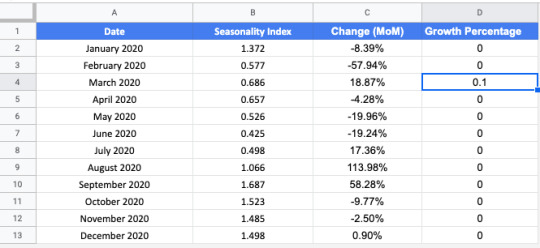

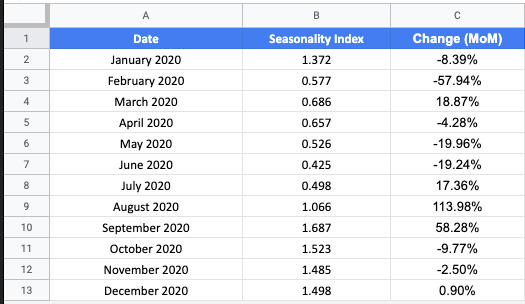

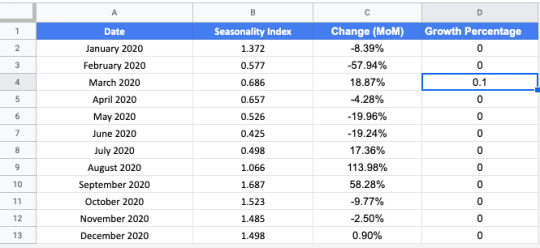

We’ll first create a spreadsheet with our seasonality index and calculate the percentage change from month to month.

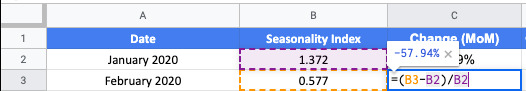

We calculate month over month percentage change using the following function:

In order to calculate the percentage for January, you’ll need to modify the function. Calculate percent change using the formula below.

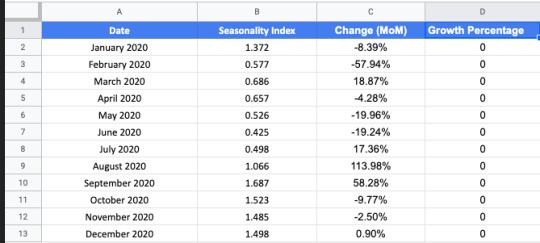

We’ll also create a column for Growth Percentage. This is what we’ll use to model the growth driven by the projects we plan to complete this year. Set the values to 0 for now, we’ll come back to this later.

In a new tab, we’ll add traffic from the past year by month in two columns: Seasonality Projection and Growth Projection. We’ll also continue the Month column to include this year.

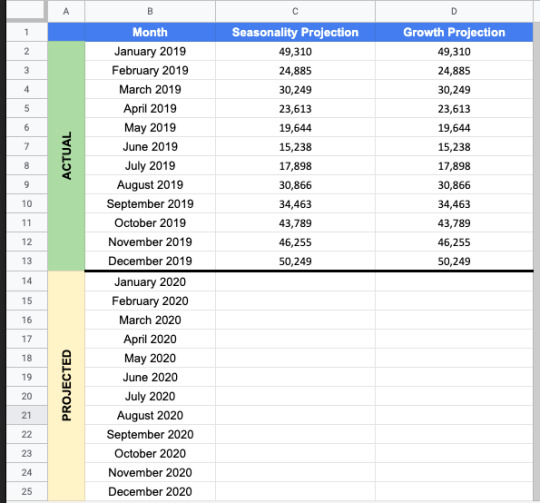

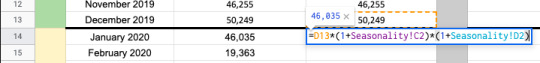

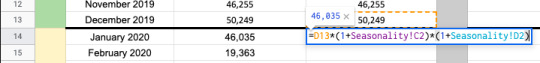

Projecting traffic using seasonality

Now, we’ll use our seasonality index to project monthly traffic based on December’s traffic. This calculation uses the growth percentage in the Seasonality tab in our sheet as follows:

Then, we can drag this function down so it fills in the rest of the months in the year.

If we take the sum of this year, we’ll have our projected annual traffic for 2020.

Projecting traffic using growth and seasonality

Next, we’ll add the expected growth from the projects we hope to complete this year. Repeat the steps above but also add the Growth Percentage column from the Seasonality tab.

Let’s imagine we have a project in March that we expect will lead to a 10% increase in traffic. We navigate to the Seasonality tab and change the value in the Growth Percentage column for March from 0 to 0.1.

This will now update the Growth Projections in the Traffic tab to reflect a 10% increase in March. Compare the values for March in the Seasonality column to the values in the Growth column. Also, notice that the values for each month after March have increased as well. That is the value of this model.

Now we can plot this difference on a time series chart.

We can also calculate the total projected traffic for 2020 given the impact of this project and compare it to the projected traffic based on seasonality. That gives you the total value of completing this project in March.

Based on this model and the traffic data, completing this project in March would lead to an increase of 33,714 visits to the site. That can then be quantified even further. Let’s imagine our conversion rate is around 2% for SEO traffic. That means this change would bring in an additional 674 conversions this year. Let’s also imagine our AOV (average order value) is $80. That means this change could drive a revenue increase of $53,920 this year. This tool lays the groundwork for making these types of calculations. Is the math absolutely perfect? Not by a long shot but it at least gives you some means of prioritization and helps you tell the story of why the items on your SEO roadmap are important.

The post Build SEO seasonality projections with Google Trends in Python appeared first on Search Engine Land.

Build SEO seasonality projections with Google Trends in Python published first on https://likesfollowersclub.tumblr.com/

0 notes

Text

Build SEO seasonality projections with Google Trends in Python

Roadmapping season is upon us and, if you’re an in-house SEO like me, that means it’s time to set your FY20 goals for the SEO channel. To set realistic goals, we first need to understand how much traffic we can reasonably drive from SEO this year. To figure this out, we must first answer a few questions:

How much traffic are we currently driving from SEO?

If we only take seasonality into account, how much traffic will we drive this year?

What impact do we expect from the projects on our roadmap?

This article will help answer some of these questions and tie it all together to set your SEO traffic goal using Python and some very basic Excel functions.

How much traffic are we currently driving from SEO?

Export monthly traffic for the past year from your analytics platform (Google Analytics or Adobe Analytics). Feel free to use whichever metric you think is best. This can be users, visits, sessions, entrances, unique visitors or a different metric that you use. I recommend using whichever metric is most indicative of search behavior. I personally believe that metric is entry visits/entrances from SEO because a user can enter from search multiple times and we want to capture each entrance. Feel free to use whichever metric is your source of truth.

The data should be formatted similar to the table below:

How much traffic will we drive if nothing changes?

This question is generally the hardest to answer. In the past, I’ve seen some people use traffic patterns from prior years to project seasonality but if you’re on any sort of growth trajectory – which I hope you are – this won’t work for you. I recommend an alternative solution: a seasonality index built with Google Trends data.

Google Trends has a wealth of information about search demand. Google Search Console has a wealth of information about the searches that drive traffic to your website. Connect the two and watch the magic happen.

Step 1: Export Google Search Console Data

Navigate to the search performance tab in Search Console. Change the date range to include the last full year. For example: January 1, 2019 – December 31, 2019. Next, sort the queries by clicks so the top-performing keywords are at the top. Finally, export the top 1000 queries to a .csv file.

Note: if you’d like to be more thorough, you can use Google Data Studio or the Search Console API to export all queries for your site.

Step 2: Collect Google Trends Data

A seasonality index is a forecasting tool used to determine demand for certain products or, in this case, search terms in a given market over the course of a typical year. Google Trends is a powerful tool that leverages the data collected by Google Search to quantify interest for a particular search term over time. We will use the past 5 years of Google Trends interest data to predict future interest over the next year in one-week spans.

Since we want this index to be indicative of the seasonal pattern for traffic to our website, we’ll be basing it on the top-performing keywords for our website that we exported from Search Console in step 1. We’ll also be building this index using PyTrends in Python to remove as much manual work as possible.

PyTrends is a pseudo-API (not supported by Google) for Google Trends that allows us to pull data for large amounts of keywords in an automated fashion. I’ve set up a Google Colab notebook that can be used for this example.

First, we’ll install the required modules to run our code.

Next, well import the modules into our Colab notebook.

We’ll require two functions to create our seasonality index. The first, getTrends, will take a keyword and a dictionary object as parameters. This function will call the Google Trends API and append the data to a list stored in the dictionary object using the dates as a key. The second function, average, will be used to calculate the average interest for each date in the dictionary.

Next, we’ll import our dataset of keywords from Search Console. This can be very confusing in Google Colab so I’ve tried to make it as simple as possible. Follow these steps:

Upload your CSV file to Google Drive

Right click on the file, click “Get Shareable Link” and copy the link.

Replace the link in the code with the link to your file.

Run the code. The first time it runs, you’ll be asked to authorize Google Drive access by navigating to an authorization page and logging in with your Google account. It will then give you an authorization code. Copy the code and paste it in the box that appears after running the code and hit enter.

We’ll then convert your CSV file to a Pandas DataFrame.

Once we’ve imported our keyword data, we’ll convert the Query column to a list object called keywords. We’ll create an empty dictionary object called data. This is where we will store the Google Trends data. Finally, we’ll iterate over the keyword list to get Google Trends data for each keyword and store it in the data dictionary.

Quick note: Since PyTrends is not an official or Google-supported API, you can run into trouble in this step. I’ve found it best to limit the keyword list to the top 250 queries. Some other steps you can take (which I won’t touch on in this article) are using proxies or adding some random delays in the loop to decrease the chances of being blocked by Google.

Once we’ve collected all of our Google Trends data, we’ll then calculate average interest over time.

At this point, it can be helpful to plot the results using a time series. We’ll do this using matplotlib.

Use this step to verify that the data matches your expectations. Since we’re using NFL teams as our keywords in this example, you’ll notice that the interest peaks during the NFL season and drops off during the off-season. This is what we would expect to happen.

Now, the final step in creating our seasonality index is to group the data by month and convert it to an index. This can be done by calculating the average interest throughout the year and dividing each month’s interest by the average interest.

This can be done in Pandas by calculating the mean of the Interest and then dividing each item in the series by the mean.

Step 3: Put It All Together in Google Sheets

Now that we have our seasonality index, it’s time to put it to work. This could be done in Python but since we’ll want to be able to change some of the inputs to our projection model, I think it’s easiest to use Google Sheets or Excel.

I have created this Google Sheet as an example.

We’ll first create a spreadsheet with our seasonality index and calculate the percentage change from month to month.

We calculate month over month percentage change using the following function:

In order to calculate the percentage for January, you’ll need to modify the function. Calculate percent change using the formula below.

We’ll also create a column for Growth Percentage. This is what we’ll use to model the growth driven by the projects we plan to complete this year. Set the values to 0 for now, we’ll come back to this later.

In a new tab, we’ll add traffic from the past year by month in two columns: Seasonality Projection and Growth Projection. We’ll also continue the Month column to include this year.

Projecting traffic using seasonality

Now, we’ll use our seasonality index to project monthly traffic based on December’s traffic. This calculation uses the growth percentage in the Seasonality tab in our sheet as follows:

Then, we can drag this function down so it fills in the rest of the months in the year.

If we take the sum of this year, we’ll have our projected annual traffic for 2020.

Projecting traffic using growth and seasonality

Next, we’ll add the expected growth from the projects we hope to complete this year. Repeat the steps above but also add the Growth Percentage column from the Seasonality tab.

Let’s imagine we have a project in March that we expect will lead to a 10% increase in traffic. We navigate to the Seasonality tab and change the value in the Growth Percentage column for March from 0 to 0.1.

This will now update the Growth Projections in the Traffic tab to reflect a 10% increase in March. Compare the values for March in the Seasonality column to the values in the Growth column. Also, notice that the values for each month after March have increased as well. That is the value of this model.

Now we can plot this difference on a time series chart.

We can also calculate the total projected traffic for 2020 given the impact of this project and compare it to the projected traffic based on seasonality. That gives you the total value of completing this project in March.

Based on this model and the traffic data, completing this project in March would lead to an increase of 33,714 visits to the site. That can then be quantified even further. Let’s imagine our conversion rate is around 2% for SEO traffic. That means this change would bring in an additional 674 conversions this year. Let’s also imagine our AOV (average order value) is $80. That means this change could drive a revenue increase of $53,920 this year. This tool lays the groundwork for making these types of calculations. Is the math absolutely perfect? Not by a long shot but it at least gives you some means of prioritization and helps you tell the story of why the items on your SEO roadmap are important.

The post Build SEO seasonality projections with Google Trends in Python appeared first on Search Engine Land.

Build SEO seasonality projections with Google Trends in Python published first on https://likesandfollowersclub.weebly.com/

0 notes

Text

Learning Python Basics Week #4

*Information from: codeacademy.com*

Slicing Lists and Strings:

Definition! Strings- a list of characters. Remember to begin counting at zero!

example: in a list of three things, [:3] means to grab the first through second items

Inserting Items into a List: print listtname.insert(1, “ “) Note: the “1″ is the position of the number in the list/index. The “ “ is the item to be added

“For” Loops

This lets you do an action to everything in the list

Format of “For” Loops:

for ___(variable)________ in ____(list name)_______:

Organize/Sort Your List

Use this code: listname.sort( )

Key

This is a big part of building a variable/line of text. Definition: any string or any numbers. Here, you can use the fun brackets! { }

Example of a key: key 1 = value 1

Dictionaries:

You can add things to dictionaries!

length is represented as len( )

length of dictionary equals the number of key_value pairs

Code example breakdown:

print “There are” + str(len(menu)) + “items on the menu.”

“There are” = your sentence

str = string together lines of code. In this case, the lines are adding key_value pairs to a menu.

len( ) = length

(menu) = list name

“items on the menu” = the rest of your sentence

Format: print _(list name)__[’ ‘]

This line of code returns information associated with the item in the line of code

Delete things from a menu:

del__lsit name__[key_name]

Add something new!

dict_name[key] = new_value

Remove some stuff!

listname.remove(”the thing you want to remove”)

Example: add flowers to a grocery list:

inventory[’flowers’] = [’rice’, ‘apples’, ‘lettuce’]

inventory = the name of the dictionary

[’flowers’] = what you want to add to your dictionary

[’rice’, ‘apples’, ‘lettuce’] = things already on the list

Sort the List:

inventory[’grocery’].sort( )

inventory = dictionary name

[’grocery’] = list name

.sort( ) = type as is! Remember, we want to sort this list!

Now, using the example from above, remove “apples” from the grocery list:

inventory[’grocery’].remove(’apples’)

inventory = dictionary name

[’grocery’] = list name

.remove = the action you want to perform on your list

(’apples’) = the thing you want to remove from your list

Add a number value to “rice” from above example:

inventory[’rice’] = inventory[’rice’] + 5

inventory = dictionary name

[’rice’] = thing you want to add value to

+ 5 = number you want to associate with [’rice’]

Looping

Definition! Looping- perform different actions depending on the item, known as “looping through”

Format for “Looping Through”

for item in listname

String Looping

string = lists

characters elements

For a “Loop Through” Example:

“Looping through the prices dictionary” is represented with this line of code:

for __the thing in your list, ex. food_______ in __dictionary name____

*Remember: for lists, use standard brackets [ ]. The items do not have to be in quotes.*

To create a sum list, do the following:

def sum(numbers): *This is a defined function, named sum, with

parameter of a number. *

total = 0 Since we haven’t added anything yet, we need to set

the total to 0.

for number in numbers: Create a “loop through” for your category that’s being

added, and don’t forget the :

total + = number We are adding our numbers to our total, which is 0

right now.

return total Give us the total!

print sum(n) Final result!

Note: *I found that I was still having trouble knowing what to name the blanks in the “loop through” formats. It seems like the rules change each time. By “name the blanks,” I am referring to which element of the code to use. Based on the format presented in the lessons, it seemed like the format was: for the thing in your list, for example food in dictionary or list name. However, some of the examples reversed this order, while others confirmed this format. This source (https://www.dataquest.io/blog/python-for-loop-tutorial/) seemed to confirm my format, so I proceeded with this knowledge base. *

List Accessing

Definition! List accessing- reming items from lists.

Removing items from lists:

n.pop(index) removes item at index from list, returns to you

n.remove(item) removes the item itself

del(n[1]) removes item at index level, but does not return it

follow these commands with: print n, where n is the list name.

Example:

If needing to write a function, structure it as so: def string_function( )

Remember: “string concatenation” is just putting two elements together, like so: return n + ‘hello’

Python Learning Reflection:

I selected Python as my learning technology, because I wanted to strengthen my knowledge of coding. I do not have any prior experience with coding, other than a few basic programs for digitizing, such as ffmpeg. While I learned many basic skills for python, I also realized several things about my learning process:

1. I learn best when I interact with the material in a variety of ways. Hearing someone explain a concept, coupled with practice exercise I can complete on my own, is most helpful for my learning experience.

2. Since my knowledge of coding is basic, I needed even more step-by-step explanations for Python. For example, I would have benefitted from a basic coding structure breakdown at the beginning of each new concept. Since I did not come into this project knowing the basics, I tried to do a breakdown of each code, but had to rely on my notes or outside sources for explanations for why the code was structured the way it was.

3. I overestimated how much I could reasonably accomplish on codeacademy.com. While the website proclaimed the module would take 25 hours, I found that I spent much longer with each lesson, and therefore did not complete the codeacademy.com “Learn Python” course. Despite this, I gained a strong foundation for Python.

4. There were moments when I became frustrated at my lack of knowledge. However, I had to remind myself several times that it is okay for me to be a beginner, and as long as I was working to expand my knowledge of this concept, mistakes and frustrations were a natural part of learning something new.

0 notes

Text

PyJWT or a Flask Extension?

In our last blog post on JWT, we saw code examples based on the PyJWT library. A quick Google search also revealed a couple of Flask-specific libraries. What do we use?

We can implement the functionality with PyJWT. It will allow us fine-grained control. We would be able to customize every aspect of how the authentication process works. On the other hand, if we use Flask extensions, we would need to do less since these libraries or extensions already provide some sort of integrations with Flask itself. Also personally, I tend to choose my framework-specific libraries for a task. They reduce the number of tasks required to get things going.

In this blog post, we would be using the Flask-JWT package.

Getting Started

Before we can begin, we have to install the package using pip.

pip install Flask-JWT

We also need an API endpoint that we want to secure. We can refer to the initial code we wrote for our HTTP Auth tutorial.

from flask import Flask

from flask_restful import Resource, Api

app = Flask(__name__)

api = Api(app, prefix=”/api/v1")

class PrivateResource(Resource):

def get(self):

return {“meaning_of_life”: 42}

api.add_resource(PrivateResource, ‘/private’)

if __name__ == ‘__main__’:

app.run(debug=True)

Now we work on securing it.

Flask JWT Conventions

Flask JWT has the following convention:

There need to be two functions — one for authenticating the user, this would be quite similar to the verify the function. The second function’s job is to identify the user from a token. Let’s call this function identity.

The authentication function must return an object instance that has an attribute named id.

To secure an endpoint, we use the @jwt_required decorator.

An API endpoint is set up at /auth that accepts username and password via JSON payload and returns access_token which is the JSON Web Token we can use.

We must pass the token as part of the Authorization header, like — JWT <token>.

Authentication and Identity

First, let’s write the function that will authenticate the user. The function will take in username and password and return an object instance that has the attribute. In general, we would use the database and the id would be the user id. But for this example, we would just create an object with an id of our choice.

USER_DATA = {

“masnun”: “abc123”

}

class User(object):

def __init__(self, id):

self.id = id

def __str__(self):

return “User(id=’%s’)” % self.id

def verify(username, password):

if not (username and password):

return False

if USER_DATA.get(username) == password:

return User(id=123)

We are storing the user details in a dictionary-like before. We have created a user class with id attributes so we can fulfill the requirement of having an id attribute. In our function, we compare the username and password and if it matches, we return an instance with the being 123. We will use this function to verify user logins.

Next, we need the identity function that will give us user details for a logged-in user.

def identity(payload):

user_id = payload[‘identity’]

return {“user_id”: user_id}

The identity the function will receive the decoded JWT.

An example would be like:

{‘exp’: 1494589408, ‘iat’: 1494589108, ‘nbf’: 1494589108, ‘identity’: 123}

Note the identity key in the dictionary. It’s the value we set in the id attribute of the object returned from the verify function. We should load the user details based on this value. But since we are not using the database, we are just constructing a simple dictionary with the user id.

Securing Endpoint

Now that we have a function to authenticate and another function to identify the user, we can start integrating Flask JWT with our REST API. First the imports:

from flask_jwt import JWT, jwt_required

Then we construct the jwt instance:

jwt = JWT(app, verify, identity)

We pass the flask app instance, the authentication function and the identity function to the JWT class.

Then in the resource, we use the @jwt_required decorator to enforce authentication.

class PrivateResource(Resource):

@jwt_required()

def get(self):

return {“meaning_of_life”: 42}

Please note the jwt_required decorator takes a parameter (realm) which has a default value of None. Since it takes the parameter, we must use the parentheses to call the function first — @jwt_required() and not just @jwt_required. If this doesn’t make sense right away, don’t worry, please do some study on how decorators work in Python and it will come to you.

Here’s the full code:

from flask import Flask

from flask_restful import Resource, Api

from flask_jwt import JWT, jwt_required

app = Flask(__name__)

app.config[‘SECRET_KEY’] = ‘super-secret’

api = Api(app, prefix=”/api/v1")

USER_DATA = {

“masnun”: “abc123”

}

class User(object):

def __init__(self, id):

self.id = id

def __str__(self):

return “User(id=’%s’)” % self.id

def verify(username, password):

if not (username and password):

return False

if USER_DATA.get(username) == password:

return User(id=123)

def identity(payload):

user_id = payload[‘identity’]

return {“user_id”: user_id}

jwt = JWT(app, verify, identity)

class PrivateResource(Resource):

@jwt_required()

def get(self):

return {“meaning_of_life”: 42}

api.add_resource(PrivateResource, ‘/private’)

if __name__ == ‘__main__’:

app.run(debug=True)

Let’s try it out.

Trying It Out

Run the app and try to access the secured resource:

$ curl -X GET http://localhost:5000/api/v1/private

{

“description”: “Request does not contain an access token”,

“error”: “Authorization Required”,

“status_code”: 401

}

Makes sense. The endpoint now requires an authorization token. But we don’t have one, yet!

Let’s get one — we must send a POST request to /auth with a JSON payload containing username and password. Please note, the API prefix is not used, that is the URL for the auth endpoint is not /api/v1/auth. But it is just /auth.

$ curl -H “Content-Type: application/json” -X POST -d ‘{“username”:”masnun”,”password”:”abc123"}’ http://localhost:5000/auth

{

“access_token”: “eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJleHAiOjE0OTQ1OTE4MjcsIml

hdCI6MTQ5NDU5MTUyNywibmJmIjoxNDk0NTkxNTI3LCJpZGVudGl0eSI6MTIzfQ.q0p02opL0OxL7EGD7

wiLbXbdfP8xQ7rXf7–3Iggqdi4"

}

Yes, we got it. Now let’s use it to access the resource.

curl -X GET http://localhost:5000/api/v1/private -H “Authorization: JWT eyJ0eXAiOi

JKV1QiLCJhbGciOiJIUzI1NiJ9.eyJleHAiOjE0OTQ1OTE4MjcsImlhdCI6MTQ5NDU5MTUyNywibmJmIjo

xNDk0NTkxNTI3LCJpZGVudGl0eSI6MTIzfQ.q0p02opL0OxL7EGD7wiLbXbdfP8xQ7rXf7–3Iggqdi4"

{

“meaning_of_life”: 42

}

Yes, it worked! Now our JWT authentication is working.

Getting the Authenticated User

Once our JWT authentication is functional, we can get the currently authenticated user by using the current_identity object.

Let’s add the import:

from flask_jwt import JWT, jwt_required, current_identity

And then let’s update our resource to return the logged in user identity.

class PrivateResource(Resource):

@jwt_required()

def get(self):

return dict(current_identity)

The current_identity the object is a LocalProxy instance that can’t be directly JSON serialized. But if we pass it to a dict() call, we can get a dictionary representation.

Now let’s try it out:

$ curl -X GET http://localhost:5000/api/v1/private -H “Authorization: JWT eyJ0eXAi

OiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJleHAiOjE0OTQ1OTE4MjcsImlhdCI6MTQ5NDU5MTUyNywibmJmI

joxNDk0NTkxNTI3LCJpZGVudGl0eSI6MTIzfQ.q0p02opL0OxL7EGD7wiLbXbdfP8xQ7rXf7–3Iggqdi4"

{

“user_id”: 123

}

As we can see the current_identity the object returns the exact same data our identity the function returns because Flask JWT uses that function to load the user identity.

Do share your feedback with us. We hope you enjoyed this post.

To know more about our services please visit: https://www.loginworks.com/web-scraping-services

0 notes

Text

#100daysofcode

Day 5/100

[2/26/2019]

Soooo I missed a day. According to the #100daysofcode github FAQs missing a day is fine but missing two days is a no-no (as in I might have to start again if I do that). I was just super busy and didn’t really have time to do any coding. But no excuses. What I can do is just add one more day to the 100 for every day I miss.

I did have time to do some stuff today that was directly relevant to my project so I count it as productive even though it wasn’t really much. I mostly did cleaning and taking care of my niece and reading.

However, I made the code for creating tables in my database on Postgres

I haven’t uploaded it yet on the actual databases yet because I wanted to look over the necessary data types and constraints I thought would make sense for each table and I thought it was a nice start.

I breezed through the files section for Python because the methods were actually very straightforward and I might be able to make some sort of code to write stuff to files for my project as soon as tomorrow!

I started learning about dictionaries today and I don’t think they’re very relevant to my project but I did find them fascinating. Dictionaries, like strings, lists, and tuples, are a collection of values of different data types but each of these values have an associated key with it. Dictionaries are essentially Python’s mapping type. Dictionaries, and tuples, are pretty new because I haven’t really worked with them in the past. The functions they can do I’ve had to explicitly program before because my previous programming languages have been low level.

Honestly, I can learn how to do GUIs on Python as soon as tomorrow so that’ll be fun!

0 notes

Text

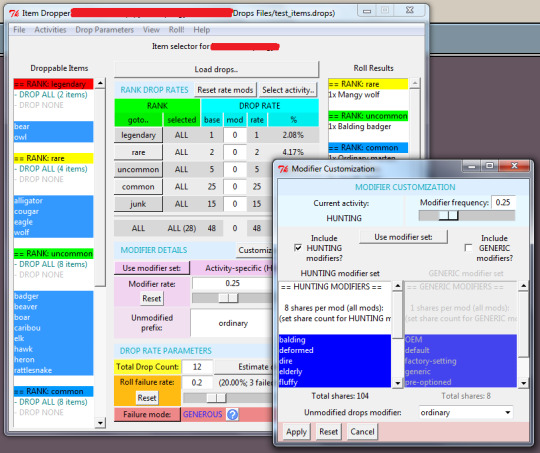

025 // Distractions III: External Random Item Drop Generator

So you may be wondering what happened to last week's post, and the answer is that I never wrote it because I was too busy trying to finish this side project I have been working on. And I finished it!

I think I might have offered to explain it a while ago, so I will do that now, since I have very little new art to offer at the moment (it is probably not why you are here but please bear with me).

This one is a bit long and is all about code..

For the last few weeks, I have been working on an external random item dropper for a couple of friends who want to start their own thing, and doing that required me to either construct an entire user interface arrangement in Pygame (which I have done twice already and have my own modules for but am not really super-de-duper into) or to learn at least enough Tkinter to make something I am not totally ashamed of (which is a lot of learning because I know-- which is to say, knew-- almost exactly nothing about Tkinter, not counting some stuff with Listboxes.

I opted for the latter and, in truth, it was pretty easy to learn. It was a bit frustrating at times because there are problems with Tkinter's 'widgets' (graphic interface objects) that can occur and lock up the software in a way that Tkinter considers normal and not an error and why would it tell you about it? For instance, if you try to use a "grid" arrangement in the same Frame (an object Tkinter uses to create layers of widget organization) as a "pack" arrangement, it will "Tkinter will happily spend the rest of your lifetime trying to negotiate a solution that both managers are happy with," or how replacing Variable objects with new ones that have the same name sometimes causes the whole affair to silently stop working and leave you clicking a button to no effect, wondering what is going on and why.

Problems which I overcame! Quickly and with some difficulty! Most of my time was spent on the interface, actually, since it was the part I knew the least about. The design was pretty easy (or it was easy in the extent that I produced an interface experience that I, personally, found satisfying, and which failed to produce a/any complaint(s) from the people for whom I made it) but the actual construction took a lot of learning when it came to displaying and updating the right variables in the right places and when. There are many values shared between user input boxes (Tk.Entry), where the user enters various bits of data, lists (Tk.Listbox), which have selectable entries and a lot of straight-forward appearance parameters, labels (Tk.Label), which display values either as static text or from various types of Variable, and, of course, the item data sheet that the user provides (read using ConfigParser from an simple external text document I can tell you how to make, and internally, as a chaotic dictionary of lists and Variables and strings and numbers). Incidentally, I ended up extending (adding my own functions and attributes to) a few of Tkinter's basic classes, and this part of the project was actually one of the most interesting. A great many parts of the original module have been deliberately constructed in a way that simplifies that kind of extension, and while I had to go outside of that on an occasion or two, it was absolutely a worthwhile lesson!

The Variables were the most perplexing part, because Tkinter is the least forthright about them and because they are more flexible than they let on. These variables can be equipped with callback functions that allow them to alter their contents, or the contents of other widgets, or do some other crazy third thing, whenever they are altered, or even just whenever something looks at their values. That part was easy and extremely useful once I got the hang of it! They can also be given specific names by which other functions and widgets may identify them, and while I found this quite useful as well, its lack of stability was somewhat less endearing since Tkinter will not tolerate two variables with the same name (a legitimate and preventable issue!) and will not necessarily tell you when this has happened or where (I am less okay with this).

Another interesting thing about Tkinter is that it offers multiple obvious ways of accomplishing the same thing, which is a bit of a problem for "The Zen of Python," a sort of mantra that a lot of people in the community take quite seriously. As an example, you may almost always alter the configuration of a widget in at least two ways: - Use Widget.config(some_attr = value) and change one or several attributes at once using arguments, or - Setting them using attribute names as keys, like so: Widget['some_attr'] = value. - There are other ways too but none spring to mind.

Also, widgets can be stored in attributes, but you can also call them up using their names: a widget created in the line

myObject.my_widj = Label(master=tk_root, text='Yo, babe(l), I am a Label!', name='lbl_annoyinglabel')

..can be accessed directly either by way of some object attribute reference:

myObject.my_widj.config(text = "Hey, id'jit, I'm a widget!")

..which is absolutely normal in Python, or by calling it by name from its master object:

tk_root.nametowidget('lbl_annoyinglabel')['text'] = 'Please stop talking.'.

Naturally, you would probably want to use the first method as often as possible, because it involves fewer operations and would probably be easier to maintain. But the second way, more elaborate though it may be, lets you save on assigning attributes by tracking widgets using Tcl's internal structure. (n.b.: I cannot say I have ever found myself running out of room for attributes in a namespace but I am also a complete amateur as a programmer so please bear with me. <3 )

Interestingly, actual structure of the input sheets was the next-most time-consuming part. Trying to find a data format that would be easily comprehensible by anyone who picked it up (probably only going to be two people, plus myself, if even that many) and which also met with ConfigParser's profoundly elusive approval was a somewhat complex task. It turned out to be exactly as hard as I thought it would be, at least, and there were no surprises here. You can see a blank template of the input sheet here!

The actual drop generator code-- the element which takes the user-supplied data and returns a random selection of items from it, according to their initial and supplemental parameters; the single element that the entire program is built to support-- only took an hour to complete, actually. I did it last and by then, all of the parameters and variables and their names and locations had become obvious, and since it was a pretty plain function to start with, it was done quickly. It was interesting to note how much more effort it was to pack this simple function up into a pretty interface than it took to build the core element itself. I suppose we see this everywhere: a car is just self-propelled chairs; a human is just a gangly, leaky chariot for a suite of genitalia; this software is just 'arbitrary decisions' packed in a pretty box. A very pretty box that I will no doubt look back on in two years and wonder what I was thinking, I hope!~ <3

Anyway I completed it and delivered it and it is my first free-standing piece of software that some other person might actually use for their purposes, and that is a sense of accomplishment I have not felt since the WSDOT departmental library people told me they wanted to include my undergraduate thesis in their stacks.

As an aside, I had considered making a companion tool to go with the drop generator that simplified drop sheet creation. It would not be over-hard to make: all it is liable to be is another Listbox with a text entry field attached, a button or two to add and remove entries, a few other configurables, and a ConfigParser set up to save it all out, but I feel as though the drop sheet format-- sensitive as it is to typographical problems and formatting issues-- is probably easy enough to use. Also there are two people using it and I am in touch with one of them almost every day. Still, food for future thought!

Anyway, back to my game, now! It has been a long time and I am ready to face it again with fresh eyes and fewer .. days.. to live.. I guess! Hm..

See you next time! :y

#development#python#tkinter#distractions#code solutions#random drops#ConfigParser#inform#completed#longpost

3 notes

·

View notes

Text

Advent of Code 2020: Reflection on Days 8-14

A really exciting week, with a good variety of challenges and relative difficulties. Something tells me that this year, being one where people are waking up later and staying at home all day, the problems have been specifically adapted to be more engaging and interesting to those of us working from home. Now that we've run the gamut of traditional AoC/competitve-programming challenges, I'm excited to see what the last 10 days have in store!

First things first, I have started posting my solutions to GitHub. I hope you find them useful, or at least not too nauseating to look at.

Day 8: To me, this is the quintessential AoC problem: you have a sequence of code-like instructions, along with some metadata the programmer has to keep track of, and there's some minor snit with the (usually non-deterministic) execution you have to identify. Some people in the subreddit feared this problem, thinking it a harbinger of Intcode 2.0. (Just look at that first line... somebody wasn't happy.)

Effectively, I got my struggles with this kind of problem out of the way several years ago: the first couple days of Intcode were my How I Learned to Stop Worrying and Love The While Loop, so this problem was a breeze. It also helps that I've been living and breathing assembly instructions these past few weeks, owing to a course project. I truly must learn, though, to start these problems after I finish my morning coffee, lest I wonder why my code was never executing the "jump" instruction...

Luckily, from here on out, there will be no more coffee-free mornings for me! Part of my partner's Christmas present this year was a proper coffee setup, so as to liberate them from the clutches of instant coffee. I'm not a coffee snob – or, at least, that's what I tell myself – but I was one more half-undrinkable cup of instant coffee away from madness.

Day 9: Bright-eyed, bushy-tailed, and full of fresh-ground and French-pressed coffee, I tackled today's problem on the sofa, between bites of a toasted homemade bagel.

This is a competitive programmer's problem. Or, at least, it would have been, if the dataset was a few orders of magnitude bigger. As of writing, every problem thus far has had even the most naïve solution, so long as it did not contain some massive bottleneck to performance, run in under a second. At first, I complained about this to my roommate, as I felt that the problem setters were being too lenient to solutions without any significant forethought or insight. But, after some thinking, I've changed my tune. Not everything in competitive programming[1] has to be punitive of imperfections in order to be enjoyable. The challenges so far have been fun and interesting, and getting the right answer is just as satisfying if you get it first try or fiftieth.

First off, if I really find myself languishing from boring data, I can always try to make the day more challenging by trying it in an unfamiliar language, or by microprofiling my code and trying to make it as efficient as possible. For example, I'm interested in finding a deterministic, graph theory-based solution to Day 7, such that I don't just search every kind of bag to see which kind leads to the target (i.e., brute-forcing). Maybe I'll give it a shot on the weekend, once MIPS and MARS is just a distant memory. A distant, horrible memory.

Second, even I – a grizzled, if not decorated, competitive and professional programming veteran – have been learning new concepts and facts about my own languages from these easy days. For example, did you know that set membership requests run in O(1) time in Python? That's crazy fast! And here I was, making dictionaries with values like {'a': True} just to check for visitation.

Part 1 was pretty pish-posh. Sure, in worst-case it ran in O(n^2), but when you have a constant search factor of 25 (and not, say, 10^25), that's really not a big deal.

Part 2 is what made me think that today's problem was made for competitive programmers. Whenever a problem mentions sums of contiguous subsets, my brain goes straight for the prefix sum array. They're dead simple to implement: I don't think I've so much as thought about PSAs in years, and I was able to throw mine together without blinking. I did have to use Google to jog my memory as to how to query for non-head values (i.e., looking at running sums not starting from index 0), but the fact that I knew that they could be queried that way at all probably saved me a lot of dev time. Overall complexity was O(nlogn) or thereabouts, and I'm sure that I could have done some strange dynamic programming limbo to determine the answer while I was constructing the PSA, but this is fine. I get the satisfaction of knowing to use a purpose-built data structure (the PSA), and of knowing that my solution probably runs a bit faster than the ultra-naive O(n^3)-type solutions that novice programmers might have come up with, even if both would dispatch the input quickly.

Faffing around on the AoC subreddit between classes, I found a lovely image that I think is going to occupy space in my head for a while. It's certainly easy to get stuck in the mindset of the first diagram, and it's important to centre myself and realize that the second is closer to reality.

Day 10: FML. Path-like problems like this are my bread and butter. Part 1 was easy enough: I found the key insight, that the values had to monotonically increase and thus the list ought to be sorted, pretty quickly, and the only implementation trick was keeping track of the different deltas.

Part 2, on the other hand, finally caught me on my Day 9 hubris: the naïve DFS, after ten minutes and chewing through all of my early-2014 MacBook's RAM, I still didn't have an answer. I tried being creative with optimizing call times; I considered using an adjacency matrix instead of a dictionary-based lookup; and I even considered switching to a recursion-first language like Haskell to boost performance. Ultimately, I stumbled onto the path of

spoilermemoization using `@functools.cache`

,

which frankly should have been my first bet. After some stupid typo problems (like, ahem, commenting out the function decorator), I was slightly embarrassed by just how instantly things ran after that.

As we enter the double-digits, my faith in the problem-setters has been duly restored: just a measly 108-line input was enough to trigger a Heat Death of the Universe execution time without some intelligent intervention. Well done, team!

Day 11: Good ol' Game of Life-style state transition problem. As per usual, I've sweated this type of problem out before, so for the actual implementation, I decided to go for Good Code as the real challenge. I ended up developing – and then refactoring – a single, pure state-transition function, which took in a current state, a neighbour-counting function, and a tolerance for the one element that changes between Parts 1 and 2 (you'll see for yourself), then outputting a tuple of the grid, and whether or not it had changed in the transition. As a result, my method code for Parts 1 and 2 ended up being identical, save for replacing some of the inputs to that state function.

Despite my roommate's protestations, I'm quite proud of my neighbour-counting functions. Sure, one of them uses a next(filter()) shorthand[2] – and both make heavy (ab)use of Python's new walrus operator, but they do a pretty good job making it obvious exactly what conditions they're looking for, while also taking full advantage of logical short-circuiting for conciseness.

Part 2 spoilers My Part 2 neighbour counter was largely inspired by my summertime fascination with constraint-satisfaction problems such as the [N-Queens problem](https://stackoverflow.com/questions/29795516/solving-n-queens-using-python-constraint-resolver). Since I realized that "looking for a seat" in the 8 semi-orthogonal directions was effectively equivalent to a queen's move, I knew that what I was really looking for was a delta value – how far in some [Manhattan-distance](https://www.wikiwand.com/en/Taxicab_geometry) direction I had to travel to find a non-aisle cell. If such a number didn't exist, I knew not to bother looking in that direction.

My simulations, whether due to poor algorithmic design or just on account of it being Python, ran a tad slowly. On the full input, Part 1 runs in about 4 seconds, and Part 2 takes a whopping 17 seconds to run fully. I'll be sure to check the subreddit in the coming hours for the beautiful, linear-algebraic or something-or-other solution that runs in constant time. A programmer I have been for many years; a computer scientist I have yet to become.

Day 12: Not terribly much to say on this one. Only that, if you're going to solve problems, it may be beneficial to read the instructions, lest

spoilers You cause your ship to turn clockwise by 90º... 90 times.

The second part was a fresh take on a relatively tired instruction-sequence problem. The worst part was the feeling of dread I felt while solving, knowing that my roommate – who consistently solves the problems at midnight, whereas I solve them in the morning – was going to awaken another Eldritch beast of Numpy and linear algebra for at least Part 2. Eugh.

Day 13: This was not my problem. I'm going to wrap my entire discussion of the day in spoilers, since I heavily recommend you try to at least stare at this problem for a while before looking at solutions.