#Python Data Cleaning


Text

How to Drop a Column in Python: Simplifying Data Manipulation

Dive into our latest post on 'Drop Column Python' and master the art of efficiently removing DataFrame columns in Python! Perfect for data analysts and Python enthusiasts. #PythonDataFrame #DataCleaning #PandasTutorial 🐍🔍

Hello, Python enthusiasts and data analysts! Today, we’re tackling a vital topic in data manipulation using Python – how to effectively use the Drop Column Python method. Whether you’re a seasoned programmer or just starting out, understanding this technique is crucial in data preprocessing and analysis. In this post, we’ll delve into the practical use of the drop() function, specifically…
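The preview cuts off before any code, so here is a minimal sketch of the kind of call the post is about: pandas' `DataFrame.drop` with the `columns` argument. The DataFrame and its column names are invented for illustration and are not taken from the original post.

```python
import pandas as pd

# Hypothetical example data; the column names are made up for illustration.
df = pd.DataFrame({
    "name": ["Ada", "Grace", "Linus"],
    "age": [36, 45, 28],
    "temp_id": [101, 102, 103],
})

# Drop one column. drop() returns a new DataFrame, so df itself is unchanged.
without_id = df.drop(columns=["temp_id"])

# Drop several columns at once; errors="ignore" skips names that do not exist.
names_only = df.drop(columns=["age", "temp_id", "not_a_column"], errors="ignore")

print(list(without_id.columns))
print(list(names_only.columns))
```

Reassigning the result (rather than mutating in place) keeps the original frame around, which is handy while you are still exploring the data.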


#DataFrame Column Removal#how to delete a column from dataframe in python#how to drop column in python#how to remove a column from a dataframe in python#Pandas Drop Column#pandas how to remove a column#Python Data Cleaning#python pandas how to delete a column

0 notes

Text

Now this may not seem like a massive change in format, but boy howdy do I feel proud of myself for writing the functions to automate this ehehehehehe (unfortunately for me ao3 ship stat op used a different formatting for the pre-2020 tables, so I'll have to write another function to sort those ones out too ToT)
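For anyone curious what "formatting from a to b" can look like, here is a rough sketch using only the standard library. The raw layout (tab-separated rank, ship, fandom, works) and every field name are assumptions made up for this example; the actual source tables and the author's functions are not shown in the post.

```python
import csv
import io

# Hypothetical raw text, as it might look after copying a ranking table.
RAW = """\
1\tCharacter A/Character B\tFandom X\t1,234
2\tCharacter C/Character D\tFandom Y\t987
"""

def parse_rows(raw_text):
    """Turn tab-separated lines into dictionaries with typed fields."""
    rows = []
    for line in raw_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        rank, ship, fandom, works = line.split("\t")
        rows.append({
            "rank": int(rank),
            "ship": ship.strip(),
            "fandom": fandom.strip(),
            "works": int(works.replace(",", "")),  # "1,234" -> 1234
        })
    return rows

def to_csv(rows):
    """Serialize the parsed rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["rank", "ship", "fandom", "works"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

rows = parse_rows(RAW)
print(to_csv(rows))
```

A differently formatted year would get its own `parse_rows` variant, while `to_csv` stays the same.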

#coding#ship stats#python#csv#ao3 ship stats#I'm gonna visualise their annual ship rankings data!#(and likely the tumblr fandom ones too)#but first I gotta clean the source data & build a data base#run all the raw data sets through my code#and then I will have a huge updated more uniform and complete data set#which I can then learn how to visualise#for data portfolio purposes#translation for non-coders: wow code is fun but it looks unimpressive if you don't know#I basically took the base text I copied off the ao3 ship rankings posts on ao3#and wrote a bunch of code that automatically formats it from a to b#into a format that's easier to work with in my code#to be able to put it into a proper database later

9 notes


Text

How to Analyze Data Effectively – A Complete Step-by-Step Guide

Learn how to analyze data in a structured, insightful way. From data cleaning to visualization, discover tools, techniques, and real-world examples. How to Analyze Data Effectively – A Complete Step-by-Step Guide Data analysis is the cornerstone of decision-making in the modern world. Whether in business, science, healthcare, education, or government, data informs strategies, identifies trends,…

#business intelligence#data analysis#data cleaning#data tools#data visualization#Excel#exploratory analysis#how to analyze data#predictive analysis#Python#Tableau

0 notes

Text

Elevate Your Data Science Skills with Data Science Course in Pune

Success in the rapidly evolving field of data science hinges on one key factor: quality data. Before diving into complex machine learning algorithms and detailed analysis, it is important to start with a clean data set. At The Cyber Success Institute, our Data Science Course in Pune emphasizes mastering these core skills, equipping you with the expertise to handle data efficiently and drive impactful results. The basic data cleaning steps, known as data wrangling and preprocessing, turn raw data into a form that supports accurate analysis and prediction, and the course is designed to help you hone them.

Transform Your Career with The Best Data Science Course at Cyber Success

Data wrangling, or data munging, is the process of transforming raw data from its often messy origin into a more usable form. This process involves preparing, organizing, and enhancing data to make it more valuable for analysis and modeling. Preprocessing, which is narrower in scope, focuses primarily on preparing data for machine learning models: normalizing, transforming, and scaling it to improve performance.

At the Cyber Success Institute, we understand that strong data wrangling is the cornerstone of any data science project. Our Data Science Course in Pune offers hands-on training in data wrangling and pre-processing, enabling you to effectively transform raw data into actionable insights.

Discover Data Cleaning Excellence with The Best Data Science Course at Cyber Success

The data management process involves preparing, organizing, and enhancing the data to make it more valuable for analysis and modeling. Preprocessing, which is narrower in scope, focuses on preparing data for machine learning models. Data quality directly affects the accuracy and reliability of those models: well-prepared data ensures that insights are accurate and useful, helps to identify hidden patterns, and saves time during model development and subsequent analysis. At Cyber Success Institute, we focus on the importance of data quality, so we prepare you to ensure that your data is always up to date. Our Data Science Course in Pune offers hands-on training in data wrangling and pre-processing, enabling you to effectively transform raw data into actionable insights.

Basic Steps in Data Management and Preprocessing

Data cleaning: This first and most important step includes handling missing values, resolving inconsistencies, and removing redundant data points. Effective data cleaning ensures that the dataset is reliable, accurate, and ready for analysis.

Data conversion: Once cleaned, the data must be converted to a usable form. This may involve converting categorical variables into numeric ones using techniques such as one-hot encoding or label encoding. Normalization and standardization are used to ensure that all features contribute equally to the model, with no feature dominating due to scale differences. This prepares you to handle a variety of data environments.

Feature Engineering: Feature engineering is the process of creating new features from existing data to better capture underlying patterns. This may involve forming interaction terms, binning attributes, or decomposing timestamps into more meaningful components such as "day of the week" or "hour of the day".

Data reduction: Sometimes data sets have too many features or dimensions, which can lead to overfitting or high computational costs. Data reduction techniques such as principal component analysis (PCA), feature selection, and other dimensionality reduction methods are essential to simplify data sets while preserving valuable information. Our Data Science Classes in Pune with Placement at the Cyber Success Institute provide valuable experience in data reduction techniques to help you manage large data sets effectively.

Data integration and consolidation: Often, data from multiple sources must be combined to obtain a complete picture. Data integration involves combining data from databases or files into a single data set. In our Data Science Course in Pune, you will learn how to combine different types of data to improve the relevance and depth of your analysis.
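The first three steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the records, field names, and the choice of mean imputation and min-max scaling are invented for the example and are not taken from the course material. Data reduction and integration are left out to keep it short.

```python
from datetime import datetime

# Hypothetical records; field names and values are invented for illustration.
records = [
    {"city": "Pune", "income": 50000.0, "signup": "2024-01-15 09:30"},
    {"city": "Mumbai", "income": None, "signup": "2024-01-16 18:05"},
    {"city": "Pune", "income": 50000.0, "signup": "2024-01-15 09:30"},  # duplicate
    {"city": "Delhi", "income": 80000.0, "signup": "2024-01-20 12:00"},
]

# 1. Cleaning: drop exact duplicates, then fill missing income with the mean.
seen, cleaned = set(), []
for rec in records:
    key = tuple(sorted(rec.items(), key=lambda kv: kv[0]))
    if key not in seen:
        seen.add(key)
        cleaned.append(dict(rec))

known = [r["income"] for r in cleaned if r["income"] is not None]
mean_income = sum(known) / len(known)
for r in cleaned:
    if r["income"] is None:
        r["income"] = mean_income

# 2. Conversion: one-hot encode the city and min-max scale income to [0, 1].
cities = sorted({r["city"] for r in cleaned})
lo = min(r["income"] for r in cleaned)
hi = max(r["income"] for r in cleaned)
for r in cleaned:
    for c in cities:
        r[f"city_{c}"] = 1 if r["city"] == c else 0
    r["income_scaled"] = (r["income"] - lo) / (hi - lo)

# 3. Feature engineering: split the timestamp into weekday and hour.
for r in cleaned:
    ts = datetime.strptime(r["signup"], "%Y-%m-%d %H:%M")
    r["signup_weekday"] = ts.weekday()  # 0 = Monday ... 6 = Sunday
    r["signup_hour"] = ts.hour

for r in cleaned:
    print(r)
```

In practice a library such as pandas or scikit-learn would handle most of this, but spelling the steps out makes it clear what each one does to the data.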

Why Choose Cyber Success Institute for Data Science Course in Pune?

The Cyber Success Institute is the best IT training institute in Pune, India, offering the best data science course in Pune with Placement assistance, designed to give you a deep understanding of data science from data collection to preprocessing to advanced machine learning. With hands-on experience, expert guidance and a curriculum that is up to date with the latest industry trends, you will be ready to become a data scientist.

Here are the highlights of the data science course at Cyber Success Institute, Pune:

Experienced Trainers: Our expert trainers bring a wealth of experience in the field of data science, including advanced degrees, industry certifications, strong backgrounds in data analytics, machine learning, and AI, and hands-on experience in real-world projects, so that students learn from practitioners who understand business needs.

Advanced Curriculum: Our Data Science Course in Pune is well structured to cover basic and advanced topics in data science, including Python programming, statistics, data visualization, machine learning, deep learning, natural language processing, and big data technology.

Free Aptitude Sessions: We believe that strong analytical and problem-solving skills are essential in data science. To support technical training, we offer free aptitude sessions that focus on developing logical reasoning, statistical analysis and critical thinking.

Weekly Mock Interview Sessions: To prepare you for the job, we conduct weekly mock interview sessions that simulate real-world interview situations. These sessions include technical quizzes on data science concepts, coding problems, and behavioral quizzes to build student confidence and improve interview performance.

Hands-on Learning: Our Data Science Course in Pune emphasizes practical, hands-on learning. You will work on real-world projects, data manipulation, machine learning model development, and applications using tools such as Python and Tableau. This approach ensures a deep and practical understanding of data science, preparing you for real job challenges.

100% Placement Assistance: We provide comprehensive placement assistance to help you start your career in data science. This includes writing a resume, preparing for an interview, and connecting with potential employers.

At Cyber Success, our Data Science Course in Pune ensures that students receive a well-rounded education that combines theoretical knowledge with practical experience. We are committed to helping our students become skilled, confident and career-ready data scientists.

Conclusion:

Data management and preprocessing are the unsung heroes of data science, transforming raw data into powerful insights that shape the future. At Cyber Success Institute, our Data Science Course in Pune will teach you the technical skills and empower you to lead the data revolution. With immersive, hands-on training, real-world projects, and mentorship from industry experts, we prepare you to harness data’s full potential and drive meaningful impact. Joining Cyber Success Institute is about becoming part of a community committed to excellence and innovation. Start your journey here, master the art of data science with our Data Science Course in Pune, and become a change-maker in this rapidly growing field. Elevate your career, lead with data, and let Cyber Success Institute be your launchpad to success. Your future in data science starts now!

Attend 2 free demo sessions!

To learn more about our course, visit https://www.cybersuccess.biz/data-science-course-in-pune/

Secure your place at https://www.cybersuccess.biz/contact-us/

📍 Visit us: Cyber Success, Asmani Plaza, 1248 A, opp. Cafe Goodluck, Pulachi Wadi, Deccan Gymkhana, Pune, Maharashtra 411004

📞 For more information, call: 9226913502, 9168665644, 7620686761.

PATH TO SUCCESS - CYBER SUCCESS 👍

#education#training#itinstitute#datascience#big data#data analytics#data cleaning techniques#deep learning#machine learning#ai ml development services#python programming#data science course in Pune#data science classes in pune#data science course with placement in pune#career#mongodb#statistics#data science training institute

0 notes

Text

A Beginner’s Guide to Data Cleaning Techniques

Data is the lifeblood of any modern organization. However, raw data is rarely ready for analysis. Before it can be used for insights, data must be cleaned, refined, and structured—a process known as data cleaning. This blog will explore essential data-cleaning techniques, why they are important, and how beginners can master them.

#data cleaning techniques#data cleansing#data scrubbing#business intelligence#Data Cleaning Challenges#Removing Duplicate Records#Handling Missing Data#Correcting Inconsistencies#Python#pandas#R#OpenRefine#Microsoft Excel#Tools for Data Cleaning#data cleaning steps#dplyr and tidyr#basic data cleaning features#Data validation

0 notes

Note

thou art employed via the r programming tongue....? mayhaps I hath been too hasteful in mine judgement...

waow clout for jobhaving on tumblr dot com? it's more likely than you think. . . .

#but yes i am employed in data analysis for public health research!#repo is mostly R (my beloved)#partly stata (my beloathed)#recently ive added some python (my useful for webscraping and string cleaning)#asks#anonymous

1 note


Text

I will say that Python libraries have some extreme variation in quality. "Data science" libraries like seaborn and sklearn are absolute dog shit nightmares that assume you are too stupid to understand anything. I did not have good experiences with PIL or Pillow. Matplotlib is too convoluted with the more complicated features, but at least the core stuff is clean and accessible. And numpy, pytorch, and Gym are just absolute masterpieces of clean and elegant design.

110 notes


Text

November 16th, 2024

Today as a song: METAHESH - Among the Starts

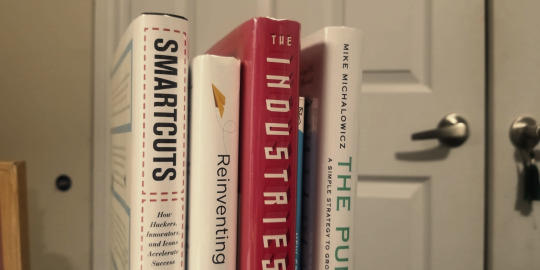

I'm really going to make sure to sit down and try and hit my goals today- especially since at least for the weekend I don't have much work to do so I can focus on studying. I went to the library yesterday and got a bunch of books (mostly about entrepreneurship and self-transformation) so I can hopefully improve my reading without having to rely on the screen so much.

To-do list:

Studying: 🦉Start on react portfolio 🦉Data Analysis Assignment Unit 3 🦉Data Analysis 5.1 🦉Type out more handwritten notes 🦉Python Coursera Final Project 🦉Python CS50 Lect 1 Projects

Self-care: 🕯️Clean my coffee bar 🕯️Scrapbook a bit 🕯️30 min workout

Reading: 📰The Industries of the Future ch. 1 📰The Pumpkin Plan ch. 1

Language: 🏛️Italian level 1 - H

#studyblr#studyspo#study blog#daily journal#study motivation#dark academia#to do list#light academia#coding#langauge learning#langblr#codeblr#becoming her#bunnies 60 day challenge#it girl self care#journaling#journal#spiritual journey

20 notes


Note

Hello, Kris! I think I might’ve already gotten the gist of it, but it’s been some time. What exactly IS Academia Mode? Are you still in school, or is this your actual job, and it just happens to be involved in the education system?

Many thanks!

hahah no worries!!! that is a good question 🤣😭😭🙏 for me, academia mode is currently finishing the 5th and final year of my doctoral program and includes (but is not limited to lol):

data collection, analysis, write-ups

writing python programs to support my data cleaning, data coding, stats, and data analysis/visualizations

applying for GRANT MONEYYYY

submitting abstract proposals to conferences (and applying for MORE GRANT MONEYYYY)

reporting research findings (writing journal article manuscripts, preparing conference slides)

writing my actual dissertation manuscript lol

supporting and instructing my research assistants

sharing my research with mainstream public audiences

writing my non-fiction book based on my ongoing dissertation research

teaching classes, grading papers, holding office hours, fielding emails, writing letters of recommendation for all sorts of students' fellowships/grad admissions/grant applications, teaching students how to strategize their personal statements, grant purpose letters, and other aspects of apps, etc.

peer-reviewing others' journal manuscripts, providing feedback to colleagues (blind review or not)

assisting with my advisor's research and textbook manuscripts (proofreading, copy-editing, internet sleuthing, finding more up-to-date citations, occasionally writing rough drafts)

writing chapters for edited volumes on various topics

READING. all the time. reading new literature and research articles constantly. ALL THE TIME. writing 1-pagers and mini-annotated bibs for future lit review use, etc.

WRITING. all the time. professional-speak, academic-speak, instructor-speak.

getting paid to travel to conferences to present my research (GRANT MONEYYYYYY)

by may 2025, i'll be a Ph.D.!!!!!! [screams]

academia mode! ✨🤣🤣🤣😭🤣💕 every day, i think about how lucky i am that i get paid to do what i do 🥹🥹🥹🥹🥹 hope you are having a magnificent day, and thank you for the ask!!

#basically my full-time job in academia is reading writing teaching reporting and sharing data and networking 🤣#therentyoupay ask#da-awesom-one#thank you for the ask!!#those of you who have been following me for 10+ years can you believe it 😭😭😭😭😭 back when lok first dropped i was still in college...#when i started writing jelsa i was working full-time and completing my master's program 😭😭😭😭#and now here we are! 🤣#friends friendly reminder to keep up with your hobbies it's so important for mental health and honestly i have found that carving out#time for fic has only resulted in improved writing for both academia AND for fic!creativity 🥹🥹🥹 even if improvement is not necessarily#always the goal... it is a happy bonus i have found!! 🙏🙏💕💕💕

22 notes


Text

What is the most awesome Microsoft product? Why?

The “most awesome” Microsoft product depends on your needs, but here are some top contenders and why they stand out:

Top Microsoft Products and Their Awesome Features

1. Microsoft Excel

Why? It’s the ultimate tool for data analysis, automation (with Power Query & VBA), and visualization (Power Pivot, PivotTables).

Game-changer feature: Excel’s Power Query and dynamic arrays revolutionized how users clean and analyze data.

2. Visual Studio Code (VS Code)

Why? A lightweight, free, and extensible code editor loved by developers.

Game-changer feature: Its extensions marketplace (e.g., GitHub Copilot, Docker, Python support) makes it indispensable for devs.

3. Windows Subsystem for Linux (WSL)

Why? Lets you run a full Linux kernel inside Windows—perfect for developers.

Game-changer feature: WSL 2 with GPU acceleration and Docker support bridges the gap between Windows and Linux.

4. Azure (Microsoft Cloud)

Why? A powerhouse for AI, cloud computing, and enterprise solutions.

Game-changer feature: Azure OpenAI Service (GPT-4 integration) and AI-driven analytics make it a leader in cloud tech.

5. Microsoft Power BI

Why? Dominates business intelligence with intuitive dashboards and AI insights.

Game-changer feature: Natural language Q&A lets users ask data questions in plain English.

Honorable Mentions:

GitHub (owned by Microsoft) – The #1 platform for developers.

Microsoft Teams – Revolutionized remote work with deep Office 365 integration.

Xbox Game Pass – Netflix-style gaming with cloud streaming.

Final Verdict?

If you’re a developer, VS Code or WSL is unbeatable. If you’re into data, Excel or Power BI wins. For cutting-edge cloud/AI, Azure is king.

What’s your favorite?

If you need any Microsoft products, such as Windows, Office, Visual Studio, or Server, you can get them from our online store, keyingo.com

8 notes


Text

I am remembering things about this generator. First off:

FinalRelationshipValue="{} {} (a {} {} {}), {} (a {} {}) and {} (a {}). One time, {} {} {}, who {} it. "

I'm remembering how silly strings look without their added data in them. Like, yep, that sure is a statement :) Such a valid sentence.

Also, with the disorders, I forgot that I researched statistics for things like "percentage of people missing an arm" and used that in the generator. Your guy has a 4 in 10,000 chance to be missing an arm, and a 28 in 1000 chance to have ADHD. Where did I get this data from? I don't remember. I googled something and found numbers and called it good enough I guess. Anyways there are only three disorders, those two and "Sleepy Bitch Disorder" Which is :\ well that's not a lot. But! I remember asking a few times for things to add, and no one submitted things, and those were just the three I added in to test the feature out. BTW your dragon could potentially have all disorders at once. It's not very probable but it could happen.

Also I absolutely had bias stuff in some of the results, such as for common pronouns and creatures. I have removed all those biases for v2.0.0 👍

Looking at the Hydra code and 😬 that's a lot. Like, it's not hard to understand, there's just a lot with it. Every time a head is added, it cuts the chance in half for the next head, and then the final head count needs personalities for each head. But not everything is multiplied either. Hydras are in v1.1.0 tho, and I never finished them 😔 In fact v2.0.0 might not include them, we'll see how that goes.
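For the curious, the odds described above could be rolled roughly like this. It's a guess at the shape of the logic, not the generator's actual code: the function names, the dictionary layout, and the starting 50% chance for a second head are all assumptions, and "Sleepy Bitch Disorder" is left out because no odds are given for it.

```python
import random

# Odds quoted in the post; the dictionary layout is an assumption.
DISORDER_ODDS = {
    "missing an arm": 4 / 10_000,
    "ADHD": 28 / 1_000,
}

def roll_disorders(rng):
    """Roll each disorder independently, so a creature can end up with several."""
    return [name for name, p in DISORDER_ODDS.items() if rng.random() < p]

def roll_head_count(rng, first_extra_chance=0.5):
    """Start with one head; each added head halves the chance of the next.

    first_extra_chance is a made-up starting value, not the generator's number.
    """
    heads, chance = 1, first_extra_chance
    while rng.random() < chance:
        heads += 1
        chance /= 2
    return heads

rng = random.Random(2024)  # seeded so repeated runs give the same creature
heads = roll_head_count(rng)
print(heads, roll_disorders(rng))
```

Because the rolls are independent, having every disorder at once is possible but very unlikely, which matches the behaviour described in the post.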

Anyways I've started on cleaning up v1.0.2 so that I can understand things easier for when I make the web version. Until then . ..

It'll still be one every 3 hours, so 8 Dragons a day. All the old copyright rules still apply, anyone can use any creature that spits out. I have a .bat file that'll run the Python program 250 times in about 5 seconds, which'll make 31.25 days, basically lasting us until 2025
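The queue math checks out, sketched here with the numbers from the post (the variable names are just for illustration):

```python
RUNS = 250                      # creatures generated by one batch run
HOURS_BETWEEN_POSTS = 3         # one post every 3 hours
POSTS_PER_DAY = 24 // HOURS_BETWEEN_POSTS

days_covered = RUNS / POSTS_PER_DAY
print(POSTS_PER_DAY, days_covered)
```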

Also I'll be setting up a bunch of proper blog tags over the next week or two, and update the pinned content as well. Reviving an abandoned blog is a lot of work :P

7 notes


Text

Cleaning Dirty Data in Python: Practical Techniques with Pandas

I. Introduction Hey there! So, let’s talk about a really important step in data analysis: data cleaning. It’s basically like tidying up your room before a big party – you want everything to be neat and organized so you can find what you need, right? Now, when it comes to sorting through a bunch of messy data, you’ll be glad to have a tool like Pandas by your side. It’s like the superhero of…


#categorical-data#data-cleaning#data-duplicates#data-outliers#inconsistent-data#missing-values#pandas-tutorial#python-data-cleaning-tools#python-data-manipulation#python-pandas#text-cleaning

0 notes

Text

Wilma again

I have ongoing issues with the Linux Mint installation on the laptop I use for software development and blogging.

You may recall I tried upgrading to version 22 (code name: Wilma), encountered a graphics regression, and had to revert the upgrade using TimeShift. Since then, my Linux experience hasn't been quite right. While troubleshooting an issue, I uninstalled Python; this broke APT, causing routine software updates to fail.

Yesterday I created a new partition on the HDD and attempted a clean install of Wilma there. After several tries, I suspect that my largest USB thumb drive (NXT brand, purchased in January 2024) suffers from data corruption.

Believe it or not, my 2nd-largest thumb drive is too small to hold the 2.9 GByte ISO image, so this morning I'm off to buy a new thumb drive.

#laptop#linux mint#wilma#timeshift#linux#upgrade#python#usb#partition#nxt#storage devices#believe it or not#installation#apt#operating systems#system administration

7 notes


Text

Unlocking the Power of Data: Essential Skills to Become a Data Scientist

In today's data-driven world, the demand for skilled data scientists is skyrocketing. These professionals are the key to transforming raw information into actionable insights, driving innovation and shaping business strategies. But what exactly does it take to become a data scientist? It's a multidisciplinary field, requiring a unique blend of technical prowess and analytical thinking. Let's break down the essential skills you'll need to embark on this exciting career path.

1. Strong Mathematical and Statistical Foundation:

At the heart of data science lies a deep understanding of mathematics and statistics. You'll need to grasp concepts like:

Linear Algebra and Calculus: Essential for understanding machine learning algorithms and optimizing models.

Probability and Statistics: Crucial for data analysis, hypothesis testing, and drawing meaningful conclusions from data.

2. Programming Proficiency (Python and/or R):

Data scientists are fluent in at least one, if not both, of the dominant programming languages in the field:

Python: Known for its readability and extensive libraries like Pandas, NumPy, Scikit-learn, and TensorFlow, making it ideal for data manipulation, analysis, and machine learning.

R: Specifically designed for statistical computing and graphics, R offers a rich ecosystem of packages for statistical modeling and visualization.

3. Data Wrangling and Preprocessing Skills:

Raw data is rarely clean and ready for analysis. A significant portion of a data scientist's time is spent on:

Data Cleaning: Handling missing values, outliers, and inconsistencies.

Data Transformation: Reshaping, merging, and aggregating data.

Feature Engineering: Creating new features from existing data to improve model performance.
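As a small illustration of the cleaning and transformation tasks listed above, the sketch below flags an outlier with the common 1.5 × IQR rule, then merges and aggregates the remaining rows. The data, field names, and the choice of the IQR rule are assumptions made for this example.

```python
import statistics
from collections import defaultdict

# Hypothetical order data and a customer lookup table.
orders = [
    {"customer_id": 1, "amount": 20.0},
    {"customer_id": 1, "amount": 25.0},
    {"customer_id": 2, "amount": 22.0},
    {"customer_id": 2, "amount": 24.0},
    {"customer_id": 3, "amount": 21.0},
    {"customer_id": 3, "amount": 900.0},  # suspicious value
]
customers = {1: "north", 2: "south", 3: "north"}

# Cleaning: keep only amounts inside the 1.5 * IQR fences.
amounts = [o["amount"] for o in orders]
q1, _, q3 = statistics.quantiles(amounts, n=4, method="inclusive")
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
kept = [o for o in orders if low <= o["amount"] <= high]

# Transformation: merge in the region, then aggregate totals per region.
totals = defaultdict(float)
for o in kept:
    region = customers[o["customer_id"]]
    totals[region] += o["amount"]

print(dict(totals))
```

Whether an outlier should be dropped, capped, or kept is a judgment call that depends on the data; dropping it here is only for demonstration.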

4. Expertise in Databases and SQL:

Data often resides in databases. Proficiency in SQL (Structured Query Language) is essential for:

Extracting Data: Querying and retrieving data from various database systems.

Data Manipulation: Filtering, joining, and aggregating data within databases.
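To make those SQL tasks concrete, here is a minimal sketch that runs against an in-memory SQLite database through Python's built-in `sqlite3` module. The table and column names are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway database, nothing is written to disk
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'north'), (2, 'south');
    INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 35.0), (3, 2, 5.0), (4, 2, 50.0);
""")

# Filter (WHERE), join (JOIN ... ON), and aggregate (GROUP BY) in one query.
query = """
    SELECT c.region, COUNT(*) AS n_orders, SUM(o.amount) AS total
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.amount >= ?
    GROUP BY c.region
    ORDER BY c.region
"""
rows = conn.execute(query, (10.0,)).fetchall()
print(rows)
conn.close()
```

Passing the threshold as a `?` parameter rather than formatting it into the string is the usual way to avoid SQL injection.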

5. Machine Learning Mastery:

Machine learning is a core component of data science, enabling you to build models that learn from data and make predictions or classifications. Key areas include:

Supervised Learning: Regression, classification algorithms.

Unsupervised Learning: Clustering, dimensionality reduction.

Model Selection and Evaluation: Choosing the right algorithms and assessing their performance.
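A tiny supervised-learning example shows the fit-then-evaluate loop in miniature. This sketch fits a least-squares line on synthetic data and scores it on a held-out split; the data, the 80/20 split, and the helper names are assumptions for illustration, and a real project would normally use a library such as scikit-learn.

```python
import random

rng = random.Random(0)

# Synthetic data: y = 3x + 2 plus a little Gaussian noise.
xs = [i / 10 for i in range(100)]
data = [(x, 3 * x + 2 + rng.gauss(0, 0.1)) for x in xs]

# Hold out 20% of the points so the model is scored on data it has not seen.
rng.shuffle(data)
train, test = data[:80], data[80:]

def fit_line(points):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def mse(points, slope, intercept):
    """Mean squared error of the line's predictions on the given points."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in points) / len(points)

slope, intercept = fit_line(train)
print(round(slope, 2), round(intercept, 2), mse(test, slope, intercept))
```

Comparing the error on `test` with the error on `train` is the simplest check for overfitting.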

6. Data Visualization and Communication Skills:

Being able to effectively communicate your findings is just as important as the analysis itself. You'll need to:

Visualize Data: Create compelling charts and graphs to explore patterns and insights using libraries like Matplotlib, Seaborn (Python), or ggplot2 (R).

Tell Data Stories: Present your findings in a clear and concise manner that resonates with both technical and non-technical audiences.

7. Critical Thinking and Problem-Solving Abilities:

Data scientists are essentially problem solvers. You need to be able to:

Define Business Problems: Translate business challenges into data science questions.

Develop Analytical Frameworks: Structure your approach to solve complex problems.

Interpret Results: Draw meaningful conclusions and translate them into actionable recommendations.

8. Domain Knowledge (Optional but Highly Beneficial):

Having expertise in the specific industry or domain you're working in can give you a significant advantage. It helps you understand the context of the data and formulate more relevant questions.

9. Curiosity and a Growth Mindset:

The field of data science is constantly evolving. A genuine curiosity and a willingness to learn new technologies and techniques are crucial for long-term success.

10. Strong Communication and Collaboration Skills:

Data scientists often work in teams and need to collaborate effectively with engineers, business stakeholders, and other experts.

Kickstart Your Data Science Journey with Xaltius Academy's Data Science and AI Program:

Acquiring these skills can seem like a daunting task, but structured learning programs can provide a clear and effective path. Xaltius Academy's Data Science and AI Program is designed to equip you with the essential knowledge and practical experience to become a successful data scientist.

Key benefits of the program:

Comprehensive Curriculum: Covers all the core skills mentioned above, from foundational mathematics to advanced machine learning techniques.

Hands-on Projects: Provides practical experience working with real-world datasets and building a strong portfolio.

Expert Instructors: Learn from industry professionals with years of experience in data science and AI.

Career Support: Offers guidance and resources to help you launch your data science career.

Becoming a data scientist is a rewarding journey that blends technical expertise with analytical thinking. By focusing on developing these key skills and leveraging resources like Xaltius Academy's program, you can position yourself for a successful and impactful career in this in-demand field. The power of data is waiting to be unlocked – are you ready to take the challenge?

3 notes


Text

What is Data Science? A Comprehensive Guide for Beginners

In today’s data-driven world, the term “Data Science” has become a buzzword across industries. Whether it’s in technology, healthcare, finance, or retail, data science is transforming how businesses operate, make decisions, and understand their customers. But what exactly is data science? And why is it so crucial in the modern world? This comprehensive guide is designed to help beginners understand the fundamentals of data science, its processes, tools, and its significance in various fields.

#Data Science#Data Collection#Data Cleaning#Data Exploration#Data Visualization#Data Modeling#Model Evaluation#Deployment#Monitoring#Data Science Tools#Data Science Technologies#Python#R#SQL#PyTorch#TensorFlow#Tableau#Power BI#Hadoop#Spark#Business#Healthcare#Finance#Marketing

0 notes

Text


Hey there! 🚀 Becoming a data analyst is an awesome journey! Here’s a roadmap for you:

1. Start with the Basics 📚:

- Dive into the basics of data analysis and statistics. 📊

- Platforms like Learnbay (Data Analytics Certification Program For Non-Tech Professionals), Edx, and Intellipaat offer fantastic courses. Check them out! 🎓

2. Master Excel 📈:

- Excel is your best friend! Learn to crunch numbers and create killer spreadsheets. 📊🔢

3. Get Hands-on with Tools 🛠️:

- Familiarize yourself with data analysis tools like SQL, Python, and R. Pluralsight has some great courses to level up your skills! 🐍📊

4. Data Visualization 📊:

- Learn to tell a story with your data. Tools like Tableau and Power BI can be game-changers! 📈📉

5. Build a Solid Foundation 🏗️:

- Understand databases, data cleaning, and data wrangling. It’s the backbone of effective analysis! 💪🔍

6. Machine Learning Basics 🤖:

- Get a taste of machine learning concepts. It’s not mandatory but can be a huge plus! 🤓🤖

7. Projects, Projects, Projects! 🚀:

- Apply your skills to real-world projects. It’s the best way to learn and showcase your abilities! 🌐💻

8. Networking is Key 👥:

- Connect with fellow data enthusiasts on LinkedIn, attend meetups, and join relevant communities. Networking opens doors! 🌐👋

9. Certifications 📜:

- Consider getting certified. It adds credibility to your profile. 🎓💼

10. Stay Updated 🔄:

- The data world evolves fast. Keep learning and stay up-to-date with the latest trends and technologies. 📆🚀

. . .

#programming#programmers#developers#mobiledeveloper#softwaredeveloper#devlife#coding.#setup#icelatte#iceamericano#data analyst road map#data scientist#data#big data#data engineer#data management#machinelearning#technology#data analytics#Instagram

8 notes
