#java lambda

Text

Java Lambda – Here Is The Reason Why Java 8 Still Slays! - SynergyTop

Unlock the potential of Java 8 with SynergyTop’s blog ‘Java Lambda – Why Java 8 Still Slays!’ Explore core features like lambda expressions, method references, and the Stream API. Witness enhanced development efficiency and learn the syntax of Java lambda expressions. Our verdict? Java lambdas can boost performance, since, unlike anonymous classes, they are not each compiled into a separate class file.
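
The blurb only names these features. As a rough sketch that is not taken from the SynergyTop article itself, here is what the syntax forms it mentions look like in Java 8: a no-argument lambda, a one-argument lambda, a block-bodied lambda, a method reference, and a small Stream API pipeline. The class and field names are made up for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.BiFunction;
import java.util.function.Function;
import java.util.function.Supplier;
import java.util.stream.Collectors;

class LambdaSyntaxDemo {
    // No parameters: () -> expression
    static final Supplier<String> GREETING = () -> "Hello, Java 8";

    // One parameter: parentheses around a single untyped parameter are optional
    static final Function<Integer, Integer> SQUARE = x -> x * x;

    // Two parameters with a block body and an explicit return
    static final BiFunction<Integer, Integer, Integer> ADD = (a, b) -> {
        int sum = a + b;
        return sum;
    };

    // Method reference: shorthand for s -> s.toUpperCase()
    static final Function<String, String> UPPER = String::toUpperCase;

    // Stream API: filter, map and collect in one pipeline
    static List<String> shortNamesUpper(List<String> names) {
        return names.stream()
                .filter(n -> n.length() <= 4)
                .map(String::toUpperCase)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(GREETING.get());        // Hello, Java 8
        System.out.println(SQUARE.apply(7));       // 49
        System.out.println(ADD.apply(2, 3));       // 5
        System.out.println(UPPER.apply("lambda")); // LAMBDA
        System.out.println(shortNamesUpper(Arrays.asList("Ada", "Grace", "Alan"))); // [ADA, ALAN]
    }
}
```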

#Synergytop#Java Lambda#Lambda Expression#Lambda Function Java#Java Lambda Expression#Java 8 Features

0 notes

Text

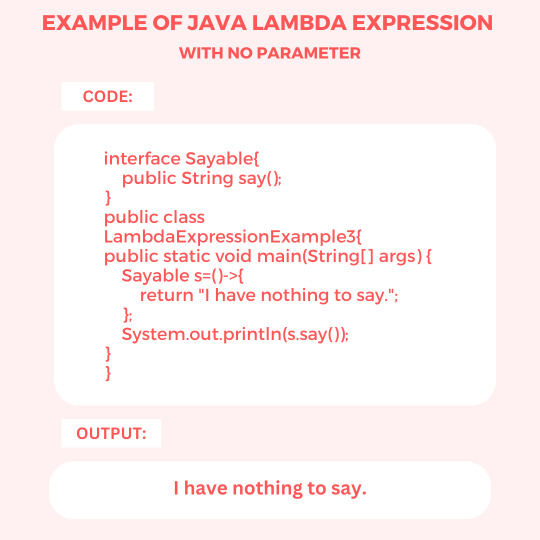

Example of Java Lambda Expression with No Parameter

Let us see an example of Java Lambda Expression with no parameter:
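
The original example was posted as an image. A minimal stand-in, assuming a hypothetical single-method interface named `Greeter`, could look like this; the empty parentheses `()` are what mark a lambda with no parameters.

```java
class NoParamLambda {
    static String run() {
        // Empty parentheses: this lambda takes no parameters
        Greeter greeter = () -> "Hello from a lambda with no parameters";
        return greeter.greet();
    }

    public static void main(String[] args) {
        System.out.println(run());

        // The built-in Runnable interface has the same no-argument shape
        Runnable task = () -> System.out.println("Running with no arguments");
        task.run();
    }
}

// Hypothetical functional interface whose single abstract method takes no parameters
@FunctionalInterface
interface Greeter {
    String greet();
}
```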

#java#programming#javaprogramming#code#coding#engineering#software#softwaredevelopment#education#technology#javalambda#lambda#lambdaexpression#noparameter#online

2 notes

Text

might work on legacy code, my goodness

1 note

Text

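Understanding Java Functional Programming: From Lambda Expressions to Method References | 3 Kinds of Method References

Overview of Content: In this article, we take a deep dive into Java functional programming. We look at the difference between closures and callbacks, and at how functional programming differs from object-oriented programming. We cover the syntax and format of lambda expressions in Java in detail and analyze the differences between anonymous classes and lambda expressions. The article also shows how to define your own functional interface (FunctionalInterface) and introduces Java's built-in functional interfaces, to help you master these powerful tools. In the method reference section, we explain the concepts of method references, constructor references, and array references, and demonstrate where each applies. This article should give you useful knowledge for learning and applying Java functional programming, improving your programming skills and efficiency. Writing and sharing articles takes effort; if you quote or reference this article, please cite the source. Guidance and comments are welcome (and if you found it well written, please show me some support). Thanks…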
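
The excerpt only outlines the article. As an illustrative sketch (not the author's code), this shows a custom `@FunctionalInterface`, an anonymous class next to the equivalent lambda, and the three kinds of reference the title counts: a method reference, a constructor reference, and an array reference. `StringOp` and the field names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntFunction;
import java.util.function.Supplier;

class MethodRefDemo {
    // A custom functional interface: exactly one abstract method
    @FunctionalInterface
    interface StringOp {
        String apply(String s);
    }

    // Anonymous class: declares a new class body, so `this` inside it is the anonymous instance
    static final StringOp TRIM_ANON = new StringOp() {
        @Override
        public String apply(String s) {
            return s.trim();
        }
    };

    // Equivalent lambda: no new class body and no new scope for `this`
    static final StringOp TRIM_LAMBDA = s -> s.trim();

    // 1. Method reference (instance method of an arbitrary String): same as s -> s.trim()
    static final StringOp TRIM_REF = String::trim;

    // 2. Constructor reference: same as () -> new ArrayList<>()
    static final Supplier<List<String>> NEW_LIST = ArrayList::new;

    // 3. Array reference: same as n -> new String[n]
    static final IntFunction<String[]> NEW_ARRAY = String[]::new;

    public static void main(String[] args) {
        System.out.println(TRIM_ANON.apply("  a  ") + TRIM_LAMBDA.apply(" b ") + TRIM_REF.apply(" c")); // abc
        List<String> list = NEW_LIST.get();
        list.add("x");
        System.out.println(list);                      // [x]
        System.out.println(NEW_ARRAY.apply(3).length); // 3
    }
}
```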

0 notes

Text

Performance Best Practices Using Java and AWS Lambda: Combinations

#aws-lambda#aws-sdk#cloud-computing#java#java-best-practices#java-optimization#performance-testing#software-engineering

0 notes

Text

A Trickle or a Flood! Java Stream Basics

Java streams and the corresponding operation pipelines are useful to perform calculations and transformations on collections, ranges and other types of related inputs. #java #streams #lambdaExpressions #pipelines

TIP: References Quick List: Java: Lambda Expressions; Javadoc: Package java.util.function; Javadoc: Package java.util.stream; Javadoc: IntStream. Table of Contents: Introduction; Creating A Basic Stream; Lazy Intermediate Stream Operations; Side Effects; Filtering; Mapping Items; Map; FlatMap; Map to Primitives; Sorting; Removing Duplicates; Terminal Operations; Reducing a Stream to a Single Output; First…
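
A compact sketch touching several of the listed topics (creating a stream, lazy intermediate operations, filtering, mapping, flatMap, mapping to primitives, sorting, removing duplicates, and reducing to a single output). It uses only the standard `java.util.stream` package; the method names are invented for this example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.stream.Collectors;
import java.util.stream.IntStream;
import java.util.stream.Stream;

class StreamBasics {
    // Create a stream from a range, filter and map it, then reduce to one output
    static int sumOfEvenSquares(int upToInclusive) {
        return IntStream.rangeClosed(1, upToInclusive)
                .filter(n -> n % 2 == 0)  // keep even numbers
                .map(n -> n * n)          // square each one
                .reduce(0, Integer::sum); // terminal: fold into a single int
    }

    // flatMap splits each line into words; distinct and sorted tidy the result
    static List<String> distinctSortedWords(List<String> lines) {
        return lines.stream()
                .flatMap(line -> Arrays.stream(line.split(" ")))
                .distinct()
                .sorted()
                .collect(Collectors.toList());
    }

    // mapToInt switches to a primitive IntStream, avoiding boxed Integers
    static int totalLength(List<String> words) {
        return words.stream().mapToInt(String::length).sum();
    }

    // Intermediate operations are lazy: peek runs only when a terminal operation
    // pulls elements, and findFirst stops after the first one
    static int[] lazinessDemo() {
        AtomicInteger visited = new AtomicInteger();
        Stream<String> pipeline = Stream.of("a", "b", "c")
                .peek(x -> visited.incrementAndGet());
        int before = visited.get(); // no terminal operation yet
        pipeline.findFirst();
        int after = visited.get();
        return new int[] {before, after};
    }

    public static void main(String[] args) {
        System.out.println(sumOfEvenSquares(6));                              // 56
        System.out.println(distinctSortedWords(Arrays.asList("b a", "c a"))); // [a, b, c]
        System.out.println(totalLength(Arrays.asList("ab", "cde")));          // 5
        System.out.println(Arrays.toString(lazinessDemo()));                  // [0, 1]
    }
}
```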

0 notes

Text

AWS Lambda Compute Service Tutorial for Amazon Cloud Developers

Full video link: https://youtube.com/shorts/QmQOWR_aiNI. Hi, a new video tutorial on AWS Lambda has been published on the codeonedigest YouTube channel.

AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources for you. These events may include changes in state such as a user placing an item in a shopping cart on an ecommerce website. AWS Lambda automatically runs code in response to multiple events, such as HTTP requests via Amazon API Gateway, modifications…

#amazon lambda java example#aws#aws cloud#aws lambda#aws lambda api gateway#aws lambda api gateway trigger#aws lambda basic#aws lambda code#aws lambda configuration#aws lambda developer#aws lambda event trigger#aws lambda eventbridge#aws lambda example#aws lambda function#aws lambda function example#aws lambda function s3 trigger#aws lambda java#aws lambda server#aws lambda service#aws lambda tutorial#aws training#aws tutorial#lambda service

0 notes

Text

Lambda expressions, a significant new feature, were added to Java in Java SE 8. They offer a clear and concise way to use an expression to implement an interface that has a single abstract method. They are especially helpful with the collections library, where they make it easy to iterate over, filter, and extract data from a collection.
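
A minimal sketch of those uses, assuming a hypothetical `Book` class: a lambda implements `Comparator` (a single-method interface), `forEach` iterates, a stream filters and extracts titles, and `removeIf` filters a list in place.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class CollectionLambdas {
    // Hypothetical data class used only for this example
    static final class Book {
        final String title;
        final int year;

        Book(String title, int year) {
            this.title = title;
            this.year = year;
        }
    }

    // Filter books published in or after `year`, then extract their titles
    static List<String> titlesSince(List<Book> books, int year) {
        return books.stream()
                .filter(b -> b.year >= year)
                .map(b -> b.title)
                .collect(Collectors.toList());
    }

    // Comparator has a single abstract method, so a lambda can implement it
    static List<Book> sortedByYear(List<Book> books) {
        List<Book> copy = new ArrayList<>(books);
        copy.sort((a, b) -> Integer.compare(a.year, b.year));
        return copy;
    }

    public static void main(String[] args) {
        List<Book> books = Arrays.asList(
                new Book("Alpha", 2001), new Book("Beta", 2015), new Book("Gamma", 2010));

        // Iterate: forEach takes a Consumer lambda
        books.forEach(b -> System.out.println(b.title + " (" + b.year + ")"));

        System.out.println(titlesSince(books, 2010)); // [Beta, Gamma]

        // removeIf takes a Predicate lambda and modifies the list in place
        List<Book> recent = new ArrayList<>(books);
        recent.removeIf(b -> b.year < 2010);
        System.out.println(recent.size()); // 2
    }
}
```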

1 note

Text

Rambling About C# Being Alright

I think C# is an alright language. This is one of the highest distinctions I can give to a language.

Warning: This post is verbose and rambly and probably only good at telling you why someone might like C# and not much else.

~~~

There's something I hate about every other language. Worse, there are things I hate about other languages that I know will never get better. Even worse, some of those things ALSO feel like unforced errors.

With C# there are a few things I dislike or that are missing. C#'s feature set does not obviously excel at anything, but it avoids making any huge misstep in things I care about. Nothing in C# makes me feel like the language designer has personally harmed me.

C# is a very tolerable language.

C# is multi-paradigm.

C# is the Full Middle Malcomist language.

C# will try to not hurt you.

A good way to describe C# is "what if Java sucked less". This, of course, already sounds unappealing to many, but that's alright. I'm not trying to gas it up too much here.

C# has sins, but let's try to put them into some context here and perhaps the reason why I'm posting will become more obvious:

C# didn't try to avoid generics and then implement them in a way that is very limiting (cough Go).

C# doesn't hamstring your ability to have statement lambdas because the language designer dislikes them and also because the language designer decided to have semantic whitespace making statement lambdas harder to deal with (cough Python).

C# doesn't force you to box value types into reference types just so you can put them into collections (cough Java).

C# doesn't ruin your ability to interact with memory efficiently by forbidding you from creating custom value types, which sends everything to the heap (cough cough Java, Minecraft).

C# doesn't have insane implicit type coercions that have become the subject of language design comedy (cough JavaScript).

C# doesn't treat privacy accessors as a suggestion that developers pinkie swear to respect instead of actually enforcing them (cough cough Python).

Plainly put, a lot of the time I find C# to be alright by process of elimination. I'm not trying to shit on your favorite language. Everyone has different things they find tolerable. I have the Buddha nature so I wish for all things to find their tolerable language.

I do also think that C# is notable for being a mainstream language (aka not Haskell) that has a smaller amount of egregious mistakes, quirks and Faustian bargains.

The Typerrrrr

C# is statically typed, but the typing is largely effortless to navigate unlike something like Rust, and the GC gives a greater degree of safety than something like C++.

Of course, the typing being easy to work with also makes it less safe than Rust. But this is an appropriate trade-off for certain kinds of applications, especially considering that C# is memory safe by virtue of running on a VM. Don't come at me, I'm a Rust respecter!!

You know how some people talk about Python being amazing for prototyping? That's how I feel about C#. No matter how much time I would dedicate to Python, C# would still be a more productive language for me. The type system would genuinely make me faster for the vast majority of cases. Of course Python has gradual typing now, so any comparison gets more difficult when you consider that. But what I'm trying to say is that I never understood the idea that doing away entirely with static typing is good for fast iteration.

Also yes, C# can be used as a repl. Leave me alone with your repls. Also, while the debugger is active you can also evaluate arbitrary code within the current scope.

I think that going full dynamic typing is a mistake in almost every situation. The fact that C# doesn't do that already puts it above other languages for me. This stance on typing is controversial, but it's my opinion that it really shouldn't be. And the wind has constantly been blowing towards adding gradual typing to dynamic languages.

The modest typing capabilities of C#, coupled with OOP and inheritance, let you create pretty awful OOP slop. But that's whatever. At work we use inheritance in very few places, where it results in neat code reuse, and otherwise it's mostly just interfaces getting implemented.

C#'s typing and generic system is powerful enough to offer you a plethora of super-ergonomic collection transformation methods via the LINQ library. There's a lot of functional-style programming you can do with that. You know, map, filter, reduce, that stuff?

Even if you make a completely new collection type, if it implements IEnumerable<T> it will benefit from LINQ automatically. Every language these days has something like this, but it's so ridiculously easy to use in C#. Coupled with how C# lets you (1) easily define immutable data types, (2) explicitly control access to struct or class members, (3) do pattern matching, you can end up with code that flows really well.

A Friendly Kitchen Sink

Some people have described C#'s feature set as bloated. It is getting some syntactic diversity which makes it a bit harder to read someone else's code. But it doesn't make C# harder to learn, since it takes roughly the same amount of effort to get to a point where you can be effective in it.

Most of the more specific features can be effortlessly ignored. The ones that can't be effortlessly ignored tend to bring something genuinely useful to the language -- such as tuples and destructuring. Tuples have their own syntax, the syntax is pretty intuitive, but the first time you run into it, you will have to do a bit of learning.

C# has an immense amount of small features meant to make the language more ergonomic. They're too numerous to mention and they just keep getting added.

I'd like to draw attention to some features not because they're the most important but rather because it feels like they communicate the "personality" of C#. Not sure what level of detail was appropriate, so feel free to skim.

Stricter Null Handling. If you think not having to explicitly deal with null is the billion dollar mistake, then C# tries to fix a bit of the problem by allowing you to enable a strict context where you have to explicitly tell it that something can be null, otherwise it will assume that the possibility of a reference type being null is an error. It's a bit more complicated than that, but it definitely helps with safety around nullability.

Default Interface Implementation. A problem in C# which drives usage of inheritance is that with just interfaces there is no way to reuse code outside of passing function pointers. A lot of people don't get this and think that inheritance is just used because other people are stupid or something. If you have a couple of methods that would be implemented exactly the same for classes 1 through 99, but somewhat differently for classes 100 through 110, then without inheritance you're fucked. A much better way would be Rust's trait system, but for that to work you need really powerful generics, so it's too different a path for C# to tread. Instead, what C# did was make it so that you can write an implementation for methods declared in an interface, as long as that implementation only uses members defined in the interface (this makes sense, why would it have access to anything else?). So now you can have a default implementation for the 1 through 99 case and save some of your sanity. Of course, it's not a panacea: if the implementation of the method requires access to the internal state of the 1 through 99 case, default interface implementation won't save you. But it can still make things easier via some techniques I won't get into. The important part is that default interface implementation allows code reuse and reduces reasons to use inheritance.
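
Java gained the same feature in Java 8 under the name default methods, so the situation described above can be sketched in Java as well. `Shape`, `Square`, and `Circle` are hypothetical: `Square` stands in for classes 1 through 99 and inherits the default, while `Circle` stands in for classes 100 through 110 and overrides it. As in C#, the default body only calls methods declared on the interface.

```java
class DefaultMethodDemo {
    public static void main(String[] args) {
        System.out.println(new Square(2).describe()); // square with area 4.0
        System.out.println(new Circle(1).describe()); // circle of radius 1.0
    }
}

interface Shape {
    String name();
    double area();

    // Shared default: only uses members declared in this interface
    default String describe() {
        return name() + " with area " + area();
    }
}

// Inherits the default describe() ("classes 1 through 99")
class Square implements Shape {
    private final double side;
    Square(double side) { this.side = side; }
    public String name() { return "square"; }
    public double area() { return side * side; }
}

// Overrides the default ("classes 100 through 110")
class Circle implements Shape {
    private final double r;
    Circle(double r) { this.r = r; }
    public String name() { return "circle"; }
    public double area() { return Math.PI * r * r; }

    @Override
    public String describe() { return "circle of radius " + r; }
}
```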

Performance Optimization. C# has a plethora of features regarding that, most of which will never be encountered by the average programmer. Examples: (1) stackalloc - allocate a block of memory (such as a buffer of value types) on the stack when you know it won't outlive the current scope. (2) Specialized APIs for avoiding memory allocations in happy paths. (3) Lazy initialization APIs. (4) APIs for dealing with memory more directly that allow high performance when interoperating with C/C++ while still keeping a degree of safety.

Fine Control Over Async Runtime. C# lets you write your own... async builder and scheduler? It's a bit esoteric and hard to describe. But basically all the functionality of async/await that does magic under the hood? You can override that magic to do some very specific things that you'll rarely need. Unity3D takes advantage of this in order to allow async/await to work on WASM even though it is a single-threaded environment. It implements a cooperative scheduler so the program doesn't immediately freeze the moment you do await in a single-threaded environment. Most people don't know this capability exists and it doesn't affect them.

Tremendous Amount Of Synchronization Primitives and API. This one does actually make multithreaded code harder to deal with, but basically C# erred a lot in favor of having many different ways to do multithreading because they wanted to suit different usecases. Most people just deal with idiomatic async/await code, but a very small minority of C# coders deal with locks, atomics, semaphores, mutexes, monitors, interlocked, spin waiting etc. They knew they couldn't make this shit safe, so they tried to at least let you have ready-made options for your specific use case, even if it causes some balkanization.

Shortly Begging For Tagged Unions

What I miss from C# is more powerful generic bounds/constraints and tagged unions (or sum types or discriminated unions or type unions or any of the other 5 names this concept has).

The generic constraints you can use in C# are anemic and combined with the lack of tagged unions this is rather painful at times.

I remember seeing Microsoft devs saying they don't see enough of a usecase for tagged unions. I've at times wanted to strangle certain people. These two facts are related to one another.

My stance is that if you think your language doesn't need or benefit from tagged unions, either your language is very weird, or, more likely you're out of your goddamn mind. You are making me do really stupid things every time I need to represent a structure that can EITHER have a value of type A or a value of type B.

But I think C# will eventually get tagged unions. There's a proposal for it here. I would be overjoyed if it got implemented. It seems like it's been getting traction.

Also there was an entire section on unchecked exceptions that I removed because it wasn't interesting enough. Yes, C# could probably have had checked exceptions, it doesn't, and that's a mistake. But ultimately it doesn't seem to have caused any make-or-break in a comparison with Java, which has them. They'd all be better off returning an Error<T>. The short story is that the consequences of unchecked exceptions have been highly tolerable in practice.

Ecosystem State & FOSSness

C# is better than ever and the tooling ecosystem is better than ever. This is true of almost every language, but I think C# receives a rather high amount of improvements per version. Additionally the FOSS story is at its peak.

Roslyn, the bedrock of the toolchain, the compiler and analysis provider, is under MIT license. The fact that it does analysis as well is important, because this means you can use the wealth of Roslyn analyzers to do linting.

If your FOSS tooling lets you compile but you don't get any checking as you type, then your development experience is wildly substandard.

A lot of stupid crap with cross-platform compilation that used to be confusing or difficult is now rather easy to deal with. It's basically as easy as (1) use .NET Core, (2) tell dotnet to build for Linux. These steps take no extra effort and the first step is the default way to write C# these days.

Dotnet is part of the SDK and contains functionality to create .NET Core projects and to use other tools to build said projects. Dotnet is published under MIT, because the whole SDK and runtime are published under MIT.

Yes, the debugger situation is still bad -- there's no FOSS option for it, but this is more because nobody cares enough to go and solve it. JetBrains proved anyone can do it if they have enough development time, since they wrote a debugger from scratch for their proprietary C# IDE Rider.

Where C# falls flat on its face is the "userspace" ecosystem. Plainly put, because C# is a Microsoft product, people with FOSS inclinations have steered clear of it to such a degree that the packages you have available are not even 10% of what packages a Python user has available, for example. People with FOSS inclinations are generally the people who write packages for your language!!

I guess if you really really hate leftpad, you might think this is a small bonus though.

Wherein I talk about Cross-Platform

The biggest thing the ecosystem has been lacking for me is a package, preferably FOSS, for developing cross-platform applications. Even if it's just cross-platform desktop applications.

Like yes, you can build C# to many platforms, no sweat. The same way you can build Rust to many platforms, some sweat. But if you can't show a good GUI on Linux, then it's not practically-speaking cross-platform for that purpose.

Microsoft has repeatedly done GUI stuff that, predictably, only works on Windows. And yes, Linux desktop is like 4%, but that 4% contains >50% of the people who create packages for your language's ecosystem, almost the exact point I made earlier. If a developer runs Linux and they can't have their app run on Linux, they are not going to touch your language with a ten foot pole for that purpose. I think this largely explains why C#'s ecosystem feels stunted.

The thing is, I'm not actually sure how bad or good the situation is, since most people just don't even try using C# for this usecase. There's a general... ecosystem malaise where few care to use the language for this, chiefly because of the tone that Microsoft set a decade ago. It's sad.

HOWEVER.

Avalonia, A New Hope?

Today we have Avalonia. Avalonia is an open-source framework that lets you build cross-platform applications in C#. It's MIT licensed. It will work on Windows, macOS, Linux, iOS, Android and also somehow in the browser. It seems to do this by actually drawing pixels via SkiaSharp (or optionally Direct2D on Windows).

They make money by offering migration services from WPF apps to Avalonia, plus general support.

I can't say how good Avalonia is yet. I've researched a bit and it's not obviously bad, which is distinct from being good. But if it's actually good, this would be a holy grail for the ecosystem:

You could use a statically typed language that is productive for this type of software development to create cross-platform applications that have higher performance than the Electron slop. That's valuable!

This possibility warrants a much higher level of enthusiasm than I've seen, especially within the ecosystem itself. This is an ecosystem that was, for a while, entirely landlocked, only able to make Windows desktop applications.

I cannot overstate how important it is for a language's ecosystem to have a package like this and have it be good. Rust is still missing a good option. Gnome is unpleasant to use and buggy. Falling back to using Electron while writing Rust just seems like a bad joke. A lot of the Rust crates that are neither Electron nor Gnome tend to be really really undercooked.

And now I've actually talked myself into checking out Avalonia... I mean after writing all of that I feel like a charlatan for not having investigated it already.

69 notes

Text

Why the fuck am I using lambdas in Fucking JAVA???

6 notes

Note

talk about the .net ecosystem. i know next to nothing about it, i think

so c# is microsoft's answer to java, and .net is microsoft's version of the jvm. im not a huge .net guy myself because until recently it was much better on windows, but it's a lively ecosystem of packages the way you'd have with any Serious Business Language For Getting Things Done. from what ive seen c# was very competitive for a while by being more willing to adopt new language features than java (better async, cleaner lambdas, both of which java has picked up). there are other languages that run on .net, just like the jvm- f# is a pretty popular functional language, and relevant to the terminal experience on windows, there's powershell the scripting language.

powershell is clunky, and the syntax is unintuitive, but it has access to the full power of the .net ecosystem. any windows machine will be full to the brim with random libraries to make shit run, and whatever interfaces they expose to developers are available in powershell. plus you get real types! it's the most powerful scripting language that keeps its shell roots- the closest ive come is janet with the sh library, but it can't approach powershell's integration with the rest of the system

31 notes

Text

Expanding and cleaning up on a conversation I had with @suntreehq in the comments of this post:

Ruby is fine, I'm just being dramatic. It's not nearly as incomprehensible as I find JavaScript, Perl, or Python. I think it makes some clumsy missteps, and it wouldn't be my first (or even fifth) choice if I were starting a new project, but insofar as I need to use it in my Software Engineering class I can adapt.

There are even things I like about it -- it's just that all of them are better implemented in the languages Ruby borrows them from. I don't want Lisp with Eiffel's semantics, I want Lisp with Lisp's semantics. I don't want Ada with Perl's type system, I want Ada with Ada's type system.

One of these missteps to me is how it (apparently) refuses to adopt popular convention when it comes to the names and purposes of its keywords.

Take yield. In every language I've ever used, yield has been used for one purpose: suspending the current execution frame and returning to something else. In POSIX C, this is done with sched_yield() (or the nonstandard pthread_yield()), which signals the thread implementation that the current thread isn't doing anything and something else should be scheduled instead. In languages with coroutines, like unstable Rust, the yield keyword is used to pause execution of the current coroutine and optionally return a value (e.g. yield 7; or yield foo.bar;); execution can then be resumed by calling x.resume(), where x is some coroutine. In languages with generators, like Python, the behavior is very similar.

In Ruby, this is backwards. It doesn't behave like a return, it behaves like a call. It's literally just syntax sugar for using the call method of blocks/procs/lambdas. We're not temporarily returning to another execution frame, we're entering a new one! Those are very similar actions, but they're not the same. Why not call it "run" or "enter" or "call" or something else less likely to confuse?
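
To put the complaint in Java terms (this page's tag), Ruby's `yield` corresponds roughly to a method invoking a lambda it was handed, not to a generator suspending itself. A minimal sketch; `eachTwice` is a hypothetical name, and the Ruby line in the comment is only for comparison.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

class YieldAsCall {
    // Roughly what Ruby's `def each_twice(x); yield x; yield x; end` does:
    // each "yield" calls the block (entering its frame), then control
    // comes back here, just like any other method call.
    static void eachTwice(String x, Consumer<String> block) {
        block.accept(x);
        block.accept(x);
    }

    public static void main(String[] args) {
        List<String> seen = new ArrayList<>();
        eachTwice("hi", seen::add);
        System.out.println(seen); // [hi, hi]
    }
}
```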

Another annoyance comes in the form of the throw and catch keywords. These are almost universally (in my experience) associated with exception handling, as popularized by Java. Not so in Ruby! For some unfathomable reason, throw is used to mean the same thing as Rust or C2Y's break-label -- i.e. to quickly get out of tightly nested control flow when no more work needs to be done. Ruby does have keywords that behave identically to e.g. Java or C++'s throw and catch, but they're called raise and rescue, respectively.
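
For comparison, here is the Java construct that Ruby's `throw`/`catch` most resembles: a labeled `break` that leaves two nested loops at once. This is only an analogy; a Java labeled break is lexical and stays inside one method, whereas a Ruby `throw` can also unwind through method calls. `find` is a hypothetical helper.

```java
class LabeledBreak {
    // Returns the position of the first cell equal to `target`, or null.
    // The labeled break exits both loops as soon as a match is found.
    static int[] find(int[][] grid, int target) {
        int[] found = null;
        search:
        for (int i = 0; i < grid.length; i++) {
            for (int j = 0; j < grid[i].length; j++) {
                if (grid[i][j] == target) {
                    found = new int[] {i, j};
                    break search; // leaves the outer loop, not just the inner one
                }
            }
        }
        return found;
    }

    public static void main(String[] args) {
        int[] pos = find(new int[][] {{1, 2}, {3, 4}}, 3);
        System.out.println(pos[0] + "," + pos[1]); // 1,0
    }
}
```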

That's not to say raise and rescue aren't precedented (e.g. Eiffel and Python) but they're less common, and it doesn't change the fact that it's goofy to have both them and throw/catch with such similar but different purposes. It's just going to trip people up! Matsumoto could have picked any keywords he could have possibly wanted, and yet he picked the ones (in my opinion) most likely to confuse.

I have plenty more and deeper grievances with Ruby too (sigils, throws being able to unwind the call stack, object member variables being determined at runtime, OOP in general being IMO a clumsy paradigm, the confusing and non-orthogonal ways it handles object references and allocation, the attr_ pseudo-methods feeling hacky, initialization implying declaration, the existence of "instance_variable_get" totally undermining scope visibility, etc., etc.) but these are I think particularly glaring (if inconsequential).

5 notes

Text

Consistency and Reducibility: Which is the theorem and which is the lemma?

Here's an example from programming language theory which I think is an interesting case study about how "stories" work in mathematics. Even if a given theorem is unambiguously defined and certainly true, the ways people contextualize it can still differ.

To set the scene, there is an idea that typed programming languages correspond to logics, so that a proof of an implication A→B corresponds to a function of type A→B. For example, the typing rules for simply-typed lambda calculus are exactly the same as the proof rules for minimal propositional logic, adding an empty type Void makes it intuitionistic propositional logic, by adding "dependent" types you get a kind of predicate logic, and really a lot of different programming language features also make sense as logic rules. The question is: if we propose a new programming language feature, what theorem should we prove in order to show that it also makes sense logically?
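
As a loose illustration in Java, generic method signatures can be read as propositions and their bodies as proofs: `modusPonens` has the shape (A→B)→A→B and `transitivity` has the shape (A→B)→(B→C)→(A→C). This is only an analogy, since Java's type system is not a consistent logic (`null` inhabits every reference type and any method may loop forever); the names are made up.

```java
import java.util.function.Function;

class PropositionsAsTypes {
    // Modus ponens: from a "proof" of A -> B and a "proof" of A, get a "proof" of B
    static <A, B> B modusPonens(Function<A, B> implication, A proofOfA) {
        return implication.apply(proofOfA);
    }

    // Transitivity of implication: (A -> B) and (B -> C) give (A -> C)
    static <A, B, C> Function<A, C> transitivity(Function<A, B> ab, Function<B, C> bc) {
        return a -> bc.apply(ab.apply(a));
    }

    public static void main(String[] args) {
        Function<Integer, String> show = n -> "n=" + n;
        Function<String, Integer> len = String::length;
        System.out.println(modusPonens(show, 5));              // n=5
        System.out.println(transitivity(show, len).apply(42)); // 4
    }
}
```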

The story I first heard goes like this. In order to prove that a type system is a good logic we should prove that it is consistent, i.e. that not every type is inhabited, or equivalently that there is no program of type Void. (This approach is classical in both senses of the word: it goes back to Hilbert's program, and it is justified by Gödel's completeness theorem/model existence theorem, which basically says that every consistent theory describes something.)

Usually it is obvious that no values can be given type Void, the only issue is with non-value expressions. So it suffices to prove that the language is normalizing, that is to say every program eventually computes to a value, as opposed to going into an infinite loop. So we want to prove:

If e is an expression with some type A, then e evaluates to some value v.

Naively, you may try to prove this by structural induction on e. (That is, you assume as an induction hypothesis that all subexpressions of e normalize, and prove that e does.) However, this proof attempt gets stuck in the case of a function call like (λx.e₁) e₂. Here we have some function (λx.e₁) : A→B and a function argument e₂ : A. The induction hypothesis just says that (λx.e₁) normalizes, which is trivially true since it's already a value, but what we actually need is an induction hypothesis that says what will happen when we call the function.

In 1967 William Tait had a good idea. We should instead prove:

If e is an expression with some type A, then e is reducible at type A.

"Reducible at type A" is a predicate defined on the structure of A. For base types, it just means normalizable, while for function types we define

e is reducible at type A→B ⇔ for all expressions e₁, if e₁ is reducible at A then (e e₁) is reducible at B.

For example, a function is reducible at type Bool→Bool→Bool if whenever you call it with two normalizing boolean arguments, it returns a boolean value (rather than looping forever).
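
Writing Red_A(e) for "e is reducible at type A" (notation introduced here for compactness), the definition and the Bool→Bool→Bool example unfold as follows, remembering that Bool→Bool→Bool means Bool→(Bool→Bool) and that application associates to the left:

$$
\begin{aligned}
\mathrm{Red}_{\mathsf{Bool}}(e) &\iff e \text{ normalizes to a value} \\
\mathrm{Red}_{A \to B}(e) &\iff \forall e_1.\; \mathrm{Red}_{A}(e_1) \Rightarrow \mathrm{Red}_{B}(e\; e_1) \\
\mathrm{Red}_{\mathsf{Bool} \to \mathsf{Bool} \to \mathsf{Bool}}(f) &\iff \forall e_1.\; \mathrm{Red}_{\mathsf{Bool}}(e_1) \Rightarrow \forall e_2.\; \mathrm{Red}_{\mathsf{Bool}}(e_2) \Rightarrow \mathrm{Red}_{\mathsf{Bool}}(f\; e_1\; e_2)
\end{aligned}
$$

The last line is obtained by applying the arrow clause twice, once for each argument.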

This really is a very good idea, and it can be generalized to prove lots of useful theorems about programming languages beyond just termination. But the way I (and I think most other people, e.g. Benjamin Pierce in Types and Programming Languages) have told the story, it is strictly a technical device: we prove consistency via normalization via reducibility.

❧

The story works less well when you consider programs that aren't normalizing, which is certainly not an uncommon situation: nothing in Java or Haskell forbids you from writing infinite loops. So there has been some interest in how dependent types work if you make termination-checking optional, with some famous projects along these lines being Idris and Dependent Haskell. The idea here is that if you write a program that does terminate it should be possible to interpret it as a proof, but even if a program is not obviously terminating you can still run it.

At this point, with the "consistency through normalization" story in mind, you may have a bad idea: "we can just let the typechecker try to evaluate a given expression at typechecking-time, and if it computes a value, then we can use it as a proof!" Indeed, if you do so then the typechecker will reject all attempts to "prove" Void, so you actually create a consistent logic.

If you think about it a little longer, you notice that it's a useless logic. For example, an implication like ∀n.(n² = 3) is provable, it's inhabited by the value (λn. infinite_loop()). That function is a perfectly fine value, even though it will diverge as soon as you call it. In fact, all ∀-statements and implications are inhabited by function values, and proving universally quantified statements is the entire point of using logical proof at all.
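
The same effect is easy to reproduce with a Java lambda. In this sketch, `BOGUS` (a hypothetical name) type-checks as a function from `Integer` to `Void`, and building it terminates immediately, yet applying it would loop forever. javac accepts it because a lambda whose block body cannot complete normally is compatible with any return type. (Java's `Void` is not a truly empty type, since `null` inhabits it, but the point about function values stands.)

```java
import java.util.function.Function;

class DivergingProof {
    // A perfectly fine function value that diverges as soon as it is called
    static final Function<Integer, Void> BOGUS = n -> {
        while (true) { }
    };

    public static void main(String[] args) {
        // Constructing the value terminates immediately...
        System.out.println(BOGUS != null); // true
        // ...but BOGUS.apply(3) would never return, so it is not called here.
    }
}
```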

❧

So what theorem should you prove, to ensure that the logic makes sense? You want to say both that Void is unprovable, and also that if a type A→B is inhabited, then A really implies B, and so on recursively for any arrow types inside A or B. If you think a bit about this, you want to prove that if e:A, then e is reducible at type A... And in fact, Kleene had already proposed basically this (under the name realizability) as a semantics for Intuitionistic Logic, back in the 1940s.

So in the end, you end up proving the same thing anyway, and none of this discussion really becomes visible in the formal sequence of theorems and lemmas. The false starts need to be passed along in the asides in the text, or in tumblr posts.

8 notes

Text

Tech Advice 1:

If you're a student or a fresher in computer science, sign up for AWS free tier (free for 1 year) and learn about the different services - Lambda, DynamoDB, EC2, RDS, S3, Cognito, IAM.

You can buy any course on Udemy - Node.js, Python, C++, Java, PHP, or any language/framework you're interested in that is supported by AWS.

Be it MEAN/MERN, Python, Java or any stack you use, it's a useful skill.

(I have experience, but I was never given access to this, so I didn't get to learn as much as I wanted to, and it's causing me trouble getting a job)

You can also go for GCP/Azure if that looks good to you.

5 notes

Text

Two paradigms rule programming: imperative and declarative.

Declarative programming emerged to address imperative programming's drawbacks. The imperative paradigm, also known as procedural programming, is the oldest and most widely used approach to programming. It's like giving a computer step-by-step instructions, telling it what to do and how to do it, one command at a time. It's called "imperative" because as programmers we dictate exactly what the computer has to do, in a very specific way.

Declarative programming is the direct opposite of imperative programming in the sense that the programmer doesn't give instructions about how the computer should execute the task, but rather describes what result is needed. Its two main subcategories are functional and reactive programming.

Functional programming is all about functions (procedures with a specific set of functionalities), which can be assigned to variables, passed as arguments, and returned from other functions. Java offers language features and libraries that introduce functional programming concepts such as lambda expressions and streams.

Reactive programming is a paradigm focused on developing asynchronous, non-blocking components. Back in 2013, a team of developers led by Jonas Bonér came together to define a set of core principles in a document known as the Reactive Manifesto. With the Reactive Streams initiative incorporated into the Java platform (as java.util.concurrent.Flow, since Java 9), frameworks such as RxJava, Akka Streams, and Spring WebFlux provide reactive-paradigm implementations for Java.
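
A small Java sketch of the contrast, covering only the functional side of declarative programming (reactive streams are not shown): the same computation written as an explicit loop and as a stream pipeline built from lambdas. The method names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

class ImperativeVsDeclarative {
    // Imperative: spell out *how*, step by step, with a loop and a mutable accumulator
    static int sumOfOddSquaresImperative(List<Integer> xs) {
        int total = 0;
        for (int x : xs) {
            if (x % 2 != 0) {
                total += x * x;
            }
        }
        return total;
    }

    // Declarative (functional style): describe *what* is wanted as a pipeline
    static int sumOfOddSquaresDeclarative(List<Integer> xs) {
        return xs.stream()
                .filter(x -> x % 2 != 0)
                .mapToInt(x -> x * x)
                .sum();
    }

    public static void main(String[] args) {
        List<Integer> xs = Arrays.asList(1, 2, 3, 4, 5);
        System.out.println(sumOfOddSquaresImperative(xs));  // 35
        System.out.println(sumOfOddSquaresDeclarative(xs)); // 35
    }
}
```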

3 notes

Text

Lambdas, Functional Interfaces and Generics in Java

I wrote a little blog post on lambdas, functional interfaces and generics in Java, check it out on my dev.to blog here:

#coding#codeblr#development#developers#ladyargento#code#web development#webdev#dev.to#lambdas#functional programming#generics#functional interfaces#lambda#programming#dev
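
The linked post isn't reproduced here, but as an independent sketch of how the three topics fit together: a generic functional interface (`Transformer<A, B>`, a hypothetical name), a default method that composes two of them, and a generic method that accepts any such lambda through bounded wildcards.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class GenericLambdas {
    // A generic functional interface: one abstract method, type parameters A and B
    @FunctionalInterface
    interface Transformer<A, B> {
        B transform(A input);

        // Default methods don't count against the single-abstract-method rule
        default <C> Transformer<A, C> andThen(Transformer<? super B, ? extends C> next) {
            return a -> next.transform(transform(a));
        }
    }

    // A generic method that accepts any compatible Transformer lambda
    static <T, R> List<R> mapAll(List<? extends T> items, Transformer<? super T, ? extends R> f) {
        List<R> out = new ArrayList<>();
        for (T item : items) {
            out.add(f.transform(item));
        }
        return out;
    }

    public static void main(String[] args) {
        Transformer<String, Integer> length = String::length;
        Transformer<String, String> lengthLabel = length.andThen(n -> "len=" + n);
        System.out.println(mapAll(Arrays.asList("a", "abc"), length)); // [1, 3]
        System.out.println(mapAll(Arrays.asList("hi"), lengthLabel));  // [len=2]
    }
}
```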

8 notes